Source: Java Backend Programming

- History of HTTP

- Overview of QUIC Protocol

- RTT Connection Establishment

- Connection Migration

- Head-of-Line Blocking / Multiplexing

- Congestion Control

- Flow Control

After years of effort, on June 6, 2022, the IETF (Internet Engineering Task Force) officially published the RFC for HTTP/3 (RFC 9114), the third major version of the Hypertext Transfer Protocol (HTTP). The complete RFC exceeds 20,000 words and explains HTTP/3 in great detail.

History of HTTP

- 1991 HTTP/0.9

- 1997 HTTP/1.1

- 2009 Google designed SPDY based on TCP

- 2013 QUIC

- 2015 HTTP/2

- 2018 HTTP/3

HTTP/3 achieves high speed by using UDP while maintaining the stability of QUIC, without sacrificing the security of TLS.

Overview of QUIC Protocol

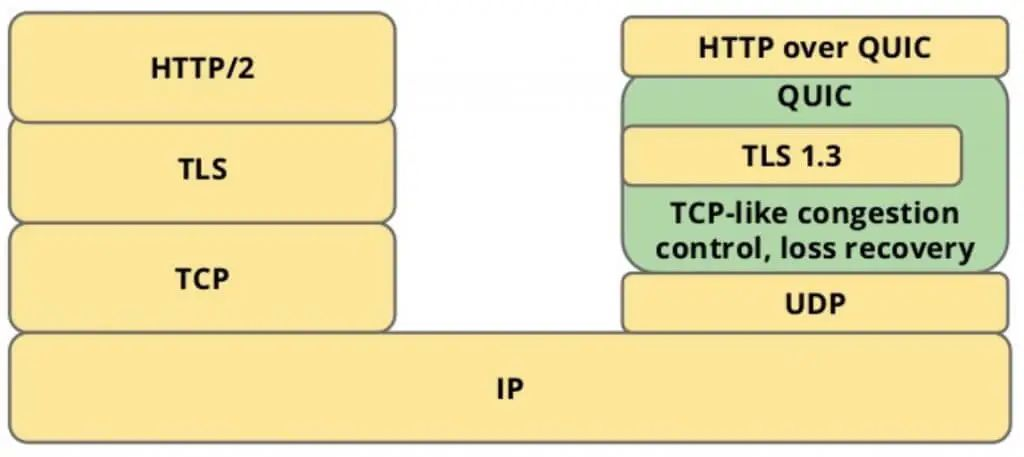

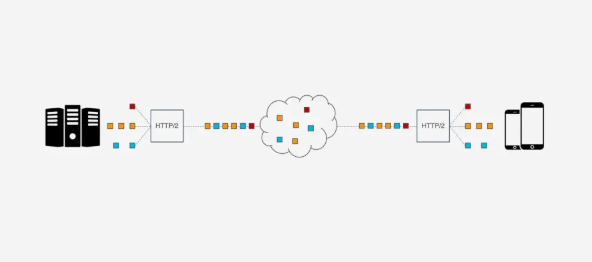

QUIC (Quick UDP Internet Connections) is a protocol based on UDP that leverages the speed and efficiency of UDP while integrating and optimizing the advantages of TCP, TLS, and HTTP/2. The relationship between them can be clearly illustrated in a diagram.

QUIC is designed to replace TCP and SSL/TLS as the transport layer protocol, so application layer protocols such as HTTP, FTP, and IMAP can in theory run over QUIC. HTTP running over QUIC is called HTTP/3, that is, HTTP over QUIC.

Therefore, to understand HTTP/3, one must understand QUIC. Here are several important features of QUIC.

RTT Connection Establishment

RTT: round-trip time, includes only the time for the request to travel back and forth.

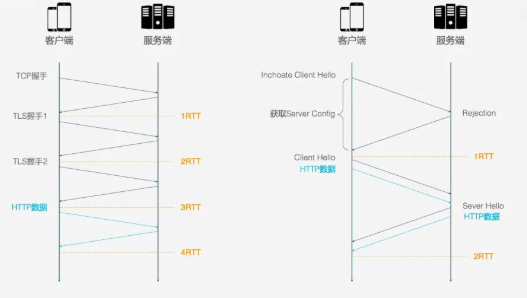

HTTP/2 over TLS 1.2 requires 3 RTTs to establish a connection: 1 RTT for the TCP handshake and 2 RTTs for the TLS handshake. With session reuse, which caches the symmetric key calculated during the first handshake, it still requires 2 RTTs. If TLS is upgraded to 1.3, an HTTP/2 connection requires 2 RTTs, or 1 RTT with session reuse. If HTTP/2 did not need HTTPS, the handshake could be shorter, but in practice almost all browsers require HTTP/2 to run over HTTPS.

HTTP/3 requires only 1 RTT for the first connection, and subsequent connections require 0 RTT, meaning the first packet sent by the client to the server already contains request data. The main connection process is as follows:

- On the first connection, the client sends an Inchoate Client Hello to request a connection.

- The server generates g, p, a, calculates A based on g, p, a, then places g, p, A into the Server Config and sends a Rejection message to the client.

- After the client receives g, p, A, it generates b, calculates B based on g, p, b, and calculates the initial key K based on A, p, b. Once B and K are calculated, the client encrypts the HTTP data using K and sends it to the server along with B.

- After the server receives B, it generates the same key as the client based on a, p, B, and uses this key to decrypt the received HTTP data. For further security (forward secrecy), the server updates its random number a and public key, generates a new key S, and sends the public key to the client via Server Hello, along with the HTTP return data.

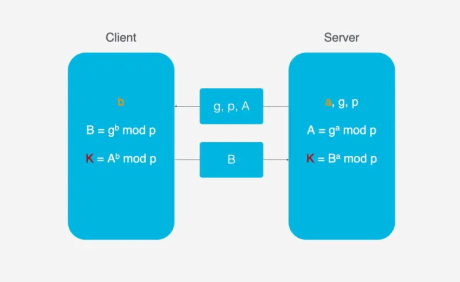

Here, the DH (Diffie-Hellman) key exchange algorithm is used. The core of the DH algorithm is that the server generates three numbers a, g, p, where a is kept private by the server, and g and p are transmitted to the client together with A. The client generates a random number b and sends back B. Through the DH algorithm, both the client and server can then calculate the same key. During this process, a and b are never transmitted over the network, which greatly enhances security. Even if g, p, A, and B are intercepted during transmission, a and b cannot be recovered with current computational power because the numbers involved are very large.
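The exchange described above can be sketched in a few lines of Python. This is a minimal illustration, not QUIC's actual handshake code: the helper names `dh_public` and `dh_shared` are made up for this example, and the tiny values of p, g, a, and b are chosen only so the arithmetic is easy to follow.

```python
def dh_public(g: int, p: int, private: int) -> int:
    """Public value that is sent over the network: g^private mod p."""
    return pow(g, private, p)


def dh_shared(peer_public: int, p: int, private: int) -> int:
    """Shared key computed locally: peer_public^private mod p."""
    return pow(peer_public, private, p)


# Toy parameters (assumption for readability). Real deployments use
# very large primes or elliptic curves.
p, g = 23, 5

a = 6    # server's private number, never transmitted
b = 15   # client's private number, never transmitted

A = dh_public(g, p, a)   # server -> client, carried in the Server Config
B = dh_public(g, p, b)   # client -> server, sent with the encrypted request

K_client = dh_shared(A, p, b)   # client computes K from A, p, b
K_server = dh_shared(B, p, a)   # server computes K from a, p, B

print(A, B, K_client, K_server)
```

Both sides end up with the same key even though only g, p, A, and B ever cross the network.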

Connection Migration

TCP connections are identified by a four-tuple (source IP, source port, destination IP, destination port). When switching networks, at least one of these elements changes, so the existing TCP connection fails. If the application keeps using the original connection, it must wait for that connection to time out before re-establishing it. This is why we sometimes find that after switching to a new network, even if the network conditions are good, the content still takes a long time to load. Ideally, a new TCP connection should be established as soon as a network change is detected, but even then, establishing a new connection still takes hundreds of milliseconds.

QUIC is not affected by the four-tuple. When any of these four elements change, the original connection remains intact. The principle is as follows:

QUIC does not identify a connection by these four elements but by a 64-bit random number known as the Connection ID. As long as the Connection ID does not change, the connection can be maintained even if the IP or port changes.
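The difference can be illustrated with two lookup tables, one keyed by four-tuple and one keyed by Connection ID. This is a simplified sketch: the class names `FourTupleTable` and `ConnectionIdTable`, the addresses, and the dictionary-based connection state are all assumptions made for the example, not part of any real TCP or QUIC stack.

```python
import secrets


class FourTupleTable:
    """TCP-style lookup: the four-tuple itself is the connection key."""

    def __init__(self):
        self.connections = {}

    def open(self, four_tuple, state):
        self.connections[four_tuple] = state

    def lookup(self, four_tuple):
        return self.connections.get(four_tuple)


class ConnectionIdTable:
    """QUIC-style lookup: the key is the Connection ID carried in the packet."""

    def __init__(self):
        self.connections = {}

    def open(self, connection_id, state):
        self.connections[connection_id] = state

    def lookup(self, connection_id, four_tuple):
        state = self.connections.get(connection_id)
        if state is not None:
            state["path"] = four_tuple  # remember the peer's current address
        return state


# Hypothetical addresses: the client moves from Wi-Fi to a cellular network.
wifi = ("192.168.1.10", 50000, "203.0.113.5", 443)
cellular = ("10.20.30.40", 61000, "203.0.113.5", 443)

tcp = FourTupleTable()
tcp.open(wifi, {"session": "tcp-demo"})

quic = ConnectionIdTable()
cid = secrets.randbits(64)  # 64-bit random Connection ID, as in the text
quic.open(cid, {"session": "quic-demo", "path": wifi})

tcp_after = tcp.lookup(cellular)         # no entry for the new four-tuple
quic_after = quic.lookup(cid, cellular)  # same connection, new path
print(tcp_after, quic_after["session"])
```

After the network switch the four-tuple lookup finds nothing, while the Connection ID lookup returns the existing connection state.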

Head-of-Line Blocking / Multiplexing

HTTP/1.1 and HTTP/2 both suffer from head-of-line blocking issues.

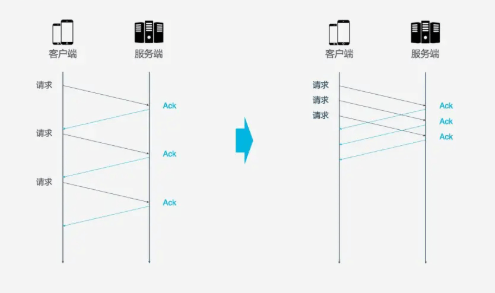

TCP is a connection-oriented protocol, meaning that after sending a request, the sender must receive an ACK message confirming that the peer has accepted the data. If each request had to wait for the previous request's ACK message before being sent, efficiency would be very low. Later, HTTP/1.1 introduced the Pipeline technique, allowing multiple requests to be sent over a single TCP connection without waiting for earlier ones to complete, thus improving transmission efficiency.

In this context, head-of-line blocking occurs. For example, if a TCP connection transmits 10 requests simultaneously, and requests 1, 2, and 3 are received by the client, but the fourth request is lost, then requests 5-10 will be blocked. They must wait for the fourth request to be processed before they can be handled. This wastes bandwidth resources.

Therefore, browsers generally open up to six TCP connections per host, which makes better use of bandwidth, but head-of-line blocking still exists within each connection.

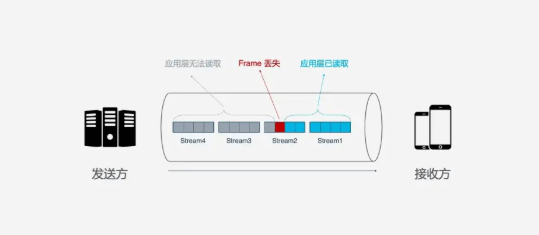

HTTP/2’s multiplexing solves the above head-of-line blocking problem. In HTTP/2, each request is split into multiple Frames and transmitted simultaneously over a single TCP connection, so even if one request is blocked, it does not affect other requests.

However, while HTTP/2 solves blocking at the request level, the underlying TCP protocol still suffers from head-of-line blocking. Each request in HTTP/2 is split into multiple Frames, and the Frames of one request form a Stream; Frames from different Streams are interleaved on the connection. A Stream is a logical transmission unit over TCP, which is how HTTP/2 sends multiple requests simultaneously over a single connection. If Stream 1 is successfully delivered but the third Frame in Stream 2 is lost, TCP, which delivers data in strict order, requires the sender to resend that Frame. Streams 3 and 4 may already have arrived but cannot be processed until then, so the entire connection is blocked.

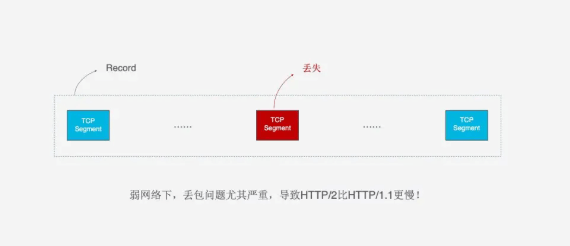

Moreover, since HTTP/2 in practice runs over HTTPS, and HTTPS uses the TLS protocol, it also suffers from head-of-line blocking at the TLS layer. TLS organizes data into Records, encrypting a batch of data together and then splitting the encrypted Record into multiple TCP packets for transmission. A Record is typically up to 16 KB and spans about 12 TCP packets, so if any one of those 12 packets is lost, the entire Record cannot be decrypted until that packet is retransmitted.

Head-of-line blocking can cause HTTP/2 to be slower than HTTP/1.1 in weak network environments where packet loss is more likely.

How does QUIC solve the head-of-line blocking problem? There are two main points:

- QUIC’s transmission unit is a Packet, and the encryption unit is also a Packet. The entire encryption, transmission, and decryption are based on Packets, which avoids the blocking issues of TLS.

- QUIC is based on UDP, and UDP packets have no processing order at the receiving end. Even if a packet is lost in the middle, it does not block the entire connection. Other resources can be processed normally.
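The effect of the two delivery models can be compared with a small simulation. It is a sketch under simplifying assumptions: each packet carries one chunk of exactly one stream, and the function names `tcp_like_deliverable` and `quic_like_deliverable` are invented for this example.

```python
def tcp_like_deliverable(packets, lost):
    """Single ordered byte stream: nothing after the first gap is delivered."""
    delivered = []
    for seq, (stream, chunk) in enumerate(packets):
        if seq in lost:
            break  # everything behind the lost packet must wait
        delivered.append((stream, chunk))
    return delivered


def quic_like_deliverable(packets, lost):
    """Per-stream ordering: a gap stalls only the stream it belongs to."""
    stalled = set()
    delivered = []
    for seq, (stream, chunk) in enumerate(packets):
        if seq in lost:
            stalled.add(stream)
            continue
        if stream not in stalled:
            delivered.append((stream, chunk))
    return delivered


# Packets in send order: (stream id, chunk). Packet 2 (stream 2) is lost.
packets = [
    (1, "s1-a"), (2, "s2-a"), (2, "s2-b"),
    (3, "s3-a"), (4, "s4-a"), (2, "s2-c"),
]
lost = {2}

tcp_view = tcp_like_deliverable(packets, lost)
quic_view = quic_like_deliverable(packets, lost)
print(tcp_view)   # streams 3 and 4 are stuck behind the gap
print(quic_view)  # only stream 2 waits for the retransmission
```

With in-order delivery, the data for streams 3 and 4 is held back even though it arrived; with per-stream ordering, only the later chunk of stream 2 has to wait.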

Congestion Control

The purpose of congestion control is to prevent too much data from flooding the network at once, causing it to exceed its maximum capacity. QUIC’s congestion control is similar to TCP but has been improved upon. Let’s first look at TCP’s congestion control.

- Slow Start: The sender sends one unit of data to the receiver, and upon receiving confirmation, sends 2 units, then 4, 8, and so on, exponentially increasing while probing the network’s congestion level.

- Congestion Avoidance: After exponential growth reaches a certain limit (the slow start threshold), it switches to linear growth.

- Fast Retransmit: The sender sets a timeout timer for each transmission, and data that times out is considered lost and is resent. With fast retransmit, the sender does not wait for the timer: when it receives three duplicate ACKs for the same data, it resends the missing segment immediately.

- Fast Recovery: Building on fast retransmit, when the sender resends data, it also starts a timeout timer. If it receives a confirmation message, it enters the congestion avoidance phase; if it still times out, it returns to the slow start phase.
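How the congestion window evolves through these phases can be traced with a toy model. The sketch below assumes the commonly described Reno-style rules (halve the window when loss is detected by duplicate ACKs, fall back to a window of 1 on a timeout). The function name `simulate_cwnd`, the round-based model, and the parameter values are illustrative assumptions, not TCP's or QUIC's real implementation.

```python
def simulate_cwnd(rounds, ssthresh, loss_rounds=(), timeout_rounds=()):
    """Return the congestion window (in packets) at the start of each round."""
    cwnd = 1
    history = []
    for r in range(rounds):
        history.append(cwnd)
        if r in timeout_rounds:
            # Timeout: remember half the window, restart from slow start.
            ssthresh = max(cwnd // 2, 2)
            cwnd = 1
        elif r in loss_rounds:
            # Loss seen via duplicate ACKs: fast retransmit, then fast
            # recovery continues in congestion avoidance at half the window.
            ssthresh = max(cwnd // 2, 2)
            cwnd = ssthresh
        elif cwnd < ssthresh:
            # Slow start: exponential growth up to the threshold.
            cwnd = min(cwnd * 2, ssthresh)
        else:
            # Congestion avoidance: linear growth.
            cwnd += 1
    return history


fast_recovery = simulate_cwnd(10, ssthresh=8, loss_rounds={6})
after_timeout = simulate_cwnd(10, ssthresh=8, timeout_rounds={6})
print(fast_recovery)
print(after_timeout)
```

The first trace shows exponential growth, then linear growth, then a halving after the loss; the second shows the window collapsing to 1 after a timeout and slow start beginning again.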

QUIC re-implements the Cubic algorithm from TCP for congestion control, and here are the features of QUIC’s improved congestion control:

1. Hot Plugging

In TCP, modifying the congestion control strategy requires system-level operations, while QUIC can modify the congestion control strategy at the application level, dynamically selecting the congestion control algorithm based on different network environments and users.

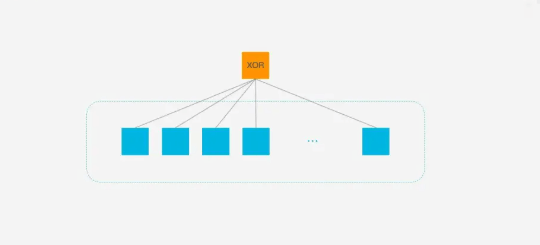

2. Forward Error Correction (FEC)

QUIC uses Forward Error Correction (FEC) technology to increase the protocol’s fault tolerance. A segment of data is split into 10 packets, and the packets are XORed together. The result is transmitted as an FEC packet along with the data packets. If one data packet is lost during transmission, its contents can be reconstructed from the remaining 9 packets and the FEC packet, which greatly increases the protocol’s fault tolerance.

This is a solution that aligns with current network transmission technology. Currently, bandwidth is no longer the bottleneck for network transmission; round-trip time is. Therefore, new network transmission protocols can appropriately increase data redundancy to reduce retransmission operations.
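The XOR-based recovery described above can be demonstrated directly. This is a minimal sketch that assumes all packets in a group have the same length; the helper names `make_fec_packet` and `recover_missing` and the sample payloads are invented for the example.

```python
from functools import reduce


def xor_bytes(x: bytes, y: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(i ^ j for i, j in zip(x, y))


def make_fec_packet(packets):
    """XOR all data packets of a group together into one FEC packet."""
    return reduce(xor_bytes, packets)


def recover_missing(received, fec_packet):
    """XOR the FEC packet with every surviving packet to rebuild the lost one."""
    return reduce(xor_bytes, received, fec_packet)


# Ten equal-length data packets (hypothetical payloads).
packets = [f"packet-{i:02d}".encode() for i in range(10)]
fec = make_fec_packet(packets)

lost_index = 3
survivors = packets[:lost_index] + packets[lost_index + 1:]
recovered = recover_missing(survivors, fec)
print(recovered)
```

This works because XORing a value with itself cancels out, so only the missing packet remains. A single FEC packet of this kind can repair at most one lost packet per group.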

3. Monotonically Increasing Packet Number

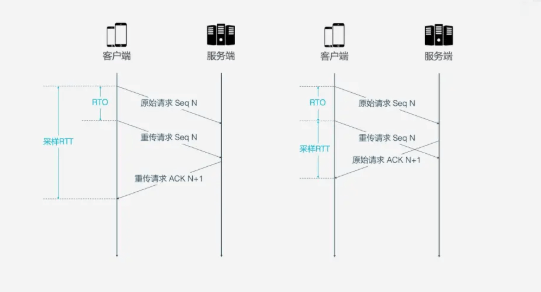

To ensure reliability, TCP uses the Sequence Number and ACK to confirm whether messages arrive in order, but this design has flaws.

When a timeout occurs, the client initiates a retransmission. Later, it receives an ACK confirmation message, but since the ACK message for the original request and the retransmission request is the same, the client cannot determine whether this ACK corresponds to the original request or the retransmission request. This can lead to ambiguity.

- RTT: Round Trip Time

- RTO: Retransmission Timeout

If the client assumes the ACK is for the retransmission but it actually belongs to the original request (the situation on the right), the sampled RTT is too small; in the opposite case, the sampled RTT is too large.

QUIC resolves the ambiguity issue mentioned above. Unlike the Sequence Number, the Packet Number is strictly monotonically increasing. If Packet N is lost, the identifier for the retransmission will not be N, but a larger number, such as N+M. This way, when the sender receives the confirmation message, it can easily determine whether the ACK corresponds to the original request or the retransmission request.
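A small sketch shows why a fresh packet number per transmission removes the ambiguity. The `Sender` class and the millisecond timestamps are assumptions made for illustration only.

```python
class Sender:
    """Tracks send times per packet number; numbers are never reused."""

    def __init__(self):
        self.next_packet_number = 0
        self.sent_at_ms = {}  # packet number -> send time in milliseconds

    def send(self, now_ms):
        packet_number = self.next_packet_number
        self.next_packet_number += 1
        self.sent_at_ms[packet_number] = now_ms
        return packet_number

    def on_ack(self, packet_number, now_ms):
        """The ACK names one packet number, so the RTT sample is unambiguous."""
        return now_ms - self.sent_at_ms.pop(packet_number)


sender = Sender()
original = sender.send(now_ms=0)        # first transmission, packet number N
retransmit = sender.send(now_ms=1000)   # same data, sent again as N+1

# An ACK for the retransmission arrives 250 ms after it was sent.
rtt_ms = sender.on_ack(retransmit, now_ms=1250)
print(original, retransmit, rtt_ms)
```

Because the ACK refers to the retransmission's own number, the sample is 250 ms rather than the 1250 ms that would result from matching it against the original send time.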

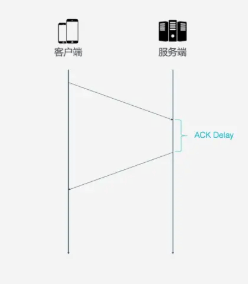

4. ACK Delay

TCP does not consider the delay between the receiver receiving data and sending the confirmation message to the sender when calculating RTT, as shown in the following diagram. This delay is known as ACK Delay. QUIC takes this delay into account, making RTT calculations more accurate.
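The adjustment amounts to one subtraction. The function below is a simplified sketch; the name `rtt_sample` and the example timings are assumptions, and a real implementation applies further checks before using the adjusted value.

```python
def rtt_sample(sent_at_ms, ack_received_at_ms, ack_delay_ms=0):
    """RTT sample with the receiver's reported ACK delay subtracted."""
    return (ack_received_at_ms - sent_at_ms) - ack_delay_ms


# The receiver held the ACK for 25 ms before sending it (hypothetical values).
without_delay = rtt_sample(sent_at_ms=0, ack_received_at_ms=130)
with_delay = rtt_sample(sent_at_ms=0, ack_received_at_ms=130, ack_delay_ms=25)
print(without_delay, with_delay)
```

Ignoring the ACK delay overestimates the network round trip by the time the receiver spent holding the acknowledgment.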

5. More ACK Blocks

Generally, the receiver sends an ACK after receiving the sender’s message to indicate that the data has been received. However, sending an ACK for every single data packet is costly, so typically multiple data packets are acknowledged at once. TCP SACK provides a maximum of 3 ACK blocks. In some scenarios, such as downloads, the server only needs to return data, yet under TCP’s design an ACK must still be returned for every three data packets received. QUIC can carry up to 256 ACK blocks, which reduces retransmissions and improves network efficiency in networks with high packet loss rates.

Flow Control

TCP performs flow control on each TCP connection, meaning it prevents the sender from sending data too quickly, allowing the receiver to keep up; otherwise, data overflow and loss may occur. TCP’s flow control is mainly implemented through a sliding window. Congestion control primarily controls the sender’s sending strategy but does not consider the receiver’s receiving capacity, while flow control addresses this limitation.

QUIC only needs to establish one connection, over which multiple Streams can be transmitted simultaneously. This is like having a road with multiple warehouses, where many vehicles transport goods. QUIC’s flow control has two levels: Connection Level and Stream Level.

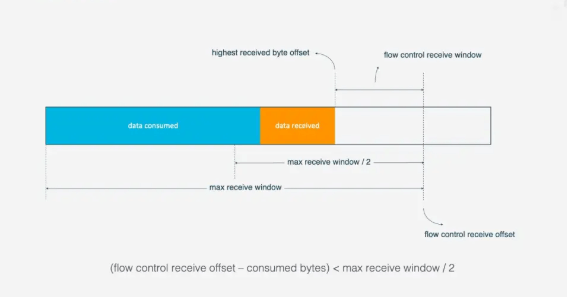

For flow control at the Stream level: When a Stream has not yet transmitted data, the receive window (flow control receive window) is the maximum receive window. As the receiver receives data, the receive window gradually shrinks. Among the received data, some have been processed, while others have not yet been processed. In the following diagram, the blue blocks represent processed data, while the yellow blocks represent unprocessed data, which causes the Stream’s receive window to shrink.

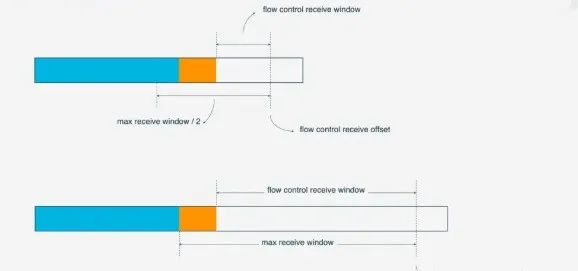

As data continues to be processed, the receiver can handle more data. When the condition (flow control receive offset - consumed bytes) < (max receive window / 2) is met, the receiver will send a WINDOW_UPDATE frame to inform the sender that it can send more data. At this point, the flow control receive offset will shift, and the receive window will increase, allowing the sender to send more data to the receiver.
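The window update rule can be sketched as a small class. The class name `StreamReceiveWindow`, its method names, and the choice to move the offset to consumed bytes plus the maximum window are assumptions for illustration; the sketch also assumes the application only consumes bytes it has actually received.

```python
class StreamReceiveWindow:
    """Receiver-side flow control state for one stream (illustrative)."""

    def __init__(self, max_receive_window):
        self.max_receive_window = max_receive_window
        self.receive_offset = max_receive_window  # flow control receive offset
        self.received = 0   # bytes received so far
        self.consumed = 0   # bytes already processed by the application

    @property
    def available(self):
        """How many more bytes the sender may currently send."""
        return self.receive_offset - self.received

    def on_data(self, n):
        if self.received + n > self.receive_offset:
            raise ValueError("sender exceeded the flow control limit")
        self.received += n

    def on_consumed(self, n):
        """Return the new offset if a WINDOW_UPDATE should be sent, else None."""
        self.consumed += n
        if self.receive_offset - self.consumed < self.max_receive_window / 2:
            # Assumed update rule: reopen a full window past the consumed data.
            self.receive_offset = self.consumed + self.max_receive_window
            return self.receive_offset
        return None


window = StreamReceiveWindow(max_receive_window=100)
window.on_data(60)                    # window shrinks from 100 to 40
first = window.on_consumed(40)        # 100 - 40 = 60, not below 50: no update
second = window.on_consumed(20)       # 100 - 60 = 40, below 50: send update
print(first, second, window.available)
```

In this run, the update is triggered only once enough data has been consumed for the remaining offset to drop below half of the maximum window.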

Stream-level flow control has limited effectiveness in preventing the receiver from receiving too much data; it is more necessary to rely on Connection-level flow control. Understanding Stream flow control makes it easy to understand Connection flow control. In a Stream,

Receive Window = Maximum Receive Window - Data Received

For Connection-level flow control:

Receive Window = Stream1 Receive Window + Stream2 Receive Window + ... + StreamN Receive Window
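The two formulas translate directly into code. The function names and the sample numbers below are hypothetical and only serve to work through the arithmetic.

```python
def stream_receive_window(max_receive_window, data_received):
    """Stream level: maximum receive window minus data received."""
    return max_receive_window - data_received


def connection_receive_window(streams):
    """Connection level: sum of the receive windows of all streams."""
    return sum(stream_receive_window(m, r) for m, r in streams)


# (maximum receive window, data received) for three hypothetical streams.
streams = [(100, 30), (100, 100), (50, 10)]

per_stream = [stream_receive_window(m, r) for m, r in streams]
total = connection_receive_window(streams)
print(per_stream, total)
```

Here the second stream has used up its window entirely, while the connection as a whole can still accept data on the other two streams.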