The following article is a summary of common knowledge in Shell programming, covering syntax and techniques.

1. Basic Concepts of Shell

1. Introduction

The Shell is a command-line interpreter: the interface through which users interact with the kernel. It reads the commands a user enters and invokes the corresponding system functions to execute them. The Shell is loaded into memory when a user logs in and runs as an ordinary program, separate from the kernel. Shell scripts are interpreted, so they need no compilation, and the syntax is simple. The Shell provides variables, arrays, branching, loops, and functions. Combined with the small utilities that ship with Linux, these features let you accomplish specific tasks efficiently.

Common Shells: `bash` (mainstream), `sh`, `zsh`, `ksh`

The first line of a script often declares the interpreter, such as: #!/bin/bash.

2. Variables

A variable does not need a type declaration before use, but you can use `declare` to give a variable attributes, such as integer, read-only, or array.
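The following is a small sketch of `declare` in bash; the variable names are illustrative. The integer attribute makes assignments evaluate arithmetically. `-r` makes a variable read-only, and `-a` declares an indexed array.

```bash
#!/bin/bash
count=10                        # plain assignment: stored as a string

declare -i total                # -i: integer attribute
total="count + 5"               # the right-hand side is evaluated arithmetically -> 15

declare -r site_name="example"  # -r: read-only; a later assignment is an error

declare -a hosts=("web01" "db01")   # -a: indexed array

echo "total=$total hosts=${#hosts[@]} site=$site_name"
```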

User Variables:

name="Hell" # Definition and assignment (no spaces around the equals sign)

read -s -p "please input " name # -s hides the typed characters (silent mode; -t would set a timeout), -p displays a prompt, and the input is stored in name

echo "Hello, $name" # Use the variable. Double quotes "" allow variable substitution ($var) and escape characters; single quotes '' output text as is, without parsing variables; text inside backticks is run as a command and replaced by its output, like $(...)
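This sketch contrasts the quoting rules above. The variable name and the use of `tr` for upper-casing are only for illustration. The last two lines show that `$(...)` and backticks produce the same substitution.

```bash
#!/bin/bash
name="Shell"

double="Hello, $name"   # double quotes: $name is expanded -> Hello, Shell
single='Hello, $name'   # single quotes: kept literally   -> Hello, $name
upper=$(echo "$name" | tr '[:lower:]' '[:upper:]')   # $(...): replaced by the command's output -> SHELL
legacy=`echo "$name" | tr '[:lower:]' '[:upper:]'`   # backticks: older form of $(...)

printf '%s\n' "$double" "$single" "$upper" "$legacy"
```

`$(...)` is usually preferred because it nests without extra escaping.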

Environment Variables:

export PATH="$PATH:/my/path" # Set environment variable

echo $HOME # View system variable

printenv # View all environment variables

IFS # Internal Field Separator used by the Shell for word splitting; the default is space, tab, and newline
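A minimal sketch of changing IFS for a single command, assuming bash (for `read -a` and the `<<<` here-string). The sample line imitates the `/etc/passwd` format.

```bash
#!/bin/bash
line="root:x:0:0:root:/root:/bin/bash"   # a sample /etc/passwd-style line

# IFS is set only for this read command, so the line is split on ":".
# -r keeps backslashes literal; -a stores the pieces in the indexed array fields.
IFS=':' read -r -a fields <<< "$line"

echo "user=${fields[0]} home=${fields[5]} shell=${fields[6]}"
```

Prefixing the assignment to `read` leaves the global IFS untouched. This avoids surprising word splitting later in the script.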

Common System Variables:

$0: The name of the current script

$n: The nth parameter of the current script, n=1,2,3,...; from the tenth parameter on, use braces, as in ${10}

$*: All parameters of the current script (excluding the program itself)

$#: The number of parameters of the current script (excluding the program itself)

$@: A list of all parameters passed to the program

$?: The exit status of the last executed command; 0 means it succeeded

$$: The PID of the program itself

$!: The process ID (PID) of the last background process

Note: $* and $@ both represent all the parameters passed to a function or script. Unquoted, both expand to $1 $2 ... $n, and the result is then subject to word splitting. Quoted, "$*" joins all parameters into a single word, "$1 $2 ... $n", separated by the first character of IFS (a space by default). "$@" instead keeps each parameter as a separate word: "$1" "$2" ... "$n".
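The difference shows up when an argument contains a space. In this sketch, the helper names `count_args` and `demo` are hypothetical. It counts how many words each form produces for the two arguments `"a b"` and `"c"`.

```bash
#!/bin/bash
# count_args prints how many separate arguments it received.
count_args() { echo "$#"; }

# demo reports the word count produced by each form of "all parameters".
demo() {
    # unquoted $*  -> split into a, b, c     (3 words)
    # quoted "$*"  -> one word "a b c"       (1 word)
    # quoted "$@"  -> "a b" and "c" intact   (2 words)
    echo "$(count_args $*) $(count_args "$*") $(count_args "$@")"
}

demo "a b" "c"   # prints: 3 1 2
```

In practice, `"$@"` is almost always the form you want when forwarding arguments.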

3. Arrays

Arrays: Only one-dimensional arrays are supported. The size does not need to be declared, indices start at 0, and numbers and strings can be mixed in one array. Elements can be added, deleted, and modified directly.

arr=("apple" "banana" "cherry" 3 4 5)

echo ${arr[1]} # Output banana

echo ${arr[@]} or echo ${arr[*]} # Output all elements; unquoted, both have the same effect

echo ${#arr[@]} # Print the array length: 6

echo ${#arr[1]} # Print the length of the second element: 6 (banana)

array_new3=("${array1[@]}" "${array2[@]}") # Concatenate array1 and array2 (quoting keeps elements that contain spaces intact)

unset arr # Delete the entire array

unset 'arr[0]' # Delete the first array element (quoted so the shell does not treat [0] as a glob)

echo ${arr[@]:1} # Print all values starting from the second value

echo ${arr[@]:1:2} # Print the second and third values

Application example: IP addresses can be defined through arrays.
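A sketch of that idea, assuming bash and a hypothetical server list. The addresses come from 192.0.2.0/24, a range reserved for documentation. The loop only echoes each address; a real script might ping or ssh to it instead.

```bash
#!/bin/bash
# Hypothetical server list.
servers=("192.0.2.10" "192.0.2.11" "192.0.2.12")

middle=("${servers[@]:1:2}")   # slice: the second and third elements
servers+=("192.0.2.13")        # append an element
unset 'servers[1]'             # delete the second element; remaining indices are not renumbered

echo "count=${#servers[@]} indices=${!servers[*]}"   # count=3 indices=0 2 3
for ip in "${servers[@]}"; do  # quoted "${servers[@]}" keeps each element as one word
    echo "would check $ip"
done
```

After `unset`, the array becomes sparse. Iterating with `"${servers[@]}"` is therefore safer than looping over `0..count-1`.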

4. Introduction to vi/vim Editor

vi is a command-line text editor developed by Bill Joy in 1976, and vim is an enhanced version of it. On many Linux distributions the vi command is provided by vim, so the two behave largely the same. vi has three commonly used modes:

Normal Mode: The default mode for navigation, copying, deleting, etc., cannot directly input text.

Insert Mode: The mode for inputting and editing text, entered through commands like i, a, etc.

Command-Line Mode: Entered through :, used for saving, exiting, searching, replacing, and other advanced operations.

/word # Search for the word string downwards from the current cursor position

?word # Search for the word string upwards from the current cursor position

:1,$s/old/new/g # Replace old with new from the first line to the last line

:n1,n2s/old/new/g # Replace old with new on lines n1 through n2

:s/old/new/g # Replace old with new on the current line only (move the cursor to that line first); without /g, only the first occurrence on the line is replaced

yy copies the line the cursor is on; 3yy copies three lines starting from the cursor line. p pastes below the cursor line, and P pastes above it.

:wq saves and exits (:wq! forces the write); :q! quits without saving; dd deletes the line the cursor is on; x deletes the character at the cursor

5. Wildcards and Regular Expressions

Wildcards and regular expressions are two different concepts. Wildcards (globs) are expanded by the shell to match file names. Regular expressions are used by tools such as grep, sed, and awk to search and replace text inside files.

Wildcards:

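"*" # Matches zero or more characters

"?" # Matches exactly one character

"[]" # Matches one character from a set, e.g. [0-9]; [^abc] or [!abc] negates the set

The following characters are also special to the shell, although they are not wildcards:

"#" # Comment

"|" # Pipe

"&" # Run the command in the background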
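A sketch of the three wildcard forms, run against sample files in a throwaway directory created with `mktemp -d`; the file names are made up. The order in which globs list files depends on the locale, so the script only prints the count for `*.log`.

```bash
#!/bin/bash
dir=$(mktemp -d)                                   # throwaway directory for the demo
touch "$dir"/{app.log,app1.log,app12.log,notes.txt}
cd "$dir" || exit 1

all_logs=(*.log)      # *      : zero or more characters -> the three .log files
one_char=(app?.log)   # ?      : exactly one character   -> app1.log only
not_a=([!a]*)         # [!...] : first character is not "a" -> notes.txt

echo "${#all_logs[@]} | ${one_char[*]} | ${not_a[*]}"   # 3 | app1.log | notes.txt
cd - >/dev/null || exit 1
rm -rf "$dir"
```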

Regular Expressions:

"*" # Matches the preceding character zero or more times: a*b matches b, ab, aab, aaab...

"." # Matches any single character: ...ab matches any three characters followed by ab

"^" # Matches the beginning of a line: ^ab matches lines starting with ab

"$" # Matches the end of a line: ab$ matches lines ending with ab; ^$ matches empty lines; ^.$ matches lines containing exactly one character

"[]" # Matches one character from a set: [0-9] matches any one digit; in "[^a-z]", ^ no longer anchors the start of the line but negates the set

"?" # Matches the preceding character 0 or 1 time: ab?c matches ac and abc (extended regex, e.g. grep -E)

"+" # Matches the preceding character one or more times: ab+c matches abc, abbc... (extended regex)

\<\> # Word boundaries: \<wor\> matches the whole word wor, but not the wor inside word

\{\} # Interval: sets how many times the preceding character repeats, e.g. a\{2,3\} matches aa or aaa (with grep -E, write a{2,3})
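The sketch below feeds a few sample lines to grep to check the patterns above. It assumes GNU grep, where `\<`/`\>` are supported. `?` and `+` are used with `-E` (extended regex). The patterns are anchored with `^...$` so each must match a whole line.

```bash
#!/bin/bash
# sample prints the test lines used below.
sample() {
cat <<'EOF'
ab
aab
abc
ac
word
wor
EOF
}

sample | grep '^a*b$'       # *       : zero or more a, then b -> ab, aab
sample | grep -E '^a+b$'    # + (ERE) : one or more a, then b  -> ab, aab
sample | grep -E '^ab?c$'   # ? (ERE) : optional b             -> abc, ac
sample | grep '\<wor\>'     # word boundaries                  -> wor (not word)
sample | grep '^a\{2\}b$'   # interval: exactly two a's        -> aab
```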

2. Several Common Commands

1. tar is an archiving tool. It packs files into a single archive and, with the appropriate options, compresses, lists, appends to, and extracts archives. Common usage:

tar -cvf test.tar test # Pack the test directory or file into test.tar (no compression)

tar -Af target_file.tar source_file.tar # Append the archive source_file.tar to target_file.tar; both must be uncompressed, so decompress compressed archives first

tar -tf test.tar.gz # List the contents of test.tar.gz

tar -rf test.tar file1 # Append file1 to test.tar (appending only works on uncompressed archives)

tar -xvf test.tar.gz # Extract test.tar.gz (GNU tar detects the compression automatically)

tar -zcvf test.tar.gz test # Pack and compress the test directory with gzip

tar -jcvf test.tar.bz2 test # Pack and compress the test directory with bzip2

tar -zcvf test.tar.gz --exclude=test1.tar.gz --exclude=file2 test # Pack and compress test with gzip, excluding test1.tar.gz and file2

tar -zcvf test.tar.gz -X file3 test # Pack and compress test with gzip, skipping every file name listed in file3

tar -g snapshot_file performs incremental backups in GNU format (--listed-incremental): the snapshot file records what was archived, so the next run stores only files that have changed
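The following end-to-end sketch creates, lists, and extracts a gzip archive in a throwaway directory; the file names are made up. It assumes a tar that supports `-z`, `--exclude`, and `-C`, as both GNU tar and bsdtar do.

```bash
#!/bin/bash
set -e
work=$(mktemp -d)                     # throwaway working directory
mkdir -p "$work/test"
echo "hello" > "$work/test/a.txt"
echo "skip me" > "$work/test/b.log"
cd "$work"

tar -zcf test.tar.gz --exclude='*.log' test   # create a gzip archive, leaving out *.log
tar -tzf test.tar.gz                          # list the contents without extracting
mkdir restore
tar -xzf test.tar.gz -C restore               # extract into restore/ instead of the current directory
cat restore/test/a.txt                        # hello
```

Listing with `-t` before extracting is a cheap way to check what an archive will write.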

2. find: searches for files and directories and can act on the files it finds.

Syntax: the full syntax is shown by "find --help" (GNU find) or man find. The common form is: find [path...] [search conditions] [actions]

Common parameters:

-name filename # Search for files by name

-type b/d/c/p/l/f # Search for block devices, directories, character devices, pipes, symbolic links, ordinary files

-size n[c] # Search by size: n blocks of 512 bytes, or n bytes with the c suffix (GNU find also accepts k, M, G); +n means larger than n, -n smaller

-perm mode # Search by permission bits

-user username # Search by file owner

-group groupname # Search by file group

-type f -empty # Empty regular files

-exec chmod 755 {} \; # Run a command on each file found (here, change its permissions)

-mtime; -atime; -ctime; -mmin; -amin; -cmin # Modification time, access time, and status-change time (not creation time); the first three count in days, the last three in minutes

Common examples:

a) find /home /var -name "*.log" # Search for files ending in .log under /home and /var (-iname matches case-insensitively)

b) find /home -user deng -type f -size +50M -ok rm -v {} \; # Find regular files under /home owned by user deng and larger than 50 MB, and delete each one after confirmation (-ok prompts before every command)

c) find /home/oracle/testshell -name "*.log" -mtime +7 -exec gzip {} \; # Compress each .log file modified more than 7 days ago with gzip

d) find /boot \( -path /boot/grub2 -o -path /boot/efi \) -prune -o -print # -prune skips the listed directories; the parentheses are needed because -a binds more tightly than -o

e) find /home/oracle -name "[A-Z]*" -type f -mtime +7 -size +1M -exec mv {} /tmp/ \; # Find files whose names start with an uppercase letter, modified more than 7 days ago and larger than 1 MB, and move them to /tmp. Using -ok instead of -exec prompts before each operation, which is safer

f) find ./testshell/ -type f -newer /file1 # Find all files modified more recently than /file1

g) find testshell/ -mtime -1 -name "*_backup" -print0 | xargs -0 rm -v # Find files modified less than 1 day ago whose names end in _backup, then delete them. -exec ... {} \; starts one process per matched file, which is slow when there are many files; xargs reads the file list from standard input and passes it to the command in batches that stay under the system's argument-length limit. -print0 and xargs -0 separate names with null bytes, so names containing spaces are handled safely
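A sketch that exercises pruning, `-mtime`, `-exec`, and `-print0 | xargs -0` on a throwaway tree; the directory and file names are made up. `touch -t` backdates one file so that `-mtime +7` has something to match.

```bash
#!/bin/bash
root=$(mktemp -d)                           # throwaway tree for the demo
mkdir -p "$root/logs" "$root/skip"
touch "$root/logs/app.log" "$root/logs/notes.txt" "$root/skip/old.log"
touch -t 202001010000 "$root/logs/app.log"  # pretend app.log was last modified in 2020

# Preview first: .log files, pruning the skip directory.
found=$(find "$root" -path "$root/skip" -prune -o -type f -name '*.log' -print)
echo "$found"

# Compress .log files under logs/ modified more than 7 days ago, one gzip per file.
find "$root/logs" -type f -name '*.log' -mtime +7 -exec gzip {} \;

# Batch form: null-separated names stay safe even if they contain spaces.
find "$root" -type f -name '*.txt' -print0 | xargs -0 ls -l
```

The preview step matches the advice below: check the list before running a destructive action.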

The flexibility of find is reflected in:

Condition combinations: Combine complex conditions through logical operators (-a, -o, !) and parentheses.

Action expansion: Combine -exec, xargs, or pipe symbols or regular expressions to interact with other commands.

Safe operations: Use -ok instead of -exec for interactive confirmation.

It is recommended to preview results with -print or -ls before operations to avoid accidental deletion/modification of files!

3. grep: global regular expression print

A powerful text search tool. The pattern given to grep can be a string, a variable, or a regular expression. If the pattern contains spaces, enclose it in double quotes (variables inside are expanded) or single quotes (taken literally). Otherwise, the words after the first space are treated as file names to search.

Format: grep [options] pattern [files...]

-v # Display all lines that do not contain the pattern

-h# When querying multiple files, do not display file names; by default, multiple files will display file names

-l# Only list file names; by default, display line content

-s# Do not prompt for non-existent or non-matching error messages

-r# Indicates recursive search in subdirectories; by default, only searches the current directory

-w # Match whole words only: word matches "word" but not "password" (to match a literal string rather than a regex, use -F)

-x # Only print lines where the entire line matches

-q # Quiet: print nothing and report the result only through the exit status, 0 if a match was found

grep -c "word" file1 file2 # Print, for each file, the number of lines containing word

grep -n "word" file1 # Print matching lines with their line numbers

grep -i "word" file1 # Case-insensitive: match all lines containing word in any case

grep "word[12]" file1 # Match lines containing word followed by 1 or 2

grep "^[^word]" file1 # Display lines whose first character is not w, o, r, or d; the first ^ anchors the match to the start of the line, while the ^ inside the brackets negates the set
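The options above are easiest to compare on a small fixture. This sketch writes two made-up files into a temporary directory and runs each option against them.

```bash
#!/bin/bash
dir=$(mktemp -d)
printf 'word one\npassword\nWORD two\n' > "$dir/file1"
printf 'no match\nword1 here\n' > "$dir/file2"

grep -c 'word' "$dir/file1" "$dir/file2"  # per-file counts: .../file1:2 and .../file2:1
grep -w 'word' "$dir/file1"               # whole word only: word one
grep -iw 'word' "$dir/file1"              # whole word, any case: word one, WORD two
grep -v 'word' "$dir/file2"               # lines without word: no match
grep -l 'word1' "$dir"/file*              # only the name of the matching file: .../file2
if grep -q 'password' "$dir/file1"; then  # -q prints nothing; use the exit status
    echo "password found"
fi
```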

4. sed Stream Editor

sed is a non-interactive text editor that can edit text files and standard input, where standard input can be keyboard input, file redirection, strings, variables, or even text from pipes. Common usage is as follows:

sed -n '/word/p' file1 # Print lines matching word (case-sensitive)

sed -n '2,4p' file1 # Print only lines 2 to 4; without -n, sed would also print every line by default

sed -n '1p;$p' file1 # Print the first and last lines

sed 's/old/new/g' file1 # Replace every old with new and print the result

sed -i 's/old/new/g' file # Replace every old with new; note that -i modifies the file in place

sed '1,4d' file1 # Delete lines 1 to 4

sed '/word/,$d' file1 # Delete from the first line matching word through the last line

for i in $(seq 1 3); do sed -i '$d' file1; done # Delete the last line three times, i.e. remove the last 3 lines
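This sketch runs the commands above against a five-line temporary file; the sample content is made up. It also shows the difference between `s///` with and without `g`. For in-place editing it uses `-i.bak`, with the suffix attached, because that form is accepted by both GNU and BSD sed and keeps a backup copy.

```bash
#!/bin/bash
file=$(mktemp)
printf 'line1 old\nline2\nline3 old old\nline4\nline5\n' > "$file"

sed -n '2,4p' "$file"      # line2, line3 old old, line4
sed -n '1p;$p' "$file"     # line1 old, line5
sed 's/old/new/' "$file"   # replaces only the first "old" on each line
sed 's/old/new/g' "$file"  # replaces every "old"
sed '/line3/,$d' "$file"   # deletes from the first line matching line3 to the end

# Edit in place but keep the original as "$file.bak".
sed -i.bak 's/old/new/g' "$file"
cat "$file"
```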

sed usage is very flexible and will be discussed further in subsequent chapters.

5. awk (Aho, Weinberger, Kernighan)

awk is a text processing tool and a programming language with C-like syntax. It reads its input line by line. For each line it checks the patterns in the program, runs the associated action when a pattern matches, and otherwise moves on to the next line. The special patterns BEGIN and END run before the first line is read and after the last line has been processed, respectively.

Format: awk 'pattern {action}' input_file

Pattern: Optional, determines when to execute the action (such as regular expressions, conditional judgments)

Action: The operation executed when the pattern matches (such as printing, calculating, etc.)

NF: The total number of fields in the current line.

NR: The current processing line number.

$0: The entire line content.

FS: Input field separator (default: runs of spaces and tabs; can be changed with -F)

OFS: Output field separator (default: a single space)

BEGIN and END blocks

BEGIN{}: Executes before processing the first line, i.e., before reading the input line (commonly used for initialization).

END{}: Executes after processing all lines (such as summarizing results).

gsub(r, s) # Replace every match of the regular expression r with s in $0

length(s)# Length of s

&& # Logical and

|| # Logical or

Simple usage examples:

awk '{print $0}' file.txt # Print all content of the file (similar to cat)

awk '{print $1, $3}' file.txt # Print the 1st and 3rd columns

awk 'BEGIN{OFS=":"} {print $1, $3}' file.txt # Custom output format (OFS sets the output separator)

awk '$3 > 100 {print $0}' data.txt # Print lines where the 3rd column is greater than 100

awk 'tolower($0) ~ /error/ {print}' log.txt # Print lines containing "error" in any case (awk has no /i flag; gawk also offers IGNORECASE=1)

awk '$2 == "admin" && $4 > 50' access.log # Print lines where the 2nd column is "admin" and the 4th column is greater than 50
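A sketch that runs several of these one-liners on a small made-up data file with four space-separated columns (name, role, two numbers). It also adds an END-block accumulator that uses NR.

```bash
#!/bin/bash
data=$(mktemp)
cat > "$data" <<'EOF'
alice admin 120 60
bob user 80 70
carol admin 200 40
EOF

awk 'BEGIN{OFS=":"} {print $1, $3}' "$data"        # alice:120, bob:80, carol:200
awk '$3 > 100 {print $1}' "$data"                  # alice, carol
awk '$2 == "admin" && $4 > 50 {print $1}' "$data"  # alice
awk '{sum += $3} END {print "rows=" NR, "sum=" sum}' "$data"   # rows=3 sum=400
awk '{print NR ": " NF " fields"}' "$data"         # 1: 4 fields, 2: 4 fields, 3: 4 fields

# Case-insensitive match without gawk-only features: lowercase the line first.
printf 'ERROR disk\ninfo ok\nError net\n' | awk 'tolower($0) ~ /error/'
```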

awk has many built-in variables and functions. It supports arithmetic, comparison, and conditional operators, and provides complete flow-control statements. Its usage is very flexible and will be discussed in detail in subsequent chapters.

3. Flow Control

Before learning flow control, first understand several important points

Exit status: every command returns an exit status when it finishes, and the status of the most recently executed command is stored in the built-in variable "$?".

0 indicates success;

1-125 indicate failure; 126 means the command was found but could not be executed (for example, it lacks execute permission);

127 means the command was not found; values above 128 mean the command was terminated by a signal (128 + the signal number)
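These codes can be observed directly. In this sketch, `status_of` is a hypothetical helper and `no_such_command_xyz` is a deliberately nonexistent command. The 126 case relies on `mktemp` creating a file without execute permission.

```bash
#!/bin/bash
# status_of runs a command quietly and prints its exit status.
status_of() {
    "$@" >/dev/null 2>&1
    echo $?
}

script=$(mktemp)   # new temporary file, not executable

echo "true:           $(status_of true)"                       # 0
echo "false:          $(status_of false)"                      # 1
echo "not found:      $(status_of no_such_command_xyz)"        # 127
echo "not executable: $(status_of "$script")"                  # 126
echo "killed by TERM: $(status_of bash -c 'kill -TERM $$')"    # 143 = 128 + 15 (SIGTERM)
rm -f "$script"
```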

Testing: use the test command or its equivalent "[ ... ]" form. The opening "[" must be paired with a closing "]", and both must be separated from the condition by spaces.

Integer Comparison Operators:

-eq # Equal; -ne # Not equal; -gt # Greater than; -ge # Greater than or equal to; -lt # Less than; -le # Less than or equal to. Example:

[ "$num1" -eq "$num2" ] # Test whether num1 equals num2; if so, the test status is 0

[ "$num1" -ge "$num2" ] # Test whether num1 is greater than or equal to num2; if so, the test status is 0

String Comparison Operators:

-n tests whether the string is non-empty; -z tests whether the string is empty; =; !=; Example:

a="hello"

test "$a" and [ "$a" ] both succeed (the string is non-empty), so $? is 0

File Operators:

-d file # Test whether file is a directory

-e file # Test whether file exists

-f file # Test whether file is a regular file

-r file # Test whether file is readable by the process

-s file # Test whether the length of file is not 0

-w file # Test whether file is writable by the process

-x file # Test whether file is executable by the process

-L file # Test whether file is a symbolic link
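A sketch that combines the file operators in one `if`/`elif` chain. `describe_path` is a hypothetical helper, and the paths are created in a temporary directory. `-L` is checked first because `-d` and `-f` follow symbolic links and would report the link's target instead.

```bash
#!/bin/bash
# describe_path reports what kind of path it was given.
describe_path() {
    local p=$1
    if [ -L "$p" ]; then
        echo "symlink"
    elif [ -d "$p" ]; then
        echo "directory"
    elif [ -f "$p" ]; then
        echo "regular file"
    elif [ -e "$p" ]; then
        echo "other (device, pipe, or socket)"
    else
        echo "missing"
    fi
}

tmp=$(mktemp -d)
touch "$tmp/data.txt"
ln -s "$tmp/data.txt" "$tmp/link"
for p in "$tmp" "$tmp/data.txt" "$tmp/link" "$tmp/nope"; do
    echo "$p: $(describe_path "$p")"
done
```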

Brackets and Logical Operators:

"()" # Runs commands in a subshell (variable changes inside do not affect the parent shell); also used in $(...) command substitution and to initialize arrays

"(())" # Arithmetic evaluation and numerical comparison; also used in C-style for loops and while conditions

"[]" # Equivalent to the test command, used for file, string, and numerical condition judgments. Logical tests use [ a -a b ] [ a -o b ]

"[[ ]]" # Bash extended test: supports == with wildcard patterns (*, ?) and =~ for regex matching. Logical tests use [[ a && b ]] [[ a || b ]]

Example:

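a=1; ( a=2; echo "In child shell a=$a" ); echo "In parent shell a=$a" # The subshell prints a=2; the parent still has a=1

a=1; ((a += 3)) # a is now 4

if [ "$a" -eq 5 ]; then echo "a is 5"; fi

if [[ "$choice" == "yes" || "$choice" == "y" ]]; then echo "confirmed"; fi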

Operators:

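+ Addition; - Subtraction; * Multiplication; / Division (integer); % Modulus; ** Exponentiation;

++a and a++ both increment a (++a increments before the value is used, a++ after);

--a and a-- both decrement a (--a decrements before the value is used, a-- after);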
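A sketch, assuming bash, that puts the operators and the three test forms side by side. Note that `/` truncates, because shell arithmetic is integer-only.

```bash
#!/bin/bash
a=7
b=3

# Integer arithmetic with $(( )); division truncates.
echo "$(( a + b )) $(( a - b )) $(( a * b )) $(( a / b )) $(( a % b )) $(( a ** 2 ))"
# -> 10 4 21 2 1 49

x=$(( b++ ))   # post-increment: x gets 3, then b becomes 4
y=$(( ++b ))   # pre-increment: b becomes 5, then y gets 5

(( a > b )) && echo "(( )): a > b"      # numeric comparison without -gt
[ "$a" -ge 5 ] && echo "[ ]: a >= 5"    # test-style integer operator

choice="yes"
[[ $choice == y* ]] && echo "[[ ]]: pattern match"               # glob pattern on the right side
[[ -n $choice && $choice != "no" ]] && echo "[[ ]]: && inside the brackets"
```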

1. Conditional Judgment:

if [[ -f "file.txt" ]]; then

echo "File exists"

elif [[ -d "dir" ]]; then

echo "Directory exists"

else

echo "Other cases"

fi

2. Case Statement:

case "$OS" in

"Linux") echo "Linux system" ;;

"Mac") echo "Mac system" ;;

*) echo "Other systems" ;;

esac
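Case patterns are globs and may list alternatives with `|`. This sketch wraps the statement in a hypothetical `classify` function so it can be called with different values. `uname -s` prints the kernel name, for example `Linux` or `Darwin`.

```bash
#!/bin/bash
# classify maps an OS name to a label.
classify() {
    case "$1" in
        Linux)       echo "Linux system" ;;
        Darwin|Mac)  echo "Mac system" ;;    # | separates alternative patterns
        *BSD)        echo "BSD family" ;;    # glob patterns are allowed
        *)           echo "Other systems" ;;
    esac
}

classify "$(uname -s)"
classify FreeBSD    # BSD family
```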

3. Loop Structures:

# for loop

for i in {1..5}; do

echo $i

done

# select statement: displays a numbered menu and stores the chosen item in i

select i in Centos Redhat

do

echo "$i"

done

# while loop

count=0

while (( count < 3 )); do

echo $count

((count++))

done

# until loop

#!/bin/bash

a=1; until [[ "$a" -gt 5 ]]

do

echo "$a"; ((a++))

done

Flow control takes repeated practice, for example implementing the same scenario in several different ways. Flow control also includes the loop-control statements break and continue, which will be discussed in subsequent chapters.
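As a preview, here is a sketch of `continue` and `break` in a bash C-style `for` loop. It also shows a `while read` loop that processes input line by line. The here-string input is made up.

```bash
#!/bin/bash
odd=()
for (( i = 1; i <= 10; i++ )); do
    (( i % 2 == 0 )) && continue   # skip even numbers, go to the next iteration
    (( i > 7 )) && break           # leave the loop entirely once i passes 7
    odd+=("$i")
done
echo "odd: ${odd[*]}"              # odd: 1 3 5 7

# while read: process input line by line, skipping blank lines.
count=0
while IFS= read -r line; do
    [[ -z $line ]] && continue
    count=$(( count + 1 ))
done <<< $'alpha\n\nbeta'
echo "non-empty lines: $count"     # non-empty lines: 2
```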

4. Functions (Function)

Functions are the core tool for code reuse. They encapsulate repeated logic, simplify the structure of a script, and can call one another directly, including recursively.

Example:

Define a function, pass parameters:

print_a() {

echo "First parameter: $1"

echo "All parameters: $@"

}

print_a "Hello World" "Shell Script" # Quoting passes parameters that contain spaces correctly
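In bash, a function's `return` value is only an exit status (0-255). Data is usually "returned" by printing it and capturing the output with `$(...)`. This sketch shows both styles; the names `is_even` and `sum_all` are illustrative.

```bash
#!/bin/bash
# Status style: the last command's status becomes the function's return value.
is_even() {
    local n=$1            # local keeps n out of the caller's scope
    (( n % 2 == 0 ))      # 0 (true) if n is even
}

# Output style: print the result and let the caller capture it.
sum_all() {
    local total=0 arg
    for arg in "$@"; do   # "$@" keeps each argument separate
        total=$(( total + arg ))
    done
    echo "$total"
}

if is_even 4; then echo "4 is even"; fi
result=$(sum_all 1 2 3 4)
echo "sum=$result"        # sum=10
```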

Modular example:

# lib.sh

# Log recording function

log() {

local level=$1

local message=$2

echo "[$level] $(date '+%Y-%m-%d %H:%M:%S') - $message"

}

# Check if the file exists

file_exists() {

[ -f "$1" ] && return 0 || return 1

}

Save these functions to a separate file (e.g., lib.sh), then load them from the main script:

# main.sh

source ./lib.sh # or . ./lib.sh

log "INFO" "Script started"

if file_exists "/tmp/data.txt"; then

log "SUCCESS" "File found"

else

log "ERROR" "File missing"

fi

Recursive function:

# Calculate factorial

factorial() {

if [ "$1" -le 1 ]; then

echo 1

else

local prev=$(factorial $(( $1 - 1 )))

echo $(( $1 * prev ))

fi

}

result=$(factorial 5) # 5! = 120

echo "5! = $result"
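For comparison, here is a loop-based sketch; the name `factorial_iter` is hypothetical. It gives the same result as the recursive version without starting a `$(...)` subshell for every level of recursion.

```bash
#!/bin/bash
# Iterative factorial: multiply 2..n into an accumulator.
factorial_iter() {
    local n=$1 result=1 i
    for (( i = 2; i <= n; i++ )); do
        result=$(( result * i ))
    done
    echo "$result"
}

echo "5! = $(factorial_iter 5)"   # 5! = 120
```

Bash arithmetic uses 64-bit signed integers on common platforms, so either version overflows beyond 20!.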

5. Execution and Debugging

Ways to execute scripts:

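bash script.sh or /bin/sh test.sh # Specify the interpreter directly; the script does not need execute permission. /bin/sh is not bash on every system (on Debian and Ubuntu it is dash), so bash-only syntax may fail under sh

chmod u+x script.sh && ./script.sh # Give the owner execute permission, then run the script from the current directory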

bash -x script.sh # Display each executed command

#!/bin/bash

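set -euxo pipefail # Common debugging and safety options:

# -e: Exit immediately when a command fails

# -u: Treat references to undefined variables as errors

# -x: Print each command before executing it (set +x turns tracing off again)

# -o pipefail: A pipeline fails if any command in it fails, not just the last one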
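A sketch showing what `pipefail` and `-u` change. Each case runs in a child `bash -c` so the options do not leak into the surrounding script. `undefined_var` is a deliberately unset name.

```bash
#!/bin/bash
# Default: a pipeline's status is that of its last command, so the failure is hidden.
bash -c 'false | true; echo "default: $?"'                     # default: 0

# pipefail: the pipeline fails if any command in it fails.
bash -c 'set -o pipefail; false | true; echo "pipefail: $?"'   # pipefail: 1

# -e together with pipefail: the script stops at the failing pipeline.
bash -c 'set -eo pipefail; false | true; echo "not reached"' || echo "set -eo pipefail stopped the script"

# -u: expanding an unset variable is an error instead of an empty string.
bash -c 'set -u; echo "$undefined_var"' 2>/dev/null || echo "set -u stopped the script"
```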

6. Practice

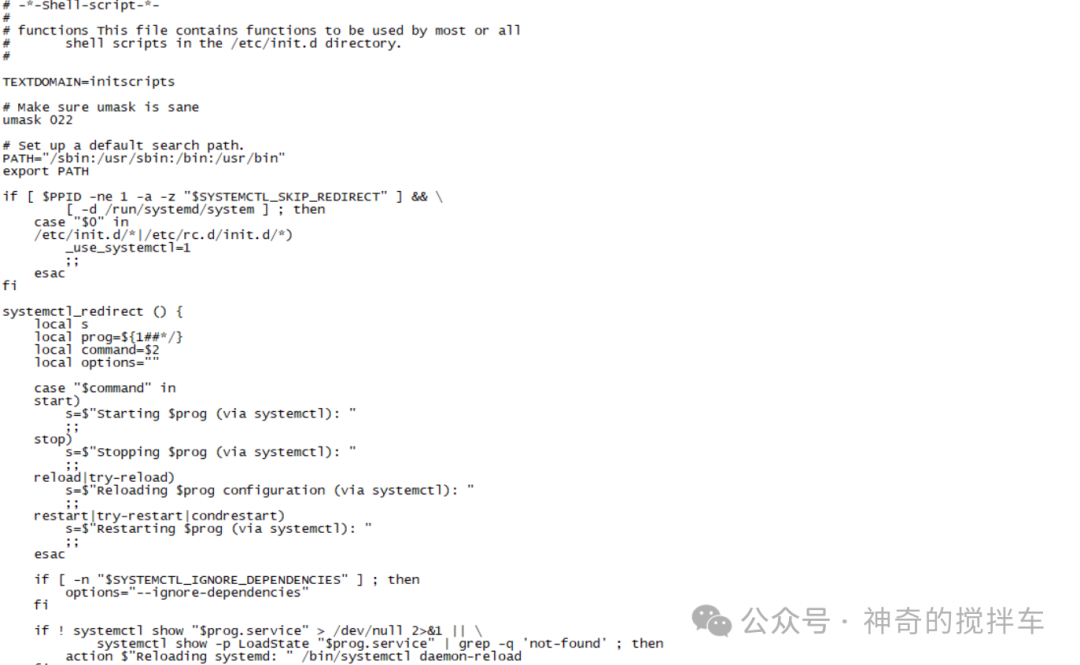

Read the Linux system startup function library /etc/init.d/functions

Reference:

https://www.cnblogs.com/sunfie/p/5149678.html

7. Summary:

Shell programming is very practical for tasks such as data backup, asset management, log analysis, system configuration, and security monitoring. It greatly helps enterprises automate their operations and maintenance, and it takes repeated, needs-driven practice to master. For complex business logic, it can be combined with languages such as Python.

If you have any questions, feel free to leave a message for discussion!