The spring of 2025 has been a special one. With humanoid robots and DeepSeek making a strong impression, AI has become the most promising field in technology. Almost everything is looking for a way to use AI, or for its own ‘aha moment’ when AI goes mainstream. Helping machines think better and bringing more AI to the edge is one of the important trends in AI development, and integrating an NPU into an MCU is one chip-level route to implementing it. STM32N6 pioneers this MCU+NPU architecture, offering MPU-level AI performance at MCU-level power consumption and cost. That combination makes edge AI deployment easier.

As AI chip technology keeps advancing, many neural network algorithms have become too demanding for traditional MCUs. To run them and deliver AI functionality at the edge, developers have had to adopt MPUs with integrated neural processing units (NPUs). STM32N6 integrates ST’s self-developed NPU, so it can take the place of those powerful MPUs. It keeps the advantages of processing at the edge (proximity to the original data source, lower latency, better data security and privacy, and a lower BOM cost) while also strengthening real-time operation and meeting lower power consumption requirements.

01 Overview of STM32N6 Features

STM32N6 is STMicroelectronics’ latest and most powerful STM32 MCU, excelling in:

- Dedicated Embedded Neural Processing Unit (NPU): STM32N6 integrates ST’s self-developed hardware NPU with a processing capability of 600 GOPS. It is also highly energy efficient, at 3 TOPS/W, so no cooling devices are needed when running AI models.

- Arm Cortex-M55 Core: The STM32N6 CPU is an Arm Cortex-M55 running at 800 MHz. Its M-Profile Vector Extension (MVE, also known as Helium) adds 150 new DSP and vector instructions, which the core uses to pre-process data before it is sent to the NPU and to post-process the results the NPU returns.

- Large Embedded RAM: STM32N6 has 4.2 Mbytes of embedded RAM for real-time data processing and multitasking, for example to store NPU inference data, to hold frame buffers, or to keep intermediate data during H.264 compression.

- Powerful Computer Vision Capabilities: STM32N6 integrates parallel and MIPI CSI-2 camera interfaces and a dedicated image signal processor (ISP). Combined with the 600 GOPS of AI processing power from the NPU, this makes it suitable for many machine vision applications.

- Extended Multimedia Features: STM32N6 integrates a 2.5D graphics accelerator, an H.264 encoder, and hardware acceleration for JPEG encoding and decoding, so video captured from a camera can be streamed over Ethernet or USB (UVC protocol) while AI processing runs at the same time.

- Enhanced Security Features: STM32N6 includes Arm TrustZone for the Cortex-M55 core and the NPU, and targets SESIP3 and PSA L3 certification.

02 Adding an NPU to the MCU Will Trigger New ‘Aha Moments’ in Edge AI Applications

STM32N6 reaches MPU-level AI performance because it is equipped with a neural network hardware acceleration unit, the Neural-ART accelerator. This ST-developed NPU delivers up to 600 GOPS (600 billion operations per second), 600 times the throughput of an STM32H7, which has no NPU.

An NPU is designed specifically to accelerate neural network computations and other AI tasks, and is optimized for matrix multiplication, convolution, and related linear algebra operations. It is therefore very efficient at running AI algorithms across different modalities, such as image classification, speech processing, and natural language. The NPU also consumes very little power on AI workloads, which makes it particularly suitable for battery-powered devices, and it reduces the latency of AI tasks on the microcontroller, which is crucial for real-time applications. Bringing an NPU into the MCU opens up a range of new possibilities for AI applications, paving the way for more advanced and complex use cases, including multimodal scenarios rather than only single-modal ones.

The performance gains brought by the NPU are also significant. As shown in the figure, classic neural network models for image classification, object detection, and speech recognition were run both on the STM32N6’s NPU and on its Cortex-M55 core alone; inference on the NPU was 26 to 134 times faster.
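The "26 to 134 times" figure is a ratio of inference times for the same model on the two compute engines. Written out, with t as the inference time per run, and followed by a worked example that uses hypothetical timings rather than values read from the figure:

```latex
\begin{aligned}
S &= \frac{t_{\text{M55}}}{t_{\text{NPU}}}, \qquad 26 \le S \le 134 \ \text{(models in the figure)} \\
S_{\text{example}} &= \frac{268\ \text{ms}}{2\ \text{ms}} = 134 \ \text{(hypothetical timings)}
\end{aligned}
```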

03 A Complete STM32N6 Development Toolchain Meets a Mature Product Ecosystem

Beyond hardware performance, software support is also crucial for AI application development. STM32N6 comes with a complete development toolchain and a mature software ecosystem, with native support for mainstream AI frameworks such as TensorFlow and Keras. Models from other frameworks, such as PyTorch, are supported through the ONNX format. ONNX is an open, standardized interchange format for neural network models; many frameworks can export to it, so it acts as a common intermediate representation between training frameworks and deployment tools. This reflects the flexibility of the STM32N6 NPU toolchain, which will support more AI frameworks and application layers in the future.

ST also provides a complete software ecosystem that simplifies and streamlines the development of new AI applications on STM32N6. At its core is the ST Edge AI Suite, which consists of three parts. The first is the Edge AI Model Zoo, a repository of free software tools, practical edge AI models, code examples, and detailed documentation, where developers of any experience level can find the support they need to build edge AI applications. The second is the Edge AI Developer Cloud, an online platform where developers can remotely benchmark their AI models on boards hosted in the cloud. Finally, STM32Cube.AI and ST Edge AI Core are model optimizers that convert neural networks into C code that runs on the device.

04 Conclusion

In today’s booming AI landscape, STM32N6 breaks new ground with its MCU+NPU architecture, significantly boosting performance and unlocking more edge AI scenarios, and it serves as the ‘invisible wing’ that helps edge AI take flight.

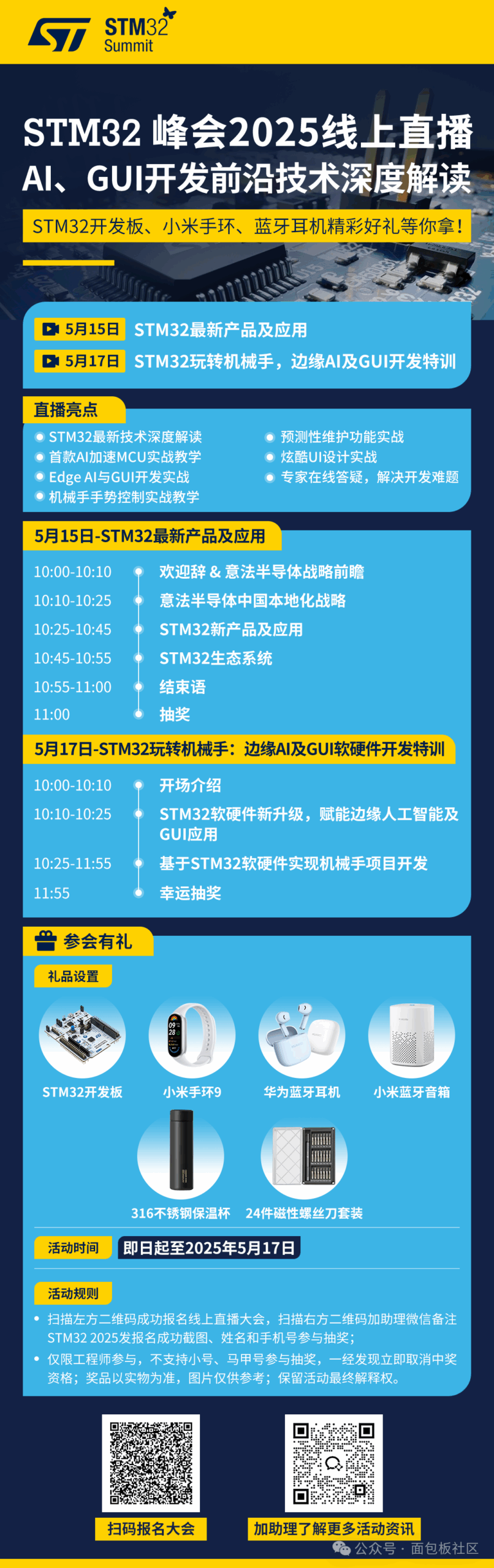

The STM32 Summit online live broadcast is about to begin and will cover related content. STM32N6 enthusiasts who are interested can sign up to watch it live!
