This is a work from Big Data Digest, please see the end of the article for reprint requirements.

Author | Adam Geitgey

Translation | Yuan Yuan, Lisa, Saint, Aileen

Python is undoubtedly an excellent programming language for processing data or automating repetitive tasks. Need to scrape web logs? Or adjust a million images? There is always a corresponding Python library to help you complete the task easily.

However, Python is often criticized for being slow. By default, a Python program runs as a single process on a single CPU core. If your computer was made in the last decade, it most likely has 4 or more CPU cores. That means 75% or more of your computer’s computing power sits idle while you wait for the program to finish!

Let’s see how to fully utilize computing resources through parallel computing. Thanks to Python’s concurrent.futures module, you can make a regular program run in parallel with just three lines of code.

Typical Python Execution

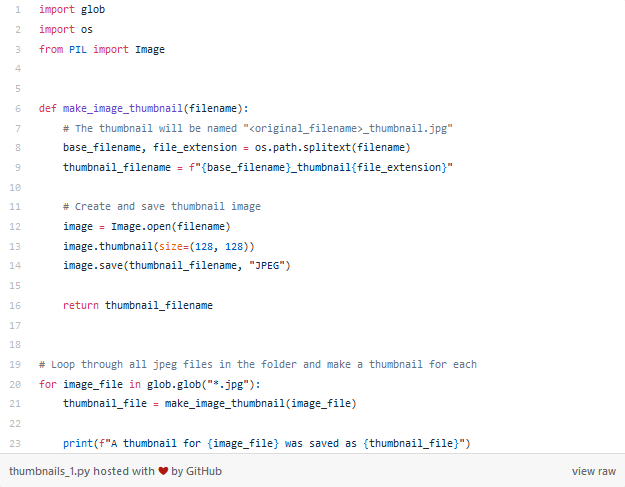

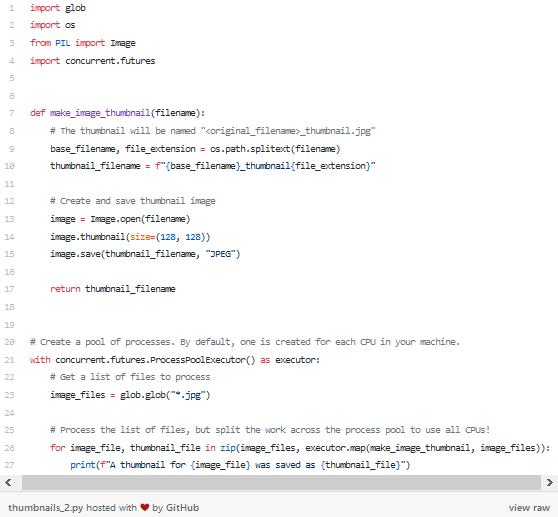

For example, we have a folder full of image files, and we want to create thumbnails for each image.

In the short program below, we use Python’s built-in glob function to get a list of all image files in the folder and use the Pillow image processing library to get a 128-pixel thumbnail for each image.

This program follows a very common data processing pattern:

1. Start with a series of files (or other data) that you want to process.

2. Write a helper function to process one piece of data.

3. Use a for loop to call the helper function to process the data one by one.

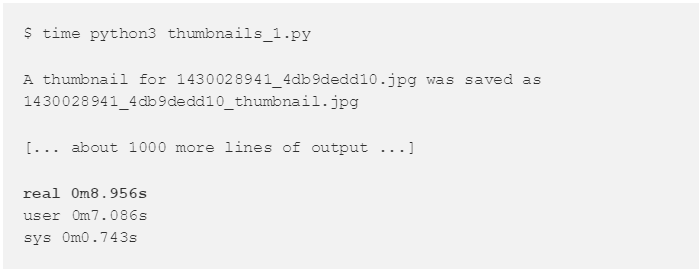
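In text form, the three-step pattern looks roughly like the sketch below. So that it runs with the standard library alone, the helper hashes each file instead of creating a thumbnail with Pillow; `process_file` and `process_all` are hypothetical names, not taken from the original program.

```python
import glob
import hashlib


def process_file(filename):
    """Step 2: process ONE file (a stand-in for the thumbnail work)."""
    with open(filename, "rb") as f:
        return hashlib.sha256(f.read()).hexdigest()


def process_all(pattern):
    """Steps 1 and 3: list the files, then handle them one by one."""
    results = {}
    for filename in glob.glob(pattern):
        results[filename] = process_file(filename)
    return results


if __name__ == "__main__":
    for filename, digest in process_all("*.jpg").items():
        print(f"{filename}: {digest}")
```

Everything here runs in one process, so only one CPU core does any work no matter how many files match.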

Let’s test this program with 1000 images and see how long it takes to run.

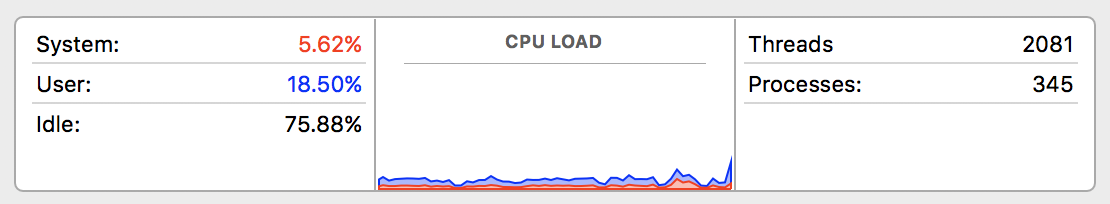

The program took 8.9 seconds to run, but how much of the computer’s computational resources were utilized?

Let’s run the program again while checking the Activity Monitor:

The computer is 75% idle! Why is that?

The problem is that my computer has 4 CPU cores, but Python only used one of them. Even though my program fully utilized that one CPU core, the other 3 CPU cores did nothing. We need to find a way to split the workload of the entire program into 4 parts and run them in parallel. Fortunately, Python can do that!

Let’s try parallel computing

Here’s one way to implement parallel computing:

1. Split the list of JPEG image files into 4 parts.

2. Run four Python interpreters simultaneously.

3. Let the four interpreters process a portion of the image files.

4. Aggregate the results from the four interpreters to get the final result.
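Step 1 is the only part of this plan that needs any arithmetic. A minimal sketch of splitting a list into four roughly equal parts might look like this; `split_into_parts` and the file names are hypothetical, used only for illustration.

```python
def split_into_parts(items, n_parts):
    """Split items into n_parts contiguous chunks whose sizes differ by at most one."""
    size, extra = divmod(len(items), n_parts)
    parts = []
    start = 0
    for i in range(n_parts):
        # The first `extra` parts each take one additional item.
        end = start + size + (1 if i < extra else 0)
        parts.append(items[start:end])
        start = end
    return parts


files = [f"photo_{i}.jpg" for i in range(10)]
parts = split_into_parts(files, 4)
print([len(p) for p in parts])  # [3, 3, 2, 2]
```

Launching the four interpreters and collecting their output by hand is the tedious part of this approach.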

The four Python programs run on 4 CPUs, achieving about 4 times the speed compared to running on 1 CPU, right?

The good news is that Python can help us with the tricky parts of parallel computing. We just need to tell Python what function we want to run and how many parts we want the work divided into, and leave the rest to Python. We only need to modify three lines of code.

First, we need to import the concurrent.futures library. This library is built into Python:
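As a sketch, the import is a single line, and nothing needs to be installed first:

```python
import concurrent.futures

# The module ships with the standard library and provides both pool types.
print(concurrent.futures.ProcessPoolExecutor.__name__)
print(concurrent.futures.ThreadPoolExecutor.__name__)
```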

Next, we need to tell Python to start 4 more Python instances. We convey this instruction by creating a Process Pool:
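A minimal sketch of that step, using `ProcessPoolExecutor` from `concurrent.futures`. The second pool, with an explicit `max_workers=4`, is only an illustration of how the size could be pinned.

```python
import concurrent.futures
import os

print("CPU cores reported by the OS:", os.cpu_count())

# No max_workers argument: the pool size defaults to the machine's CPU count.
with concurrent.futures.ProcessPoolExecutor() as executor:
    pass  # work is handed to `executor` inside this block

# The pool size can also be set explicitly.
with concurrent.futures.ProcessPoolExecutor(max_workers=4) as executor:
    pass
```

Leaving the `with` block shuts the pool down, so no worker processes are left behind.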

By default, the above code will create a Python process for each CPU on the computer, so if your computer has 4 CPUs, it will start 4 Python processes.

The final step is to let the Process Pool execute our helper function on the data list using these 4 processes. We can replace our previous for loop with:
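The replacement might look like the following sketch. `square` and `numbers` are hypothetical stand-ins for the helper function and the data list. The `if __name__ == "__main__":` guard matters on platforms that start worker processes by re-importing the script.

```python
import concurrent.futures


def square(n):
    """Stand-in for the per-item helper function."""
    return n * n


numbers = [1, 2, 3, 4, 5]

if __name__ == "__main__":
    # Before: for n in numbers: square(n)
    with concurrent.futures.ProcessPoolExecutor() as executor:
        results = list(executor.map(square, numbers))
    print(results)  # [1, 4, 9, 16, 25]
```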

The new code calls executor.map(), which takes the helper function and the list of data to process as arguments. It handles all the tedious work: splitting the list into smaller lists, sending each one to a worker process, running the workers, and collecting the results. Well done!

We can also obtain the results of each call to the helper function. The executor.map() function returns results in the order of the input data. Python’s zip() function can be used to retrieve the original filenames along with the corresponding results in one step.
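Pairing inputs with results could be sketched as follows. To keep the example free of Pillow, the hypothetical helper `thumbnail_name` only computes the output file name; the file names are made up for illustration.

```python
import concurrent.futures
import os


def thumbnail_name(filename):
    """Derive the thumbnail file name without touching the disk."""
    base, ext = os.path.splitext(filename)
    return f"{base}_thumbnail{ext}"


image_files = ["cat.jpg", "dog.jpg", "bird.jpg"]

if __name__ == "__main__":
    with concurrent.futures.ProcessPoolExecutor() as executor:
        # map() yields results in input order, so zip() pairs them up correctly.
        results = executor.map(thumbnail_name, image_files)
        for image_file, thumbnail_file in zip(image_files, results):
            print(f"A thumbnail for {image_file} would be saved as {thumbnail_file}")
```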

Here’s the program after the three modifications:

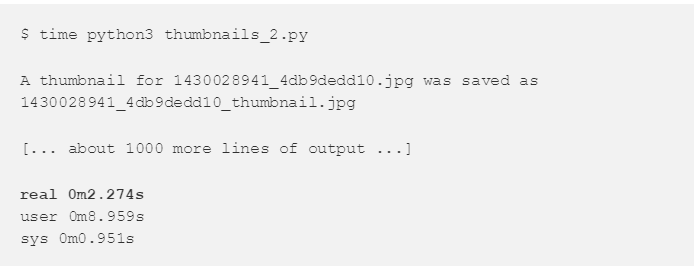

Let’s try running it and see if we can shorten the runtime:

The program finished in 2.274 seconds! That is roughly a 4x speedup over the original 8.9 seconds, because we used 4 CPUs instead of 1.

However, if you look closely, you will see that the “User” time is close to 9 seconds. If the program finished in about 2 seconds, how can the user time be 9 seconds? That seems impossible.

Actually, the “User” time is the total CPU time summed across all cores. Four cores each busy for a little over 2 seconds add up to about 9 seconds, the same amount of CPU work as the single-process run.

Note: Starting extra Python processes and sending data to them takes time, so this method will not always give a large speedup. If you are processing a very large number of items, the chunksize parameter of executor.map() may help; see the documentation: https://docs.python.org/3/library/concurrent.futures.html#concurrent.futures.Executor.map.
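A sketch of passing `chunksize` to `executor.map()`. The value 1000 and the helper `is_even` are arbitrary choices for illustration; a good chunk size depends on how expensive each item is to process.

```python
import concurrent.futures


def is_even(n):
    """Deliberately cheap helper, so per-item overhead dominates."""
    return n % 2 == 0


if __name__ == "__main__":
    numbers = range(100_000)
    with concurrent.futures.ProcessPoolExecutor() as executor:
        # Items are sent to workers in batches of 1000 instead of one at a time.
        results = list(executor.map(is_even, numbers, chunksize=1000))
    print(sum(results))  # 50000
```

With many tiny tasks, batching cuts the number of round trips between the main process and the workers.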

Will this method always speed up my program?

When you have a list of data, and each piece of data can be processed independently, using Process Pools is a good method. Here are some examples suitable for parallel processing:

- Scraping data from a series of individual web server logs.

- Parsing data from a bunch of XML, CSV, and JSON files.

- Preprocessing large amounts of image data to build machine learning datasets.

However, Process Pools are not a panacea. Using a Process Pool means passing data back and forth between separate Python processes. If the data you are working with cannot be passed between processes efficiently, this method will not work. In particular, the data must be of a type that Python knows how to pickle (https://docs.python.org/3/library/pickle.html#what-can-be-pickled-and-unpickled).
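One way to check in advance whether a value can cross a process boundary is to try pickling it. In this sketch `can_pickle` is a hypothetical helper, not part of any library.

```python
import pickle
import threading


def can_pickle(obj):
    """Return True if obj survives pickle.dumps(), False otherwise."""
    try:
        pickle.dumps(obj)
        return True
    except (pickle.PicklingError, TypeError, AttributeError):
        return False


print(can_pickle({"file": "cat.jpg", "size": (128, 128)}))  # True
print(can_pickle(threading.Lock()))  # False
print(can_pickle(lambda n: n * 2))  # False
```

Plain data such as strings, numbers, tuples, and dicts passes the check; locks and lambdas do not.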

Also, the data will not be processed in a predictable order. executor.map() returns results in input order, but the items themselves may be worked on in any order. If you need the result of one step before you can start the next, this method will not work either.

What about the GIL?

You may have heard that Python has a Global Interpreter Lock (GIL). The GIL ensures that only one thread executes Python code at any given time, even in a multi-threaded program. The biggest consequence is that a multi-threaded Python program cannot use multiple CPU cores to run Python code.

But Process Pools can solve this problem! Because we are running separate Python instances, each instance has its own GIL. This way, you have true parallel processing in your Python code!
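To see the difference yourself, a rough comparison could run the same CPU-bound job on a thread pool and on a process pool. `cpu_bound` and `timed_run` are hypothetical helpers, and the job size is arbitrary. Timings vary by machine, so none are shown here.

```python
import concurrent.futures
import time


def cpu_bound(n):
    """Pure-Python loop that needs the GIL for every iteration."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def timed_run(executor_class, jobs):
    """Run cpu_bound over jobs on the given pool type; return (seconds, results)."""
    start = time.perf_counter()
    with executor_class(max_workers=4) as executor:
        results = list(executor.map(cpu_bound, jobs))
    return time.perf_counter() - start, results


if __name__ == "__main__":
    jobs = [2_000_000] * 4
    thread_time, _ = timed_run(concurrent.futures.ThreadPoolExecutor, jobs)
    process_time, _ = timed_run(concurrent.futures.ProcessPoolExecutor, jobs)
    print(f"4 threads:   {thread_time:.2f} s")
    print(f"4 processes: {process_time:.2f} s")
```

On a multi-core machine with a standard Python build, the process pool would be expected to finish noticeably sooner, since each worker process has its own GIL.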

Don’t be afraid of parallel processing!

With the concurrent.futures library, Python allows you to easily modify scripts and immediately utilize all CPU cores on your computer. Don’t be afraid to try it. Once you get the hang of it, it’s as simple as writing a for loop, but it will make your entire program much faster.

Original link: https://medium.com/@ageitgey/quick-tip-speed-up-your-python-data-processing-scripts-with-process-pools-cf275350163a
