RISC-V processors have attracted widespread attention for their flexibility and scalability, but without efficient verification strategies, buggy design implementations could hold back RISC-V's continued adoption.

Before RISC-V emerged, processor verification was an arcane, rarely needed skill for most semiconductor companies. Expertise was concentrated in the few commercial companies that supplied processors or processor IP, and they typically developed their own internal processes and tools. The advent of the open RISC-V ISA and the surge of open-source implementations has changed this, creating strong demand for appropriate tools and expertise.

Over the past year, RISC-V International has announced several new extensions, and users are also encouraged to create their own extensions and modifications. Developing an extension may be relatively quick and straightforward; verifying it is not.

There are few standards or open-source tools to assist with processor verification. RISC-V is an open ISA that anyone can adopt to implement a processor. But leaders in the RISC-V market understand that not having to pay licensing fees does not make RISC-V a cheap option.

If you want to succeed with RISC-V, verification is not something you can shortcut.

Many people naively believe that verifying a processor is just a matter of checking that the instructions behave correctly. They build a test generator or adopt a verification suite and consider the job done, but the real issues lie in the microarchitecture and the pipeline. There is no standard method for microarchitecture verification, and there is little public discussion of it.

For any given RISC-V CPU, the verification effort is often underestimated. High-performance RISC-V cores add significant complexity to the control and data paths. Techniques such as speculative and out-of-order execution deliver the higher performance that demanding workloads need, which is essential if RISC-V is to be adopted as a server-grade CPU. However, these techniques can also introduce exploitable security vulnerabilities such as Spectre and Meltdown.

Many RISC-V developers come from traditional CPU architecture backgrounds and reuse what they have learned. It is still not easy, however, because the RISC-V ecosystem differs in important ways from those of traditional CPUs.

Verification of RISC-V microarchitectures is at a crossroads, balancing the advantages of openness and flexibility against the challenges of diversity and complexity. As the ecosystem matures, addressing these challenges is crucial for establishing RISC-V as a reliable and secure alternative to other architectures such as Arm and x86.

Through a community-driven approach, continuous innovation, and a focus on standardization, the RISC-V verification environment will continue to evolve to meet these challenges.

Not Just Random

Processor verification differs from conventional ASIC verification because the verification space is much larger. The "AS" in ASIC stands for application-specific: the intended applications of a fully verified chip are limited and bounded. A processor's are not. Every operation in a processor's instruction set architecture (ISA) must be verified to behave as specified in every possible scenario (every combination of instructions), and the software that will eventually run on processor IP cannot be predicted when that IP is verified.

SystemVerilog and UVM are the mainstays of ASIC verification. UVM works well for random instruction generation, but it has limitations, notably in coverage. If you claim 100% coverage of the "add instruction," your notion of coverage differs from mine, because I do not believe you have covered every possible combination. Moreover, the typical way to provide stimulus to a processor is to generate a large number of random instructions. You may be able to bias those instructions toward specific areas you want to test, but this is still a very inefficient way to verify a processor. It will find some simple bugs, but you must keep improving verification efficiency beyond that.

Many customers are doing constrained-random testing, and several instruction generators can randomly generate hundreds of thousands of instructions for you. But is that enough?
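As a rough illustration of what a constrained-random generator does, the Python sketch below emits a small subset of RV32I arithmetic instructions (ADD, SUB, XOR, OR, AND, ADDI) using the standard R-type and I-type encodings. The `Constraints` knobs (a restricted register pool to raise the chance of data hazards, per-instruction weights, and an immediate range) are hypothetical and far simpler than those of real generators; the point is only to show how constraints steer random stimulus toward an area of interest.

```python
import random
from dataclasses import dataclass, field

# Major opcodes and funct fields for a small RV32I subset (base ISA encodings).
OP, OP_IMM = 0b0110011, 0b0010011
R_TYPE = {  # mnemonic -> (funct3, funct7)
    "add": (0b000, 0b0000000),
    "sub": (0b000, 0b0100000),
    "xor": (0b100, 0b0000000),
    "or":  (0b110, 0b0000000),
    "and": (0b111, 0b0000000),
}


@dataclass
class Constraints:
    """Hypothetical constraint knobs for this toy generator."""
    regs: tuple = tuple(range(1, 8))      # small pool -> frequent register reuse
    weights: dict = field(default_factory=lambda: {m: 1 for m in [*R_TYPE, "addi"]})
    imm_range: tuple = (-2048, 2047)      # legal 12-bit signed immediate


def encode_r(name, rd, rs1, rs2):
    """Pack an R-type instruction: funct7 | rs2 | rs1 | funct3 | rd | opcode."""
    funct3, funct7 = R_TYPE[name]
    return (funct7 << 25) | (rs2 << 20) | (rs1 << 15) | (funct3 << 12) | (rd << 7) | OP


def encode_addi(rd, rs1, imm):
    """Pack ADDI (I-type): imm[11:0] | rs1 | funct3=000 | rd | opcode."""
    return ((imm & 0xFFF) << 20) | (rs1 << 15) | (rd << 7) | OP_IMM


def generate(n, c, seed=0):
    """Return n (assembly, 32-bit word) pairs drawn under constraints c."""
    rng = random.Random(seed)  # fixed seed so a failing program can be replayed
    names, w = zip(*c.weights.items())
    program = []
    for _ in range(n):
        name = rng.choices(names, weights=w)[0]
        rd, rs1, rs2 = (rng.choice(c.regs) for _ in range(3))
        if name == "addi":
            imm = rng.randint(*c.imm_range)
            program.append((f"addi x{rd}, x{rs1}, {imm}", encode_addi(rd, rs1, imm)))
        else:
            program.append((f"{name} x{rd}, x{rs1}, x{rs2}", encode_r(name, rd, rs1, rs2)))
    return program


if __name__ == "__main__":
    # Illustrative weights: bias the instruction mix toward ADD.
    for asm, word in generate(5, Constraints(weights={"add": 4, "sub": 1, "addi": 2})):
        print(f"{word:08x}  {asm}")
```

Fixing the seed makes a failing program reproducible. Note that no amount of weighting tells you which pipeline states were actually reached, which is exactly the coverage problem raised above.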

Based on the experience of traditional CPU vendors and what has been observed with RISC-V cores, simulation-based verification alone is not sufficient. This is why you must also consider other methods, such as formal verification.

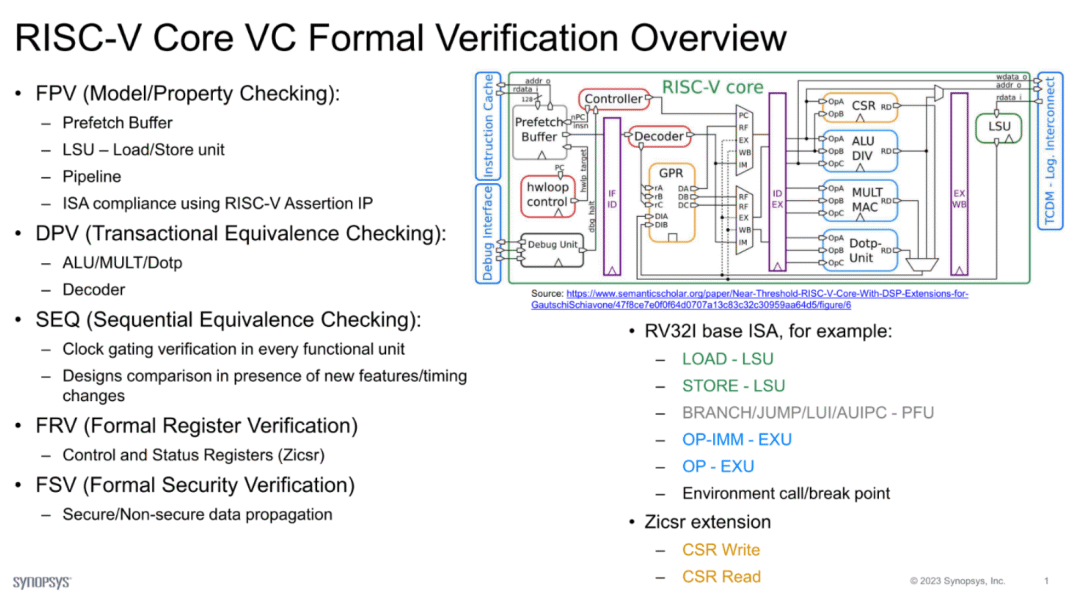

Processors are verified in a bottom-up manner, similar to how systems are verified today. Processor subunits include branch prediction, pipelines, and memory systems, such as caches. This is often where formal methods shine. Some people verify prefetch buffers/ALUs/register models/multipliers/load store units under constrained randomness, achieving good coverage. However, if you do not use formal verification, you risk leaving behind some extreme cases, which is the last mile of verification, carrying the risk of legacy bugs.

Using formal verification for processor submodules

Once the subunits are verified, they can be integrated. Imagine discovering an ALU bug while booting Linux.

At this point, a hybrid verification strategy is needed. Formal verification is useful because it effectively covers all possible input combinations, proving mathematically rather than by simulation that the design exhibits the behavior specified by the ISA, which is typically expressed as SystemVerilog assertions. Major processor vendors also maintain extensive verification suites, including UVM testbenches and test software. For large processors, simulation remains necessary for comprehensive verification across all modules, for confirming correct behavior once the core is integrated into the SoC, and for running test software on the processor under test.
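Formal tools do not literally simulate every input; they prove properties symbolically (for example with SAT-based model checking). For a tiny datapath, though, exhaustive enumeration reaches the same verdict and makes "all possible input combinations" concrete. The Python sketch below checks a hypothetical bit-level ripple-carry adder against the ISA-level rule that ADD wraps modulo 2^width, and returns the first counterexample when a bug is injected. At 32 bits the operand space is 2^64 pairs, far beyond enumeration, which is why real flows rely on formal engines and assertions rather than brute force.

```python
from itertools import product


def ripple_carry_add(a, b, width, buggy_bit=None):
    """Bit-level adder standing in for the implementation under test."""
    result, carry = 0, 0
    for i in range(width):
        x, y = (a >> i) & 1, (b >> i) & 1
        s = x ^ y ^ carry
        carry = (x & y) | (carry & (x ^ y))
        if i == buggy_bit:  # hypothetical injected bug: carry-out of this bit is lost
            carry = 0
        result |= s << i
    return result


def spec_add(a, b, width):
    """ISA-level behavior: ADD wraps modulo 2**width."""
    return (a + b) % (1 << width)


def check_exhaustively(width, **impl_kwargs):
    """Return the first counterexample (a, b), or None if impl == spec for every input pair."""
    for a, b in product(range(1 << width), repeat=2):
        if ripple_carry_add(a, b, width, **impl_kwargs) != spec_add(a, b, width):
            return a, b
    return None


if __name__ == "__main__":
    print(check_exhaustively(4))               # correct adder: no counterexample
    print(check_exhaustively(4, buggy_bit=1))  # buggy adder: first failing operand pair
```

The counterexample is the useful output: like a formal tool's trace, it points directly at an input that violates the property.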

Surprisingly, a processor core can have many bugs and still boot Linux. Booting a real Linux system exposes issues that EDA simulation and formal verification cannot find, such as many asynchronous timing issues.

Most people verify processors by comparing the implementation against a reference model. Specifications are not precise in every respect; for example, they may not specify what happens when six interrupts of identical priority occur simultaneously. Which one is taken, and at which pipeline stage it is handled, are microarchitectural choices that vary from core to core. This is where the reference model plays an unexpected role: when it disagrees with the RTL design, you are forced to analyze whether the design's behavior is reasonable.
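The reference-model comparison can be sketched as a lockstep loop: step an instruction-level model once for every instruction the RTL retires, and stop at the first disagreement. The Python below is a minimal illustration under stated assumptions: the three-instruction `RefModel` and the hypothetical `Retire` record (pc, destination register, written value) stand in for a full instruction-set simulator such as Spike and for a real RTL commit trace, whose format is not specified here. A mismatch is not automatically an RTL bug; as noted above, it may expose a point where the specification leaves the choice to the microarchitecture.

```python
from dataclasses import dataclass

MASK32 = 0xFFFFFFFF


@dataclass(frozen=True)
class Retire:
    """One retired instruction from a hypothetical RTL commit trace."""
    pc: int
    rd: int
    value: int


class RefModel:
    """Toy instruction-level reference model for three RV32I operations."""

    def __init__(self):
        self.x = [0] * 32  # integer register file; x0 stays zero
        self.pc = 0

    def step(self, insn):
        op, rd, rs1, src2 = insn  # src2: register index, or the immediate for addi
        if op == "addi":
            val = self.x[rs1] + src2
        elif op == "add":
            val = self.x[rs1] + self.x[src2]
        elif op == "sub":
            val = self.x[rs1] - self.x[src2]
        else:
            raise ValueError(f"unsupported op: {op}")
        if rd != 0:  # writes to x0 are discarded
            self.x[rd] = val & MASK32
        record = Retire(self.pc, rd, self.x[rd])
        self.pc += 4
        return record


def lockstep_compare(program, dut_trace):
    """Return None on agreement, else (index, expected, actual) for the first divergence."""
    ref = RefModel()
    for i, (insn, actual) in enumerate(zip(program, dut_trace)):
        expected = ref.step(insn)
        if expected != actual:
            return i, expected, actual
    if len(program) != len(dut_trace):
        # One side retired more instructions than the other.
        return min(len(program), len(dut_trace)), None, None
    return None
```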

A significant attraction of RISC-V is that you can freely modify the processor to better suit specific applications. The challenge is that every addition doubles the verification effort and complexity. Adding features is very easy; ensuring they are of high quality is difficult. Many people do not realize how much complexity custom instructions add, and they must perform all of this verification themselves. For every addition, you must re-verify the entire design and make sure the addition does not affect other components. Consider how it impacts the rest of the design, especially if it changes pipeline behavior, causes conflicts in the ALU, introduces cache-system issues, or affects load/store operations.

When are you done? Verification is never complete. The widely accepted view is that verification is done when it has been carried far enough that the remaining risk is manageable.

The verification process tells you what you have done; it gives you a certain level of confidence, but it does not guarantee that there will be no problems. With simulation, you can generate extensive coverage reports that give you confidence that you have exercised most of your design and found certain categories of bugs.

Given the complexity of processor verification, however, this kind of coverage is insufficient.

Processor coverage cannot stop at individual instructions and their pairings; coverage of that kind focuses only on the processor's decoder. It does not truly test anything else, including longer instruction sequences and what happens inside the pipeline.
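To make the point concrete, the sketch below measures a simple cross coverage of adjacent instruction pairs, split by whether the younger instruction reads the older one's destination register (a read-after-write dependency). The op classes in `OPS` and the program format are hypothetical. Even this model, which still says nothing about stalls, forwarding paths, or flushes inside the pipeline, has 50 bins for five op classes, and a program that executes every op at least once can leave most of them empty.

```python
from itertools import product

OPS = ("add", "sub", "addi", "lw", "sw")  # hypothetical op classes of interest


def pair_hazard_coverage(program, ops=OPS):
    """Cross coverage of (older op, younger op, RAW dependency?) for adjacent instructions.

    program: list of (op, rd, rs1, rs2) tuples; use None for unused register fields.
    Returns (set of hit bins, fraction of all bins hit).
    """
    all_bins = set(product(ops, ops, (False, True)))
    hit = set()
    for (op0, rd0, _, _), (op1, _, rs1, rs2) in zip(program, program[1:]):
        # x0 never creates a real dependency, and stores have no destination.
        raw = rd0 not in (None, 0) and rd0 in (rs1, rs2)
        hit.add((op0, op1, raw))
    return hit, len(hit & all_bins) / len(all_bins)
```

Real pipeline coverage models add bins for microarchitectural events that only the RTL can report, which is why they cannot be derived from the instruction stream alone.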

As new custom instructions are introduced in RISC-V, understanding the microarchitecture and how it affects the entire SoC and the workloads running on it is crucial. Hardware-assisted verification, including virtual prototyping, emulation, and hardware prototyping, is a key component of the overall verification process. These techniques help ensure that RISC-V microarchitecture decisions do not adversely affect power and performance trade-offs.

When it comes to functional safety, stricter measures are required. Depending on the safety integrity level (ASIL) required for the final product, a certain level of fault coverage must be achieved. Defined faults, as specified by ISO 26262 for functional safety, must be injected, followed by fault analysis to determine diagnostic coverage. When you inject these faults into critical functions of the design, have you built in safety mechanisms that detect and handle them?
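A fault-injection campaign can be illustrated at toy scale. The Python sketch below injects every single stuck-at fault on the output bits of an 8-bit adder, runs a small workload, and classifies each fault as not activated, detected by a safety mechanism, or undetected. The checker, which recomputes only the low four bits, is a deliberately weak, hypothetical safety mechanism, and the single ratio computed here is only a simplified stand-in for diagnostic coverage; ISO 26262 defines its actual hardware metrics, such as the single-point fault metric and the latent fault metric, more precisely.

```python
from itertools import product

WIDTH = 8
MASK = (1 << WIDTH) - 1


def adder(a, b, fault=None):
    """Functional adder; fault = (bit, stuck_value) forces one output bit (stuck-at model)."""
    s = (a + b) & MASK
    if fault is not None:
        bit, val = fault
        s = (s | (1 << bit)) if val else (s & ~(1 << bit))
    return s


def checker(a, b, s):
    """Hypothetical cheap safety mechanism: recompute and compare only the low 4 bits."""
    return ((a + b) & 0xF) == (s & 0xF)


def fault_campaign(vectors):
    """Inject each stuck-at fault, run the workload, and return (counts, coverage ratio)."""
    results = {"not_activated": 0, "detected": 0, "undetected": 0}
    for fault in product(range(WIDTH), (0, 1)):
        corrupted = detected = False
        for a, b in vectors:
            s = adder(a, b, fault)
            if s != adder(a, b):  # fault changed the visible result
                corrupted = True
                if not checker(a, b, s):
                    detected = True
                    break
        if not corrupted:
            results["not_activated"] += 1  # this workload never exposed the fault
        elif detected:
            results["detected"] += 1
        else:
            results["undetected"] += 1     # wrong result escaped the safety mechanism
    activated = results["detected"] + results["undetected"]
    ratio = results["detected"] / activated if activated else 1.0
    return results, ratio
```

With a workload whose results drive every output bit to both 0 and 1, for example the vectors `[(0, 0), (0xFF, 0)]`, the low-nibble checker catches exactly half of the activated faults: the kind of gap that fault analysis is meant to reveal before the product is certified.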

When verification can never be fully completed, heuristic approaches become important. The guiding principle is to stop thinking of yourself as a verification engineer and put yourself in the shoes of the chip's user, which means continuing to run real software. If everything still behaves normally after running for a while, that is very valuable; even though it cannot be quantified, it still carries great significance.

The open-source nature of RISC-V also exposes it to potential security risks. While transparency allows community-driven scrutiny, it also means that adversaries can access the same information. This calls for robust security verification to ensure that the microarchitecture can withstand various attacks. RISC-V's security challenges are more severe than those of closed architectures, whose proprietary designs can keep their security features confidential.

RISC-V requires more specialized verification tools. When processors were designed by only five or so companies, there was little demand for test generators or formal tools for those processors, because each company built its own in-house. With RISC-V, there is now clearly a market for architectural analysis, verification, and formal tools built around the RISC-V ISA.

Over time, we will see people develop performance analysis tools and formal tools for RISC-V, but it is still early days.

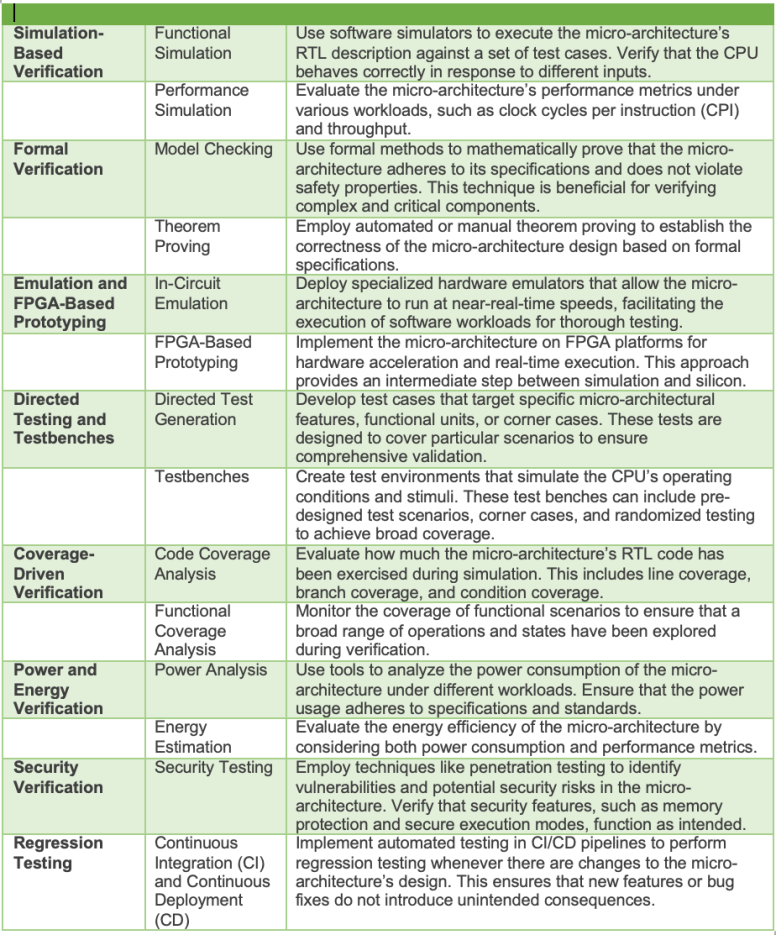

The following image lists the approaches that can be considered when verifying processor microarchitecture.

Verification Methods for Processors.