
I am Tongji Zihai. When Darlwen invited me to do a Raspberry Pi project, I happened to be in the United States and had the privilege of visiting Intel headquarters to learn more about the NCS2, so I decided to combine the two and build an artificial intelligence application.

The key steps are summarized below. For the complete step-by-step walkthrough, read the original text.

Materials Preparation

– A computer

Configuring the Raspberry Pi System

    sudo nano /etc/apt/sources.list

Use the following entries:

    deb http://mirrors.tuna.tsinghua.edu.cn/raspbian/raspbian/ stretch main contrib non-free rpi
    deb-src http://mirrors.tuna.tsinghua.edu.cn/raspbian/raspbian/ stretch main contrib non-free rpi

Then refresh the package lists:

    sudo apt-get update

This step is the well-trodden path worked out by countless Raspberry Pi users over the years, and it is especially suitable for beginners.

Check

    uname -m

An output of armv7l indicates normal operation.
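The same check can be made from Python, which is convenient at the top of a setup script. This is a minimal sketch using only the standard library; the helper name `is_armv7l` is illustrative and not part of any toolkit.

```python
import platform


def is_armv7l(machine=None):
    """Return True if the machine string is the 32-bit ARM value this guide expects.

    With no argument, the value reported by the running system is used
    (the same string that `uname -m` prints).
    """
    if machine is None:
        machine = platform.machine()
    return machine == "armv7l"


if __name__ == "__main__":
    print("machine:", platform.machine())
    print("armv7l:", is_armv7l())
```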

Change Source

    sudo nano /etc/apt/sources.list

Use the following entries:

    deb http://mirrors.tuna.tsinghua.edu.cn/raspbian/raspbian/ stretch main contrib non-free rpi
    deb-src http://mirrors.tuna.tsinghua.edu.cn/raspbian/raspbian/ stretch main contrib non-free rpi

Then edit the second list file:

    sudo nano /etc/apt/sources.list.d/raspi.list

Use the following entries:

    deb http://mirror.tuna.tsinghua.edu.cn/raspberrypi/ stretch main ui
    deb-src http://mirror.tuna.tsinghua.edu.cn/raspberrypi/ stretch main ui

Save and exit. Use the sudo apt-get update command to update the software source list.
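Each entry in these files has the shape "type, URI, suite, components". To make that structure explicit, here is a small sketch that splits one entry into its fields. The function name `parse_source_line` is hypothetical, and the sketch assumes the simple one-line form used above (it does not handle bracketed options).

```python
def parse_source_line(line):
    """Split a one-line APT source entry into its fields.

    Expected form: <type> <uri> <suite> <component> [<component> ...]
    Returns None for blank lines and comments.
    """
    line = line.strip()
    if not line or line.startswith("#"):
        return None
    parts = line.split()
    if len(parts) < 3 or parts[0] not in ("deb", "deb-src"):
        raise ValueError("not a one-line APT source entry: %r" % line)
    return {
        "type": parts[0],
        "uri": parts[1],
        "suite": parts[2],
        "components": parts[3:],
    }


entry = parse_source_line(
    "deb http://mirrors.tuna.tsinghua.edu.cn/raspbian/raspbian/ "
    "stretch main contrib non-free rpi"
)
print(entry["suite"], entry["components"])
```

Checking that the suite is `stretch` in every entry is a quick way to catch a mirror line copied for a different Raspbian release.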

Install CMake

    sudo apt install cmake

Download OpenVINO Toolkit for Raspbian

    cd ~/Downloads/
    tar -xf l_openvino_toolkit_runtime_raspbian_p_2019.3.334.tgz
    sed -i "s|<INSTALLDIR>|$(pwd)/inference_engine_vpu_arm|" inference_engine_vpu_arm/bin/setupvars.sh
    source inference_engine_vpu_arm/bin/setupvars.sh
    sh inference_engine_vpu_arm/install_dependencies/install_NCS_udev_rules.sh

The sed command fills in the <INSTALLDIR> placeholder inside setupvars.sh, so it has to run before that script is sourced.

Set Environment Variables

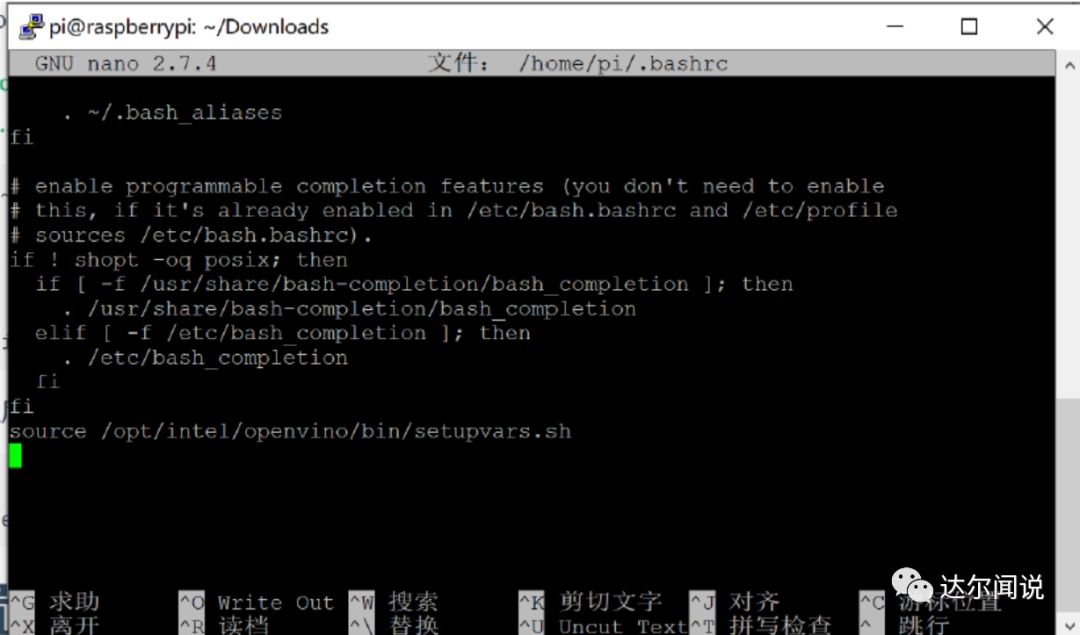

sudo nano /home/pi/.bashrc

Configure USB Rules for Intel Neural Stick 2

    sudo usermod -a -G users "$(whoami)"
    sh inference_engine_vpu_arm/install_dependencies/install_NCS_udev_rules.sh

The script should report:

    Updating udev rules...
    Udev rules have been successfully installed.

Then build the object detection sample:

    cd inference_engine_vpu_arm/deployment_tools/inference_engine/samples
    mkdir build && cd build
    cmake .. -DCMAKE_BUILD_TYPE=Release -DCMAKE_CXX_FLAGS="-march=armv7-a"
    make -j2 object_detection_sample_ssd

Download Model Files and Weights for Neural Stick
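The two model files fetched in this step follow one URL pattern and differ only in their extension. As a rough sketch of that pattern, the hypothetical helper `model_urls` rebuilds the two addresses used by the wget commands in this section. It only builds strings; whether other model names or precisions exist on the server is not checked here.

```python
BASE_URL = "https://download.01.org/openvinotoolkit/2018_R4/open_model_zoo"


def model_urls(name, precision="FP16", base_url=BASE_URL):
    """Return the URLs of the weights (.bin) and topology (.xml) files."""
    prefix = "{}/{}/{}/{}".format(base_url, name, precision, name)
    return [prefix + ".bin", prefix + ".xml"]


for url in model_urls("face-detection-adas-0001"):
    print(url)
```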

    wget --no-check-certificate https://download.01.org/openvinotoolkit/2018_R4/open_model_zoo/face-detection-adas-0001/FP16/face-detection-adas-0001.bin
    wget --no-check-certificate https://download.01.org/openvinotoolkit/2018_R4/open_model_zoo/face-detection-adas-0001/FP16/face-detection-adas-0001.xml

Face Detection

In the command below, replace /home/pi/Downloads/face/1.jpg with the absolute path of your face image. Run it from the ~/Downloads/inference_engine_vpu_arm/deployment_tools/inference_engine/samples/build directory:

    ./armv7l/Release/object_detection_sample_ssd -m face-detection-adas-0001.xml -d MYRIAD -i /home/pi/Downloads/face/1.jpg

Call OpenCV for Face Detection in Python

    import cv2 as cv

    print('Starting face detection')

    # Load model and weight files
    net = cv.dnn.readNet('face-detection-adas-0001.xml', 'face-detection-adas-0001.bin')
    # Specify target device
    net.setPreferableTarget(cv.dnn.DNN_TARGET_MYRIAD)

    # Read image, change the image path to your face image
    frame = cv.imread('./6.jpg')

    # Prepare input blob and perform an inference
    blob = cv.dnn.blobFromImage(frame, size=(672, 384), ddepth=cv.CV_8U)
    net.setInput(blob)
    out = net.forward()

    # Draw detected faces on the frame
    for detection in out.reshape(-1, 7):
        confidence = float(detection[2])
        # xmin, ymin - top left corner coordinates
        xmin = int(detection[3] * frame.shape[1])
        ymin = int(detection[4] * frame.shape[0])
        # xmax, ymax - bottom right corner coordinates
        xmax = int(detection[5] * frame.shape[1])
        ymax = int(detection[6] * frame.shape[0])
        if confidence > 0.5:
            cv.rectangle(frame, (xmin, ymin), (xmax, ymax), color=(0, 255, 0))

    # Save the detected face image
    cv.imwrite('./out.png', frame)
    print('Face detection completed, saved the result image')

Configure the Raspberry Pi Camera

    sudo apt-get install python-opencv
    sudo apt-get install fswebcam

Open /etc/modules and add the bcm2835-v4l2 module on its own line:

    sudo nano /etc/modules

    bcm2835-v4l2

Restart Raspberry Pi

    vcgencmd get_camera
    raspistill -o image.jpg
    ls /dev

    fswebcam -S 10 test.jpg

This command skips the first 10 frames before taking the picture (to give some preparation time) and writes an image named test.jpg to the current directory, which is /home/pi when the command is run from the home directory.
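The idea of letting a few frames pass before keeping one, so the camera has time to settle, can also be sketched in Python. The helper `grab_after_skip` and the `FakeCapture` stand-in are illustrative names; on the Pi, an object such as `cv2.VideoCapture(0)` would be passed instead, on the assumption that its `read()` returns an `(ok, frame)` pair.

```python
def grab_after_skip(capture, skip=10):
    """Discard `skip` frames, then return the next one (or None on failure).

    `capture` is any object with a read() method that returns (ok, frame).
    """
    for _ in range(skip):
        ok, _frame = capture.read()
        if not ok:
            return None
    ok, frame = capture.read()
    return frame if ok else None


class FakeCapture:
    """Stand-in for a camera that yields the integers 0, 1, 2, ... as frames."""

    def __init__(self):
        self.count = 0

    def read(self):
        frame = self.count
        self.count += 1
        return True, frame


# Frames 0..9 are discarded, so the frame that is kept is number 10.
print(grab_after_skip(FakeCapture(), skip=10))
```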

Configure Display Window

Open VNC Viewer and run this command in the Raspberry Pi command line:

    export DISPLAY=:0.0

Real-time Face Detection with Raspberry Pi Camera

    # coding=utf-8
    # face-detection-camera.py
    import cv2 as cv

    print('Starting real-time face detection with camera')

    # Load model files and weights
    net = cv.dnn.readNet('face-detection-adas-0001.xml', 'face-detection-adas-0001.bin')
    # Specify target device
    net.setPreferableTarget(cv.dnn.DNN_TARGET_MYRIAD)

    # Read image frames from camera
    cap = cv.VideoCapture(0)
    while True:
        # Get a frame image; stop if the camera returns nothing
        ret, frame = cap.read()
        if not ret:
            break
        # Prepare input blob and perform an inference
        frame = cv.resize(frame, (480, 320), interpolation=cv.INTER_CUBIC)
        blob = cv.dnn.blobFromImage(frame, size=(672, 384), ddepth=cv.CV_8U)
        net.setInput(blob)
        out = net.forward()
        # Draw face boxes
        for detection in out.reshape(-1, 7):
            confidence = float(detection[2])
            # Top left corner of the face box
            xmin = int(detection[3] * frame.shape[1])
            ymin = int(detection[4] * frame.shape[0])
            # Bottom right corner of the face box
            xmax = int(detection[5] * frame.shape[1])
            ymax = int(detection[6] * frame.shape[0])
            if confidence > 0.5:
                cv.rectangle(frame, (xmin, ymin), (xmax, ymax), color=(0, 255, 0))
        # Display the image
        cv.imshow("capture", frame)
        # Poll the keyboard for 1 millisecond; press q to save the image and quit
        if cv.waitKey(1) & 0xFF == ord('q'):
            cv.imwrite('out.png', frame)
            print("save one image done!")
            break

    # Close the camera and display window
    cap.release()
    cv.destroyAllWindows()
    print('Real-time face detection with camera completed')

Run this script file

python3 face-detection-camera.py
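Both Python scripts decode the network output the same way: each row holds seven values, with the confidence at index 2 and the box corners at indices 3 to 6 as fractions of the frame size. A minimal sketch of that step as a standalone function, so it can be tried without a camera or an NCS2, is shown here. The name `detections_to_boxes` and the sample rows are made up for illustration; in the scripts, `out.reshape(-1, 7)` would be passed as `rows`.

```python
def detections_to_boxes(rows, width, height, threshold=0.5):
    """Convert detection rows into pixel boxes.

    Each row has 7 values: index 2 is the confidence, and indices 3..6 are
    xmin, ymin, xmax, ymax in the 0..1 range. Rows at or below the
    threshold are dropped.
    """
    boxes = []
    for detection in rows:
        confidence = float(detection[2])
        if confidence <= threshold:
            continue
        xmin = int(detection[3] * width)
        ymin = int(detection[4] * height)
        xmax = int(detection[5] * width)
        ymax = int(detection[6] * height)
        boxes.append((xmin, ymin, xmax, ymax, confidence))
    return boxes


# Made-up rows: one confident detection and one weak detection.
sample_rows = [
    [0, 1, 0.9, 0.25, 0.25, 0.5, 0.75],
    [0, 1, 0.1, 0.0, 0.0, 1.0, 1.0],
]
print(detections_to_boxes(sample_rows, width=480, height=320))
```

Separating the decoding from the drawing also makes it easier to experiment with the 0.5 confidence threshold.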

With the NCS2, the Raspberry Pi can run the computer algorithm applications you want with ease, provided you have learned the most basic applications first. Zihai has prepared a detailed course for beginners; read the original text for more details.
