Source | Quantum Bit

2025 will be the year of mass production for humanoid robots.

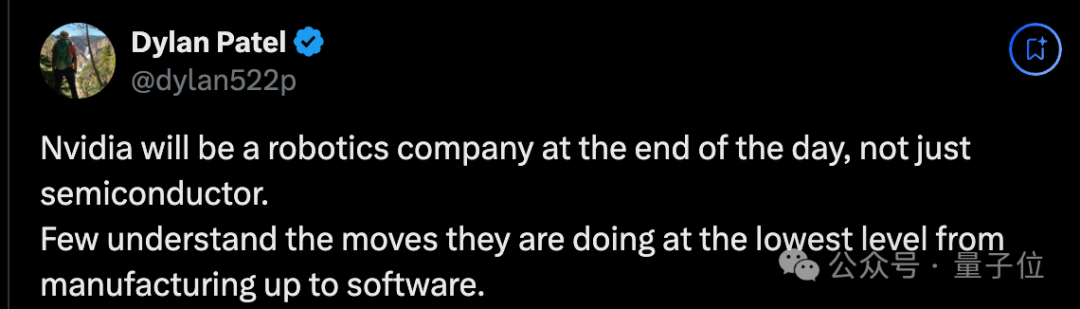

Jensen Huang’s recent appearance at CES alongside 14 robots has renewed discussion of a prediction from SemiAnalysis analysts:

NVIDIA will ultimately become a robotics company, not just a semiconductor company. Only a few people understand the groundwork it is laying, from manufacturing to software.

Where does this view come from? What is NVIDIA’s strategy in robotics? And why is it pointedly staying out of robot manufacturing itself?

At NVIDIA’s Beijing office, Deepu Talla, Vice President of Robotics and Edge Computing, answered all of these questions.

Three Computers, Welcoming the ChatGPT Moment for Robots

(The following is a summary of Deepu Talla’s speech)

For me, there are three main reasons that make robotics technology indispensable:

Dangerous jobs. In mining and other hazardous environments, robots can take over dangerous tasks from humans.

Labor shortages. Each generation chooses careers different from the previous one. For instance, my grandparents were farmers, but my parents left agriculture, and I have never worked in agriculture. Jobs are constantly evolving, and the issue of labor shortages is becoming more acute.

Demand for elder care. Human lifespans are increasing. Today, the global average life expectancy has surpassed 75 years, compared to just 67 years 25 years ago, and only 57 to 58 years 50 years ago. In another 25 years, life expectancy may exceed 100 years, and perhaps even reach 150 years. But if humans live to 200 years, would you want a 75-year-old child to take care of you? Probably not. So we need “social companions”—robots—to help address care and companionship issues.

These are not new pieces of information, but the situation has indeed changed. From 2024 to 2025, interest in robotics technology has significantly increased.

Companies around the world are exploring how to develop humanoid robots. Why now, and not two years ago? What has changed?

There are two main reasons:

Technological advancements, especially in the field of Generative AI (GenAI).

About two years ago, large language models (LLMs) disrupted the digital application space. Now, these technologies are also beginning to be applied in robotics, breaking the boundaries between digital applications and physical applications.

Improvements in simulation environments. Building a robot is very expensive, because robots have not yet reached mass production and costs remain high.

Making progress in the physical world also takes a lot of time because everything follows “real time” (what we call “wall clock time”). Previously, testing in robotics primarily relied on physical environments, which led to very slow progress. So what has changed in the past 12 months?

In the past year, NVIDIA has developed a technology called Omniverse. You can think of it as a simulation environment, a “green” environment. This technology has matured significantly—although it has not yet solved all problems, its development level is comparable to the application level of Generative AI in the simulation field. Now, imagine that with these technological advancements, the robot development process can become much faster.

Therefore, in the next 5 to 10 years, we will see significant differences in the field of robotics. This is why every company is now focused on advancing this field. I have discussed with many companies, and they are all researching robotics-related issues and developing general-purpose robot “brain” models. This is the future—a future that can help solve dangerous jobs, labor shortages, and operational challenges for businesses.

This progress is incredibly exciting. A year ago, it was unclear whether this direction would succeed. Although there have been many efforts before, the breakthrough developments in the past 6 to 12 months have changed everything.

Now, when people talk about robots, most think of physical robots: concrete machines that resemble humans or industrial tools. This physical form is the goal, the ultimate outcome. But the path to this goal is very complex. To truly address the challenges in robotics, we need three computers:

The first computer is for training: This is the system used to train AI models. Training typically occurs in the cloud, data centers, or powerful systems like NVIDIA DGX. This is a critical step in building the robot’s “brain”.

The second computer is for simulation: Once training is complete, testing is required. The previous standard was physical testing, but this method is slow, expensive, and risky. A better solution is to introduce a “simulation layer”, a “digital twin”, to complete testing in a virtual environment. Simulation allows for thousands of scenario tests to be run at scale, quickly, and safely, without being constrained by real-world time or costs.

The third computer is for deployment: The third system is installed inside the robot, serving as the “brain” that operates the physical robot. For NVIDIA, this can be achieved through systems like Jetson or AGX.

By integrating these three systems, we can significantly shorten development time. Before real-world deployment, thousands of tests can be conducted through simulation, accelerating the entire process. If a design performs poorly in reality, we can return to the simulation environment to optimize the model and test again. This cyclical process (training, simulation, testing) allows advancements in robotics to be achieved in 5 years instead of 50 years.
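The cycle described above can be sketched in a few lines of Python. This is only a toy illustration, not NVIDIA’s workflow or any real API: the functions `train`, `simulate`, and `develop`, the scalar `skill`, and the 90% pass-rate threshold are all hypothetical stand-ins chosen to show the shape of the loop.

```python
import random


def train(skill: float) -> float:
    """Hypothetical stand-in for the training computer.

    Each training round raises a scalar 'skill' score, capped at 1.0.
    """
    return min(1.0, skill + 0.25)


def simulate(skill: float, n_scenarios: int, rng: random.Random) -> float:
    """Hypothetical stand-in for the simulation computer.

    Runs many randomized scenarios and returns the fraction that passed.
    A scenario passes with probability equal to the current skill.
    """
    passed = sum(1 for _ in range(n_scenarios) if rng.random() < skill)
    return passed / n_scenarios


def develop(target_pass_rate: float = 0.9,
            n_scenarios: int = 1000,
            max_rounds: int = 10,
            seed: int = 0):
    """Repeat train -> simulate until the simulated pass rate is high enough.

    Returns (ready_to_deploy, history), where history holds one
    (round, pass_rate) pair per iteration.
    """
    rng = random.Random(seed)
    skill = 0.0
    history = []
    for round_idx in range(1, max_rounds + 1):
        skill = train(skill)                            # computer 1: training
        pass_rate = simulate(skill, n_scenarios, rng)   # computer 2: simulation
        history.append((round_idx, pass_rate))
        if pass_rate >= target_pass_rate:
            return True, history    # hand off to computer 3: on-robot deployment
    return False, history


if __name__ == "__main__":
    ready, history = develop()
    for round_idx, pass_rate in history:
        print(f"round {round_idx}: simulated pass rate {pass_rate:.1%}")
    print("ready to deploy" if ready else "needs more work")
```

The point of the sketch is that every failed iteration costs only simulated scenarios, not physical test time, which is why the loop can run many more times than a purely physical test program.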

This approach also explains why robotics is more challenging than autonomous driving. For autonomous driving, the main task is to avoid obstacles and ensure safety without needing to interact with physical objects. Robotics, however, involves contact, collisions, and complex physical interactions with objects, which are much harder to test and optimize.

Simulation is not a new concept. For example, in chip design, 100% of chips need to be simulated before manufacturing to ensure their functionality is flawless, as errors in chip design can lead to months of delays and cost millions or even billions of dollars.

In the field of robotics, the main challenge is the “sim-to-real gap”—the difference between simulation results and actual performance. Until recently, the accuracy of simulation technology was insufficient, so it was not widely adopted. However, technological advancements like Omniverse have significantly narrowed this gap. Although this gap has not been completely closed, the improvements are substantial enough to make simulation a viable and effective tool in robot development.

At NVIDIA, we do not directly manufacture robots but collaborate with everyone involved in robot manufacturing or robotics solution development. We are building a platform that includes three computing systems along with related software tools and workflows to help robotics experts, researchers, mechanical engineers, and testers develop robotic solutions more easily. This platform aims to simplify and accelerate the entire process.

Now, let’s focus on the three main steps in robot development: training, testing, and deployment.

Take AI model training as an example. Popular models like ChatGPT are trained on large GPUs and massive text data from the internet. However, training robot models requires entirely different data. Robots need to perform actions such as picking up objects, moving, interacting, or completing tasks. Unfortunately, this kind of data does not currently exist at scale.

Data collection and solutions face the following challenges:

Limitations of real-world data. Unlike autonomous vehicles that can rely on existing vehicles to collect data through sensors, the current number of robots is far from sufficient to generate comparable data volumes.

Current methods include using Apple Vision Pro or motion capture suits to record demonstrations of human actions. While these methods can provide useful data, the scale is too small to fully meet the training needs of robot models.

The importance of synthetic data generation. To address this issue, synthetic data generation becomes crucial. Through synthetic data, we can generate countless variations of specific actions (e.g., grasping objects) or build complete virtual environments.

NVIDIA Cosmos is a “world foundation model” that can generate highly realistic, video game-like environments for robot training. Here, “world” does not refer to Earth, but to the environment in which robots interact, such as the visible area within a room where the robot operates.

Combining real and synthetic data. By combining a small amount of real-world data with large-scale synthetic data generated using tools like NVIDIA Cosmos, we can overcome the data scarcity issue in robotics. This new workflow has been announced and is partially online, and we are implementing this solution in collaboration with global partners to address the foundational data challenges in robotics.
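To make the idea of mixing a few real demonstrations with many synthetic variations concrete, here is a minimal Python sketch. It is an assumption-laden toy: it uses simple random jitter on grasp parameters, which is far cruder than a world foundation model such as Cosmos, and the names `GraspDemo`, `synthesize`, and `build_training_set`, as well as the jitter ranges, are hypothetical and not part of any NVIDIA tool.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class GraspDemo:
    """One grasp demonstration (hypothetical, simplified record)."""
    object_x: float       # object position in metres
    object_y: float
    gripper_width: float  # gripper opening in metres
    source: str           # "real" or "synthetic"


def synthesize(real_demos, variations_per_demo, rng,
               position_jitter=0.05, width_jitter=0.005):
    """Create synthetic variations of each real demo by jittering its parameters.

    Assumption: small random perturbations stand in for a real generator.
    """
    synthetic = []
    for demo in real_demos:
        for _ in range(variations_per_demo):
            synthetic.append(GraspDemo(
                object_x=demo.object_x + rng.uniform(-position_jitter, position_jitter),
                object_y=demo.object_y + rng.uniform(-position_jitter, position_jitter),
                gripper_width=demo.gripper_width + rng.uniform(-width_jitter, width_jitter),
                source="synthetic",
            ))
    return synthetic


def build_training_set(real_demos, variations_per_demo=100, seed=0):
    """Combine the small real set with the larger synthetic set and shuffle."""
    rng = random.Random(seed)
    dataset = list(real_demos) + synthesize(real_demos, variations_per_demo, rng)
    rng.shuffle(dataset)
    return dataset


if __name__ == "__main__":
    real = [
        GraspDemo(0.30, 0.10, 0.04, "real"),
        GraspDemo(0.45, -0.05, 0.06, "real"),
    ]
    dataset = build_training_set(real)
    n_real = sum(d.source == "real" for d in dataset)
    print(f"{len(dataset)} demos total, {n_real} real, {len(dataset) - n_real} synthetic")
```

In this sketch two recorded demonstrations expand into a training set that is about 99% synthetic, which mirrors the ratio the workflow relies on: real data anchors the distribution, and generated data supplies the volume.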

Without sufficient data, robot models cannot be effectively trained, tested, or deployed. This makes data collection and generation the critical first step in addressing the challenges of robot development. By adopting this innovative workflow that combines real and synthetic data, NVIDIA is paving the way for significant advancements in the field of robotics.
