1. SIMD

Arm NEON is a SIMD (Single Instruction Multiple Data) extension architecture suitable for Arm Cortex-A and Cortex-R series processors.

SIMD uses a controller to control multiple processors, performing the same operation on each data element within a set of data (also known as “data vectors”), thus achieving parallelism.

SIMD is particularly suitable for common tasks such as audio and image processing. Most modern CPU designs include SIMD instructions to enhance multimedia performance.

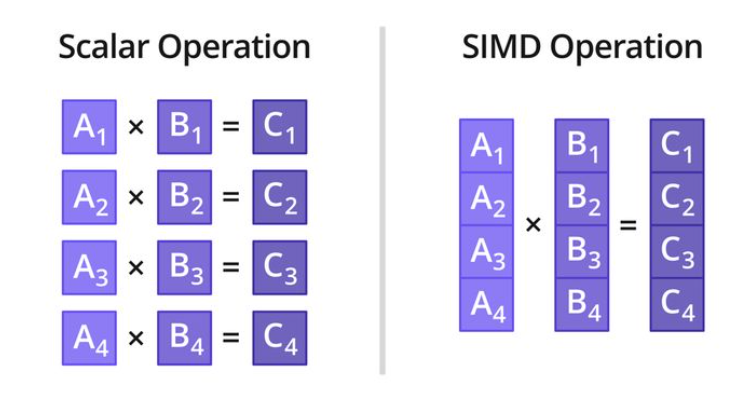

Illustration of SIMD operations

Illustration of SIMD operations

As shown in the figure above, scalar operations can only perform multiplication on one pair of data at a time, while SIMD multiplication instructions can perform multiplication on four pairs of data simultaneously.

A. Instruction Stream and Data Stream

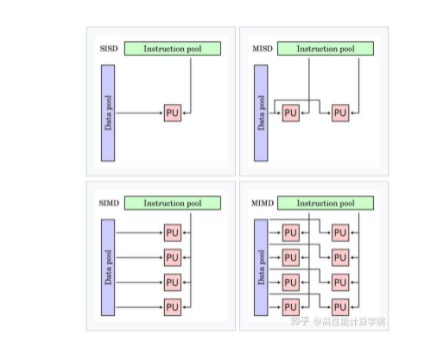

The Flynn classification method categorizes computers based on the handling of Instruction Streams and Data Streams, dividing them into four types:

Illustration of Flynn classification

Illustration of Flynn classification

1. SISD (Single Instruction Single Data)

The hardware of the machine does not support any form of parallel computing, and all instructions are executed serially. A single core executes a single instruction stream, operating on data stored in a single memory, one operation at a time. Early computers were SISD machines, such as the von Neumann architecture, IBM PCs, etc.

2. MISD (Multiple Instruction Single Data)

This uses multiple instruction streams to process a single data stream. In practice, using multiple instruction streams to handle multiple data streams is a more effective method; therefore, MISD only appears as a theoretical model and has not been implemented in practical applications.

3. MIMD (Multiple Instruction Multiple Data)

Computers have multiple processors that work asynchronously and independently. In any clock cycle, different processors can execute different instructions on different data segments, meaning they can simultaneously execute multiple instruction streams, with each stream operating on different data streams. MIMD architecture can be applied in various fields such as computer-aided design, computer-aided manufacturing, simulation, and modeling.

In addition to the above models, NVIDIA has introduced the SIMT architecture:

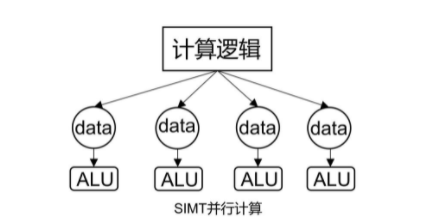

4. SIMT (Single Instruction Multiple Threads)

Similar to multithreading on a CPU, each core has its execution unit, with different data but the same executing commands. Multiple threads each have their processing units, unlike SIMD, which shares one ALU.

Illustration of SIMT

Illustration of SIMT

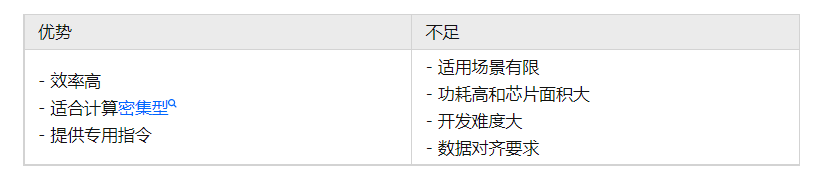

B. Characteristics and Trends of SIMD

1. Advantages and Disadvantages of SIMD

2. Trends in SIMD Development

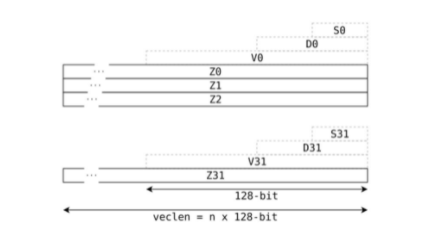

Taking the next generation SIMD instruction set SVE (Scalable Vector Extension) under the Arm architecture as an example, it is a completely new vector instruction set developed for high-performance computing (HPC) and machine learning.

The SVE instruction set has many concepts similar to the NEON instruction set, such as vectors, channels, and data elements.

The SVE instruction set also introduces a new concept: variable vector length programming model.

SVE scalable model

Traditional SIMD instruction sets use fixed-size vector registers, for example, the NEON instruction set uses fixed 64/128-bit length vector registers.

In contrast, the SVE instruction set, which supports the VLA programming model, allows for variable-length vector registers. This enables chip designers to choose an appropriate vector length based on load and cost.

The vector register length of the SVE instruction set supports a minimum of 128 bits and a maximum of 2048 bits, in increments of 128 bits. SVE design ensures that the same application can run on SVE instruction machines supporting different vector lengths without recompiling the code.

Arm introduced SVE2 in 2019, based on the latest Armv9, expanding more operation types to fully replace NEON while adding support for matrix-related operations.

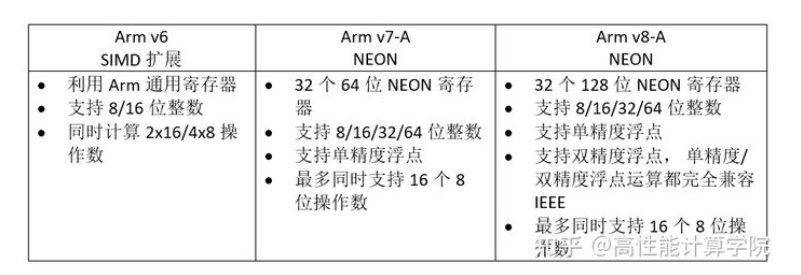

2. Arm’s SIMD Instruction Set

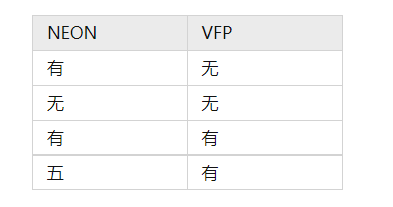

The Arm NEON unit is included by default in Cortex-A7 and Cortex-A15 processors, but in other Armv7 Cortex-A series processors, it is optional; some implementations of Cortex-A series processors that configure Armv7–A or Armv7–R architectures may not include the NEON unit.

The possible combinations of Armv7 compliant cores are as follows:

Therefore, it is essential to first confirm whether the processor supports NEON and VFP. This can be checked during compilation and runtime.

History of NEON development

History of NEON development

2. Checking SIMD Support of ARM Processors

2.1 Compile-time Check

The simplest way to detect whether the NEON unit exists. In the Arm Compiler Toolchain (armcc) version 4.0 and above or GCC, check whether the predefined macros ARM_NEON or __arm_neon are enabled.

The equivalent predefined macro for armasm is TARGET_FEATURE_NEON.

2.2 Runtime Check

Detecting the NEON unit at runtime requires the help of the operating system. The ARM architecture intentionally does not expose processor features to user-mode applications. In Linux,<span>/proc/cpuinfo</span><span> contains this information in a readable format, for example:</span>

-

In Tegra (dual-core Cortex-A9 processor with FPU)

$ /proc/cpuinfo

swp half thumb fastmult vfp edsp thumbee vfpv3 vfpv3d16-

ARM Cortex-A9 processor with NEON unit

$ /proc/cpuinfo swp half thumb fastmult vfp edsp thumbee neon vfpv3

Since the <span>/proc/cpuinfo</span><span> output is text-based, it is often preferred to check the auxiliary vector </span><code><span>/proc/self/auxv</span><span>, which contains binary format kernel <span>hwcap</span>, allowing easy searching of the <span>AT_HWCAP</span> record in the </span><code><span>/proc/self/auxv</span><span> file to check the <span>HWCAP_NEON</span> bit (4096).</span>

Some Linux distributions have modified the ld.so linker scripts to read hwcap through glibc and add additional search paths for shared libraries that enable NEON.

3. Instruction Set Relationships

-

In Armv7, the NEON instruction set has the following relationships with the VFP instruction set:

-

Processors with NEON units but without VFP units cannot perform floating-point operations in hardware.

-

As NEON SIMD operations execute vector calculations more efficiently, vector mode operations in the VFP unit have been deprecated since the introduction of ARMv7. Therefore, the VFP unit is sometimes referred to as the floating-point unit (FPU).

-

VFP can provide fully IEEE-754 compliant floating-point operations, while single-precision operations in the Armv7 NEON unit do not fully comply with IEEE-754.

-

NEON cannot replace VFP. The VFP provides some specialized instructions that do not have equivalent implementations in the NEON instruction set.

-

Half-precision instructions are only applicable to systems with NEON and VFP extensions.

-

In Armv8, VFP has been replaced by NEON, and issues such as NEON not fully complying with the IEEE 754 standard and some instructions supported by VFP but not by NEON have been resolved in ARMv8.

4. NEON

NEON is a 128-bit SIMD extension architecture suitable for Arm Cortex-A series processors, with each processor core containing a NEON unit, enabling multithreaded parallel acceleration.

1. Basic Principles of NEON

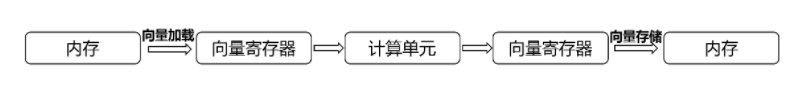

1.1 NEON Instruction Execution Process

The above figure is a flowchart of the NEON unit completing accelerated calculations. Each element in the vector register executes calculations synchronously, thus accelerating the computation process.

1.2 NEON Computational Resources

-

Relationship between NEON and Arm Processor Resources

– The NEON unit, as an extension of the Arm instruction set, uses independent 64-bit or 128-bit registers for SIMD processing, operating on the 64-bit register file. – NEON and VFP units are fully integrated into the processor, sharing processor resources for integer operations, loop control, and caching. This significantly reduces area and power consumption costs compared to hardware accelerators, and it also uses a simpler programming model since the NEON unit uses the same address space as the application.

-

Relationship between NEON and VFP Resources

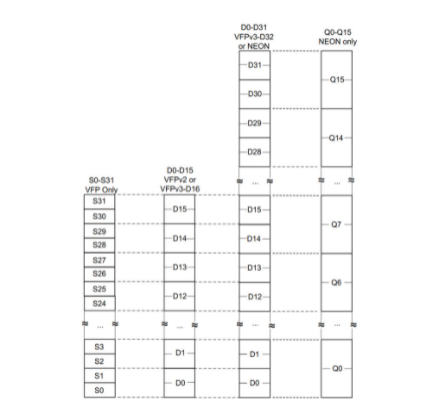

NEON registers overlap with VFP registers; Armv7 has 32 NEON D registers, as shown in the figure below.

NEON Registers

NEON Registers

2. NEON Instructions

2.1 Automatic Vectorization

Vectorizing compilers can use C or C++ source code to vectorize it in a way that effectively utilizes NEON hardware. This means that portable C code can still achieve the performance levels brought by NEON instructions.

To aid vectorization, set the number of loop iterations to be a multiple of the vector length. Both GCC and the ARM Compiler Toolchain have options to enable automatic vectorization for NEON technology.

2.2 NEON Assembly

For programs with particularly high performance requirements, manually writing assembly code is a more suitable approach.

The GNU assembler (gas) and the Arm Compiler Toolchain assembler (armasm) both support the assembly of NEON instructions.

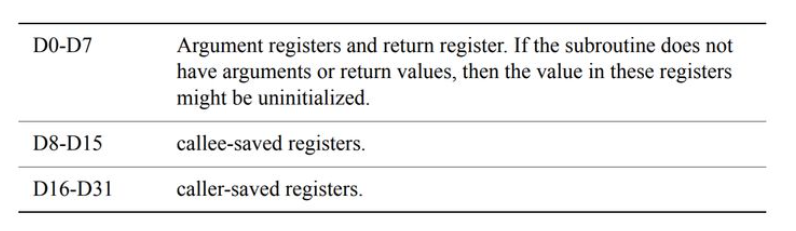

When writing assembly functions, it is essential to understand Arm EABI, which defines how to use registers. The ARM Embedded Application Binary Interface (EABI) specifies which registers are used to pass parameters, return results, or must be preserved, specifying the use of 32 D registers in addition to the Arm core registers. The following figure summarizes the functions of the registers.

Register Functions

Register Functions

2.3 NEON Intrinsics

NEON intrinsic functions provide a way to write NEON code that is easier to maintain than assembly code while still controlling the generated NEON instructions.

Intrinsic functions use new data types that correspond to D and Q NEON registers. These data types support creating C variables that directly map to NEON registers.

Writing intrinsic functions is similar to calling functions that use these variables as parameters or return values. The compiler does some of the heavy lifting typically associated with writing assembly language, such as:

Register allocation, code scheduling, or reordering instructions

-

Disadvantages of intrinsics

It is not possible for the compiler to output the desired code accurately, so there is still room for improvement when transitioning to NEON assembly code.

-

NEON Instruction Simplification Types

NEON data processing instructions can be categorized into normal instructions, long instructions, wide instructions, narrow instructions, and saturation instructions. For example, the long instruction of Intrinsic is

<span>int16x8_t vaddl_s8(int8x8_t __a, int8x8_t __b);</span>– The above function adds two 64-bit D register vectors (each vector contains 8 8-bit numbers) to generate a vector of 8 16-bit numbers (stored in a 128-bit Q register), thus avoiding overflow of the addition result.

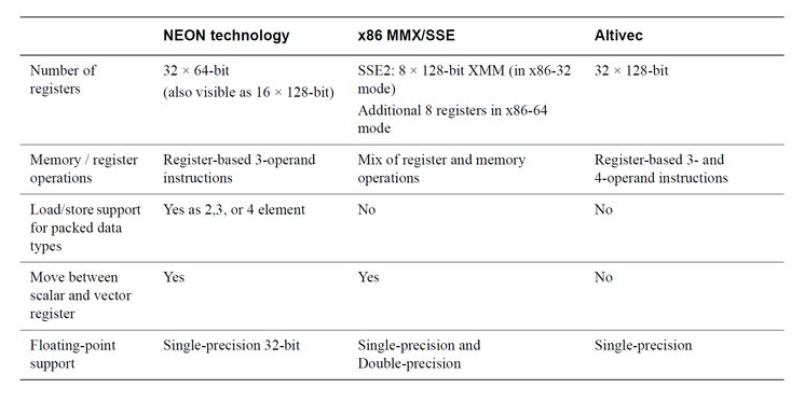

5. Other SIMD Technologies

1. Other Platforms’ SIMD Technologies

SIMD processing is not unique to Arm; the following diagram compares it with x86 and Altivec.

SIMD Comparison

SIMD Comparison

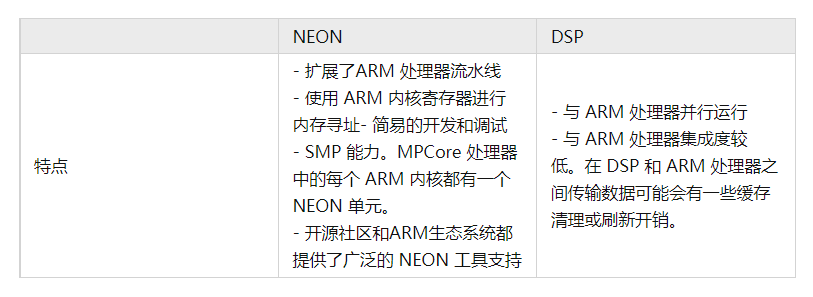

2. Comparison with Dedicated DSP

Many Arm-based SoCs also include coprocessing hardware such as DSPs, allowing for the simultaneous inclusion of NEON units and DSPs. Compared to DSPs, NEON has the following characteristics:

6. Conclusion

This section mainly introduces basic SIMD and other instruction stream and data stream processing methods, the basic principles of NEON, instructions, and comparisons with other platforms and hardware.

It is hoped that everyone can gain something.

Series Reading

-

Arm NEON Learning (1) Quick Start Guide

-

Arm NEON Learning (2) Optimization Techniques

-

Arm NEON Learning (3) NEON Assembly and Intrinsics Programming

Source | Arm Technology Academy

Copyright belongs to the original author. If there is any infringement, please contact for deletion.

END

关于安芯教育

安芯教育是聚焦AIoT(人工智能+物联网)的创新教育平台,提供从中小学到高等院校的贯通式AIoT教育解决方案。

安芯教育依托Arm技术,开发了ASC(Arm智能互联)课程及人才培养体系。已广泛应用于高等院校产学研合作及中小学STEM教育,致力于为学校和企业培养适应时代需求的智能互联领域人才。