In autonomous driving, high-precision simulation systems serve as a “virtual training ground”: engineers recreate extreme scenarios such as heavy rain, traffic congestion, and sudden accidents in the digital world to repeatedly verify the reliability of their algorithms.

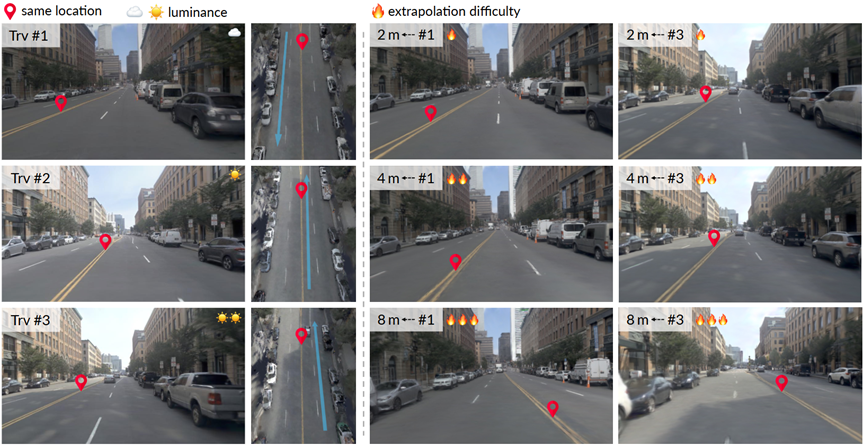

However, traditional simulation technologies face two major challenges. The first is a limited viewpoint: they rely on data from a single trajectory, such as camera footage recorded along a fixed route, so the reconstructed scene looks realistic only from viewpoints close to that route and cannot support a vehicle's “free exploration”. The second is dynamic distortion: the same intersection may be packed with vehicles at one time and completely empty at another, which causes the generated images to deviate from reality.

To address these issues, Shanghai Chuangzhi Academy, together with the University of Hong Kong and other institutions, proposed MTGS (Multi-Traversal Gaussian Splatting). By fusing data from multiple trajectories, MTGS builds ultra-high-precision simulation scenes that both reproduce real road details and respond dynamically to changes in the environment.

During daily commutes, vehicles often pass through the same road segment many times along different trajectories, and the fleets that collect driving data also tend to traverse the same neighborhoods repeatedly, with each vehicle recording the current block from different angles at different times. Multi-trajectory data therefore captures more information about the surrounding environment. However, experiments show that simply stacking the data does not improve reconstruction and can even degrade the scene model reconstructed from a single trajectory. One reason is that the traversals differ significantly in weather and lighting, which makes them hard to align. The core innovation of MTGS is to integrate these fragmented “digital puzzle pieces” so that the geometric information collected from different trajectories complements each other, yielding a driving scene with more accurate geometry.

- arXiv link: https://arxiv.org/abs/2503.12552
- Code, checkpoints, etc. will be open-sourced soon.

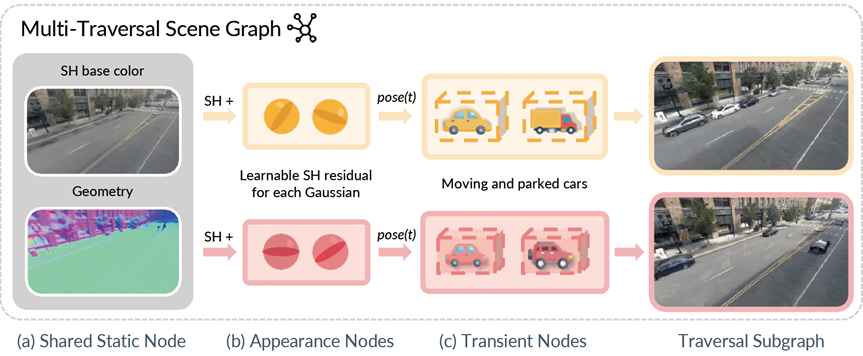

A Heterogeneous Scene Graph Built from Multiple Trajectories

MTGS organizes the elements of a scene into a heterogeneous graph and, based on the characteristics of each element, divides them into three types of nodes: static nodes, appearance nodes, and transient nodes. This “divide and conquer” design lets MTGS faithfully restore the road itself while flexibly representing ever-changing traffic and environmental conditions.

Static nodes – Static backgrounds shared by all trajectories, such as asphalt roads and traffic signs.

Appearance nodes – Per-trajectory spherical harmonic coefficients that adjust lighting and shading, accounting for the weather and lighting differences between trajectories recorded at different times.

Transient nodes – Moving objects unique to each trajectory, such as passing vehicles and temporarily parked delivery trucks.
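To make the three node types concrete, here is a minimal Python sketch of how such a graph might be organized. It is not MTGS's actual data structure: the class names (`StaticNode`, `AppearanceNode`, `TransientNode`, `SceneGraph`) and the helpers `add_traversal` and `nodes_for_traversal` are hypothetical, and real nodes would hold Gaussian parameter tensors rather than the placeholder fields used here. What it illustrates is the sharing pattern: one static node for all trajectories, one appearance node per trajectory, and transient nodes that each belong to exactly one trajectory.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


# Hypothetical node types; real ones would store Gaussian parameters.
@dataclass
class StaticNode:
    """Background Gaussians shared by every trajectory (roads, signs)."""
    num_gaussians: int


@dataclass
class AppearanceNode:
    """Appearance parameters for the static Gaussians in one trajectory."""
    traversal_id: int


@dataclass
class TransientNode:
    """An object seen in only one trajectory (e.g. a passing car)."""
    traversal_id: int
    object_id: str


@dataclass
class SceneGraph:
    static: StaticNode
    appearance: Dict[int, AppearanceNode] = field(default_factory=dict)
    transients: List[TransientNode] = field(default_factory=list)

    def add_traversal(self, traversal_id: int, object_ids: List[str]) -> None:
        # Each trajectory gets its own appearance node and transient nodes;
        # the static node is never duplicated.
        self.appearance[traversal_id] = AppearanceNode(traversal_id)
        for oid in object_ids:
            self.transients.append(TransientNode(traversal_id, oid))

    def nodes_for_traversal(
        self, traversal_id: int
    ) -> Tuple[StaticNode, AppearanceNode, List[TransientNode]]:
        """Collect what is needed to render one trajectory."""
        own = [t for t in self.transients if t.traversal_id == traversal_id]
        return self.static, self.appearance[traversal_id], own


graph = SceneGraph(StaticNode(num_gaussians=100_000))
graph.add_traversal(0, ["car_17"])
graph.add_traversal(1, ["truck_3", "car_42"])
static, appearance, transients = graph.nodes_for_traversal(1)
```

Rendering trajectory 1 reuses the same `static` object as trajectory 0 but picks up only trajectory 1's appearance node and vehicles.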

Static nodes and appearance nodes jointly define the Gaussians that represent the static background. The static node provides each Gaussian's position, rotation quaternion, scale, opacity, and the first (zeroth-order) spherical harmonic coefficient, while the appearance node supplies the remaining, higher-order spherical harmonic coefficients. This split follows from a property of spherical harmonics: the zeroth-order function $Y_0^0$ is constant over all directions (rotation-invariant), so it naturally represents an object's intrinsic or base color, whereas the higher-order functions vary with the viewing direction and are better suited to capturing color variations across trajectories and viewpoints, such as shadows and reflections.
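The coefficient split can be sketched by evaluating a Gaussian's color from a zeroth-order (DC) coefficient held by the static node plus degree-1 coefficients held by one trajectory's appearance node. This assumes degree-1 spherical harmonics with the basis constants and sign convention of the original 3D Gaussian Splatting reference code; MTGS may use higher degrees, and `sh_color` and the example coefficients are hypothetical. With all higher-order coefficients at zero, the color is identical from every direction, which is why the DC term can serve as the shared base color.

```python
import math

SH_C0 = 0.28209479177387814  # Y_0^0 = 1 / (2 * sqrt(pi)): same value in every direction
SH_C1 = 0.4886025119029199   # normalisation of the three degree-1 basis functions


def sh_color(dc, rest, view_dir):
    """Hypothetical helper: degree-1 SH colour of one Gaussian.

    dc:   3 values (RGB), the Y_0^0 coefficients from the static node
    rest: 3x3 nested list, rest[k][c] = coefficient of degree-1 basis k for
          channel c, taken from one trajectory's appearance node
    view_dir: viewing direction, normalised inside the function
    """
    x, y, z = view_dir
    norm = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / norm, y / norm, z / norm
    basis = (-SH_C1 * y, SH_C1 * z, -SH_C1 * x)  # 3DGS sign convention
    color = []
    for c in range(3):
        value = SH_C0 * dc[c] + sum(basis[k] * rest[k][c] for k in range(3))
        color.append(value + 0.5)  # 3DGS-style offset; clamping omitted
    return color


dc = [1.0, 0.5, 0.2]                        # shared base colour (static node)
sunny = [[0.0] * 3 for _ in range(3)]       # trajectory A: no view dependence
overcast = [[0.1, 0.1, 0.1],                # trajectory B: view-dependent shading
            [0.0, 0.0, 0.0],
            [-0.2, -0.2, -0.2]]
```

Calling `sh_color(dc, sunny, d)` returns the same color for any direction `d`, while `sh_color(dc, overcast, d)` changes with `d` even though both trajectories share the same `dc`.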

Appearance Alignment Within the Same Trajectory

Besides the lighting differences between trajectories, appearance can also be inconsistent within a single trajectory: some cameras produce overexposed images, and color tones differ from camera to camera. MTGS uses the colors of LiDAR points as “anchors”, aligning the colors that different cameras capture for the same spatial point at the same moment, and learns an independent affine color transformation for each camera so that images keep a consistent color tone across cameras and capture times.
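A per-camera affine color correction can be sketched as fitting a 3×3 matrix and a bias that map the colors one camera recorded for a set of LiDAR points onto those points' anchor colors. The source describes learning these transformations during training; as a stand-in, this sketch solves for them in closed form with NumPy least squares (`np.linalg.lstsq`). The helper names, the camera distortion, and the synthetic data are hypothetical.

```python
import numpy as np


def fit_affine_color(camera_rgb: np.ndarray, anchor_rgb: np.ndarray):
    """Fit M (3x3) and b (3,) so that camera_rgb @ M.T + b approximates anchor_rgb.

    camera_rgb: (N, 3) colours one camera recorded for N LiDAR points
    anchor_rgb: (N, 3) anchor colours of the same points (e.g. LiDAR point colours)
    """
    n = camera_rgb.shape[0]
    X = np.hstack([camera_rgb, np.ones((n, 1))])        # (N, 4): RGB plus constant 1
    A, *_ = np.linalg.lstsq(X, anchor_rgb, rcond=None)  # (4, 3) least-squares solution
    return A[:3].T, A[3]                                 # M, b


def apply_affine_color(rgb: np.ndarray, M: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Map a camera's colours into the shared anchor colour space."""
    return rgb @ M.T + b


# Synthetic check: simulate an over-bright, slightly tinted camera.
rng = np.random.default_rng(0)
anchor = rng.uniform(0.0, 1.0, size=(200, 3))
M_cam = np.array([[1.3, 0.05, 0.0], [0.0, 1.2, 0.0], [0.0, 0.02, 1.1]])
b_cam = np.array([0.10, 0.05, -0.02])
observed = anchor @ M_cam.T + b_cam

M, b = fit_affine_color(observed, anchor)
corrected = apply_affine_color(observed, M, b)
```

Because every camera gets its own `(M, b)` pair, an overexposed camera and a correctly exposed one are both pulled toward the same anchor colors rather than toward each other.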

To suppress artifacts such as “floating fragments”, MTGS adds several further constraints: (1) LiDAR point clouds supervise the 3D geometry, so that structures such as curbs and guardrails are precisely aligned; (2) UniDepth estimates a depth map for each image, a normal vector is computed for every pixel from that depth, and a constraint on the normals of neighboring pixels produces smoother surface transitions (for example, the curvature of a car roof); (3) the shadows of moving objects are separated from the background to prevent “ghosting” artifacts. Together these techniques improve geometric accuracy by 46.3% and markedly reduce aliasing and ghosting in the synthesized images.
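Constraints (1) and (2) can be illustrated with NumPy: back-project a depth map through a pinhole camera, take cross products between neighboring 3D points to obtain per-pixel normals, penalize disagreement between adjacent normals, and compare rendered depth with projected LiDAR depth. This is a sketch under stated assumptions: the depth map stands in for a UniDepth prediction, the intrinsics `fx, fy, cx, cy` and all function names are hypothetical, and MTGS's actual loss formulations and weights may differ. Constraint (3), shadow separation, is not shown.

```python
import numpy as np


def backproject(depth, fx, fy, cx, cy):
    """Lift an (H, W) depth map to (H, W, 3) camera-space points (pinhole model)."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column / row indices
    x = (u - cx) / fx * depth
    y = (v - cy) / fy * depth
    return np.stack([x, y, depth], axis=-1)


def normals_from_depth(depth, fx, fy, cx, cy):
    """Per-pixel unit normals from cross products of neighbouring 3D points."""
    p = backproject(depth, fx, fy, cx, cy)
    du = p[:-1, 1:] - p[:-1, :-1]  # vector to the right-hand neighbour
    dv = p[1:, :-1] - p[:-1, :-1]  # vector to the lower neighbour
    n = np.cross(du, dv)
    return n / np.linalg.norm(n, axis=-1, keepdims=True)  # (H-1, W-1, 3)


def normal_smoothness_loss(normals):
    """Mean (1 - cosine) between each normal and its right and lower neighbours."""
    right = 1.0 - np.sum(normals[:, 1:] * normals[:, :-1], axis=-1)
    down = 1.0 - np.sum(normals[1:] * normals[:-1], axis=-1)
    return float(right.mean() + down.mean())


def lidar_depth_loss(rendered_depth, lidar_depth):
    """L1 depth error on pixels that received a projected LiDAR return (depth > 0)."""
    valid = lidar_depth > 0
    return float(np.abs(rendered_depth[valid] - lidar_depth[valid]).mean())
```

A flat wall facing the camera yields identical normals and a smoothness loss of zero, while a noisy depth map is penalized; the LiDAR term ignores pixels with no return instead of treating them as zero depth.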

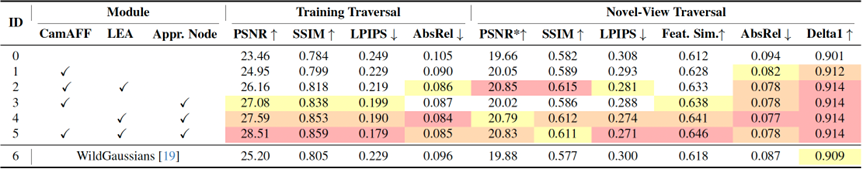

Measured Results: Digital Scenes That Approach Reality at the Pixel Level

Tests on nuPlan, a large-scale autonomous driving dataset, show that MTGS achieves state-of-the-art results on multiple metrics. For image quality, the perceptual similarity metric LPIPS improves by 23.5%. For geometric accuracy, depth error drops by 46.3%, with details such as guardrail spacing and lane width restored to centimeter-level accuracy. For dynamic scenes, MTGS supports real-time rendering at 60 frames per second, smoothly presenting scenarios such as changing traffic density and pedestrians suddenly crossing the road.
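The percentages above are most naturally read as relative reductions of error-style metrics (for both LPIPS and depth error, lower is better), and a 60 FPS target leaves roughly 16.7 ms to render each frame. The short Python sketch below shows that arithmetic under the assumption that the reported gains are relative to a baseline; the LPIPS values in it are invented for illustration and are not results reported for MTGS.

```python
def relative_reduction(baseline: float, ours: float) -> float:
    """Fractional decrease of an error-style metric (lower is better)."""
    return (baseline - ours) / baseline


def frame_budget_ms(fps: float) -> float:
    """Time available to render one frame at the given frame rate."""
    return 1000.0 / fps


# Hypothetical LPIPS values, NOT taken from the paper: 0.200 -> 0.153.
lpips_gain = relative_reduction(0.200, 0.153)  # about 0.235, i.e. a 23.5% reduction
budget = frame_budget_ms(60)                   # about 16.7 ms per frame
```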