Recently, I have been learning about system porting. During the process of learning and debugging, I encountered and solved many problems, but I always felt a vague sense of confusion about our development results. Upon reflection, the main reason is that we do not have a profound understanding of our development environment. Sometimes, a few simple commands can accomplish very complex functions, but have we ever thought about why this happens? If we don’t question it and just mechanically complete the tasks while observing the experimental results, we are not truly grasping the essence of system porting.

When performing each step, first ask yourself why you are doing this, and then ask what you are currently doing? Understanding these questions is crucial. In the future, regardless of which platform, chip, or development environment you switch to, you will not be confused and will quickly get the hang of it. My personal approach to learning embedded systems is to grasp the macro perspective (solving the why question) and study the micro perspective (solving the what question). Below, I will share my learning methods and experiences with the ARM Cortex-A8 development board as my target.

Embedded Linux system porting consists of four main parts:

1. Setting Up the Cross Development Environment2. Bootloader Selection and Porting3. Kernel Configuration, Compilation, and Porting4. Creating the Root File SystemPart One: Setting Up the Cross Development Environment

Let’s first introduce the content of the first part: setting up the cross development environment. First, two questions must be considered: What is a cross environment? Why is it necessary to set up a cross environment?

To answer the first question, in embedded development, cross development is a very important concept. The first step in development is to set up the environment. If this step is not completed, the subsequent steps cannot be discussed. The cross development environment mainly refers to: developing programs on the host development machine (usually my PC) that can run on the target machine (usually our development board). Embedded systems are quite special in that programs cannot be developed directly on the target machine (in a narrow sense), because a raw development board cannot run without any programs. To get it running, we must use a PC to perform tasks such as programming, allowing the development board to function. The PC here is what we refer to as the development host. Just think, without a development host, our target machine would essentially be impossible to develop for. This is a famous saying in the electronics industry: “In electronics, to put it simply, it’s all about playing with computers!”

Next, let’s answer the second question: why do we need a cross development environment? The main reasons are as follows:

Reason 1: Embedded systems have many hardware resource limitations, such as relatively low CPU clock speeds and small memory capacities. Imagine asking a few hundred MHz MCU to compile a Linux kernel; it would make us impatient. In contrast, a PC is much faster and has richer hardware resources, so using a PC for development will improve efficiency.

Reason 2: Embedded system MCU architectures and instruction sets differ, so it is necessary to install cross-compilation tools for compilation. This way, the compiled target program can run on the corresponding platforms, such as ARM, MIPS, and PowerPC.

The hardware components of a cross development environment mainly consist of the following::

1. Development Host2. Target Machine (Development Board)3. The link medium between the two, commonly using three methods: (1) Serial cable (2) USB cable (3) Network cable

In addition to the corresponding hardware medium, we also need the appropriate software “medium” support:

1. For serial connections, commonly used tools include serial debugging assistants, Putty, etc. There are many tools, and their functions are similar; knowing how to use one or two is sufficient;2. For USB cables, USB drivers are essential. Typically, chip manufacturers provide these. For example, for Samsung chips, USB downloads are mainly completed by DNW software;3. For network cables, network protocol support is necessary, with the main services being two:

First: TFTP Service:

This is mainly used for file downloads. For instance, during development and debugging, TFTP is used to download the bootloader, kernel, and file system directly into memory for execution without needing to pre-flash them to the Flash chip. On one hand, frequent downloads are often needed during testing; if each of these files had to be flashed to the Flash chip before running, it would be cumbersome, and moreover, the Flash has limited erase/write cycles. On the other hand, the purpose of testing is simply to load these target files into memory for immediate execution, and TFTP conveniently fulfills this function, making it unnecessary to flash these files to Flash.

Second: NFS Service:

This is primarily used for mounting network files, essentially for network file sharing. During development, typically in the final step of system porting, a file system is created. This can be placed in the appropriate location on our development host PC, and the development board can mount it via NFS, allowing us to test whether our created file system is correct. Throughout this process, there is no need to flash the file system to Flash, and mounting occurs automatically. After the bootloader starts, once the kernel runs, it will automatically mount based on the startup parameters we set, making this method very convenient for development testing and improving efficiency.

Additionally, there is a service called Samba that is also quite important, primarily used for file sharing. Note that this sharing is different from NFS file sharing; NFS sharing is for network file sharing, while Samba implements file sharing between a Windows host and a Linux virtual machine, facilitating file transfers.

The above tools are essential for embedded development, greatly contributing to improving development efficiency. Therefore, it is crucial to become proficient in using these tools, as this will significantly enhance your development efficiency. After testing is complete, the corresponding target files will be flashed to Flash, which is something done only when releasing the product. Thus, for developers, all work is ultimately about testing.

Through the previous work, we have prepared the hardware part of the cross development environment and some software; finally, we still need a cross compiler. Readers may wonder why a cross compiler is necessary. As mentioned earlier, a cross development environment will inevitably require cross-compilation tools. In simple terms, this means compiling programs on one platform that can run on another platform with a different architecture. Programs compiled on the development host PC platform (X86 CPU) must be able to run on the ARM-based CPU platform; the compiled programs cannot run on the X86 CPU platform and must be executed on the ARM CPU platform, even though both platforms use the Linux system. In contrast to cross-compilation, the usual compilation is called local compilation, where the program is compiled to execute locally. The tool used for this type of compilation is called a local compiler. Therefore, to generate programs that run on the target machine, a cross-compilation toolchain must be used.

Now, another question arises: isn’t it just a cross-compilation tool? Why is it called a cross toolchain? The reason is simple: a program cannot simply be compiled; it also needs to go through assembly, linking, and debugging processes. For a large project, engineering management is also required. Hence, the cross-compilation tool refers to a comprehensive development environment consisting of compilers, linkers, and interpreters. The cross-compilation toolchain mainly consists of binutils (which includes the assembler ‘as’ and linker ‘ld’), gcc (the C compiler for the GNU system), and glibc (which defines some basic C functions and other functions). Sometimes, to reduce the size of the libc library, other C libraries can replace glibc, such as uClibc, dietlibc, and newlib.

So, how do we obtain a cross toolchain? Is it just a matter of downloading a program from the internet and installing it? Before answering this question, consider this: our cross toolchain is, by definition, a toolchain that compiles programs capable of running on our target development platform, such as ARM, from the PC. This raises another question: our ARM processor models are numerous; is there a specific cross toolchain for each one? If so, think about it: with so many processor platforms, would there really be a dedicated cross toolchain for each one available online for everyone to download? It would take a long time to find the right compiler for your needs, and clearly, such an approach is not reasonable and wastes resources! Therefore, to obtain a cross toolchain, just like porting a Linux kernel, we only care about what we need and compile only what is necessary for our platform. We do not select or compile unnecessary components. In other words, the cross toolchain is a collection of tools supporting many platforms (similar to Linux source code), and we only need to find the tools related to our platform from this toolset. The question of how to find the tools related to our platform leads us to the issue of how to create a cross toolchain.

Typically, there are three methods for building a cross toolchain:

Method 1: Step-by-step compilation and installation of the libraries and source code required for the cross-compilation toolchain, ultimately generating the cross-compilation toolchain. This method is relatively difficult and suitable for readers who want to delve into building cross toolchains. If you only want to use a cross toolchain, it is recommended to use the following Method 2.

Method 2: Use the Crosstool-ng script tool to achieve a single compilation that generates the cross-compilation toolchain. This method is much simpler than Method 1 and has a very low chance of error, so it is recommended to use this method to build a cross-compilation toolchain in most cases.

Method 3: Directly download a pre-made cross-compilation toolchain from the internet. The advantage of this method is obvious: it is simple and convenient, but it also has significant drawbacks: it is too limited since it is a fixed toolchain built by someone else, lacking flexibility. The libraries and compiler versions used may not be suitable for the programs you want to compile, and you may encounter many inexplicable errors during use. Therefore, readers are advised to use this method cautiously.

Crosstool-ng is a script tool that can create cross-compilation toolchains suitable for different platforms. Before building, you need to install the following software: $ sudo apt-get install g++ libncurses5-dev bison flex texinfo automake libtool patch gcj cvs cvsd gawk. The crosstool script tool can be downloaded from http://ymorin.is-a-geek.org/projects/crosstool, then extracted. Next, the installation and configuration process is similar to kernel configuration. The main steps are as follows: 1. Set the source package path and the installation path for the cross-compiler. 2. Modify the architecture targeted by the cross-compiler.

3. Increase the number of parallel processes during compilation to improve efficiency and speed up compilation, as this process can be relatively slow. 4. Disable the Java compiler to reduce compilation time. 5. Compile. 6. Add environment variables. 7. Refresh environment variables. 8. Test the cross toolchain.

At this point, all the work for the first part of the embedded Linux system porting is complete. Next, we can proceed with the subsequent development. Part Two: Bootloader Selection and Porting

1. Boot Loader Concept

A bootloader is a small program that runs before the operating system kernel. Through this small program, we can initialize hardware devices, establish a mapping of memory space, thereby bringing the system’s software and hardware environment to a suitable state, preparing the correct environment for the final invocation of the operating system kernel. This is what we call the bootloader.

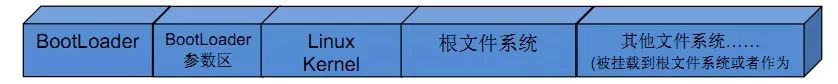

【Figure 1】 Distribution of Files Stored in Flash Memory

2. Why Port the Bootloader Before System Porting?

The task of the bootloader is to boot the operating system. To boot the operating system means to start the kernel, loading the kernel into memory RAM for execution. Let’s first ask two questions: Who moves the kernel into memory for execution? The second question is: The memory we are talking about is SDRAM. As we all know, this type of memory is different from SRAM; the biggest difference is that SRAM can run as long as the system is powered on, while SDRAM requires software initialization to operate. Therefore, before moving the kernel into memory for execution, the memory must be initialized. But who initializes the memory? In fact, both tasks are handled by the bootloader, preparing the software and hardware environment for the kernel to run. Without a bootloader, our system cannot start.

3. Classification of Bootloaders.

First, let’s correct a common misconception: many people say that the bootloader is U-Boot. This statement is incorrect; to be precise, U-Boot is a type of bootloader. In other words, bootloaders come in various types, broadly classified as shown in the figure below:

【Figure 2】 Bootloader Classification Diagram

As seen in the diagram, different bootloaders have different usage scopes. One notable bootloader is U-Boot, a universal bootloader that supports multiple processor architectures, including X86, ARM, and PowerPC. U-Boot, which stands for Universal Boot Loader, is an open-source project under the GPL license developed by the German DENX group for various embedded CPUs. U-Boot has made significant contributions to Linux development and is open-source.

U-Boot has the following features:

1) Open-source; 2) Supports multiple embedded operating system kernels, such as Linux, NetBSD, VxWorks, QNX, RTEMS, ARTOS, LynxOS; 3) Supports multiple processor families, such as PowerPC, ARM, x86, MIPS, XScale; 4) High reliability and stability; 5) Highly flexible feature settings suitable for U-Boot debugging, different operating system boot requirements, product releases, etc.; 6) Rich source code for device drivers, including serial ports, Ethernet, SDRAM, FLASH, LCD, NVRAM, EEPROM, RTC, keyboards, etc.; 7) Relatively rich development debugging documentation and strong network technical support; U-Boot can actually be understood as a small operating system.

4. U-Boot Directory Structure

* board: Contains files related to the target board, primarily including SDRAM and FLASH drivers;* common: General code independent of processor architecture, such as memory size detection and fault detection;* cpu: Contains files related to the processor. For example, the mpc8xx subdirectory includes serial port, network port, LCD drivers, and interrupt initialization files;* driver: General device drivers, such as CFI FLASH drivers (currently with good support for INTEL FLASH);* doc: Documentation for U-Boot;* examples: Example programs that can run under U-Boot, such as hello_world.c, timer.c;* include: U-Boot header files; especially the configuration header files related to the target board in the configs subdirectory, which are often modified during the porting process;* lib_xxx: Files related to the processor architecture, such as lib_ppc and lib_arm directories that contain files related to PowerPC and ARM architectures;* net: Directory for files related to networking functions, such as bootp, nfs, tftp;* post: Power-on self-test file directory, which is still to be further improved;* rtc: RTC driver files;* tools: Tools for creating U-Boot S-RECORD and BIN image files;5. U-Boot Working Modes

U-Boot has two working modes: Boot Loading Mode and Download Mode. The boot loading mode is the normal working mode of the bootloader. When embedded products are released, the bootloader must operate in this mode, loading the embedded operating system from FLASH into SDRAM for execution. The download mode allows the bootloader to download kernel images or root filesystem images from a PC into the target board’s SDRAM for execution. Users can utilize some command interfaces provided by the bootloader to complete their desired operations. This mode is mainly used for testing and development.

6. U-Boot Startup Process

Most bootloaders are divided into stage1 and stage2. U-Boot is no exception. The CPU architecture-dependent code (such as device initialization code) is typically placed in stage1, often implemented in assembly language, while stage2 is usually implemented in C language, allowing for more complex functions and better readability and portability.

1) Stage1 (start.s code structure): U-Boot’s stage1 code is usually found in the start.s file, written in assembly language. The main code parts include: (1) Defining the entry point. Since an executable image must have a single global entry point, it is typically placed at address 0x0 in ROM (Flash). Therefore, the compiler must be informed about this entry point, which can be done by modifying the linker script. (2) Setting up the exception vector. (3) Setting the CPU speed, clock frequency, and interrupt control registers. (4) Initializing the memory controller. (5) Copying the program from ROM to RAM. (6) Initializing the stack. (7) Jumping to execute in RAM, which can be accomplished using the ldrpc instruction.

2) Stage2 (C language code part): The start function in lib_arm/board.c is the main C language function of the entire startup code and also the main function of the entire U-Boot (armboot). This function mainly carries out the following operations: (1) Calls a series of initialization functions. (2) Initializes FLASH devices. (3) Initializes the system memory allocation functions. (4) If the target system has NAND devices, initializes the NAND devices. (5) If the target system has display devices, initializes them. (6) Initializes relevant network devices, filling in IP addresses and other parameters. (7) Enters the command loop (the working loop of the boot), accepting commands input from the serial port and performing corresponding tasks.

7. Analysis of the Boot Process of the S5PC100 Bootloader Based on Cortex-A8

The S5PC100 supports two boot methods: USB boot and NAND Flash boot:

1. S5PC100 USB Boot Process

[1] A8 reset, execute the program in iROM; [2] The program in iROM determines the boot method (USB) based on the S5PC100 configuration pins (SW1 switch 4, switching to the opposite side of 4); [3] The program in iROM initializes USB and then waits for the PC to download the program; [4] Using the DNW program, download the SDRAM initialization program from the PC into iRAM for execution, initializing SDRAM; [5] Once SDRAM is initialized, the program in iROM continues to take over A8, then waits for the PC to download the program (BootLoader); [6] The PC uses DNW to download BootLoader into SDRAM; [7] The BootLoader runs in SDRAM.

2. S5PC100 NAND Flash Boot Process

[1] A8 reset, execute the program in IROM; [2] The program in IROM determines the boot method (Nandflash) based on the S5PC100 configuration pins (SW1 switch 4, switching to the side near 4); [3] The program in IROM drives Nandflash; [4] The program in IROM copies the first 16k from Nandflash to iRAM; [5] The first 16k program (the first half of the BootLoader) initializes SDRAM, then copies the complete BootLoader into SDRAM and runs it; [6] The BootLoader copies the kernel into SDRAM and runs it; [7] Once the kernel is running, it mounts the rootfs and executes the system initialization script.

8. U-Boot Porting (Taking S5PC100 Based on Cortex-A8 as an Example)

1. Establishing Your Own Platform

(1) Download the source package version 2010.03, which is relatively stable. (2) After extracting, add your platform information, using smdkc100 as a reference to port your S5PC100 development board. (3) Modify the corresponding directory filenames and Makefile to specify the cross toolchain. (4) Compile. (5) Port for your platform, mainly modifying the SDRAM running address from 0x20000000. (6) “Switch” the corresponding macro definitions. (7) Add NAND and network card driver code. (8) Optimize the go command. (9) Recompile: make distclean (completely remove intermediate files and configuration files), make s5pc100_config (configure for your development board), make (compile to generate your u-boot.bin image file). (10) Set environment variables, which are startup parameters. Download the compiled u-boot into memory for execution. The process is as follows: 1. Configure the development board network: IP address configuration: $setenv ipaddr 192.168.0.6 (configure IP address in memory environment variable) $saveenv (save the environment variable value to NAND flash parameter area) Network test: On the development board, ping the virtual machine: $ ping 192.168.0.157 (IP address of the virtual machine) If the network test fails, check the following: 1. Ensure the network cable is properly connected. 2. Check if the development board and virtual machine’s IP addresses are configured in the same subnet. 3. The virtual machine network must be set to bridge mode (VM–Setting–>option). 4. When connecting to the development board, the virtual machine should be set to static IP address.

2. On the development board, configure the TFTP server (virtual machine) IP address: $setenv serverip 192.168.0.157 (IP address of the virtual machine) $saveenv. 3. Copy u-boot.bin to /tftpboot (directory on the virtual machine). 4. Use TFTP to download u-boot.bin into the development board memory: $ tftp 20008000 (any memory address) u-boot.bin (the name of the file to download). If the above command fails to download correctly: 1. Check if serverip is configured correctly. 2. Restart the TFTP service if it fails to start: #sudo service tftpd-hpa restart. 5. Write u-boot.bin to NAND flash at address 0: $nand erase 0 (starting address) 40000 (size) (erase the NAND flash 0 – 256k area) $nand write 20008000 (the memory address where u-boot.bin is cached) 0 (the position on NAND flash for u-boot) 40000 (size of the write). 6. Switch the development board’s boot method to NAND flash: 1. Power off the development board. 2. Move SW1 switch 4 to the side near 4. 3. Power on the development board; it will boot from NAND flash.

Part Three: Kernel Configuration, Compilation, and Porting

1. Copy the downloaded linux-2.6.35.tar.bz2 to the home directory and extract it.

2. Modify the Makefile in the top-level directory, mainly changing the platform architecture and cross-compiler. The code is as follows:

ARCH ?= $(SUBARCH) CROSS_COMPILE ?= CROSS_COMPILE ?= $(CONFIG_CROSS_COMPILE:”%”=%) Modify the above code to: ARCH ?= arm —-> Architecture is ARM CROSS_COMPILE ?= arm-cortex_a8-linux-gnueabi- —-> Cross-compiler is for ARM Cortex-A8 platform Note: These two variable values directly affect the compilation behavior of the top-level Makefile, determining which code to compile and what compiler to use.

3. Copy the standard configuration file to obtain configuration information related to our development board.

$ cp arch/arm/configs/s5pc100_defconfig .config

Here, we copy arch/arm/configs/s5pc100_defconfig to .config to select the code related to our development board. Since Linux supports a vast number of platforms, not just ARM processors, we only need to compile the code related to our platform. However, there are over ten thousand source code files in the Linux system, so how do we choose?

Actually, the concern we have was already addressed by those who write operating systems; they have solved this problem for us. We only need to perform a simple operation to select the code we want to compile. Specifically, we copy the corresponding platform’s _defconfig directly into the .config file in the top-level directory. This way, the .config file records the platform information we want to port, as all configuration information is stored in the .config file during kernel configuration. Note that when we first enter make menuconfig, the system will automatically select the relevant code and modules based on the platform information we choose. Therefore, we just need to enter and then exit, selecting to save the configuration information. The system will save all the configuration information related to the platform we are porting in the .config file in the top-level directory.

4. Configure the Kernel

$make menuconfig

Note: When entering for the first time, do not make any changes; just exit. When prompted to save the configuration, ensure you confirm “YES”. This way, our .config file will have the information of our development platform saved.

In this stage, we need to be concerned about one issue: what exactly does the system do when executing make menuconfig? Why does a graphical interface appear? Where does the content in the graphical interface come from?

The graphical interface is implemented by a special graphical library. Remember the first time we executed make menuconfig, the system did not show the graphical interface but instead reported an error indicating that we were missing ncurses-devel. At this point, we just need to install the required libncurses5-dev by running sudo apt-get install libncurses5-dev. With the support of this graphical library, we can display the graphical interface correctly.

Now that the graphical interface issue is resolved, we have another question: where does the content in the graphical interface come from? To answer this question, we need to mention the design philosophy of the Linux kernel. The Linux kernel is organized in a modular way. But why use a modular approach? What is the concept of a module? Let me clarify these questions.

The source tree directory of the Linux 2.6 kernel generally contains two files: Kconfig and Makefile. The Kconfig files distributed across various directories form a distributed kernel configuration database, with each Kconfig describing the kernel configuration menu related to the source files in that directory. Each directory stores information about relatively independent functions, and various module information is stored in each directory. For example, in the /dev/char/ directory, all character device drivers are stored, and this program code exists in the kernel as modules. This means when the system needs a driver, it will compile that driver into the kernel in module form. Compiling can be either static or dynamic; static compilation results in a larger kernel size compared to dynamic compilation. Each directory also has a Kconfig file. You may wonder what information is stored in this file? Each directory’s Kconfig file describes the kernel configuration menu related to the source files in that directory, using a specific syntax. The text in the graphical interface is read from this file. If the relevant directory files’ information is deleted from this file, then the module information will not appear in the graphical interface, and module configuration will not be possible.

When configuring the kernel with make menuconfig (or xconfig, etc.), the system automatically reads the configuration menu from Kconfig. After the user configures and saves it, it is stored in .config (generated in the top-level directory). During kernel compilation, the main Makefile calls this .config file, which is crucial as it saves all our configuration information and serves as the final basis for selecting source code and compiling it!

From the above content, we can see that Kconfig corresponds to the kernel configuration menu. If we want to add a new driver to the kernel source, we can increase the configuration menu for our driver by modifying Kconfig. This way, we can select our driver. If we want this driver to be compiled, we also need to modify the Makefile in the directory where the driver is located. Therefore, generally, the files that need to be modified when adding a new driver are Kconfig and the corresponding directory’s Makefile (note that it is not just these two).

The important content of system porting is to add and remove relevant modules from the kernel, so the primary files modified during kernel work are Kconfig and the corresponding directory’s Makefile.

5. Compile the Kernel

$make zImage

Through the above operations, we can generate a zImage file in the arch/arm/boot directory, which is the compressed kernel image.

The kernel compilation process is quite complex. Note that this compilation is static compilation. At this point, the zImage command in the top-level directory’s Makefile will be executed. During this process, the system will select the source code for compilation based on the .config file. The specific steps for compiling the kernel are quite intricate, and I will write a separate article to describe them in detail.

6. Download and Test the Kernel via TFTP Network Service

setenv bootcmd tftp 20008000(zImage memory address); go 20008000 setenv bootargs nfs nfsroot=192.168.1.199(virtual machine’s IP):/source/rootfs ip=192.168.1.200(development board’s IP) init=/linuxrc(the first user process to start) ttySAC0,115200(set the interrupt to serial port 1, baud rate: 115200)

Save the environment variables, reset the development board, and test whether it can start normally (Note: before this, the NFS mounted file system must be set up properly to see the results). The kernel testing and startup process is also quite complex, and I will detail this in future articles.

Part Four: Introduction to the Root File System

Based on the first figure of this article: Distribution of Files Stored in Flash Memory, it can be seen that creating and porting the file system is the last step in system porting. Here, I want to raise a few questions: 1.What is a file system? 2.How is a file system implemented? 3.What are the commonly used file systems, and why do we need these file systems?

Now, let’s answer these questions one by one:

We rarely hear about file systems in our daily lives, but they do exist; they are just not called file systems but rather databases. There are many files in the database; how do we quickly and accurately find the file we need? The database uses a classified index method to achieve fast searching, similar to how libraries manage books. The first floor may be philosophy, the second floor social sciences, the third floor electronics, the fourth floor computer science, and so on. We call this classified index database a file system.

For a computer, files are essentially data that can only be stored on physical media, such as hard drives. However, we cannot read files from physical media ourselves or write files to physical media; the reading and writing of files on physical media can only be accomplished through programs. To facilitate this, programs are divided into physical media driver programs, content storage programs, and file content storage programs. The physical media driver program is used to access data from physical media; the content storage program is used to package file content and file attribute information; and the file content storage program is used to form file content from user input or retrieve file content for display.

We can decompose a file system (a tree structure) into several file systems (trees) stored on different storage media. For example, one can be stored on a CD, and another on a hard drive. When in use, we can mount the root directory of the file system on the CD under a directory on the hard drive, making accessing that directory equivalent to accessing the root directory on the CD. Once we find the root directory, we can also access the entire file system on the CD.

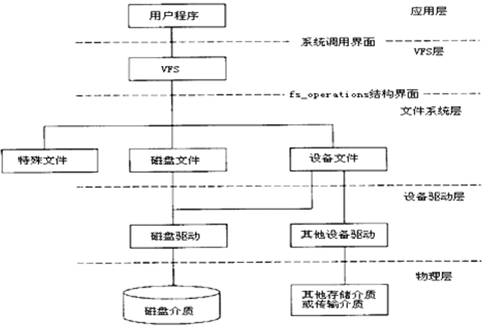

“In Linux, everything is a file” is a phrase often heard when learning the Linux system. Although somewhat exaggerated, it reveals the importance of the file system to the Linux system. In fact, file systems are crucial for all operating systems, as they manage most hardware devices and software data in the form of files.The management framework for devices and data in the Linux system is shown below:

【Figure 3】 File System Implementation

[Description]

A. VFS (Virtual File System) manages special files (virtual files), disk files, and device files.

B. The fs_operations structure consists of a series of file operation interface functions completed by the file system layer, providing file operations for VFS;

C. In the file system layer, disk files must implement various file systems (such as ext2), and device files must implement various abstract device drivers.

D. In the device driver layer, disk drivers must implement various disk drivers, while other device drivers must implement specific device drivers.

E. The physical layer refers to the devices themselves.

Why are there different file types?

Because there are many types of storage media, it is impossible to store file systems in a unified format on various storage media. Instead, multiple different storage formats are needed to adapt to the characteristics of different storage media, optimizing access efficiency and space utilization. Therefore, it is necessary to establish standards for each storage format, which are referred to as file system types. Common file system types include: 1. DOS FAT16 2. Windows FAT16, FAT32, NTFS 3. Linux Minix, ext, ext2, ext3, ISO9660, jffs2, yaffs, yaffs2, cramfs, romfs, ramdisk, rootfs, proc, sysfs, usbfs, devpts, tmpfs & ramfs, NFS.

As can be seen, Linux supports the most file system types. They can be categorized based on different media, as follows:? Disk: FAT16, FAT32, NTFS, ext, ext2, ext3, Minix? CD: ISO9660? Flash: jffs2, yaffs, yaffs2, cramfs, romfs? Memory: Ramdisk, tmpfs & ramfs? Virtual: rootfs, proc, sysfs, usbfs, devpts, NFS

Theoretically, all commonly used storage media can store Linux-supported file systems. However, since we are only studying embedded systems, and due to size and mobility constraints, embedded systems cannot use disks and CDs, so they can only use flash-type storage devices, memory, and virtual storage devices as storage media for file systems.

Flash chip drivers are provided by the system, so their access characteristics are entirely determined by the characteristics of the flash itself. It is best to have a file system more suitable for flash, such as Jffs, Yaffs, Cramfs, and Romfs. These file systems are commonly used in embedded Linux systems, and can be selected based on their characteristics, which are as follows: Common PointsBased on MTD DriversJffs A. Implemented for NOR Flash; B. A log-structured file system based on hash tables; C. Employs wear leveling technology, attempting to distribute write locations evenly; D. Readable and writable, supports data compression; E. Crash/power loss safety protection; F. When the file system is full or nearly full, its speed greatly slows down due to garbage collection.

Yaffs A. Implemented for NAND Flash; B. A log-structured file system; C. Employs wear leveling technology, attempting to distribute write locations evenly; D. Readable and writable, does not support data compression; E. Short mount time, occupies less memory; F. Comes with NAND flash drivers, can operate without VFS and MTD.

Cramfs A. Single-page compression, supports random access, achieving compression ratios up to 2:1; B. Fast speed, high efficiency; C. Read-only, helps protect the file system from damage, improving system reliability, but cannot expand its contents.

Romfs A. A simple, compact, read-only file system; B. Stores data sequentially, thus supporting applications to run in XIP (Execute In Place) mode, saving RAM space during system operation.

Specific File System Types:Ramdisk File System

In the Linux system, memory is often used to store file systems, which is called Ramdisk. There are two types of Ramdisk: one treats memory entirely as a physical storage medium, simulating a disk using memory and applying a disk file system type; the other stores only the logical structure of the file system in memory, using tmpfs & ramfs file system types.

1. Overview: This simulates disk partitions using physical memory. Once this partition is mounted, it can be read and written just like disk files, but the speed is much faster than that of disk files. Therefore, it is generally applied in the following scenarios: 1) Files requiring fast read/write speeds should be placed in this file system; 2) When the disk partition is flash, frequently read/write files should be placed in this file system and periodically written back to flash; 3) Temporary files in the system, such as those under /tmp and /var, should be placed in this file system; 4) /dev device files (as device files change with the loading and unloading of drivers and devices) should be placed in this file system.

2. Characteristics: 1) Since data is stored in physical memory, all data in this file system will be lost upon system reboot; 2) Ramfs will automatically grow in size until it consumes all physical memory if no maximum size is specified, potentially leading to system crashes. It is advisable to limit its maximum size when mounting; 3) If a size limit is specified, tmpfs will grow to that limit, at which point the system will restrict its size. This file system occupies physical memory pages that can be swapped out to the swap partition, while Ramfs cannot.

Different file systems have different creation methods; some are quite complex, while others are simpler. Due to space constraints, I will not introduce them here but will cover the creation of file systems in a separate article later.