Author: Jeremy Andrews | Translator: Tu Ling | Editor: Cai Fangfang

Linux was born in 1991, and it has been 30 years since then. Although it started as a personal project of Linus, rather than a grand dream to develop a new operating system, Linux is now ubiquitous.

Thirty years ago, when Linus Torvalds first released the Linux kernel, he was a 21-year-old student at the University of Helsinki. He announced, “I am developing a (free) operating system (just as a hobby, it won’t be big and professional…)”. Thirty years later, all of the world’s top 500 supercomputers and over 70% of smartphones run Linux. Clearly, Linux is not only large but also very professional.

For 30 years, Linus Torvalds has been leading the development of the Linux kernel, inspiring countless developers and open-source projects. In 2005, Linus developed Git to manage the kernel development process. Git has now become the most popular version control system, trusted by countless open-source and private projects.

On the occasion of the 30th anniversary of Linux, Linus Torvalds responded via email to interview questions from Jeremy Andrews, founding partner/CEO of Tag 1 Consulting, reflecting on and summarizing the insights he has gained over the years while leading a large open-source project. This article focuses on Linux kernel development and Git. InfoQ has translated the interview content for readers.

Linux Kernel Development

Jeremy Andrews: Linux is everywhere; it is the source of inspiration for the entire open-source world. Of course, things were not always this way. In 1991, you announced the Linux kernel in the comp.os.minix Usenet newsgroup. Ten years later, you wrote a book called “Just for Fun: The Story of an Accidental Revolutionary”, which looked back on that history in depth. This August, Linux will celebrate its 30th anniversary! At what point during this process did you start to realize that Linux was more than just a “hobby”?

Linus Torvalds: It may sound a bit ridiculous, but I actually realized it quite early on. By the end of 1991 (and early 1992), Linux was already much larger than I had anticipated.

At that time, there may have only been a few hundred users (not exactly “users”, as people were still constantly patching it), and I never imagined that Linux would grow to what it is today. To me, the biggest turning point was when I realized that others were using it and were interested in it; it began to have a life of its own. People started sending patches, and the system could do much more than I initially envisioned.

In April 1992, X11 was ported to Linux (I can’t remember the exact date, as it was a long time ago), which was a significant advancement, as Linux suddenly had a GUI and a whole new set of features.

I didn’t have any grand plans at the beginning. It was just a personal project, not a grand dream to develop a new operating system. I just wanted to understand the ins and outs of my new PC hardware.

So, when I released the first version, it was more about “seeing what I had done”. Of course, I hoped others would find it interesting, but it wasn’t a truly usable operating system. It was more of a proof of concept and just a personal project I had worked on for a few months.

The transition from a “personal project” to others starting to use it, giving me feedback (and bug reports), and sending patches was a huge shift for me.

For example, the initial copyright license was “you can distribute it in source code form, but you cannot make money from it”.

At that time, commercial versions of Unix were too expensive for me (as a poor student, I had already spent all my money on a new PC), so I wanted the source code of this operating system to be publicly available (so people could provide patches), and I wanted to open it up to people like me who couldn’t afford expensive computers and operating systems.

By the end of 1991 (or early 1992), I changed the license to GPLv2 because someone wanted to distribute it on floppy disks to local Unix user groups but wanted to recoup the cost of the disks and compensate for the time spent copying the disks. I thought that was reasonable because whether it was “free” or not was not the most important thing; the most important thing was to “make the source code public”.

The end result was that people not only published it in Unix user groups, but within a few months, floppy disk distributions like SLS and Slackware appeared.

Compared to those fundamental changes at the beginning, everything that followed was “incremental”. Of course, some incremental changes were also significant (IBM’s involvement, the porting of Oracle databases, Red Hat’s IPO, the application of Android on phones, etc.), but to me, they still did not seem as revolutionary as the initial realization that “people I don’t know are using Linux”.

Jeremy Andrews: Have you ever regretted changing the license? Or do you feel regret that others or companies have made a lot of money using the system you developed?

Linus Torvalds: I have never regretted it.

First of all, I am doing quite well. I am not particularly wealthy, but I am a well-paid software engineer who can do what I love at my own pace.

The key is that I believe 100% that this license is a crucial reason for the success of Linux (and Git). I think that when everyone believes they have equal rights and no one has privileges in this regard, they become happier.

Many projects adopt a “dual licensing” approach, where the original author retains a commercial license (“you can use it as long as you pay the license fee”), while the project can also be open-sourced under the GPL license.

I think it is very difficult to build a good community in such cases because the open-source side knows they are “second-class citizens”. Additionally, a lot of licensing paperwork is required to ensure that the privileged side continues to have special rights, which adds extra friction to the project.

On the other hand, I have seen many open-source projects under BSD (or similar licenses like MIT) that, once they become strong enough to have commercial value, inevitably split, with the companies involved taking their parts proprietary.

I believe GPLv2 strikes a perfect balance between “everyone is under the same rules” and “requiring people to give back to the community”. Everyone knows that all participants are bound by the same rules, so it is very fair.

Of course, what you get out depends on what you put in. If you just want to participate lightly in the project or just want to be a user, that is fine too. But if that is all you do, you also have no control over the project. If you really just need a basic operating system, and Linux already has all the features you want, that is perfectly fine. But if you have special needs and want to influence the project, the only way to do that is to get involved.

This keeps everyone honest, including me. Anyone can fork the project, do it their own way, and say, “Goodbye, Linus, I am going to maintain my own version of Linux”. I am “special” only because people believe I can do the job well.

“Anyone can maintain their own version of Linux” has led some to question GPLv2, but I see it as an advantage, not a disadvantage. Counterintuitively, I believe this is actually the reason Linux has avoided fragmentation: everyone can create their own fork of the project. In fact, this is also one of the core design principles of Git: every clone of the repository is its own fork, and people (and companies) can fork their own versions and complete their development work.

So forking is not a problem as long as you can merge the good parts back. This is where GPLv2 comes into play. Being able to fork the code and modify it in your own way is important, but it is equally important that when a fork proves successful, there is the right to merge it back.

Another issue is that, in addition to having tools that support this workflow, there also needs to be a mindset that supports it. One major obstacle to merging forks is not just licensing but also “animosity”. If a fork arises from opposition, merging the two sides becomes very difficult, not for licensing or technical reasons, but because the two sides are too hostile to each other. I believe Linux has avoided this situation mainly because we have always viewed forking as a natural thing. Moreover, when some development work proves successful, trying to merge it back is also very natural.

Although this answer is a bit off-topic, I think it is important—I do not regret changing the license because I truly believe GPLv2 is a crucial reason for Linux’s success.

Money is not a good motivator; it does not bring people together. I believe that participating in a common project and feeling that you can be a partner in that project is what motivates people.

Jeremy Andrews: Nowadays, people usually release source code based on GPLv2 because of Linux. How did you find this license? How much time and effort did you invest in researching other licenses?

Linus Torvalds: At that time, the debate over BSD and GPL was very intense. I saw some discussions about licenses while reading various newsgroups (like comp.arch, comp.os.minix, etc.).

Two main reasons might be gcc and Lars Wirzenius. gcc played a significant role in the development of Linux because I definitely needed a C language compiler. Lars Wirzenius was another computer science student at my university who spoke Swedish (a minority language in Finland).

Lasu (Lars’s nickname) liked to discuss licensing issues more than I did.

In my view, choosing GPLv2 was not a major political issue; it was mainly because I was too hasty in choosing the license initially and later needed to make modifications. Moreover, I am grateful for gcc, and GPLv2 aligns more with my expectation of “you must merge the source code back”.

Therefore, rather than creating a new license from scratch, it was better to choose a license that people already knew and that had some lawyers involved.

Jeremy Andrews: What does a typical day look like for you? How much time do you spend writing code, reviewing code, and on email? How do you balance personal life and Linux kernel development work?

Linus Torvalds: I write very little code now, and I haven’t written any in a long time. When I do write code, it is usually because there are specific issues that people are disputing. I modify the code and release it as a patch, as an explanation of the solution.

In other words, most of the code I write is more as an example of a solution, and patches are very specific examples. People can easily fall into the trap of theoretical discussions, and I find that the best way to describe a solution is to write code snippets; it doesn’t have to be a complete program, just enough to make the solution more concrete.

Most of my work time is spent on email. It is mainly communication, not writing code. In fact, I think this communication with journalists and tech bloggers is part of my job—it may be a bit lower priority than technical discussions, but I also spend quite a bit of time on these matters.

Of course, I also spend some time on code reviews. But to be honest, by the time I receive a pull request, the code in question has usually already been reviewed by others. So while I still look at the patches, I tend to focus more on the explanations and on the history of how the patch reached me. And for people I have worked with for a long time, I don’t even do that: they are the maintainers of their subsystems, and I don’t need to micromanage their work.

So, a lot of the time, my main job is just to “be there”, executing management and release tasks. In other words, my work is usually more about the maintenance process than the underlying code.

Jeremy Andrews: What is your work environment like? For example, do you prefer a dark, undisturbed room, or a room with a view? Do you like to work in a quiet environment, or do you prefer to work while listening to music? What hardware do you typically use? Do you review code in a terminal using vi, or do you use some quirky IDE? Do you have a preferred Linux distribution as your development environment?

Linus Torvalds: My room is not “dark”, but I do close the blinds on the window next to my desk because I don’t want strong sunlight. So, my room has no scenic view, just a (messy) desk with two 4k monitors and a powerful computer tower underneath. There are also a few laptops for testing and on-the-go use.

I like to work quietly. I really hate the ticking sound of mechanical hard drives, so I threw them in the trash and now only use SSDs. This has been the case for over 10 years. Noisy CPU fans are also unacceptable.

Code reviews are done in a traditional terminal, but I do not use vi. I use a thing called “micro-emacs”, which is a horrible thing. It has nothing to do with GNU emacs, but some key bindings are similar. I got used to it when I was at the University of Helsinki, and I haven’t changed that habit. A few years ago, I added (very limited) utf-8 support to it, but it is indeed quite outdated, and all signs indicate it was developed in the 80s; the version I use is a branch that hasn’t been updated since the mid-90s.

The University of Helsinki chose this tool because it could run on DOS, VAX/VMS, and Unix, which is why I also use it. By now, my fingers have developed muscle memory for it. I really need to switch to a tool that is maintained and supports utf-8, but the enhanced functionality I added works well enough that I haven’t forced my fingers to accept a new tool.

My work desktop is quite simple: a few text terminals, a browser with email open (and a few other tabs, mainly news and tech sites). I like a large desktop space because I am used to using large terminal windows (100×40 is my default initial size) and opening several side by side. I use two 4k monitors.

I have Fedora installed on all my machines, not because I prefer it, but because I am used to it. I don’t really care which distribution I use—choosing a distribution is just a way to install Linux and development tools on the machine.

Jeremy Andrews: The Linux kernel mailing list (https://lore.kernel.org/lkml/) is where people publicly communicate about kernel development, and the traffic is very high. How do you handle so many emails? Have you tried other collaboration and communication solutions outside of the mailing list? Or is this simple mailing list sufficient for your work?

Linus Torvalds: I haven’t directly read emails in the kernel mailing list for years. There are just too many emails.

The kernel mailing list exists to be cc’d on all discussions. When newcomers join a discussion, they can refer to the list to understand the relevant history and context.

In the past, I would subscribe to the mailing list and have all emails not cc’d to me automatically archived, and I would not look at them by default. When some issues required my intervention, I could find all relevant discussions because they were all in my email, just appearing in my inbox when needed.

Now, I use the features provided by lore.kernel.org because it is very convenient, and we have also developed some tools based on it. This way, there is no need to automatically archive emails; we have changed the way we discuss, but the basic workflow is the same.

Clearly, I still receive a lot of email, but in many ways things have improved over the years rather than getting worse. A large part of this is due to the improvements in Git and the kernel release process: we used to have many issues with the code flow and tools. The early 2000s were the worst, when we were still dealing with huge patch bombs, and our development process had serious scalability issues.

The mailing list model works very well, but that doesn’t mean people don’t use other communication methods besides email: some people prefer various real-time chat tools (like traditional IRC). While I am not a big fan of that, it is clear that some people like to use them for brainstorming. But this “mailing list archive” model works very well and can seamlessly combine “sending patches via email between developers” and “sending issue reports via email”.

So email remains the primary communication channel, and because patches can be included in emails, we can discuss technical issues more easily. Moreover, email can cross time zones, which is very important when participants are spread across different regions.

Jeremy Andrews: I have closely followed kernel development for about 10 years and wrote kernel-related blog posts on KernelTrap until I stopped updating the blog around the release of kernel 3.0. The release of kernel 3.0 was 8 years after the release of 2.6.x. Please summarize some interesting things that have happened in kernel development since version 3.0.

Linus Torvalds: That was a long time ago, and I don’t know where to start summarizing. It has been 10 years since version 3.0, and a lot of technical changes have occurred during this time. ARM has matured, ARM64 has become one of our main architectures, and a large number of new drivers and core features have emerged.

If there is anything interesting that has happened in the past 10 years, it is our efforts to maintain the stability of the development model and the things that have not changed.

Over the past few decades, we have experienced various version numbering schemes and different development models, and version 3.0 ultimately established the model that has been used since. It made the statement “time-based releases, version numbers are just numbers, unrelated to features” a reality.

In version 2.6.x, we already had a time-based release model, so it was not something new, but version 3.0 was indeed a crucial step in solidifying that model.

We previously used a random numbering scheme (mainly before version 1.0), then used “odd numbers for development kernels and even numbers for stable production-ready kernels”, and then in version 2.6.x, we began to enter a time-based release model. But people still had questions about “when to increment the major version number”. The 3.0 release declared that the major version number is meaningless, and since then we have simply tried to keep the numbers small and easy to handle.

Therefore, in the past 10 years, we have made huge changes (with Git, it is easy to get some numerical statistics: over 17,000 people have contributed about 750,000 commits), but the development model has remained quite stable.

However, it has not always been this way; the first 20 years of kernel development experienced quite painful changes in the development model, and it is only in the past 10 years that the predictability of releases has significantly improved.
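As an editorial aside, the numbering eras described in this answer can be summarized in a few lines of Python. This is only an illustrative sketch: the function name and the returned labels are made up for this example, and the boundaries (odd/even from 1.0 up to 2.5, time-based from 2.6 onward) are a simplified reading of the answer above.

```python
def classify_kernel_version(version: str) -> str:
    """Return the numbering era a kernel version string belongs to.

    Hypothetical helper for illustration; the labels are not official terms.
    """
    # Drop any "-rcN" suffix, then read the major and minor numbers.
    base = version.split("-")[0]
    parts = [int(p) for p in base.split(".")]
    major, minor = parts[0], parts[1]

    if major == 0:
        # Before 1.0 the numbering followed no fixed scheme.
        return "pre-1.0 (ad hoc numbering)"
    if (major, minor) >= (2, 6):
        # From 2.6.x onward releases are time-based; since 3.0 the
        # numbers carry no feature meaning at all.
        return "time-based release"
    # Between 1.0 and 2.5: odd minor = development, even minor = stable.
    return "development" if minor % 2 else "stable"


for v in ["0.99", "2.4.18", "2.5.0", "2.6.32", "3.0", "5.12-rc5"]:
    print(v, "->", classify_kernel_version(v))
```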

Jeremy Andrews: Currently, the latest version is 5.12-rc5. What is the standard release process now? For example, what are the differences between -rc1 and -rc2? Under what circumstances do you decide to officially release a given version? What happens if a lot of regressions occur after the official release? How often does this happen? How has this process evolved over the years?

Linus Torvalds: I mentioned earlier that the process itself is quite standard and has been so for the past ten years. Before that, it underwent several evolutions, but since version 3.0, it has been running like clockwork.

Our release cadence is as follows: first a two-week merge window, then about 6 to 8 weeks of weekly release candidates, and then the final release. This has been the case for about 15 years.

The rules have always been the same, although they are not always strictly enforced: the merge window is for new code that is considered “tested and ready”, and the following two months or so are for fixes, to make sure all issues are resolved. Sometimes that supposedly “ready” code ends up being disabled or reverted entirely before the release.

This process repeats, so we release approximately every 10 weeks.
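To make the arithmetic concrete, here is a small Python sketch of one cycle. It assumes that -rc1 is tagged when the two-week merge window closes, that release candidates follow weekly, and that the final release comes one week after the last candidate; the function name and the start date are arbitrary choices for illustration, not real release data.

```python
from datetime import date, timedelta

MERGE_WINDOW_WEEKS = 2  # from the interview: a two-week merge window


def release_schedule(previous_release: date, rc_count: int = 7):
    """Return (label, date) pairs for one development cycle.

    Assumption for this sketch: rc1 closes the merge window and each
    later rc (and the final release) follows one week after the last.
    """
    rc1 = previous_release + timedelta(weeks=MERGE_WINDOW_WEEKS)
    schedule = [
        (f"rc{n}", rc1 + timedelta(weeks=n - 1))
        for n in range(1, rc_count + 1)
    ]
    schedule.append(("final", rc1 + timedelta(weeks=rc_count)))
    return schedule


start = date(2021, 1, 3)  # arbitrary example date
for label, day in release_schedule(start, rc_count=8):
    print(f"{label:>5}  {day.isoformat()}")
```

Under these assumptions the cycle length is simply 2 + rc_count weeks, so 6 to 8 release candidates give 8 to 10 weeks, in line with the figures quoted above.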

Whether a release is ready depends on my confidence in the release candidate, which in turn is based on the issue reports that come in. If certain areas still have problems late in the rc phase, I will push hard to revert them and suggest moving them to a later version. But overall, this situation is rare.

Is everything completely problem-free? No. Once the kernel is released, new users will discover some issues that were not found in the rc version. This is almost inevitable. This is also why we need a “stable kernel” tree. After release, we can continue to fix the code. Some stable kernels are maintained longer than others and are referred to as LTS (“Long Term Support”) versions.

None of this has changed much in the past ten years, although there has been more automation in the process. Generally speaking, automating kernel testing is quite difficult, because much of the kernel is drivers that depend heavily on the availability of hardware. However, we have several test farms that perform boot and performance testing, as well as various random load tests. These have improved significantly over the years.

Jeremy Andrews: Last November, someone mentioned that you were very interested in the ARM64 chips used in some new Apple computers. Will Linux support them? I see some code has been merged into for-next. Is the upcoming 5.13 kernel likely to boot on Apple MacBooks? Will you be an early adopter? What is the significance of ARM64?

Linus Torvalds: I occasionally keep up with this, but it is too early to say. As you mentioned, early support may be merged into 5.13, but that is just a start and does not indicate what Linux and Apple computers will look like in the future.

The main issue is not the arm64 architecture but all the associated hardware drivers (especially SSDs and GPUs). So far, some underlying things have been supported, but there are no useful results beyond enabling the hardware. It will take some time to reach a level where it can be used by people.

It is not only Apple’s hardware that has improved: the arm64 architecture as a whole has matured significantly, and its cores have become more competitive in the server space. Not long ago, arm64 was quite weak in the server space, but Amazon’s Graviton2 and Ampere’s Altra processors, both based on improved ARM Neoverse IP, are much better than products from a few years ago.

I have been waiting for a usable ARM machine for over ten years, and it seems I will have to continue waiting, but the situation is clearly better than before.

In fact, I wanted an ARM machine a long time ago. When I was a teenager, what I really wanted was an Acorn Archimedes, but availability and price led me to eventually choose a Sinclair QL (with an M68008 processor), and then a few years later switch to an i386.

So, this idea has been brewing for decades. But they are still not widely used, and for me, they are not competitive in terms of price and performance. I hope this idea can become a reality in the near future.

Jeremy Andrews: Is there anything in the kernel that needs to be completely rewritten to achieve optimal performance? Or, given that the kernel has been around for 30 years, and knowledge, programming languages, and hardware have changed significantly in that time: if you were to rewrite it from scratch now, what changes would you make?

Linus Torvalds: If necessary, we would do so. We are really good at rewriting; those things that would have caused disasters were rewritten long ago.

We have many “compatibility” layers, but they generally do not cause too many problems. If we were to rewrite from scratch, whether to remove these compatibility layers is still unclear—these layers exist to maintain backward compatibility with old binaries (usually to maintain backward compatibility with old architectures, such as running 32-bit x86 applications on x86-64). Because I believe backward compatibility is very important, I would want to keep these compatibility layers even if we rewrote.

So it is clear that there are many things that are not optimal; after all, everything has room for improvement. But in response to your question, I have to say that there is nothing I truly despise. There are some legacy drivers that perhaps no one cares about and no one cleans up, which do some ugly things, but that is mainly because “no one cares”. These were not problems in the past, and once they become problems, we actively remove the things that no one cares about. Over the years, we have removed many drivers, and when maintenance no longer makes sense, we abandon support for entire architectures.

The main reason for a “rewrite” would be a use case for which the whole structure no longer makes sense. The most likely scenario is a small embedded system that does not need everything Linux provides; its hardware is small and calls for a simpler, less functional system.

Linux has come a long way. Now, even small hardware (like phones) is much more powerful than the machines used to develop Linux.

Jeremy Andrews: What if we rewrote part of the system in Rust? Is there room for improvement in this regard? Do you think it is possible to replace C with another language (like Rust) in kernel development?

Linus Torvalds: I do not think we will replace C with Rust for kernel development, but we may use it to develop some drivers, perhaps an entire driver subsystem, or file systems. So it is not about “replacing C” but rather “extending our C code in meaningful ways”.

Of course, drivers make up almost half of the kernel code, so there is a lot of room for rewriting, but I do not think everyone will be eager to see a complete rewrite of existing drivers in Rust. Perhaps “some people will develop new drivers in Rust or appropriately rewrite parts of old drivers”.

Right now, it is more about “people trying and experimenting” with Rust, and that is all. The advantages of Rust certainly come with complexities, so I will take a wait-and-see approach to see if these advantages really work out.

Jeremy Andrews: Is there a part of the kernel that you personally feel most proud of?

Linus Torvalds: I would say the VFS (Virtual File System) layer, especially pathname lookup, and the VM (virtual memory) code. The former is because Linux does some basic tasks (finding file names in an operating system is indeed a core operation) much better than other systems, and the latter is mainly because we support over 20 architectures while still using a fundamentally unified VM layer, which I think is remarkable.

But at the same time, it largely depends on “which part of the kernel you are most concerned about”. The kernel is large, and different developers (and different users) will focus on different aspects. Some people think scheduling is the most exciting part of the kernel, while others focus on the details of device drivers (we have many such drivers). Personally, I have been more involved in VM and VFS, so naturally, I would mention them.

Jeremy Andrews: I looked at the description of pathname lookup, and it is more complex than I expected. What makes Linux do this better than other operating systems? What do you mean by “better”?

Linus Torvalds: Pathname lookup is such a common and fundamental task that most non-kernel developers do not think of it as a problem: they just know how to open files and take it for granted.

But doing it well is actually quite complex. Specifically, because almost everywhere uses pathname lookup, the performance requirements are very high, and everyone wants it to scale well in SMP environments, while locking is also complex. You do not want to incur IO, so caching becomes very important. Pathname lookup is so important that you cannot leave it to the underlying file systems because we have over 20 different file systems, and letting them each have their own caching and locking mechanisms would be a complete disaster.

So one of the main tasks of the VFS layer is to handle all the locking and caching for pathname components, along with all the serialization and mount point traversal. Most of this is done with lock-free algorithms (RCU), but there are also some very clever locks: the Linux kernel’s “lockref” is a very special “reference-counted spinlock” that is ostensibly designed for dcache caching but is essentially a lock-aware reference count that can eliminate locking in some common cases.

The end result is that the underlying file systems still need to look up uncached content, but they do not need to worry about caching and consistency rules and the atomicity rules related to pathname lookup. VFS handles all these issues for them.

And its performance is better than any other operating system, basically running perfectly on machines with thousands of CPUs.

So it is not just “better”; it is “better” in capital letters. Nothing can compare to it. Linux dcache is unique.
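As an editorial aside, the division of labor described in this answer (the VFS layer caches pathname components, and the file system is consulted only for entries that are not yet cached) can be sketched as a toy model in Python. All class and method names here are hypothetical, and the sketch deliberately ignores everything that makes the real dcache hard: locking, RCU, lockref, mount points, symlinks, and cache eviction.

```python
class ToyFilesystem:
    """Stand-in for an underlying file system; counts how often it is asked."""

    def __init__(self, tree):
        self.tree = tree      # nested dicts: directory name -> contents
        self.lookups = 0      # number of uncached lookups served

    def lookup(self, parent, name):
        self.lookups += 1
        return parent.get(name)  # None if the entry does not exist


class ToyVFS:
    """Resolves paths one component at a time through a shared cache."""

    def __init__(self, fs):
        self.fs = fs
        self.dcache = {}  # (id of parent directory, name) -> child node

    def resolve(self, path):
        node = self.fs.tree
        for name in path.strip("/").split("/"):
            if not name:
                continue  # handles "/" and repeated slashes
            if not isinstance(node, dict):
                raise NotADirectoryError(path)
            key = (id(node), name)
            if key not in self.dcache:
                # Cache miss: only now is the file system involved.
                child = self.fs.lookup(node, name)
                if child is None:
                    raise FileNotFoundError(path)
                self.dcache[key] = child
            node = self.dcache[key]
        return node


fs = ToyFilesystem({"usr": {"bin": {"git": "file:git", "vi": "file:vi"}}})
vfs = ToyVFS(fs)
vfs.resolve("/usr/bin/git")   # three uncached components
vfs.resolve("/usr/bin/vi")    # "usr" and "bin" are served from the cache
print(fs.lookups)
```

In this toy model the file system never sees a cached component again, which is the point made above: caching and consistency live in one shared layer instead of being reimplemented by every file system.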

Jeremy Andrews: The past year has been a tough year for the world. What impact has the COVID-19 pandemic had on the kernel development process?

Linus Torvalds: In fact, thanks to our way of working, its impact has been very small. Email is really a great tool, and we do not rely on face-to-face meetings.

Yes, it did affect last year’s annual kernel summit (this year’s summit is still undecided), and most meetings were canceled or moved online. Most people who used to work in the office have started working from home (but many core kernel maintainers had already been doing this before). So, many things have changed around, but kernel development has remained the same as before.

Clearly, the COVID-19 pandemic has affected all our lives in other ways, but overall, as kernel developers who communicate almost entirely through email, we are probably the least affected.

Version Control System Git

Jeremy Andrews: Linux is just one of your many contributions to open source. In 2005, you also created Git, a very popular distributed source code control system. You quickly migrated the Linux kernel source tree from the proprietary BitKeeper to the open-source Git system and handed over maintenance to Junio Hamano that same year. There are many interesting stories here; what prompted you to hand over the leadership of the project so quickly, and how did you find and choose Junio?

Linus Torvalds: The answer can be divided into two parts.

First of all, I did not want to create a new source code control system. I developed Linux because the low-level interface between hardware and software was very appealing to me; it was basically out of personal love and interest. In contrast, I developed Git because there was a real need: not because I thought source code control was interesting, but because I despised most of the source code control systems on the market. And the one I found most suitable, BitKeeper, which had worked well for Linux development, had become untenable.

I have been developing Linux for over 30 years (with the anniversary of the first version coming up in a few months, but I started researching the “predecessor” of Linux 30 years ago), and I have always maintained it. But Git? I never really thought I wanted to maintain it long-term. I like using it, and to some extent, I think it is the best SCM, but it is not my area of interest.

So I always hoped someone else would maintain the SCM (source code management system) for me. In fact, I would have been happy if I had not had to develop it myself.

That is the background of the story.

As for Junio, he was actually one of the first people to join Git development. He submitted his first changes just a few days after I made the first very rough version of Git public, so Junio was involved from the very beginning.

But the reason I handed the project over to Junio was not that he was one of the first participants. I maintained Git myself for a few months, and what really made me decide to hand the project over to Junio was his “good taste”, a concept that is hard to describe. I really cannot think of a better description: programming is mainly about solving technical problems, but how to solve those problems and how to think about them is also important. Over time, you start to realize that some people just have that “good taste”; they always choose the right solution.

I do not want to say that programming is an art, because it is mainly about “good engineering”. I really like Thomas Edison’s quote, “Genius is one percent inspiration and ninety-nine percent perspiration”: programming involves almost all the details and daily hard work. But that one percent of “inspiration”, that is, “good taste”, is not just about solving problems but doing it cleanly and beautifully.

Junio has that “good taste”.

Every time I mention Git, I try to make this clear: I proposed the core idea of Git at the beginning, and I often receive too much credit for that part of the work. In Git’s 15 years, I really participated in the project only during the first year. Junio is an excellent maintainer, and he is the one who has made Git what it is today.

By the way, this matter of “good taste”, of finding people who have it and trusting them, applies not only to Git but to Linux as well. Unlike Git, Linux is a project I still actively maintain, but like Git, it is a project with many contributors. I believe one of the great successes of Linux is that it has hundreds of maintainers, all of whom possess “good taste” and maintain different parts of the kernel.

Jeremy Andrews: Have you ever had the experience of handing over control to a maintainer and then realizing it was a wrong decision?

Linus Torvalds: Our maintenance system has never been black and white, so that situation does not occur. In fact, we do not even formally record maintenance rights: we do have a MAINTAINERS file, but that is just to help you find the right person when you encounter a problem, not a mark of exclusive ownership.

So “who is responsible for what” is more of a fluid guideline. It means “this person is active and doing a good job” rather than “we gave ownership to that person, and then they messed it up”.

In a sense, our maintenance system is also fluid. Suppose you are the maintainer of a subsystem and you need something from another subsystem; you can cross boundaries. Usually, people communicate extensively before doing this, but it does happen. There is no hard rule like “you can only touch this file”.

In fact, this relates to the earlier discussion about licensing. Another design principle of Git is that “everyone has their own code tree, but no code tree is special”.

Many other projects use tools, like CVS or SVN, that make some people “special” and give them some kind of “ownership”. In the BSD world, they call it the “commit bit”: giving a maintainer a “commit bit” means they can commit code to the central code repository.

I have always hated this model because it inevitably leads to the emergence of political “cliques”. In this model, there are always some people who are special and implicitly trusted. The key issue is not even the “implicitly trusted” part but the other side of the coin: others are not trusted; they are defined as outsiders who have to go through the guardians.

Again, with Git, that situation does not exist. Everyone is equal; anyone can clone the code, do their own development, and if they do well, their work can be merged back.

So, there is no need to give people privileges, nor is there a need for a “commit bit”. This avoids the emergence of political “cliques” and does not require “implicit trust”. If they do poorly—or more commonly, eventually disappear and turn to another interest—their code will not be merged back, and it will not hinder others with new ideas.

Jeremy Andrews: Are there any new features in Git that have impressed you and become part of your workflow? Are there any features you would like to add?

Linus Torvalds: My needs for Git have always been met first, so there are no “new” features for me.

Over the years, Git has indeed improved significantly, and some of that has already been reflected in my workflow. For example, Git has always been fast—after all, that was one of my design goals—but most of its features were initially built around shell scripts. Over the years, most shell scripts have disappeared, which means I can apply Andrew Morton’s patches faster than before. This is gratifying because it was actually one of the benchmarks I used for performance testing early on.

So Git has always been good for me, and it is getting better.

The biggest improvement in Git is that the user experience for “ordinary users” has become better. Part of the reason is that people have gradually become accustomed to Git workflows as they learn them, but more importantly, Git itself has become easier to use.

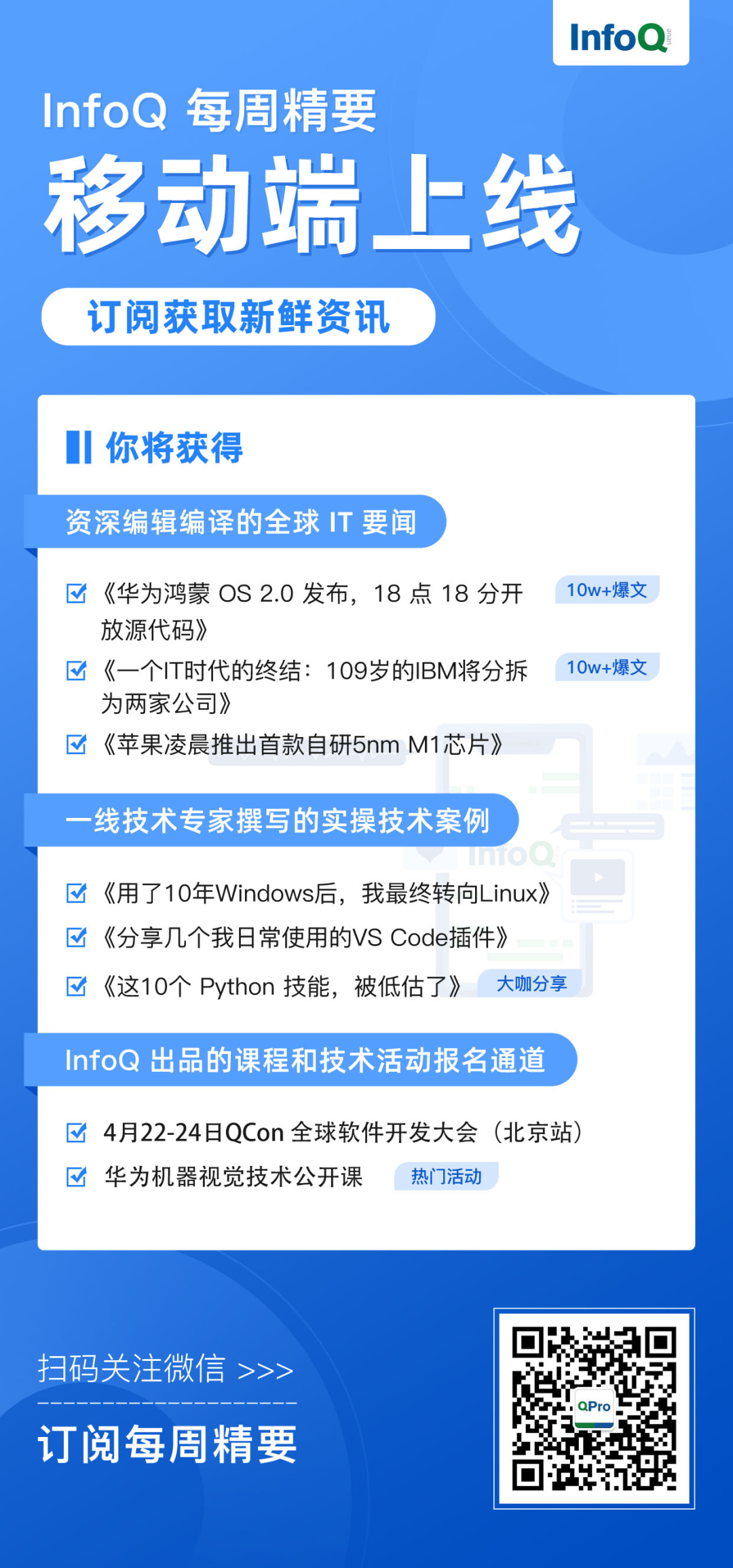
