If a person only has senses and muscles, their limbs cannot move. This is partly because there are no organs to receive and process signals from the senses, and also because there are no organs to issue nerve signals that drive muscle contractions or relaxations. Similarly, if a robot only has sensors and actuators, its mechanical arm cannot function properly. This is because the signals output by the sensors do not take effect, and the drive motors do not receive the necessary voltage and current. Therefore, a robot needs a controller, which forms a control system composed of hardware and software.

The function of the robot control system is to receive detection signals from sensors and, according to the requirements of the operational tasks, drive the motors in the mechanical arm, just as human activities depend on their own senses. The motion control of the robot cannot be separated from the sensors. Robots need sensors to detect various states. The internal sensor signals of the robot are used to reflect the actual motion state of the mechanical arm joints, while the external sensor signals are used to detect changes in the working environment.

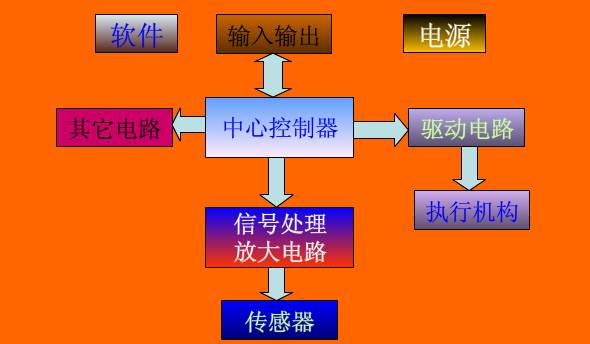

Thus, the robot’s nervous system and brain must work together to form a complete robot control system.

What does the robot motion control system include?

- Actuators: servo motors or stepper motors.
- Drive mechanisms: servo or stepper drivers.
- Control mechanisms: motion controllers that carry out the path calculations and the algorithms that coordinate multiple motors.
- Control methods: if the execution pattern is fixed, the motion controller is pre-programmed with fixed parameters; if there is a vision system or other sensors, the motion controller is programmed with parameters that vary according to the sensor signals.

- Control the motion position of the end effector of the mechanical arm (that is, control the points and movement path the end effector passes through);
- Control the motion posture of the mechanical arm (that is, control the relative positions of two adjacent moving components);
- Control the motion speed (that is, control the law of change of the end effector’s motion position over time);
- Control the motion acceleration (that is, control the speed change of the end effector during movement);
- Control the output torque of each powered joint in the mechanical arm (that is, control the force applied to the object being operated on);
- Have user-friendly human-machine interaction functions, allowing the robot to complete specified tasks through memory and reproduction;
- Enable the robot to have detection and sensing functions for the external environment. Industrial robots are equipped with vision, force, touch, and other sensors for measurement, identification, and judgment of changes in working conditions.
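
The position, speed, and acceleration items in the list above are linked: speed is the change of position over time, and acceleration is the change of speed. One common way to see this is a trapezoidal velocity profile for a rest-to-rest move. The sketch below is only an illustration under that assumption; the source does not say which profile a given controller uses, and the function name `trapezoid_profile` and its parameters are hypothetical.

```python
import math


def trapezoid_profile(distance, v_max, a_max, t):
    """Return (position, velocity, acceleration) at time t for a rest-to-rest move.

    Assumes distance, v_max and a_max are all positive (illustrative only).
    """
    # Time and distance needed to accelerate from rest to v_max.
    t_acc = v_max / a_max
    d_acc = 0.5 * a_max * t_acc ** 2

    if 2 * d_acc > distance:
        # The move is too short to reach v_max: the profile becomes a triangle.
        t_acc = math.sqrt(distance / a_max)
        v_peak = a_max * t_acc
        t_cruise = 0.0
    else:
        v_peak = v_max
        t_cruise = (distance - 2 * d_acc) / v_max
    t_total = 2 * t_acc + t_cruise

    if t <= 0:
        return 0.0, 0.0, 0.0
    if t < t_acc:
        # Accelerating phase.
        return 0.5 * a_max * t * t, a_max * t, a_max
    if t < t_acc + t_cruise:
        # Constant-speed phase.
        position = 0.5 * a_max * t_acc ** 2 + v_peak * (t - t_acc)
        return position, v_peak, 0.0
    if t < t_total:
        # Decelerating phase, computed from the time remaining.
        remaining = t_total - t
        return distance - 0.5 * a_max * remaining ** 2, a_max * remaining, -a_max
    return distance, 0.0, 0.0
```

Sampling this function at the controller's cycle time gives the position setpoints that a position servo loop would follow, while the speed and acceleration limits stay respected by construction.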

1. Hardware Structure of Industrial Robot Control Systems

The controller is the core of the robot system, and foreign companies have imposed strict blockades on China in this area. In recent years, with the development of microelectronics technology, the performance of microprocessors has increased while their prices have decreased. Currently, 32-bit microprocessors priced at 1-2 dollars have appeared on the market. Cost-effective microprocessors have brought new development opportunities for robot controllers, making it possible to develop low-cost, high-performance robot controllers. To ensure that the system has sufficient computing and storage capacity, current robot controllers generally use chips from the ARM series, DSP series, POWERPC series, Intel series, etc.

Furthermore, since existing general-purpose chips do not fully meet the requirements of certain robot systems in terms of price, performance, integration, and interfaces, there is demand for SoC (System on Chip) technology in robot systems. Integrating a specific processor with the required interfaces simplifies the design of peripheral circuits, reduces system size, and lowers costs. For example, Actel integrates NEOS or ARM7 processor cores into its FPGA products to form a complete SoC system. Research on robot motion controllers is mainly concentrated in the United States and Japan, with mature products such as those from Delta Tau in the USA and POMT in Japan. Their motion controllers are based on DSP technology and adopt a PC-based open structure.

2. Architecture of Industrial Robot Control Systems

In terms of controller architecture, research focuses on how functions are divided and on the conventions for exchanging information between them. Research on open controller architectures has produced two basic structures. One is divided by hardware level, which is relatively simple; architectures in Japan are divided this way, for example Mitsubishi Heavy Industries’ PA210 portable general-purpose intelligent robotic arm, which is divided into a five-layer structure. The other is divided by function, considers both hardware and software, and is the direction of research and development for robot controller architectures.

3. Control Software Development Environment

In terms of robot software development environments, most industrial robot companies have their own independent development environments and robot programming languages, such as Japan’s Motoman, Germany’s KUKA, the USA’s Adept, and Sweden’s ABB. Many universities have conducted extensive research on Robot Development Environments and provided a lot of open-source software that can be integrated and run on certain robot hardware structures, and many related experiments have been conducted in laboratory environments. Existing robot system development environments include TeamBots v.2.0e, ARIA v.2.4.1, Player/Stage v.1.6.5.1.6.2, Pyro v.4.6.0, CARMEN v.1.1.1, MissionLab v.6.0, ADE v.1.0beta, Miro v.CVS-March17.2006, MARIE v.0.4.0, FlowDesigner v.0.9.0, RobotFlow v.0.2.6, etc.

From the perspective of robot industry development, there are two aspects of demand for robot software development environments. On one hand, there are demands from end users of robots, who not only use robots but also hope to program them to give them more functions. This programming is often done with visual programming languages, such as the graphical programming environment of Lego MindStorms NXT and the visual programming environment provided by Microsoft Robotics Studio.

4. Dedicated Operating Systems for Robots

(1) VxWorks: VxWorks is an embedded real-time operating system (RTOS) designed and developed by Wind River in the USA in 1983 and is a key component of the Tornado embedded development environment. VxWorks has a scalable microkernel structure, efficient task management, flexible inter-task communication, and microsecond-level interrupt handling. It supports the POSIX 1003.1b real-time extension standard, various physical media, and a standard, complete TCP/IP network protocol stack.

(2) Windows CE: Windows CE has good compatibility with the Windows series, which is undoubtedly a major advantage for its adoption. Windows CE provides a feature-rich operating system platform for building dynamic applications and services for handheld and wireless devices. It can run on various processor architectures and is typically suited to devices with limited memory.

(3) Embedded Linux: Because its source code is open, it can be modified freely to suit specific applications. Most distributions are licensed under the GPL, so they are open source and free, and they can be used in a user’s own system after slight modification. There is a large developer community, so no specialized talent is required, as long as one understands Unix/Linux and the C language. A vast range of hardware is supported. Embedded Linux is not fundamentally different from regular Linux, and it supports almost all hardware used in PCs. Moreover, the source code of drivers for various hardware can be obtained, which greatly helps users write drivers for their own proprietary hardware.

(4) μC/OS-Ⅱ: μC/OS-Ⅱ is a well-known open-source real-time kernel designed specifically for embedded applications and can be used on 8-bit, 16-bit, and 32-bit microcontrollers or digital signal processors (DSP). Its main features are open source code, good portability, the ability to be stored in ROM, scalability, a preemptive kernel, and determinism.

(5) DSP/BIOS: DSP/BIOS is a real-time multitasking operating system kernel designed by TI specifically for its TMS320C6000, TMS320C5000, and TMS320C28x series DSP platforms and is part of TI’s Code Composer Studio development tools. DSP/BIOS mainly consists of three parts: a multithreaded real-time kernel, real-time analysis tools, and a chip support library. Developing programs on a real-time operating system can simplify and speed up the development of complex DSP programs.

5. Robot Servo Communication Bus Technology

Currently, there is no dedicated servo communication bus for robot systems internationally. In practical applications, common buses such as Ethernet, CAN, IEEE 1394, SERCOS, USB, and RS-485 are usually chosen according to system requirements. Most communication control buses fall into two types: serial bus technology based on RS-485 and line driver technology, and high-speed serial bus technology based on real-time industrial Ethernet.

(1) Open modular control system architecture: It adopts a distributed multi-CPU structure, divided into the robot controller (RC), motion controller (MC), opto-isolated I/O control boards, sensor processing boards, the teach pendant, etc. The robot controller (RC) and the teach pendant communicate via a serial/CAN bus. The main computer of the robot controller (RC) handles the robot’s motion planning, interpolation, and position servo, as well as main control logic, digital I/O, sensor processing, and other functions, while the teach pendant displays information and accepts user input.

(2) Modular hierarchical controller software system: The software system is built on Linux, an open-source real-time multitasking operating system, and adopts a layered, modular design to achieve openness. The entire controller software system is divided into three levels: hardware driver layer, core layer, and application layer. Each level addresses different functional requirements and corresponds to a different level of development. Each level is composed of several relatively independent functional modules that collaborate to provide the functions of that level.
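
The three-level split described in (2) can be sketched in a few lines. This is a minimal illustration, not the software of any particular controller: the class names, the simulated encoder, and the step-limited motion loop are all assumptions made for the example. The point it shows is that each layer calls only the layer directly beneath it.

```python
class HardwareDriverLayer:
    """Lowest layer: talks to the hardware (simulated here, hypothetical)."""

    def __init__(self):
        self._joint_angle = 0.0  # simulated encoder reading, in radians

    def read_encoder(self):
        return self._joint_angle

    def write_motor_step(self, delta):
        # A real driver would command a servo drive; this one just integrates.
        self._joint_angle += delta


class CoreLayer:
    """Middle layer: motion services built only on the driver interface."""

    def __init__(self, driver, max_step=0.05):
        self.driver = driver
        self.max_step = max_step  # largest angle change allowed per cycle

    def move_joint_to(self, target, tolerance=1e-6, max_cycles=10_000):
        for _ in range(max_cycles):
            error = target - self.driver.read_encoder()
            if abs(error) <= tolerance:
                return True
            # Clamp the step so the joint never moves faster than max_step.
            step = max(-self.max_step, min(self.max_step, error))
            self.driver.write_motor_step(step)
        return False


class ApplicationLayer:
    """Top layer: task logic expressed only through core-layer calls."""

    def __init__(self, core):
        self.core = core

    def run_task(self, waypoints):
        # Visit each waypoint in order; stop at the first failure.
        return all(self.core.move_joint_to(w) for w in waypoints)
```

For example, `ApplicationLayer(CoreLayer(HardwareDriverLayer())).run_task([0.3, -0.2])` drives the simulated joint to each waypoint in turn. Swapping the simulated driver for a real one would not require touching the application layer, which is the kind of openness the layered design aims for.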

(3) Robot fault diagnosis and safety maintenance technology: Diagnosing robot faults through various information and performing corresponding maintenance is a key technology to ensure the safety of robots.

(4) Networked robot controller technology: Currently, robot application engineering is evolving from single robot workstations to robot production lines, making networking technology for robot controllers increasingly important. Controllers are equipped with serial, fieldbus, and Ethernet networking capabilities, allowing communication between robot controllers and between robot controllers and host computers, which facilitates monitoring, diagnosis, and management of robot production lines.

1. Huazhong CNC

2. Siasun Robot

3. Xinsida

4. Nanjing Estun

5. Inovance Technology

6. Guangzhou CNC Equipment Co., Ltd.

7. Shenzhen Huasheng Control Technology Co., Ltd.

8. Guantai CNC

9. Goko Technology

10. Canopus

If the drive subsystem is the robot’s muscles, the energy subsystem is the robot’s heart, then the control and decision-making subsystem is the robot’s brain. This is the most important and complex subsystem of the robot.

Robots are highly complex automated devices. Their control subsystems also derive directly from other applications in the field of automation, such as processors, circuits, and standards used in factory automation. This chapter merely lists and compares several common and typical control system topologies, and then analyzes several typical robot control subsystems, especially detailing the control architecture of the “Creative Star” robot.

Typical Robot Control Architectures

Here we do not discuss traditional industrial robots but focus mainly on new forms of robots such as autonomous mobile robots and bionic robots. Generally, the architecture of a robot refers to how to organically combine various modules such as perception, modeling, planning, decision-making, and action, to complete the target tasks in a dynamic environment for one or more robots. Overall, the current control architectures for autonomous robots can be categorized into the following types:

1. Program-controlled architecture, also known as planning architecture, which provides a sequence of behavioral actions based on a given initial state and target state, executing step by step. More complex program-controlled models may also adjust control strategies based on sensor feedback, such as using “conditional judgment + jump” methods in the sequence of the program.

2. Subsumption architecture and behavior-based control models, also known as reactive models, where complex tasks are decomposed into a series of relatively simple specific behaviors, each based on sensor information and controlling one aspect of the overall goal. Behavior-based robot systems can respond quickly to changes in the surrounding environment and have good real-time performance, but they do not plan tasks globally and therefore cannot guarantee that goals are achieved optimally.

3. Hybrid architecture, an integration of planning and behavior-based systems that is sensitive to changes in the environment while also keeping goal achievement efficient. Typically, hybrid architecture has two modes: in one, the overall framework of the decision system is based on planning, and the behavior model takes over when dynamic situations arise; in the other, the overall framework of the decision system is based on behavior, and the planning model is adopted within certain specific behaviors. In short, the design goal of hybrid architecture is to combine the advantages of program-controlled architecture and subsumption architecture while avoiding their disadvantages.

1.1 Centralized Program-controlled Architecture

Traditional robots are mostly industrial robots. They usually work at a specific position on an assembly line, where the position of each robot is known and determined; designers are also clear about what each robot’s task is and where its working object is located before the robot starts working. In this case, controlling the robot becomes numerical computation, or “symbolic” computation. For example, we can obtain the coordinates of a transport robot’s base through field measurements; then through spatial mechanism geometry calculations (the forward and inverse solutions of spatial robots), we can determine the positions of the robot’s joints when its end transport device can reach a given position. In this way, the robot control strategy designer writes strategies in a static, structured, symbolic environment; they do not need to consider too many unexpected situations, at most needing to consider some exceptions, such as using simple sensors to detect whether the item to be transported is in the correct position, thus deciding whether to trigger an alarm or stop working, etc.
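
The "forward and inverse solutions" mentioned above can be made concrete with the simplest textbook case, a planar arm with two revolute joints. This is only a sketch under that assumption; real industrial arms have more joints and need a fuller treatment. The function names and the `flip_elbow` flag are hypothetical.

```python
import math


def forward_kinematics(theta1, theta2, l1, l2):
    """Joint angles (radians) and link lengths -> end-effector position (x, y)."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse_kinematics(x, y, l1, l2, flip_elbow=False):
    """End-effector position -> one of the two joint-angle solutions."""
    # Law of cosines gives the cosine of the elbow angle.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(c2) > 1 + 1e-9:
        raise ValueError("target is outside the arm's reachable workspace")
    c2 = max(-1.0, min(1.0, c2))  # guard against rounding at the boundary
    s2 = math.sqrt(1 - c2 * c2)
    if flip_elbow:
        s2 = -s2  # select the mirrored elbow configuration
    theta2 = math.atan2(s2, c2)
    theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return theta1, theta2
```

Feeding the result of `inverse_kinematics` back into `forward_kinematics` should reproduce the requested position, which is a convenient sanity check. The two elbow configurations also show why the inverse solution is generally not unique.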

This type of robot is usually controlled by a single controller. This controller collects position, angle, and other information from various joints of the robot and additional sensors, processes it through the controller, and calculates the next step of the robot’s work. The entire robot operates under the control of this controller, and handling some exceptions is also within the program’s set range. The following diagram shows two typical mobile robot block diagrams that use centralized system architecture. The controller on the left is a PC that handles all information collection, processing, and control functions; the right diagram is an improved robot architecture: in addition to the PC, a DSP controller is added to share some of the PC’s workload. However, under both architectures, the burden on the controller is quite heavy, and if the controller fails, the entire robot will become inoperative.

For the work described above, program-controlled, centralized control structures are very ideal. As mentioned earlier, robots do not encounter too many dynamic, non-symbolic environmental changes, and the controller can obtain sufficient and accurate environmental information. Designers can pre-design the optimal strategy before the robot starts working, and during the process, only handle some predictable exceptional events.
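
A program-controlled strategy with a predictable exception, in the "conditional judgment + jump" style described earlier, might look like the sketch below. The tiny instruction format (`do`, `jump_if`, `halt`), the action names, and the sensor key `part_missing` are all invented for illustration and do not correspond to any real robot programming language.

```python
def run_program(program, sensors, max_steps=100):
    """Run a fixed step sequence; return the list of actions actually executed.

    Each step is ('do', action), ('jump_if', sensor_key, target_index) or ('halt',).
    """
    pc = 0  # program counter
    trace = []
    for _ in range(max_steps):
        if pc >= len(program):
            break
        step = program[pc]
        if step[0] == "do":
            trace.append(step[1])
            pc += 1
        elif step[0] == "jump_if":
            _, key, target = step
            # Conditional judgment + jump: branch only if the sensor flag is set.
            pc = target if sensors.get(key, False) else pc + 1
        elif step[0] == "halt":
            break
        else:
            raise ValueError(f"unknown step: {step!r}")
    return trace


# Hypothetical transport task with one anticipated exception.
PROGRAM = [
    ("do", "move_to_pickup"),         # 0
    ("jump_if", "part_missing", 6),   # 1: exception check
    ("do", "grasp"),                  # 2
    ("do", "move_to_dropoff"),        # 3
    ("do", "release"),                # 4
    ("halt",),                        # 5
    ("do", "raise_alarm"),            # 6: exception handler
]
```

Everything the robot can do is already written into `PROGRAM`. A situation the designer did not anticipate has no branch to jump to, which is exactly the limitation the following paragraphs discuss.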

However, suppose we want to design a mobile robot that roams in a room, where the size of the room is unknown; and we also cannot accurately obtain the robot’s relative position in the room. In that case, this architecture will be unable to obtain sufficient information and cannot handle unknown unexpected situations. Therefore, for robots beyond traditional definitions, such as mobile robots, pet robots, etc., program-controlled control architecture is difficult to adapt.

The advantage of centralized program-controlled architecture is that the system structure is simple and clear, with all logical decisions and calculations performed in one central controller: the controller is the brain, and the other parts need no processing capability.

But for bionic robots and robots working in unknown environments, is it really suitable for a single brain to handle everything?

Consider low-level organisms such as earthworms and centipedes. They have poorly developed brains (or even no brains) but possess a well-developed nerve cord or ganglia. Most of these distributed ganglia govern their activities and responses, rather than a brain.

Readers should have done this experiment in middle school biology class:

Gently tap just below the knee with a small hammer, and the lower leg kicks forward involuntarily. This is the famous “knee-jerk reflex” experiment. It shows that even in higher organisms like humans, not every activity is under the control of the brain. Imagine a robot with all human functions. It has hundreds of motors corresponding to hundreds of human muscles, and thousands of sensors corresponding to human skin, eyes, nose, and ears. If a centralized control architecture is used, it would be difficult for the robot’s brain to handle such a massive amount of computation and decision-making.

Therefore, for robots other than industrial ones, layered architecture, subsumption architecture, and hybrid architecture have been developed, which are better suited to their characteristics.

1.2 Layered Architecture

Layered architecture has developed alongside the advancement of distributed control theories and technologies. Distributed control usually consists of one or more main controllers and many nodes, each with processing capabilities. The central idea is that the main controller can be relatively weak, but most non-symbolic information has already been processed and symbolized at their respective nodes, then passed to the main controller for decision-making. Single-node distributed control models have been widely applied in complex structures such as large factories and buildings, where there are many sensors and actuators.
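
The idea that each node turns raw, non-symbolic data into symbols before passing it to a relatively weak main controller can be sketched as follows. The thresholds, labels, and function names are assumptions made for the example, not values from any real system.

```python
def sonar_node(distance_m, near=0.3, far=1.0):
    """Node-side processing: raw range in metres -> symbolic label."""
    if distance_m < near:
        return "BLOCKED"
    if distance_m < far:
        return "NEAR"
    return "CLEAR"


def main_controller(symbols):
    """Decide from symbols only; never sees raw sensor values."""
    if symbols["front"] != "BLOCKED":
        return "forward"
    # Front is blocked: prefer a side that is not blocked, left first.
    if symbols["left"] != "BLOCKED":
        return "turn_left"
    if symbols["right"] != "BLOCKED":
        return "turn_right"
    return "reverse"
```

With raw readings such as `{"front": 0.2, "left": 1.5, "right": 0.1}`, each node reports a single label, and the main controller's decision reduces to a handful of comparisons on those labels.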

Layered architecture is based on cognitive artificial intelligence (AI) models, hence also called knowledge-based architecture. In AI models, intelligent tasks are achieved through reasoning processes running on the model, emphasizing a central planner with an environmental model, which is an indispensable component of robot intelligence, and this model must be accurate and consistent. Layered architecture divides various modules into several levels, allowing modules at different levels to have different performance and operation modes.

By functionally dividing nodes with different functions in distributed systems, layered architecture is obtained.

The most representative example of layered architecture is the three-layer model proposed by the famous scholar Saridis in the intelligent control field in the 1980s. Saridis believes that as control precision increases, intelligent capability decreases; that is, the higher the layer, the greater the intelligence but the lower the precision, and vice versa. Based on this principle, he divides the entire structure into three levels according to function: execution level, coordination level, and organizational level. The organizational level is the system’s “brain,” achieving cognition, expression, planning, and decision-making in task organization through artificial intelligence; the coordination level is the intelligent interface between the upper and lower levels, coordinating with the next level to determine the execution sequence and conditions using artificial intelligence and operations research; the execution level, based on control theory, implements high precision control requirements, executing determined movements. It should be noted that this is merely a conceptual model; the actual physical structure can have more or fewer than three levels. Regardless of the number of levels, from a functional perspective, they can generally be divided into these three levels. The information flow starts from low-level sensors, through internal and external state assessments and inductions, layer by layer upward, where overall decisions are made; the high-level accepts overall tasks, plans based on information provided by the information system, determines overall strategies, forms macro commands, and then through the planning design of the coordination level, generates several sub-commands and work sequences to be allocated to each controller for execution.
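
As a rough illustration of how commands flow downward through the three levels, the sketch below uses lookup tables for the organizational and coordination levels and a simple proportional loop for the execution level. Saridis's model is conceptual, so every detail here (the task name `fetch`, the tables, the gain) is a hypothetical stand-in rather than part of the model itself.

```python
def organization_level(task):
    """Most intelligence, least precision: map a task to macro commands."""
    plans = {"fetch": ["goto_object", "grasp", "goto_home"]}
    return plans[task]


def coordination_level(macro_commands):
    """Expand macro commands into an ordered list of (actuator, setpoint)."""
    table = {
        "goto_object": [("base", 1.0)],
        "grasp": [("gripper", 0.0)],
        "goto_home": [("base", 0.0)],
    }
    return [sub for macro in macro_commands for sub in table[macro]]


def execution_level(sub_commands, state, gain=0.5, cycles=50):
    """Least intelligence, most precision: proportional control to each setpoint."""
    for actuator, setpoint in sub_commands:
        for _ in range(cycles):
            # Close a fraction of the remaining error on every control cycle.
            state[actuator] += gain * (setpoint - state[actuator])
    return state
```

Calling `execution_level(coordination_level(organization_level("fetch")), {"base": 0.0, "gripper": 1.0})` runs the whole chain: the top level knows nothing about setpoints, and the bottom level knows nothing about the task.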

In layered architecture, the most widely followed principle is to divide layers and modules based on time and function. One of the most representative examples is the NASREM structure proposed by NASA and the National Bureau of Standards (NBS) in the USA. One of its starting points is that an intelligent robot might have multiple controlled subsystems, such as manipulators, communication, and sonar, and that such robots may form a group or combine into higher-level systems to work together. Another starting point is that existing component technologies and technologies still under research, including modern control technologies and artificial intelligence technologies, should be usable in this system. The entire system is divided horizontally into three columns (information processing, environmental modeling, and task decomposition) and vertically into six levels: coordinate transformation and servo control, dynamic calculation, basic motion, individual tasks, group tasks, and overall tasks. All modules share a global database, as shown in the diagram below.

The functions and relationships of the modules in the NASREM structure are very clear, which makes the system easier to build and makes it easier to add or replace algorithms within each module. It has global planning and reasoning capabilities and can respond reasonably to complex environments, so it is suitable for controlling one robot or a group of robots. However, like other layered architectures, NASREM has the problem that incoming environmental information must pass through every module in the information processing column. As a result, simple problems often become complicated, which slows the robot’s response to environmental changes, and response speed is a crucial performance requirement for robots. Therefore, in theory, layered architecture is only suitable for robots that have some knowledge of their position and environment and work in lightly unstructured environments. However, with the rapid development of bus technology and real-time control technology, slow response, the most serious weakness of layered architecture, has been mitigated to some extent. Because layered architecture is also quite mature, it is still used by many mobile robots and toy robots, and it has absorbed aspects of subsumption architecture and hybrid architecture to some extent.

1.3 Subsumption Architecture

Suppose our robot operates in a virtual environment. In this virtual environment, the ground is perfectly level and the walls are perfectly vertical; the sensors are error-free and the robot’s wheels do not slip. We can then accurately determine the robot’s position and its relationship to the surrounding environment through sensors such as encoders, and make decisions according to the program.

However, reality is far from ideal. Even the flattest ground has undulations, let alone terrain in the wild; the data returned by ultrasonic sonar can sometimes contain significant errors and even fluctuate violently; and when the robot starts and stops, its wheels will certainly slip. Since the real world in which robots operate is primarily an unstructured, dynamic environment, they often encounter problems that were not considered when the program was planned in advance. In such environments, a pre-planned decision program often runs into trouble in practice and cannot work as we envisioned, mainly because of the following problems:

1. The complexity of the environment and errors in environmental models;

2. The unpredictability of the environment;

3. Instability caused by imprecise environmental perception.

Program-controlled architecture cannot resolve the last two issues. Subsumption architecture and behavior-based control models handle these two problems better, although they may sacrifice some efficiency.

To illustrate the differences between these two control architectures simply, consider a cleaning robot whose task is to traverse the entire room while cleaning it thoroughly. If we use a planning model to complete this task, the robot may follow a predetermined path to traverse the entire floor once or multiple times. However, if it encounters a chair that was overlooked during planning, the robot may veer off course and never complete the cleaning task. On the other hand, if we use a behavior-based model, the robot may wander around and adjust its walking direction based on the encountered situations, but it would still manage to clean the room, albeit with some bumps and scrapes.

Layered architecture can effectively solve the relationship between intelligence and control precision, creating a good autonomous control method. However, due to the three problems mentioned above, layered architecture often has deficiencies in flexibility, real-time performance, and adaptability.

To address these shortcomings, R. Brooks at MIT, approaching the problem from the structure of mobile robot control systems, proposed a behavior-based architecture called subsumption architecture. Layered architecture decomposes the system into functional modules and arranges them serially according to the sense-plan-act (SPA) process. Subsumption architecture, by contrast, is a fully reactive architecture with a parallel structure, based on a direct mapping between perception and action (sense-act, SA). In subsumption architecture, higher-level behaviors subsume all lower-level behaviors, and a higher level can complete its tasks only with the assistance of lower levels. Lower levels, on the other hand, do not depend on higher levels: although higher levels can sometimes use or constrain lower levels, the internal control of a lower level is independent of the higher levels, and adding or removing a higher level does not affect the lower levels.

In behavior-based models, various, potentially incompatible behaviors participate in control, with each behavior responsible for achieving or maintaining a specific goal of the robot, such as tracking a target or avoiding obstacles. Multiple behaviors can often generate conflicting control output commands. Therefore, the first problem that the system needs to solve is the collaboration of multiple behaviors, that is, constructing an effective coordination mechanism for multi-behavior activities to achieve reasonable and consistent overall behavior.

Using the cleaning robot example again, we can decompose “cleaning the entire room” into several basic behaviors: “move forward and clean the floor,” “avoid left-side obstacles,” “avoid right-side obstacles,” and “avoid front obstacles.” The robot initially does no planning for its task; it simply moves forward. When it encounters an obstacle, the corresponding behavior is activated and sends an output to the drive actuators to execute the corresponding action. A suitable arbiter (e.g., a finite state machine, FSM) then decides which behavior takes priority when several behaviors conflict.

This seemingly simple logic has excellent adaptability. Such a cleaning robot would ultimately be able to clean the room. However, unfortunately, if it is not lucky, it may take several hours to clean the entire room.
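
The cleaning-robot decomposition above can be sketched as a set of behaviors plus an arbiter. For brevity this sketch uses a fixed-priority arbiter rather than a finite state machine; that substitution, the priority order, the command strings, and the boolean bumper-style sensor flags are all assumptions made for the example.

```python
def avoid_front(sensors):
    return "reverse_and_turn" if sensors["front"] else None


def avoid_left(sensors):
    return "turn_right" if sensors["left"] else None


def avoid_right(sensors):
    return "turn_left" if sensors["right"] else None


def move_forward_and_clean(sensors):
    # Default behavior: always has something to propose.
    return "forward"


# Highest priority first; the order is a design choice, not fixed by the text.
BEHAVIORS = [avoid_front, avoid_left, avoid_right, move_forward_and_clean]


def arbitrate(sensors):
    """Return the command of the highest-priority behavior that is active."""
    for behavior in BEHAVIORS:
        command = behavior(sensors)
        if command is not None:
            return command
    return "stop"  # unreachable while a default behavior is present
```

Each behavior looks only at the sensor flags it cares about and knows nothing about the others, so adding a new behavior is a matter of inserting one function into `BEHAVIORS` at the right priority.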

The methods for implementing the behavior coordination mechanisms mentioned above fall into two categories: arbitration and command synthesis. Arbitration lets one behavior, or one set of behaviors, hold control at any given moment and then hands control to another set at the next moment. Command synthesis focuses on how to combine the outputs of the various behaviors into a single command for the robot’s actuators. It allows several behaviors to influence the final control of the robot at once, which suits typical multi-behavior problems, such as a robot navigating in open space while avoiding the obstacles it encounters. Both arbitration and command synthesis have many specific implementation strategies, each with its own advantages and disadvantages.
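
One simple form of command synthesis is a weighted vector sum, in which each behavior proposes a velocity vector and the results are blended. The sketch below applies it to the navigate-while-avoiding example; the linear repulsion falloff, the influence radius, and the weights are assumptions chosen for illustration, and many other blending schemes exist.

```python
import math


def go_to_goal(pose, goal):
    """Unit vector pointing from the robot toward the goal."""
    dx, dy = goal[0] - pose[0], goal[1] - pose[1]
    dist = math.hypot(dx, dy)
    return (0.0, 0.0) if dist == 0 else (dx / dist, dy / dist)


def avoid_obstacle(pose, obstacle, influence=1.0):
    """Vector pointing away from the obstacle, stronger when closer."""
    dx, dy = pose[0] - obstacle[0], pose[1] - obstacle[1]
    dist = math.hypot(dx, dy)
    if dist == 0 or dist >= influence:
        return (0.0, 0.0)  # outside the influence radius: no contribution
    strength = (influence - dist) / influence
    return (strength * dx / dist, strength * dy / dist)


def synthesize(commands, weights):
    """Blend all behavior outputs into one velocity command."""
    vx = sum(w * c[0] for c, w in zip(commands, weights))
    vy = sum(w * c[1] for c, w in zip(commands, weights))
    return vx, vy
```

Unlike arbitration, no behavior is ever switched off here: the obstacle term simply fades to zero when nothing is nearby, so the robot's heading changes smoothly instead of jumping between behaviors.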

1.4 Hybrid Architecture

Subsumption architecture emphasizes the independence and parallel operation of modules but lacks global guidance and coordination. Although it demonstrates flexible responsiveness and robustness in local actions, it shows less initiative and purposefulness in tracking long-term global goals. For example, in the cleaning robot case mentioned above, subsumption architecture and behavior models provide a highly robust, adaptable control method that relies less on external information. However, its fatal issue is efficiency. Therefore, for more complex applications, a hybrid architecture may be needed to integrate the advantages of program-controlled architecture and subsumption architecture/behavior models, while trying to avoid their respective disadvantages.

Typically, hybrid architecture employs program-controlled architecture at higher decision-making levels to achieve better purposefulness and efficiency, while using subsumption architecture at lower reactive levels to gain better environmental adaptability, robustness, and real-time performance.
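
A toy version of this split, applied to the cleaning robot, is sketched below: a planning layer produces an efficient back-and-forth coverage order over a grid, and a reactive layer vetoes any planned cell that turns out to be blocked. The grid model, the function names, and the "skip the cell" reaction are simplifying assumptions; a real robot would also have to steer around the obstacle.

```python
def plan_coverage(rows, cols):
    """Planning layer: back-and-forth cell order covering a rows x cols grid."""
    path = []
    for r in range(rows):
        # Alternate direction on each row so consecutive cells stay adjacent.
        columns = range(cols) if r % 2 == 0 else range(cols - 1, -1, -1)
        path.extend((r, c) for c in columns)
    return path


def reactive_step(next_cell, blocked):
    """Reactive layer: override the plan if the next cell is sensed as blocked."""
    return "skip" if next_cell in blocked else "enter"


def run_hybrid(rows, cols, blocked):
    """Follow the plan, letting the reactive layer veto individual steps."""
    cleaned = []
    for cell in plan_coverage(rows, cols):
        if reactive_step(cell, blocked) == "enter":
            cleaned.append(cell)
    return cleaned
```

With an unexpected obstacle at cell `(0, 1)`, the plan is neither abandoned nor recomputed. The robot skips that one cell and carries on, keeping the planner's efficiency while still reacting to what it actually senses.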

Gat proposed a hybrid three-layer architecture consisting of a reactive feedback control layer (Controller), a reactive planning-execution layer (Sequencer), and a planning layer (Deliberator). The UP-Voyager IIA robot launched by Bochuang Technology adopts a behavior-based hybrid layered control architecture, which includes four levels: user layer, autonomous planning decision layer, behavior layer, and execution control layer. The user layer mainly handles interactions between the user and the robot; it is primarily used to convey necessary information to the user and accept user commands; the autonomous planning decision layer accomplishes some high-level autonomous decisions, such as traversing the room or moving to a specified location without colliding with suddenly appearing obstacles; the behavior layer includes behaviors such as obstacle avoidance, low voltage protection, disturbances, and escape, which can autonomously execute without upper-level control. The execution control layer transforms non-symbolic data from sensors into symbolic data for upper levels to read or uses automatic control theories and methods to rapidly control the operation of actuators.

Source: Robot 2025
