New Intelligence Compilation

Source: bair.berkeley.edu

Author: Jacob Andreas

Translator: Liu Xiaoqin

[New Intelligence Guide] This article is the first technical blog post published by the Berkeley Artificial Intelligence Research (BAIR) lab. It describes how Neural Module Networks (NMNs) handle complex reasoning tasks and discusses their advantages and challenges compared to other methods.


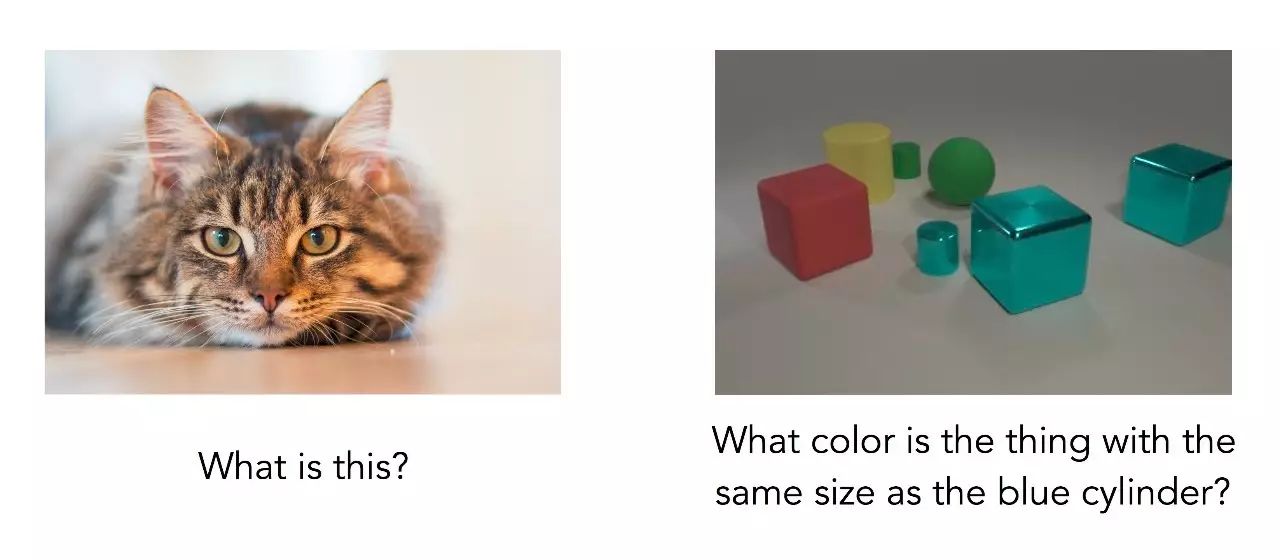

Suppose we want to create a home robot that can answer questions about its surroundings. We might ask it questions like:

Left: What is this? Right: What color is the object that is the same size as the blue cylinder in the picture?

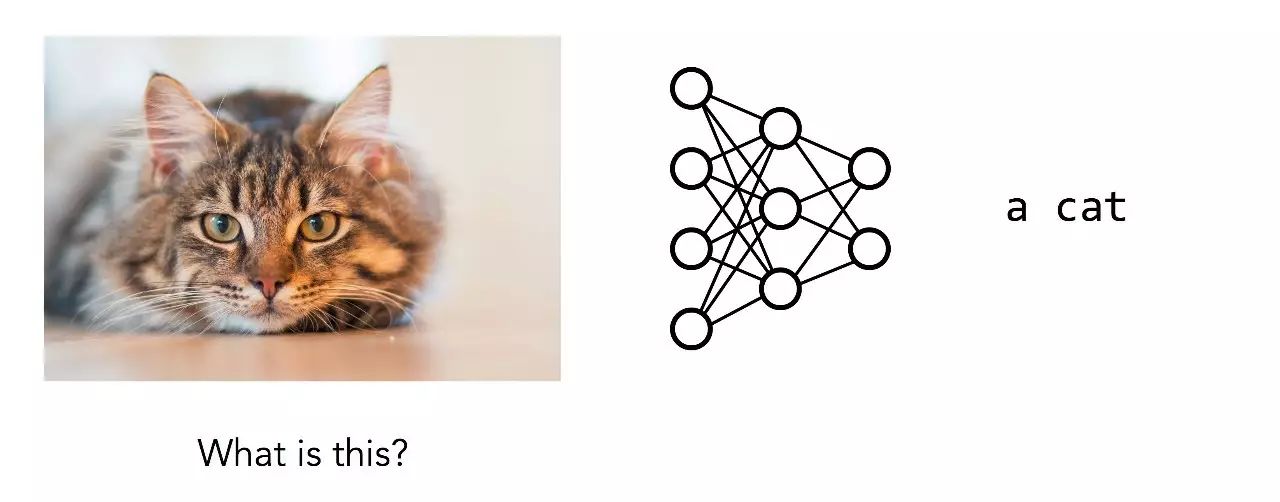

How can we ensure that the robot answers these questions correctly? The standard approach in deep learning is to collect a large dataset of questions, images, and answers, and then train a neural network to map questions and images directly to answers. If most questions look like the one on the left, we are dealing with a familiar image recognition problem, and such monolithic approaches are quite effective:

Answer the question: What is this?

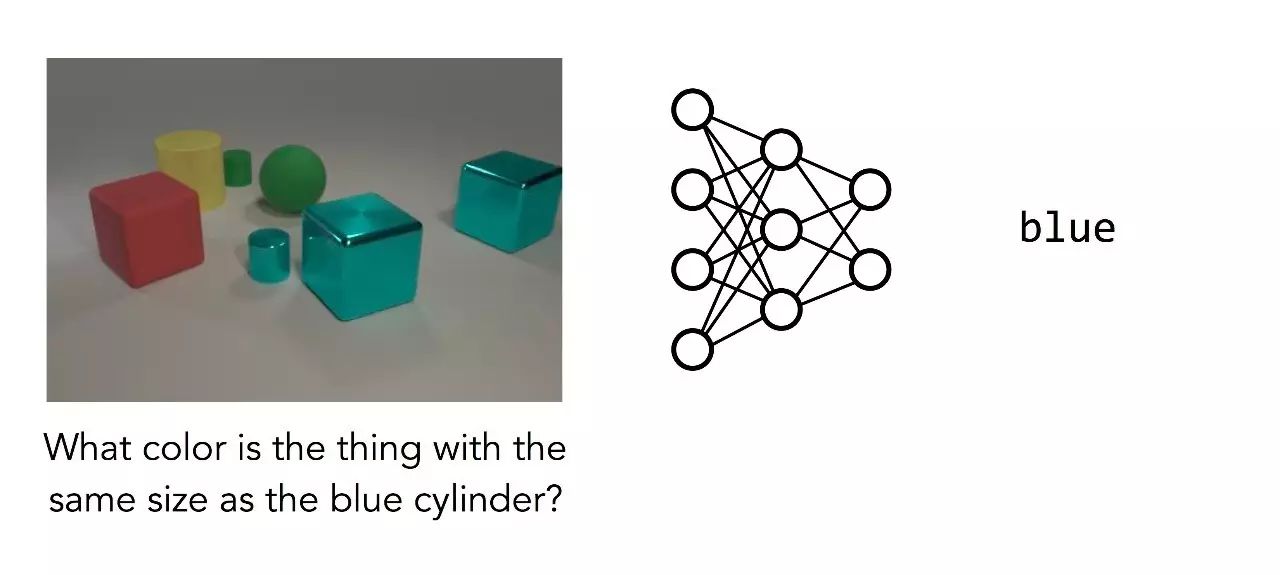

But for questions like the one on the right, it is not so simple:

Answer the question: What color is the object that is the same size as the blue cylinder?

Here, our trained network has given up and simply guessed the most common color in the image (blue). Why is this question so much harder? Even though the image is cleaner and simpler, the question requires multi-step reasoning: rather than simply recognizing the main object in the image, the model must first locate the blue cylinder, then find another object of the same size, and finally determine that object's color. This is a complex computation, and one that is specific to the question being asked. Different questions require different steps to solve.

The mainstream paradigm in deep learning is a “one-size-fits-all” approach: for whatever problem we want to solve, we design a single fixed model architecture, hope that it captures everything about the relationship between inputs and outputs, and learn its parameters from annotated training data.

But real-world reasoning does not work this way: it involves a variety of different capabilities, combined in new ways for every new challenge we encounter. We need a model that can dynamically decide how to reason about the question it is given, one that can choose its own structure at runtime. In this article, we discuss a class of models called Neural Module Networks (NMNs), which incorporate this more flexible approach to problem-solving while preserving what makes deep learning so effective.

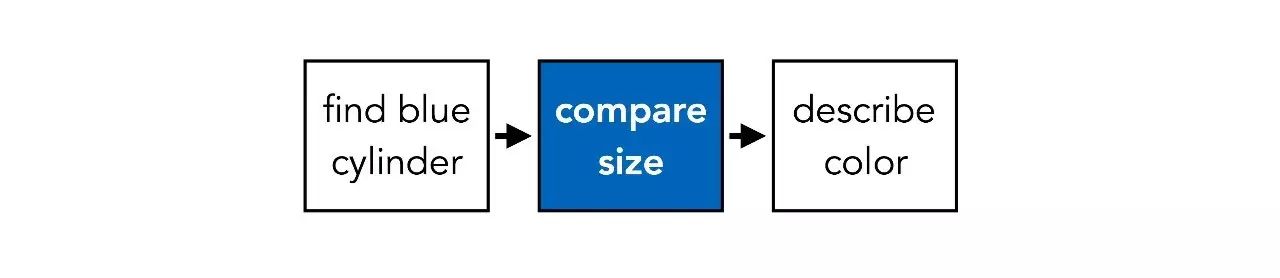

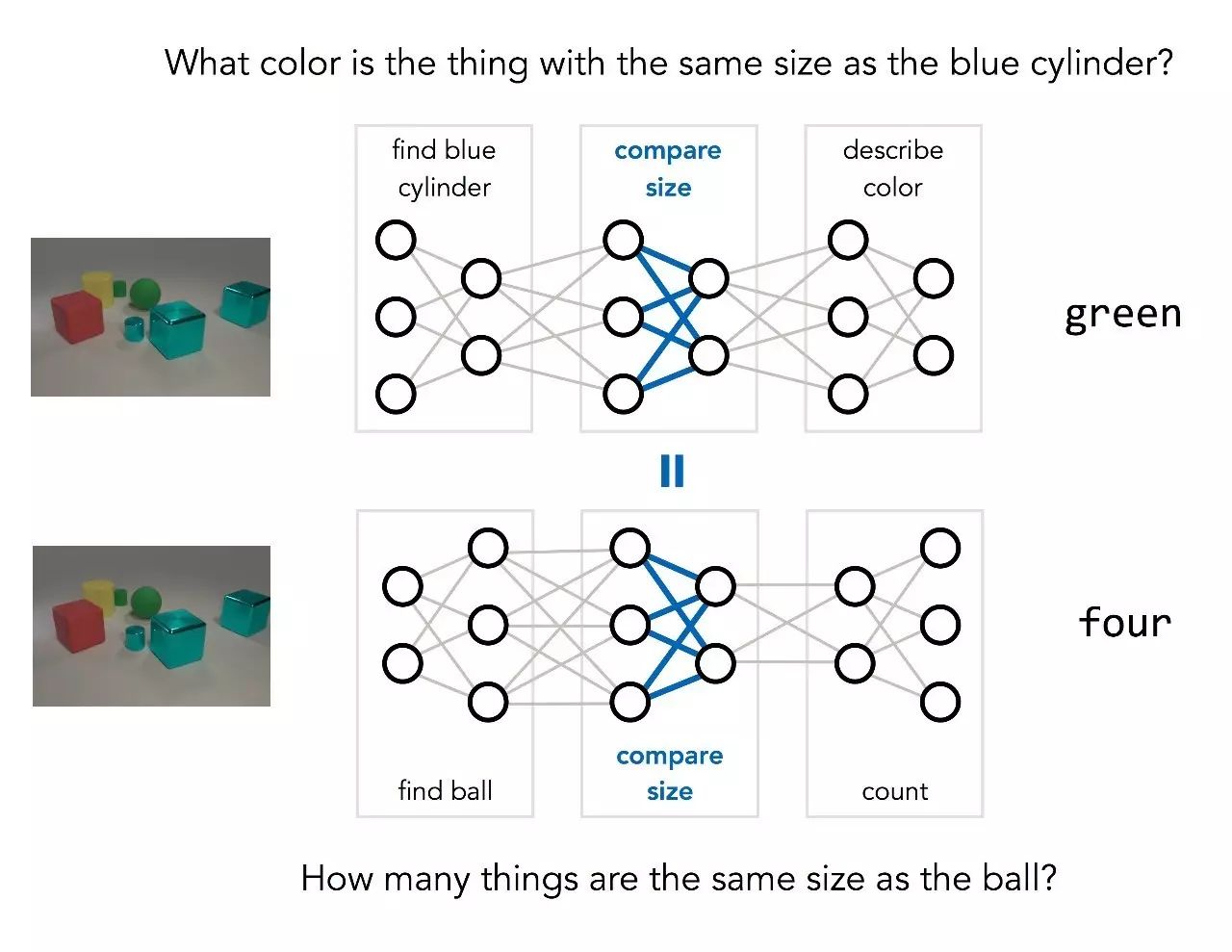

As mentioned earlier, answering the question above involves three steps: finding the blue cylinder, finding another object of the same size, and determining that object's color. This process can be represented in the following diagram:

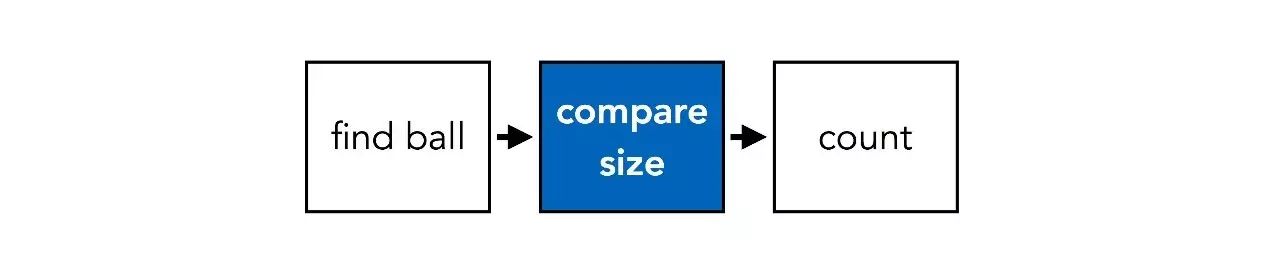

A different question may require a different sequence of steps. For example, if we ask “How many objects in the picture are the same size as the ball?”, the steps become:

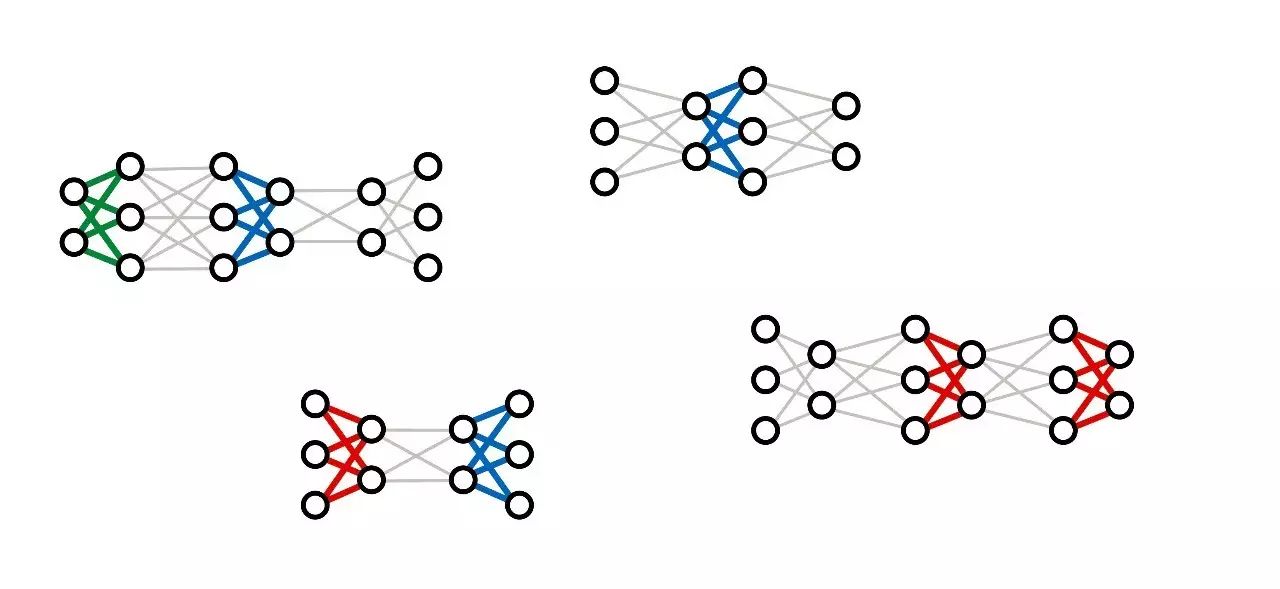
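The two blueprints can be written down as small trees. In the minimal sketch below, the nested-tuple encoding and the module names (`find`, `same_size`, `describe_color`, `count`) are hypothetical; `steps` walks a tree and lists the operations in the order they would run.

```python
# Hypothetical encoding: (module_name, *inputs); a leaf's input is a text argument.
Q1 = ("describe_color", ("same_size", ("find", "blue cylinder")))
Q2 = ("count", ("same_size", ("find", "ball")))


def steps(layout):
    """Return module names in execution order (inputs before the module consuming them)."""
    name, *args = layout
    order = []
    for arg in args:
        if isinstance(arg, tuple):  # a sub-network that feeds this module
            order += steps(arg)
    return order + [name]


print(steps(Q1))
print(steps(Q2))
```

Both layouts begin with the same two operations and differ only in the final one, which is the kind of overlap the modular approach is designed to exploit.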

Basic operations, such as “comparing sizes”, are shared across different questions, but they are combined in different ways. The key idea of NMNs is to make this sharing explicit: we answer the two questions above with two different network structures, but the parts of the networks that perform the same basic operation share their weights.
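Weight sharing can be sketched as a library that owns one set of parameters per module type and hands the same array to every network that uses it. Everything here is a hypothetical illustration: the `ModuleLibrary` class, the random square weight matrices, and the choice to fold a `find` module's text argument into its name (so `find[ball]` and `find[blue cylinder]` get separate weights) are assumptions, not the papers' design.

```python
import numpy as np


class ModuleLibrary:
    """Hypothetical store that keeps exactly one weight matrix per module type."""

    def __init__(self, dim, seed=0):
        self.dim = dim
        self.rng = np.random.default_rng(seed)
        self.params = {}

    def get(self, name):
        # Create weights the first time a module type is requested; reuse them afterwards.
        if name not in self.params:
            self.params[name] = self.rng.normal(size=(self.dim, self.dim))
        return self.params[name]


def build(layout, library):
    """Map every module in a layout tree to the library's (shared) weights."""
    name, *children = layout
    weights = {name: library.get(name)}
    for child in children:
        weights.update(build(child, library))
    return weights


lib = ModuleLibrary(dim=4)
net1 = build(("describe_color", ("same_size", ("find[blue cylinder]",))), lib)
net2 = build(("count", ("same_size", ("find[ball]",))), lib)
print(net1["same_size"] is net2["same_size"])
```

Because both networks hold a reference to the same `same_size` array, an update made while training one network is immediately visible to the other.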

So how do we learn such a model? Rather than training a single network on a large number of input/output pairs, we actually train many different networks at the same time, tying their parameters together where appropriate.

(Several recent deep learning frameworks, including DyNet and TensorFlow Fold, were explicitly designed with this kind of dynamic computation in mind.)
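To make parameter tying concrete, here is a minimal sketch built on a hypothetical one-parameter module `scale`, which one layout uses once and another uses twice. Each network carries the derivative of its output with respect to the shared weight (forward mode, via the product rule), and the joint gradient is simply the sum of what every network contributes. The module, layouts, and toy data are made up for illustration.

```python
def scale(w, x):
    """Hypothetical one-parameter module. x is a pair (value, d value / d w)."""
    v, dv = x
    return (w * v, v + w * dv)  # product rule for d(w * v) / dw


def net_a(w, x):
    return scale(w, (x, 0.0))  # layout that uses the module once


def net_b(w, x):
    return scale(w, scale(w, (x, 0.0)))  # different layout, same module twice


def joint_loss_and_grad(w, batch):
    """Sum squared-error losses over examples routed through different networks."""
    loss, grad = 0.0, 0.0
    for net, x, y in batch:
        out, dout = net(w, x)
        loss += (out - y) ** 2
        grad += 2.0 * (out - y) * dout  # every network adds to the same gradient
    return loss, grad


def train(batch, w=1.0, lr=0.01, steps=500):
    """Plain gradient descent on the single tied parameter."""
    for _ in range(steps):
        _, g = joint_loss_and_grad(w, batch)
        w -= lr * g
    return w


# Toy data consistent with a true module weight of 2.0 (made up for illustration).
batch = [(net_a, 1.0, 2.0), (net_b, 1.0, 4.0), (net_a, 3.0, 6.0)]
print(train(batch))
```

In a real system an automatic-differentiation framework would do this bookkeeping; the hand-written derivative only keeps the sketch dependency-free.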

At the end of such a training process, what we obtain is not a single deep network but a collection of neural “modules”, each implementing one step of reasoning. When we want to use the trained model on a new question, we can dynamically assemble these modules into a new network structure tailored to that question.

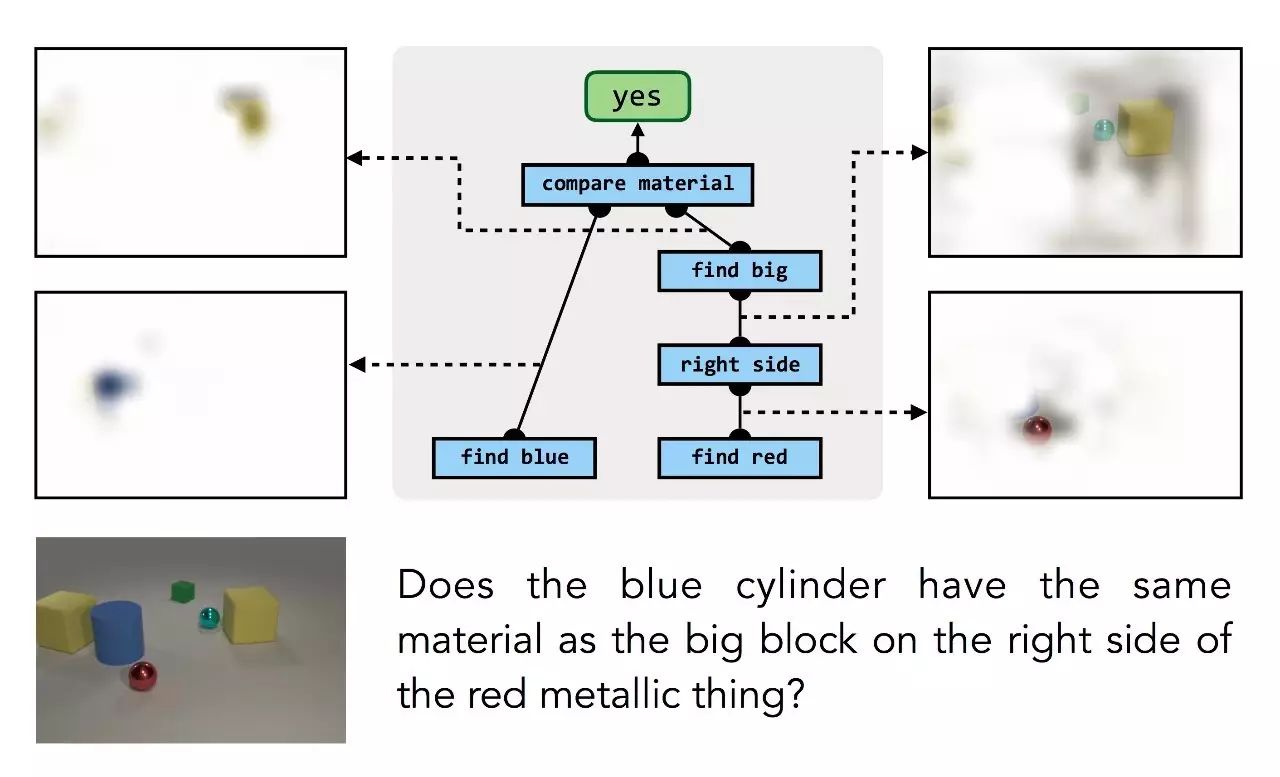
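Answering a new question then amounts to wiring modules together according to its blueprint. The sketch below assumes hand-written symbolic stand-ins for trained neural modules, operating on a made-up toy scene, with attention represented as a set of object indices (real NMN modules produce soft attention maps instead). The `SCENE`, `MODULES`, and `assemble` names are hypothetical; `assemble` recursively turns a layout tree into a single callable network.

```python
# Toy scene (hypothetical): each object has a shape, a color and a size.
SCENE = [
    {"shape": "cylinder", "color": "blue", "size": "large"},
    {"shape": "cube", "color": "red", "size": "large"},
    {"shape": "sphere", "color": "green", "size": "small"},
    {"shape": "cube", "color": "gray", "size": "small"},
]

# Symbolic stand-ins for trained modules; attention is a set of object indices.
MODULES = {
    "find": lambda scene, word: {
        i for i, o in enumerate(scene) if word in (o["shape"], o["color"])
    },
    "same_size": lambda scene, att: {
        j for j, o in enumerate(scene) for i in att
        if j != i and o["size"] == scene[i]["size"]
    },
    "describe_color": lambda scene, att: sorted(scene[i]["color"] for i in att),
    "count": lambda scene, att: len(att),
}


def assemble(layout):
    """Compose module stand-ins into one callable network following a layout tree."""
    name, arg = layout
    if isinstance(arg, tuple):  # inner module: run its input network first
        inner = assemble(arg)
        return lambda scene: MODULES[name](scene, inner(scene))
    return lambda scene: MODULES[name](scene, arg)  # leaf: module takes a text argument


# A question never seen as a whole, built from familiar modules.
network = assemble(("count", ("same_size", ("find", "sphere"))))
print(network(SCENE))
```

The new network reuses `find` and `same_size` unchanged; only the way they are wired together is specific to the question.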

It is worth noting that this process does not require any low-level supervision for individual modules: the model never sees isolated examples of “blue objects” or “to the left of” relations. Modules are learned only inside larger composed structures, with only (question, answer) pairs as supervision. Yet the training process can automatically infer which parts of the structure are responsible for which computations:

Question: Is the blue cylinder made of the same material as the large red metal block on the right?

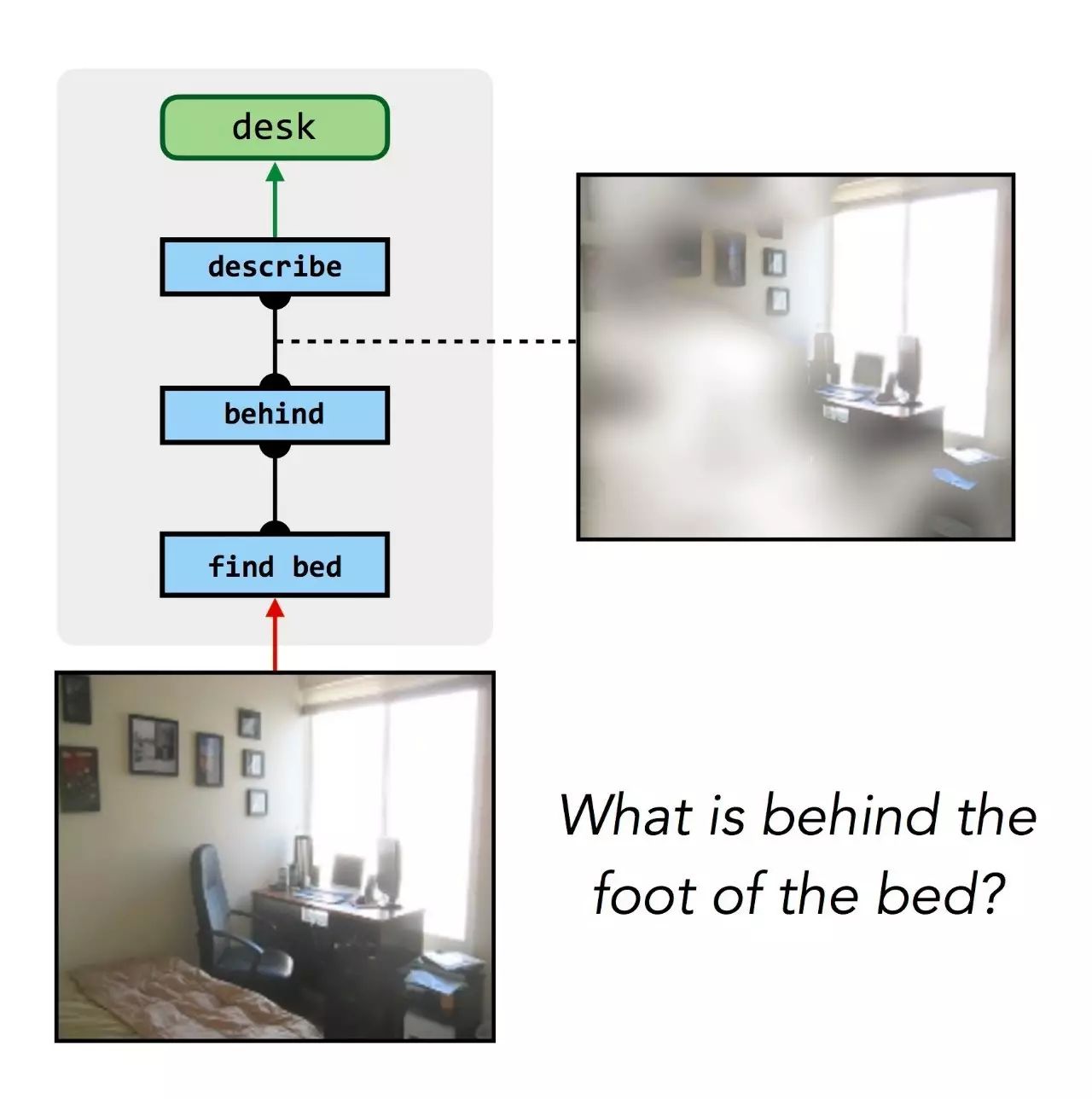
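A toy version of this effect, as a minimal sketch under made-up assumptions: a hypothetical `find_blue` module (logistic attention over one-hot color features) feeds a parameter-free `count` module, and training sees only the final count for each scene, never which objects are blue. The scenes, colors, and hyperparameters are invented for illustration.

```python
import numpy as np

COLORS = ["red", "blue", "green"]  # toy vocabulary (hypothetical)


def features(objects):
    """One-hot color features, one row per object."""
    return np.array([[1.0 if c == color else 0.0 for c in COLORS] for color in objects])


def find_blue(w, objects):
    """Hypothetical find[blue] module: soft attention in (0, 1) for each object."""
    return 1.0 / (1.0 + np.exp(-(features(objects) @ w)))


def count(attention):
    """Parameter-free count module: total attention mass."""
    return attention.sum()


# Supervision is ONLY the answer to "How many blue objects are there?"
SCENES = [["red"], ["blue"], ["green"], ["red", "blue"], ["blue", "green"],
          ["red", "green"], ["red", "blue", "green"]]
ANSWERS = [scene.count("blue") for scene in SCENES]


def train(steps=2000, lr=0.1):
    w = np.zeros(len(COLORS))
    for _ in range(steps):
        grad = np.zeros_like(w)
        for objects, y in zip(SCENES, ANSWERS):
            a = find_blue(w, objects)
            err = count(a) - y
            # Chain rule: d count / d a_i = 1 and d a_i / d w = a_i (1 - a_i) x_i.
            grad += 2.0 * err * (a * (1.0 - a)) @ features(objects)
        w -= lr * grad
    return w


w = train()
print(find_blue(w, ["red", "blue", "green"]))
```

Nothing told the module what “blue” means; its attention ends up on the blue objects because that is the assignment that best explains all of the count answers.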

The same process also works for answering questions about more realistic photos, and even for other knowledge sources like databases:

Questions: What is behind the bed leg? What beaches are there in Florida?
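For a knowledge-base question, the same kind of blueprint runs over database records instead of image regions. The sketch below is a hypothetical illustration: the four-entry knowledge base, the module names `find`, `lookup`, `relate_in` and `and_`, and the exact set operations (where learned modules would produce soft weights) are all assumptions made for clarity.

```python
# Tiny hypothetical knowledge base: entity -> (type, location).
KB = {
    "Daytona Beach": ("beach", "Florida"),
    "Clearwater Beach": ("beach", "Florida"),
    "Venice Beach": ("beach", "California"),
    "Miami": ("city", "Florida"),
}


def find(kind):
    """find[kind]: the set of entities of the given type."""
    return {e for e, (t, _) in KB.items() if t == kind}


def lookup(name):
    """lookup[name]: a set containing one named entity."""
    return {name}


def relate_in(places):
    """relate[in]: entities located in any of the given places."""
    return {e for e, (_, loc) in KB.items() if loc in places}


def and_(a, b):
    """and: entities selected by both inputs."""
    return a & b


# Layout for "What beaches are there in Florida?":
# and(find[beach], relate[in](lookup[Florida]))
answer = and_(find("beach"), relate_in(lookup("Florida")))
print(sorted(answer))
```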

A key ingredient in this process is the collection of “reasoning blueprints” mentioned above. These blueprints tell us how the network for each question should be laid out and how different questions relate to one another. But where do the blueprints come from?

In our initial work on these models, we drew on a surprising connection between the problem of designing question-specific neural networks and the problem of analyzing grammatical structure. Linguists have long observed that the grammar of a question is closely related to the sequence of computational steps needed to answer it. Thanks to recent advances in natural language processing, we can use off-the-shelf syntactic parsers to provide approximate versions of these blueprints automatically.
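One way to picture this mapping is the sketch below, loosely inspired by the idea described above but with hypothetical rules and module names. It assumes a parser has already reduced the question to a simplified S-expression with the head word first, for example `("color", "tie")` for “What color is the tie?”. Leaves become `find` modules, `and` nodes combine their inputs, other inner nodes become `relate` modules, and the root becomes `exists` (for yes/no “is” questions) or `describe`.

```python
def to_layout(sexpr, root=True):
    """Convert a simplified parse (head word first) into a module layout tree."""
    if isinstance(sexpr, str):  # leaf word -> find module
        return ("find[" + sexpr + "]",)
    head, *args = sexpr
    kids = tuple(to_layout(a, root=False) for a in args)
    if root:  # the root decides what kind of answer is produced
        name = "exists" if head == "is" else "describe[" + head + "]"
    elif head == "and":  # conjunction combines attentions
        name = "and"
    else:  # other inner words (e.g. prepositions) re-attend
        name = "relate[" + head + "]"
    return (name,) + kids


print(to_layout(("color", "tie")))
print(to_layout(("is", ("and", "circle", ("next-to", "square")))))
```

Any real parser output would need more rules than these; errors in the parse or in the rules propagate directly into the network structure.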

However, accurately mapping linguistic structure to network structure remains a challenging problem, and the conversion process is prone to errors. In later work, we moved away from linguistic analysis and toward data created by human experts, who annotated a collection of questions directly with idealized reasoning blueprints. By learning to imitate these experts, our model substantially improved the quality of its predictions. Most surprisingly, when we took a model trained to imitate experts and allowed it to explore its own modifications to their predictions, it found better solutions than the experts on a variety of questions.
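A minimal sketch of that two-stage recipe, under clearly stated assumptions: one question, three hypothetical candidate layouts scored by a softmax policy over logits `theta`, and made-up rewards standing in for how often each layout answers correctly. Stage 1 imitates the expert's choice (layout 0) by gradient ascent on its log-probability; stage 2 follows the exact gradient of expected reward, which a REINFORCE-style method would estimate from samples. This illustrates the general idea of imitation followed by reward-driven refinement, not the exact procedure used in the papers.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def imitate(theta, expert, steps=50, lr=0.5):
    """Stage 1 (imitation): gradient ascent on log p(expert layout)."""
    for _ in range(steps):
        p = softmax(theta)
        target = np.zeros_like(p)
        target[expert] = 1.0
        theta = theta + lr * (target - p)  # gradient of log softmax
    return theta


def refine(theta, reward, steps=3000, lr=1.0):
    """Stage 2: ascend expected reward; d/d theta_j = p_j * (R_j - E[R])."""
    for _ in range(steps):
        p = softmax(theta)
        theta = theta + lr * p * (reward - p @ reward)
    return theta


# Hypothetical rewards: the expert labelled layout 0, but layout 2 answers better.
REWARD = np.array([0.6, 0.1, 0.9])
theta_imitated = imitate(np.zeros(3), expert=0)
theta_refined = refine(theta_imitated, REWARD)
print(softmax(theta_imitated).argmax(), softmax(theta_refined).argmax())
```

After stage 1 the policy prefers the expert's layout; after stage 2 it has moved to the layout that earns more reward, which is the toy analogue of a model improving on the blueprints it was taught.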

Despite the many notable successes of deep learning in recent years, several challenges remain, such as few-shot learning and complex reasoning. These are precisely the areas where more structured classical techniques, such as semantic parsing and program induction, really shine. Neural Module Networks combine the best of both worlds: the flexibility and data efficiency of discrete composition, together with the representational power of deep networks. NMNs have already been applied successfully to a number of visual and textual reasoning tasks, and we look forward to applying them to other AI problems.

This article is based on the following papers:

- Neural Module Networks. Jacob Andreas, Marcus Rohrbach, Trevor Darrell and Dan Klein. CVPR 2016. (arXiv)
- Learning to Compose Neural Networks for Question Answering. Jacob Andreas, Marcus Rohrbach, Trevor Darrell and Dan Klein. NAACL 2016. (arXiv)
- Modeling Relationships in Referential Expressions with Compositional Modular Networks. Ronghang Hu, Marcus Rohrbach, Jacob Andreas, Trevor Darrell and Kate Saenko. CVPR 2017. (arXiv)

Original link: http://bair.berkeley.edu/blog/2017/06/20/learning-to-reason-with-neural-module-networks/


Co-authors: Ronghang Hu, Marcus Rohrbach, Trevor Darrell, Dan Klein & Kate Saenko