New Intelligence Compilation

Source: arXiv

Editor: Xiao Qin

【New Intelligence Guide】Traditional memory architectures struggle with relational reasoning. In this paper, DeepMind and University College London propose the Relational Memory Core (RMC), a memory module that can perform relational reasoning across sequential information and achieves state-of-the-art results on the WikiText-103, Project Gutenberg, and GigaWord datasets.

Paper: https://arxiv.org/pdf/1806.01822v1.pdf

Memory-based neural networks model sequential data by leveraging their ability to remember information over long periods. However, it is unclear whether they can also perform complex relational reasoning with the information they remember.

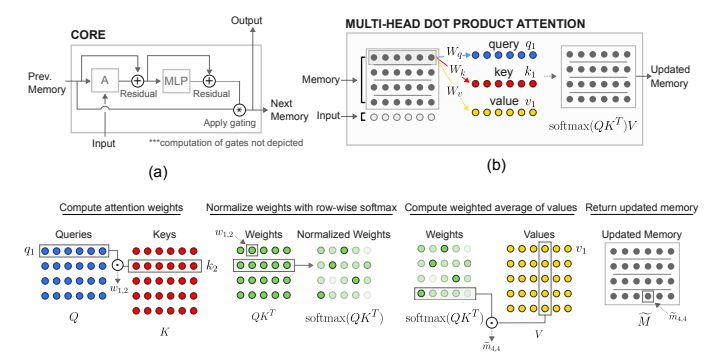

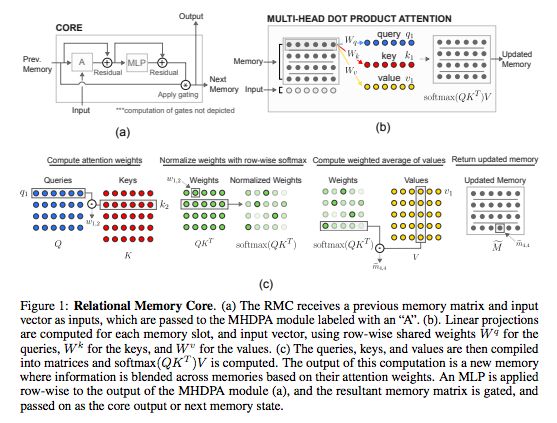

In this paper, researchers from DeepMind and University College London first confirm the intuition that standard memory architectures may struggle with tasks that involve relational reasoning. They then address this deficit with a new memory module, the Relational Memory Core (RMC), which uses multi-head dot product attention to let memories interact with one another.

Finally, the researchers test the RMC on a range of tasks that may benefit from more capable relational reasoning across sequential information. It shows large gains in reinforcement learning (e.g., Mini Pacman), program evaluation, and language modeling, achieving state-of-the-art results on the WikiText-103, Project Gutenberg, and GigaWord datasets.

Humans use sophisticated memory systems to access and reason about important information, regardless of when that information was originally perceived. In neural network research, many successful methods for modeling sequential data also use memory systems, such as LSTMs and memory-augmented neural networks. By augmenting memory capacity, bounding computational cost over time, and addressing vanishing gradients, these networks learn to correlate events across time and become proficient at storing and retrieving information.

Here, we propose that it is fruitful to consider memory interactions alongside storage and retrieval. While current models can learn to partition and relate distributed, vectorized memories, they are not explicitly biased toward doing so. We hypothesize that such a bias may allow a model to better understand how memories are related, and thereby to perform better relational reasoning.

First, we highlight the importance of relational reasoning over sequential information by developing a demonstration task that current models find difficult. Using the new Relational Memory Core (RMC), which lets memories interact through multi-head dot product attention, we then solve and analyze this task. Finally, we apply the RMC to a range of tasks that may benefit from more explicit memory-memory interactions, and hence from an increased capacity for relational reasoning over time: partially observed reinforcement learning tasks, program evaluation, and language modeling on the WikiText-103, Project Gutenberg, and GigaWord datasets.

We consider relational reasoning to be the process of understanding how entities are connected and using that understanding to accomplish higher-order goals. For example, sorting the distances between various trees and park benches requires comparing the relations (distances) between entities (trees and benches); considering only the attributes (positions) of each entity in isolation is not enough to arrive at a solution.

Since we can often define quite flexibly what constitutes an “entity” or a “relation,” we can imagine a spectrum of neural network inductive biases that can be expressed in the language of relational reasoning. For example, a convolutional kernel can be viewed as computing a relation (a linear combination) over the entities (pixels) within its receptive field.
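To make the convolution example concrete, here is a minimal NumPy sketch (an illustration, not code from the paper) in which each output value is a weighted sum of the pixels inside one receptive field. The function name `conv2d_valid` and the difference kernel used below are hypothetical choices; like most deep learning libraries, the sketch computes a cross-correlation (the kernel is not flipped).

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with stride 1 and no padding.

    Each output value is a fixed linear combination of the pixels
    (entities) inside one receptive field, i.e. a local "relation".
    """
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            patch = image[i:i + kh, j:j + kw]   # entities in this receptive field
            out[i, j] = np.sum(patch * kernel)  # their weighted (linear) combination
    return out

# A hypothetical difference kernel: each output compares a pixel with its right neighbour.
image = np.array([[0.0, 1.0, 3.0], [2.0, 2.0, 5.0]])
diffs = conv2d_valid(image, np.array([[1.0, -1.0]]))
```

With the kernel `[[1, -1]]`, every output is the difference between two neighbouring pixels, about the simplest relation a kernel can compute.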

In the temporal domain, relational reasoning can include the ability to compare and contrast information from different points in time. Here, attention mechanisms implicitly perform a form of relational reasoning: if previous hidden states are interpreted as entities, then using attention to compute a weighted sum of them helps remove the locality bias present in vanilla RNNs.
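A minimal sketch of this view, assuming plain scaled dot-product attention in NumPy (the names `attend` and `past_states` are hypothetical): a query scores every earlier hidden state, and the output is their softmax-weighted sum. Nothing in the computation depends on how far back a state lies, which is the sense in which attention sidesteps the recency (locality) bias of a vanilla RNN.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax over the last axis."""
    z = x - x.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def attend(query, past_states):
    """Scaled dot-product attention of one query over earlier hidden states.

    query:       (d,) vector for the current time step.
    past_states: (T, d) array, one row per previous time step (the "entities").
    Returns (weights, context) with shapes (T,) and (d,).
    """
    scores = past_states @ query / np.sqrt(query.shape[-1])  # similarity to each past state
    weights = softmax(scores)
    context = weights @ past_states                          # weighted sum of the entities
    return weights, context

# The oldest state (t = 0) matches the query, so it dominates the result
# even though two more recent states lie between it and the query.
past = np.array([[4.0, 0.0], [0.0, 0.0], [0.0, 4.0]])
weights, context = attend(np.array([1.0, 0.0]), past)
```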

Since current architectures do solve complex temporal tasks, they must have some capacity for temporal relational reasoning. However, it is unclear whether their inductive biases are limiting, and whether such limitations can be exposed by tasks that demand particular types of temporal relational reasoning.

Our guiding design principle is to provide an architectural backbone upon which the model can learn to partition information and to compute interactions between the partitioned information. To achieve this, we assemble building blocks from LSTMs, memory-augmented neural networks, and non-local networks (in particular, the Transformer seq2seq model). As in memory-augmented architectures, we consider a fixed set of memory slots; however, we allow the slots to interact with one another through an attention mechanism. In contrast to previous work, we apply attention between memories at a single time step, rather than across all previous representations computed from all previous observations.
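The NumPy sketch below shows one reading of this idea, loosely following the paper’s description of the RMC: the memory slots supply the queries, while keys and values come from the slots plus the new input, so the slots attend to each other and to the input within a single time step. It is a hypothetical, simplified illustration rather than the authors’ implementation: the weights are random placeholders instead of learned parameters, the input is assumed to be already projected to the slot size, and the LSTM-style gating the RMC uses when writing to memory is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

def layer_norm(x, eps=1e-6):
    """Normalize each row to zero mean and unit variance (no learned gain/bias)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def softmax(x):
    z = x - x.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

class RelationalMemorySketch:
    """Hypothetical, simplified RMC-style memory update (no gating, untrained weights)."""

    def __init__(self, num_slots, slot_size, num_heads):
        assert slot_size % num_heads == 0, "slot_size must divide evenly into heads"
        self.n, self.d, self.h = num_slots, slot_size, num_heads
        self.dk = slot_size // num_heads
        scale = 1.0 / np.sqrt(slot_size)
        # Placeholder projections for queries, keys, values, output, and the MLP.
        self.wq, self.wk, self.wv, self.wo, self.w1, self.w2 = [
            rng.normal(0.0, scale, (slot_size, slot_size)) for _ in range(6)
        ]

    def _split_heads(self, x):
        # (rows, d) -> (heads, rows, dk)
        return x.reshape(x.shape[0], self.h, self.dk).transpose(1, 0, 2)

    def step(self, memory, x):
        """memory: (n, d) slots; x: (d,) input already projected to slot size."""
        mem_and_input = np.vstack([memory, x[None, :]])        # (n + 1, d)
        q = self._split_heads(memory @ self.wq)                 # queries: memory slots only
        k = self._split_heads(mem_and_input @ self.wk)          # keys/values: slots + input
        v = self._split_heads(mem_and_input @ self.wv)
        scores = q @ k.transpose(0, 2, 1) / np.sqrt(self.dk)    # (h, n, n + 1)
        heads = softmax(scores) @ v                             # (h, n, dk)
        attended = heads.transpose(1, 0, 2).reshape(self.n, self.d) @ self.wo
        m = layer_norm(memory + attended)                       # residual connection + norm
        mlp = np.maximum(0.0, m @ self.w1) @ self.w2            # row-wise two-layer ReLU MLP
        return layer_norm(m + mlp)                              # second residual + norm
```

Every slot is updated in parallel, and the memory keeps a fixed size (n × d) however long the sequence runs, which is the contrast with attending over all past representations.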

Figure 1: Relational Memory Core

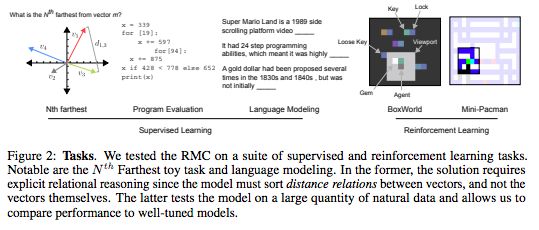

Figure 2: Tasks

We test the RMC on a suite of supervised and reinforcement learning tasks. Two of them deserve particular mention: the Nᵗʰ Farthest task and language modeling. In the former, the solution requires explicit relational reasoning, because the model must sort the distances between vectors (the relations), not the vectors themselves. The latter tests the model on a large amount of natural data and allows us to compare performance against well-tuned models.

This section briefly introduces the experimental tasks to which the RMC is applied. For details on each task and on the model’s hyperparameter settings, please refer to the original paper.

Illustrative Supervised Tasks

Nᵗʰ Farthest

The Nᵗʰ Farthest task is designed to stress the capacity for relational reasoning across time. The input is a sequence of randomly sampled vectors, and the goal is to answer a question of the form: “What is the nth farthest vector from vector m?” The vector values, their IDs, n, and m are all randomly sampled for each sequence. We emphasize that the model must sort the distances between vectors, not the vectors themselves.
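To see the task concretely, the following Python sketch samples one instance and computes its answer by brute force. The sizes (8 vectors of dimension 16), the uniform sampling range, and the function names are illustrative assumptions rather than the paper’s exact protocol. The answer depends only on distances to vector m, i.e. on relations, which is why sorting the raw vectors would not help.

```python
import numpy as np

def make_nth_farthest_example(rng, num_vectors=8, dim=16):
    """Sample one hypothetical Nth Farthest instance: vectors, their IDs, n, and m."""
    vectors = rng.uniform(-1.0, 1.0, size=(num_vectors, dim))
    ids = rng.permutation(num_vectors)          # a unique random ID for each vector
    n = int(rng.integers(1, num_vectors + 1))   # 1-based rank; n = 1 is the farthest
    m = int(rng.choice(ids))                    # ID of the reference vector
    return vectors, ids, n, m

def nth_farthest_id(vectors, ids, n, m):
    """Return the ID of the nth farthest vector from the vector whose ID is m."""
    ref = vectors[int(np.flatnonzero(ids == m)[0])]
    dists = np.linalg.norm(vectors - ref, axis=1)  # the relations: distance to vector m
    order = np.argsort(-dists, kind="stable")      # indices sorted farthest first
    return int(ids[order[n - 1]])

rng = np.random.default_rng(0)
vectors, ids, n, m = make_nth_farthest_example(rng)
answer = nth_farthest_id(vectors, ids, n, m)
```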

Program Evaluation

The Learning to Execute (LTE) dataset consists of algorithmic snippets written in a Turing-complete pseudocode programming language, grouped into three categories: addition, control, and complete programs. The input is a sequence of characters over an alphanumeric vocabulary representing such a snippet, and the target is a sequence of numeric characters: the output of executing the given program. Given that these snippets involve symbolic manipulation of variables, we felt they could strain a model’s capacity for relational reasoning; since symbolic operators can be interpreted as defining a relation over their operands, successful learning could reflect an understanding of that relation. To also assess the model on classic sequence tasks, we evaluated it on memorization tasks, in which the output is simply a permutation of the input rather than the result of evaluating a set of operational instructions.
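To illustrate the input/target format only, here is a hypothetical Python generator for two toy pairs in the spirit of LTE: an addition-style snippet whose target is the printed result, and a memorization pair whose target is a permutation of the input (reversal is chosen here as one example permutation). Real LTE programs are longer and also include control flow; the snippet syntax and function names below are assumptions.

```python
import random

def addition_example(rng, digits=4):
    """Hypothetical LTE-style 'addition' pair: program text -> printed result."""
    low, high = 10 ** (digits - 1), 10 ** digits - 1
    a, b = rng.randint(low, high), rng.randint(low, high)
    program = f"print({a}+{b})"   # input: the characters of the code snippet
    target = str(a + b)           # target: the digits the snippet would print
    return program, target

def memorization_example(rng, length=6):
    """Hypothetical memorization pair: the target is the input, reversed."""
    source = "".join(rng.choice("0123456789") for _ in range(length))
    return source, source[::-1]

rng = random.Random(0)
print(addition_example(rng))
print(memorization_example(rng))
```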

Reinforcement Learning

Mini Pacman with Viewport

We follow the Mini Pacman formulation from prior work. In brief, the agent navigates a maze to collect food while being chased by ghosts. We implement this task with a viewport: a 5×5 window surrounding the agent that constitutes its perceptual input. The task is therefore partially observable: the agent must learn to model the ghosts’ dynamics in memory and plan its navigation accordingly, while also remembering which food has already been collected. This task requires relational reasoning in memory space.
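The viewport can be sketched as a crop of the full maze centred on the agent. This NumPy example is an illustrative assumption, not the paper’s environment code: the grid encoding, the padding value `WALL` for cells outside the maze, and the function name are hypothetical.

```python
import numpy as np

WALL = 1  # hypothetical cell code used for everything outside the maze

def viewport(grid, agent_rc, size=5, pad_value=WALL):
    """Return the size x size window of `grid` centred on the agent.

    Cells beyond the maze edge are filled with `pad_value`, so the
    observation has the same shape wherever the agent stands.
    """
    r = size // 2
    padded = np.pad(grid, r, mode="constant", constant_values=pad_value)
    row, col = agent_rc
    # After padding by r, the agent at (row, col) sits at (row + r, col + r),
    # so its centred window starts at (row, col) in padded coordinates.
    return padded[row:row + size, col:col + size]
```

Because everything outside the 5×5 window is invisible, a ghost that leaves the window can only be tracked through memory.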

Language Modeling

Finally, we investigate the task of word-based language modeling.

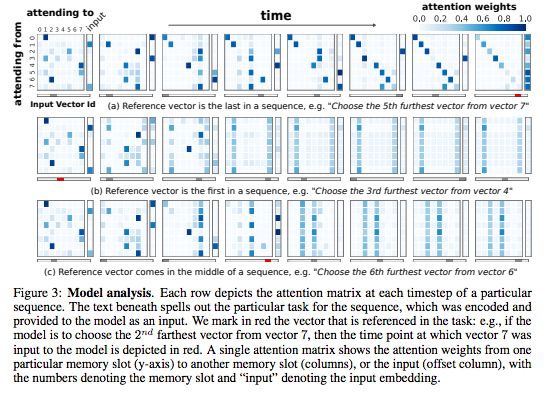

Figure 3: Model Analysis

Each row depicts the attention matrix at each time step for a particular sequence. The text beneath spells out the particular task for that sequence, which was encoded and provided to the model as input. The vector referenced in the task is marked in red.

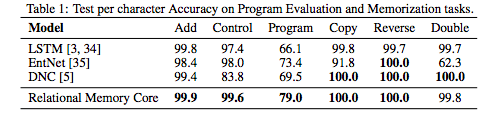

Table 1: Per-character test accuracy on program evaluation and memorization tasks.

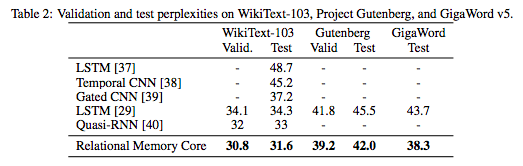

Table 2: Validation and test perplexity on the WikiText-103, Project Gutenberg, and GigaWord v5 datasets.
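Both tables report standard metrics. A minimal sketch of how they are conventionally computed follows; the paper’s exact evaluation details (tokenization, how outputs of the wrong length are scored) are not described here, so the handling below is an assumption. Perplexity is the exponential of the average per-token negative log-likelihood, so lower is better.

```python
import math

def per_character_accuracy(predicted, target):
    """Fraction of target positions whose character is predicted exactly."""
    if not target:
        raise ValueError("target must be non-empty")
    hits = sum(p == t for p, t in zip(predicted, target))
    return hits / len(target)  # missing trailing characters count as errors

def perplexity(token_log_probs):
    """exp of the mean negative log-likelihood per token (natural log)."""
    nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(nll)
```

A model that assigns probability 0.25 to every correct word has a perplexity of 4, as if it were choosing uniformly among four candidates at each step.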

Overall, our results show that explicitly modeling memory interactions improves performance on a reinforcement learning task, as well as on program evaluation, comparative reasoning, and language modeling, demonstrating the value of instilling a capacity for relational reasoning in recurrent neural networks.
