For the past two years, many executives in the cloud computing and chip industries have predicted that Nvidia’s grip on the AI server chip market would loosen, but that has yet to happen.

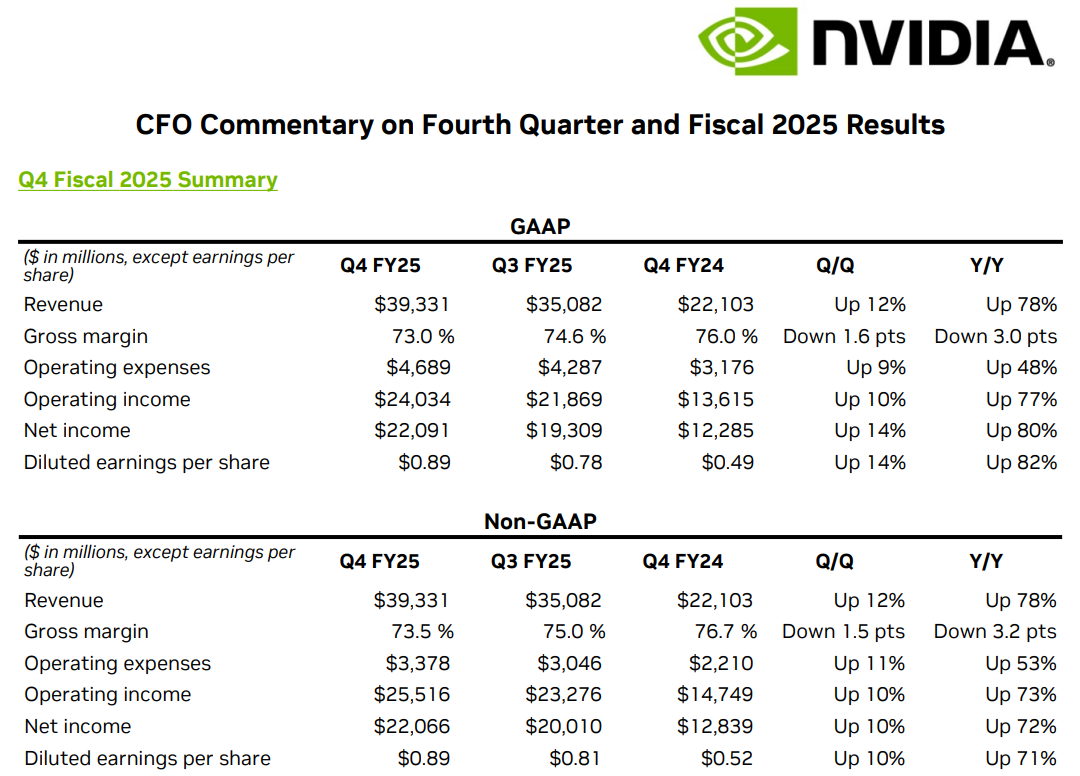

On February 27 (Beijing time), Nvidia released its financial results for the fourth quarter of fiscal year 2025, once again beating Wall Street expectations. Fourth-quarter revenue reached a record $39.3 billion, up 12% from the previous quarter and 78% year-over-year. Net profit rose to $22.091 billion, ahead of the market expectation of $19.611 billion. For the full fiscal year, revenue grew 142% to a record $115.2 billion.

(Source: Nvidia)

The data center business remains the backbone of Nvidia’s revenue, buoyed by surging demand for AI training and inference. Fourth-quarter data center revenue set a record of $35.6 billion, up 16% quarter-over-quarter and 93% year-over-year, driven mainly by strong demand for the Blackwell computing platform and continued growth of the H200.

Note, however, that while quarterly revenue keeps climbing, year-over-year growth is slowing. Nvidia’s fourth-quarter year-over-year growth of 78% was the lowest in seven quarters, a sign that the road ahead may not be as easy as it once was.

Rene Haas, CEO of Arm Holdings and a member of SoftBank’s board, believes that although Nvidia dominates the AI chip market today, it is only a matter of time before other AI chips see their sales grow. In an interview with The Information, Haas shared his views on the competitive landscape of the AI chip market.

“As many large AI developers and cloud service providers actively develop alternatives to Nvidia chips, specialized inference chips will gradually emerge,” Haas stated.

Haas is not yet sure which challengers are most likely to succeed. He believes that chip startups, cloud giants such as Amazon, newcomers such as OpenAI, and traditional chipmakers all have a chance. His reasoning is that companies like OpenAI will eventually spend more on running existing AI models (i.e., inference) than on training new ones, while Nvidia’s overwhelming advantage lies mainly in training. Inference tasks, which are relatively less computationally intensive, leave room for Nvidia’s competitors.

Below is the interview:

The Information (hereinafter “TI”): Last week, we reported that OpenAI expects its spending on inference computing (running AI models) to exceed its spending on training models by 2030. Does that match your expectations?

Image丨Related report (Source: The Information)

Haas: If you accept that at some point the returns from training new models will flatten out, then the main work will shift to reinforcement learning rather than building entirely new frontier models. Whether that turning point is five years or ten years away is open to debate. For now, let’s assume OpenAI’s prediction is correct and that there will be a turning point at which inference becomes the overwhelmingly dominant workload.

We are still far from AGI (artificial general intelligence). This morning I saw reports about how capable AI coding assistants have become and how they can pass certain math tests, but can they really write code on par with a mid-level engineer? When AI reaches that level (everyone defines AGI differently), that is when we will know we have made a real breakthrough. Clearly we are not there yet, which is why people are investing so heavily in training.

So whether or not the turning point comes in 2030, I believe the curve will look roughly like this.

TI: In the long run, will companies run AI inference on Nvidia GPUs?

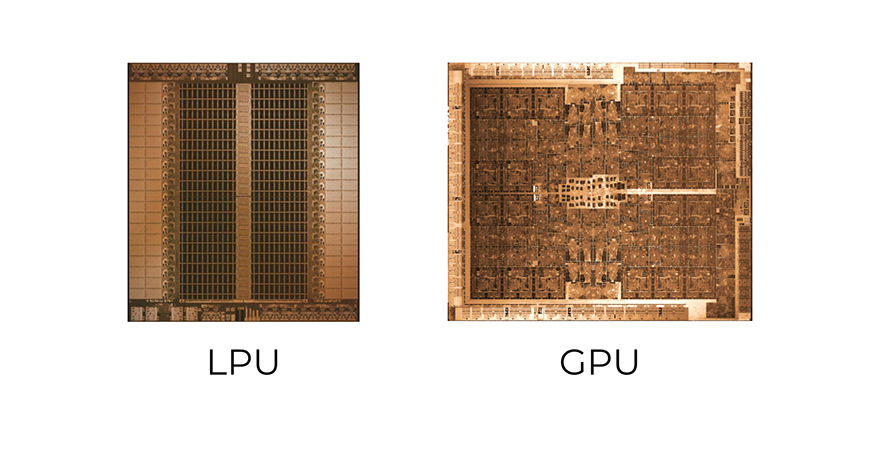

Haas: It is still too early to tell, because the vast majority of hardware deployed today is Nvidia’s. Inference at the large cloud providers runs on Nvidia hardware almost by default. Smaller chip companies such as Cerebras and Groq have had some success in inference for small and medium-sized data centers, but some customers choose these alternatives simply because they cannot get Nvidia GPUs.

Image丨 Comparison of Groq’s AI chip LPU with Nvidia GPUs (Source: Groq)

The market is still in its early stages. Many companies are thinking: “I have already invested heavily in GPUs for training, the data center hardware is a sunk cost, and I am not sure it is worth switching to another company’s chips for inference.” But I believe that over the next few years, as models keep growing, more and more investment will flow into dedicated training GPUs or other training solutions, leading to a clear segmentation of the market.

Dedicated inference products will gradually emerge. Major cloud service giants are developing their own chips. I cannot disclose confidential information, but we have in-depth knowledge of these products, and in many scenarios, CPUs can play a significant role.

TI: Who can seize market share from Nvidia in the inference market?

Haas: It is currently difficult to predict who will win. There is no doubt that Nvidia is an incredible company with a significant advantage in deployed infrastructure.

On the other hand, chip startups keep innovating, whether in optical fiber substrates, co-packaged optics, novel memory configurations, or in-memory computing. The semiconductor industry is seeing a level of innovation it has not seen in years.

TI: What is the outlook for custom AI chips from large cloud service providers?

Haas: This path is indeed challenging. We have a lot of experience here, with general-purpose chips such as Amazon’s Graviton (a chip for non-AI workloads) as well as with Microsoft’s and Google’s AI chips. Graviton has won considerable market acceptance, but Microsoft’s and Google’s AI chips have not yet been widely adopted, and that will take a long time. The biggest challenge is that AI models are evolving far faster than hardware can iterate, which makes it extremely difficult for cloud providers to develop their own chips.

TI: What are your thoughts on the chips being developed by OpenAI?

Haas: I cannot comment on that. As you know, if these companies are developing custom chips, they are likely working with Arm.

TI: There are reports that Arm will launch its own AI chip this year. Is that true?

Haas: I can assure you that if we are preparing to do so, you will be the first to know.

TI: What impact does Elon Musk’s xAI have on AI infrastructure?

Haas: Perhaps most important of all, Musk is building Grok at a very rapid pace. I believe this puts tremendous pressure on the entire ecosystem.

Image丨 The Colossus supercomputer built by xAI in just eight months (Source: xAI)

TI: Will there be more projects like Stargate in the future?

Haas: I am not sure whether SoftBank can invest in more projects like Stargate, but as an idea, that is a good question. Masayoshi Son recognized early on that you need funding, but you also need other resources. What is less well known is that SoftBank has a large energy business that provides significant services to tech giants such as Google and Microsoft.

SoftBank also brings many unique resources, including Arm and numerous technology partners. These are the key ingredients for success. It is difficult to succeed with financial backing alone, without technical support. I believe others will try, but pulling all these resources together is no easy task.

TI: Will AI inference primarily run in data centers or on edge devices?

Haas: It is not out of the question for edge devices to take on important AI tasks. I believe the future will be hybrid, with some work running in the cloud and some executed locally. From a privacy and security perspective, there are certain tasks you would rather process locally. Smaller devices, such as headphones, have tightly constrained power budgets, so AI algorithms and the related computation must become more efficient.

TI: What type of device is best suited for running AI?

Haas: Phones are already very efficient products with rich functionality. If the past decade of phones has taught us one thing, it is that people do not like to carry multiple devices that do not significantly improve their experience.

So it has to be a product that enhances the existing experience. Could it be smart clothing? It is possible. Smart glasses are also developing rapidly. The key is that the experience has to feel natural. I can imagine accessories that add something to existing devices, but I have yet to see a “must-have” AI device.

References:
1. https://www.theinformation.com/articles/why-arms-ceo-sees-room-for-nvidia-competitors?rc=a4cwro

Operations/Layout: He Chenlong

01/ Can a “shot” to old batteries repair them without damage? A Fudan team uses AI to design organic lithium carrier molecules, hoping to solve the problem of large-scale battery disposal from the source.
02/ What happened in the past month as Trump destroyed the American research system?
03/ Researchers at Hong Kong University of Science and Technology develop a liquid crystal elastomer that can lift objects weighing 450 times its own weight, which can be used for artificial muscles and bionic machines.
04/ Scientists achieve coordinated movement of micro-nano robot swarms, laying the foundation for large-scale applications of robotic swarms.
05/ The Peking University team develops the first electromagnetic space-embodied intelligent agent, promoting human-computer interaction in the electromagnetic-cyber space, capable of monitoring vital signs.
