AI Agents have recently become extremely popular.

Sam Altman once said of the future of AI: “Today’s AI models are the ‘dumbest’ they will ever be; they will only become smarter in the future!” At the AI Ascent 2024 conference, Andrew Ng was equally enthusiastic: “This is the golden age of AI development, where generative AI and AI Agents will fundamentally change the way we work!” Remarks like these from industry leaders suggest that the deep integration of AI into human life and work is accelerating.

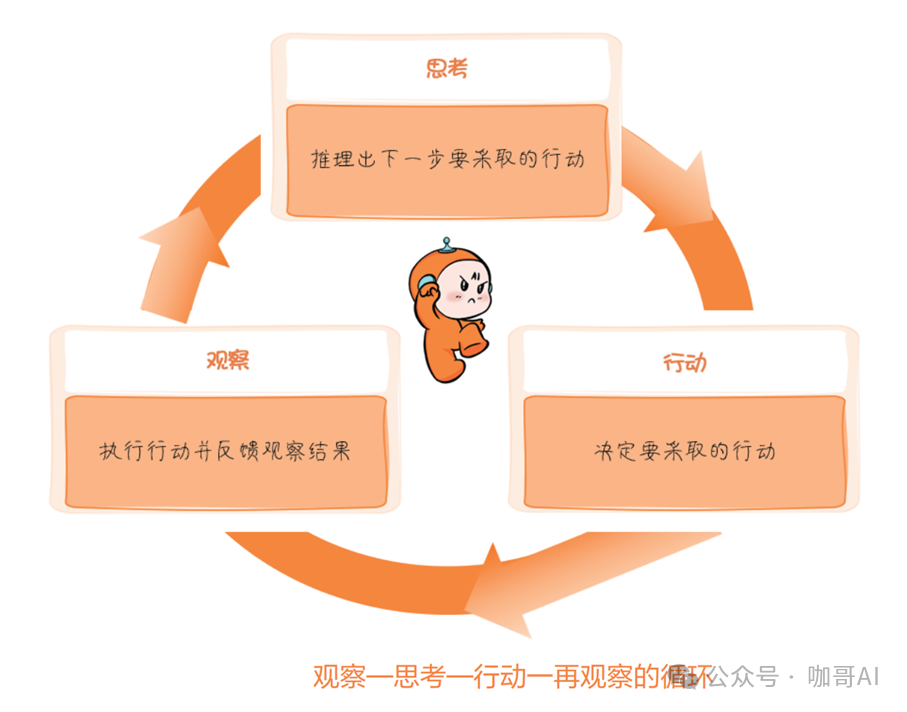

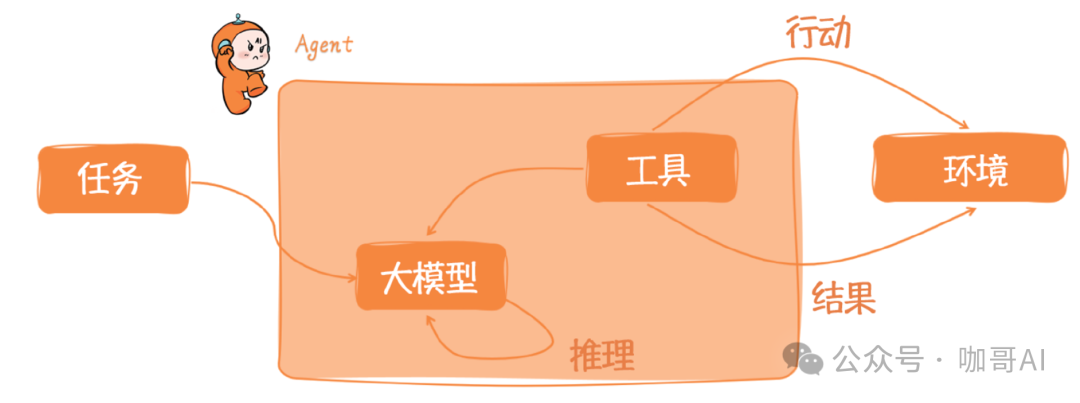

What makes an Agent an Agent is its ability to make judgments, which in turn allows it to make decisions.

So where does this ability to judge come from? It comes from the knowledge distilled from vast amounts of human language. Our natural-language corpus records that in scenario A things should be done one way, and in scenario B another. That is why large language models can also make judgments, and this is the foundation that allows them to become Agents.

A cute Agent

However, the reality falls short of the vision. An article from the WeChat account “Product Sister” noted that Agent developers candidly admit they are all feeling their way forward: Agent development currently lacks systematic theoretical guidance and strong examples to learn from and emulate.

So, in the era of large-model application development, how should the Agents everyone is talking about be designed and implemented? In this article, Huang Jia, best-selling author of “Hands-on AI Agent” and “GPT Illustrated”, gives a practical, comprehensive analysis of how to understand and implement 7 major design patterns.

4 Design Patterns of Agent Cognitive Frameworks

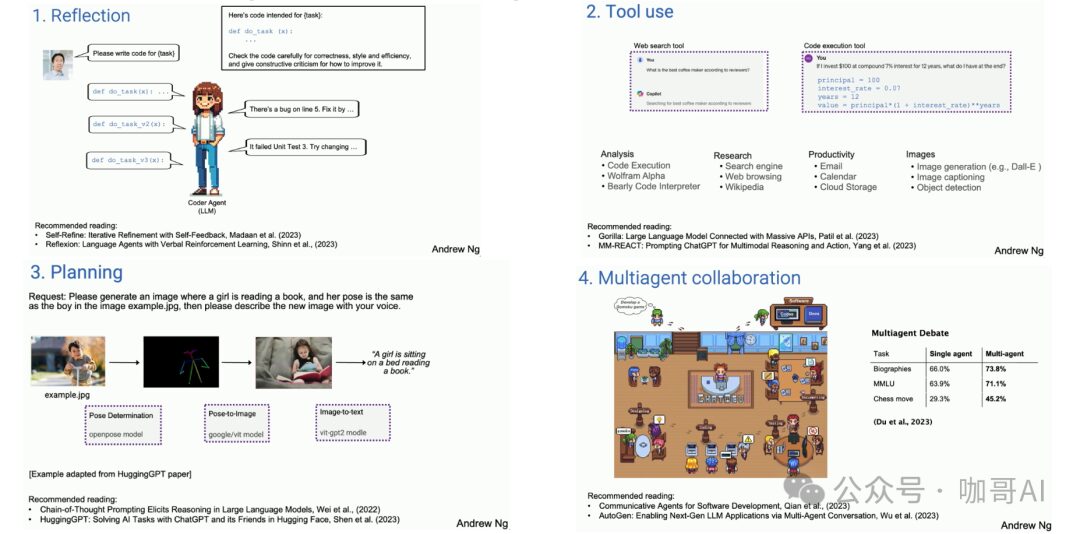

At Sequoia Capital’s AI Summit (AI Ascent), Professor Andrew Ng presented his four categories of design patterns for AI Agent cognitive frameworks: Reflection, Tool Use, Planning, and Multi-Agent Collaboration.

Professor Andrew Ng proposed four design patterns for the Agent cognitive framework.

The four basic design patterns are:

· Reflection: The Agent improves its decisions by learning from interactions and reflecting on its own outputs.

· Tool Use: The Agent invokes one or more tools to complete tasks.

· Planning: The Agent plans a sequence of action steps to achieve its goal.

· Multi-Agent Collaboration: Multiple Agents collaborate to complete a task.

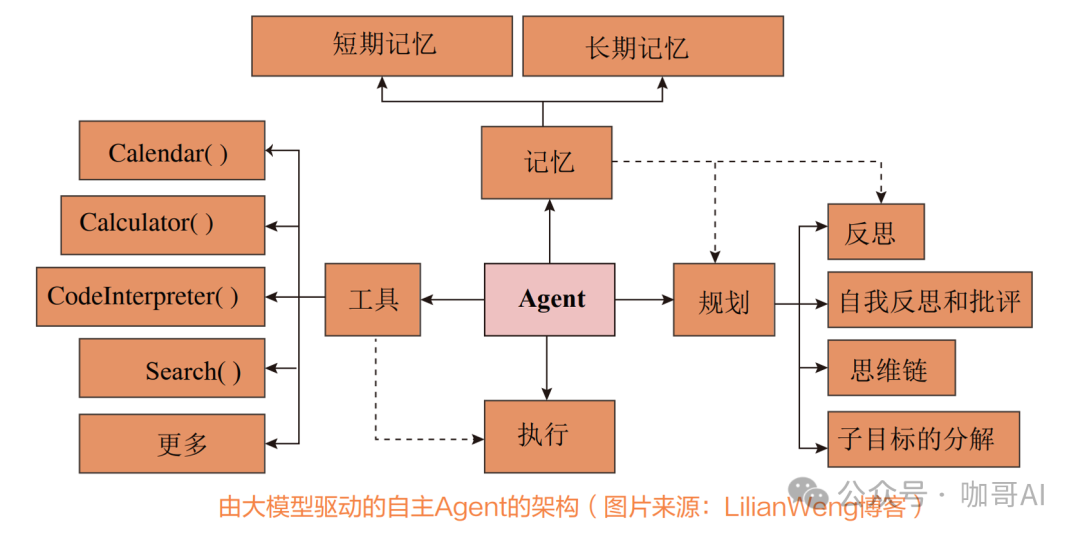

Technical Architecture of Agent Cognitive Framework


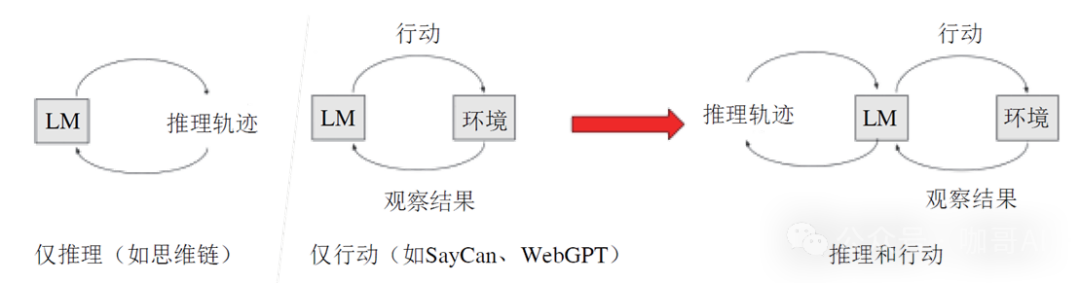

Planning: To execute complex tasks effectively, the Agent needs planning capabilities (which also cover decision-making). This involves subgoal decomposition, chain of thought, self-criticism, and reflection on past actions.

Memory: This includes both short-term and long-term memory. Short-term memory corresponds to in-context learning and is part of prompt engineering, while long-term memory covers retaining and recalling information over long periods, usually through an external vector store with fast retrieval.
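The split between short-term and long-term memory can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `ShortTermMemory` and `LongTermMemory` classes are hypothetical, and a toy bag-of-words vector stands in for a real embedding model and vector database.

```python
import math
from collections import Counter, deque


def embed(text: str) -> Counter:
    # Toy "embedding" (assumption): a bag-of-words count vector.
    # A real Agent would call an embedding model instead.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[k] * b[k] for k in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ShortTermMemory:
    """Hypothetical sliding window of recent messages placed directly in the prompt."""

    def __init__(self, max_turns: int = 4):
        self.turns = deque(maxlen=max_turns)  # oldest turns drop out automatically

    def add(self, role: str, content: str) -> None:
        self.turns.append({"role": role, "content": content})

    def as_messages(self) -> list:
        return list(self.turns)


class LongTermMemory:
    """Hypothetical in-memory stand-in for an external vector store."""

    def __init__(self):
        self.items = []  # list of (vector, text) pairs

    def store(self, text: str) -> None:
        self.items.append((embed(text), text))

    def retrieve(self, query: str, k: int = 1) -> list:
        # Rank stored texts by similarity to the query and return the top k.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


short_term = ShortTermMemory(max_turns=2)
for i in range(3):
    short_term.add("user", f"message {i}")

long_term = LongTermMemory()
long_term.store("The user prefers vegetarian restaurants")
long_term.store("The user's meeting is on Friday at 3 pm")

print(short_term.as_messages())
print(long_term.retrieve("which restaurants does the user like"))
```

Swapping `embed` for a real embedding model and `LongTermMemory` for a vector database would keep the same shape: recent turns go into the prompt as-is, while older facts are fetched by similarity when they become relevant.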

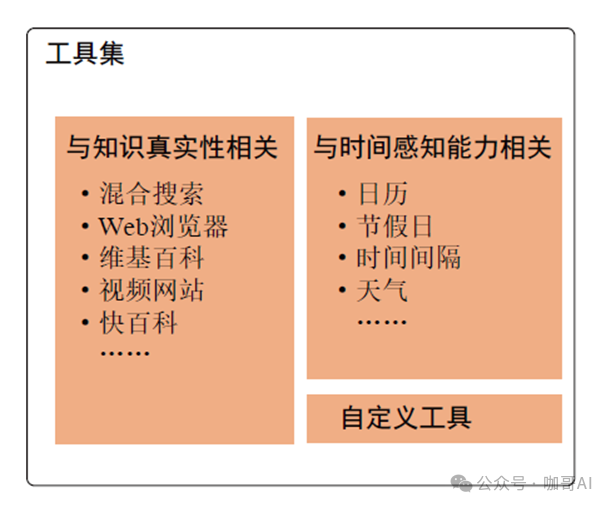

Tools: These include the various tools the Agent may invoke, such as calendars, calculators, code interpreters, and search. Because a large model’s internal capabilities and knowledge boundaries are essentially fixed once pre-training is complete and are hard to expand, these tools are especially important: they extend the Agent’s capabilities and let it perform tasks beyond its core functions.

Action: The Agent executes specific actions based on planning and memory. This may include interacting with the external world or completing a task by invoking tools.
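To make the Tools and Action components concrete, here is a minimal sketch of a tool registry and an action step. The tool names (`calculator`, `calendar`), the `Action` structure, and the hard-coded plan are all assumptions for illustration; in a real Agent the plan would come from the model’s Planning step.

```python
import ast
import operator
from dataclasses import dataclass
from datetime import date
from typing import Callable, Dict, List

# Arithmetic operators the hypothetical calculator tool accepts
# (a small AST walker avoids calling eval() on model output).
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _eval_node(node):
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval_node(node.left), _eval_node(node.right))
    raise ValueError("unsupported expression")


def calculator_tool(expression: str) -> str:
    return str(_eval_node(ast.parse(expression, mode="eval").body))


def calendar_tool(_: str) -> str:
    # Hypothetical calendar tool: just reports today's date.
    return date.today().isoformat()


TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": calculator_tool,
    "calendar": calendar_tool,
}


@dataclass
class Action:
    tool: str
    tool_input: str


def execute(plan: List[Action]) -> List[str]:
    """Action step: run each planned tool call and collect the observations."""
    observations = []
    for action in plan:
        tool = TOOLS.get(action.tool)
        if tool is None:
            observations.append(f"Unknown tool: {action.tool}")
        else:
            observations.append(tool(action.tool_input))
    return observations


# A plan as it might come out of the Planning step (hard-coded here).
plan = [Action("calculator", "10000 / 50000"), Action("search", "credit rating")]
print(execute(plan))  # ['0.2', 'Unknown tool: search']
```

Returning an "unknown tool" observation instead of raising lets the Agent see its own mistake and re-plan, which is how the Action output feeds back into Planning.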

7 Specific Implementations of Agent Cognitive Frameworks



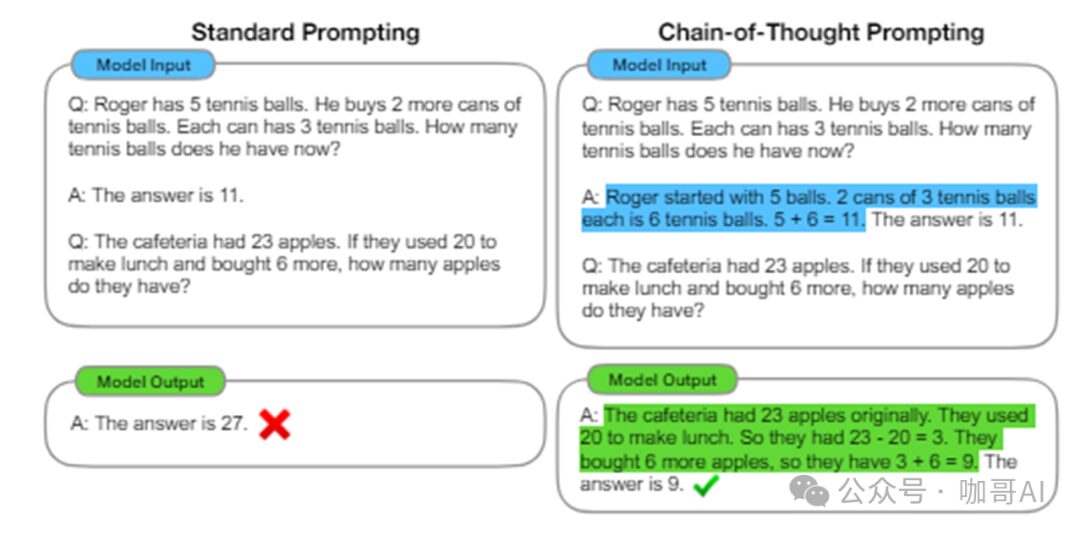

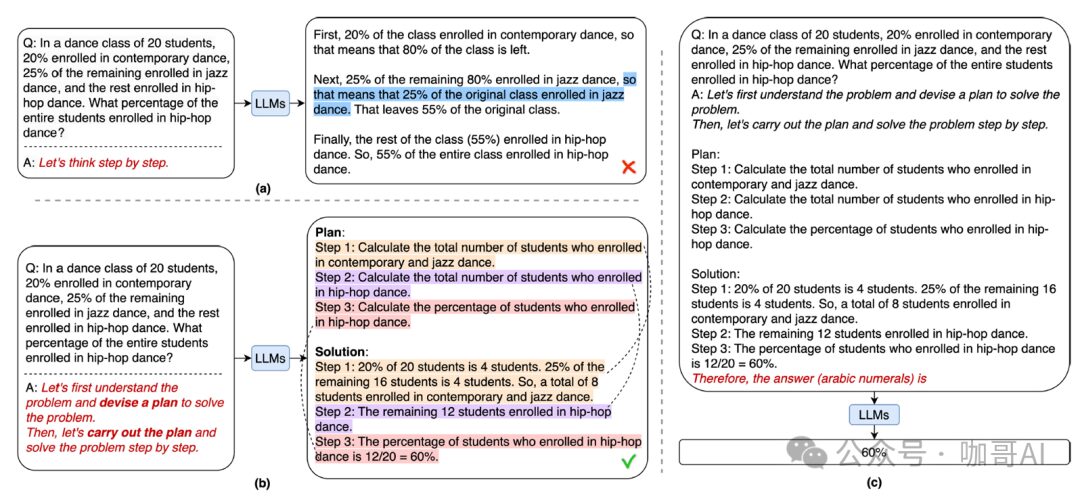

Framework 1: Chain of Thought

Example of Chain of Thought from the paper

– Age: 35 years

– Annual Income: $50,000

– Credit History: No defaults

– Debt: $10,000 credit card debt

Step 4: Based on the above analysis, assess the applicant’s overall credit rating.

Final judgment: Based on the above logical reasoning, the applicant’s credit rating should be above average.

```python
from openai import OpenAI

client = OpenAI()

completion = client.chat.completions.create(
    model="gpt-4",  # Use the GPT-4 model
    messages=[
        {
            "role": "system",
            "content": "You are an intelligent assistant specializing in credit assessment, capable of analyzing the applicant's credit status through logical reasoning.",
        },
        {
            "role": "user",
            "content": """
Consider the following information about the applicant:
- Age: 35 years
- Annual Income: 50,000 dollars
- Credit History: No defaults
- Debt: 10,000 dollars credit card debt
- Assets: No real estate

Analyze the steps as follows:
1. Assess the default risk based on age, income, and credit history.
2. Consider the debt-to-income ratio to determine if the debt level is reasonable.
3. Assess the risk factors of not having real estate and whether it will affect the applicant's ability to repay.

Based on the above analysis steps, please assess the applicant's credit rating.""",
        },
    ],
)

# Print only the text of the reply, not the whole message object
print(completion.choices[0].message.content)
```

Here, we construct a detailed prompt that guides the model along a predefined chain of thought. This approach not only produces more interpretable answers but also improves the accuracy of decisions.
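Step 2 of the prompt asks the model to weigh the debt-to-income ratio, which for this applicant is simple arithmetic: 10,000 / 50,000 = 20%. The sketch below shows one way to assemble the same step-by-step prompt programmatically so the reasoning steps can be reused across applicants. The `build_cot_prompt` and `debt_to_income` helpers are hypothetical; the resulting string would be passed as the `user` message in a call like the one above.

```python
from typing import Dict, List


def debt_to_income(debt: float, annual_income: float) -> float:
    # Ratio of total debt to annual income (10,000 / 50,000 = 0.2).
    return debt / annual_income


def build_cot_prompt(applicant: Dict[str, str], steps: List[str]) -> str:
    """Hypothetical helper: list the facts, then number the reasoning steps."""
    facts = "\n".join(f"- {key}: {value}" for key, value in applicant.items())
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        "Consider the following information about the applicant:\n"
        f"{facts}\n"
        "Analyze the steps as follows:\n"
        f"{numbered}\n"
        "Based on the above analysis steps, please assess the applicant's credit rating."
    )


applicant = {
    "Age": "35 years",
    "Annual Income": "50,000 dollars",
    "Credit History": "No defaults",
    "Debt": "10,000 dollars credit card debt",
    "Assets": "No real estate",
}
ratio = debt_to_income(10_000, 50_000)
steps = [
    "Assess the default risk based on age, income, and credit history.",
    f"Consider the debt-to-income ratio ({ratio:.0%}) to determine if the debt level is reasonable.",
    "Assess the risk factors of not having real estate and whether it will affect the applicant's ability to repay.",
]
print(build_cot_prompt(applicant, steps))
```

Computing the ratio in code and inserting it into the prompt keeps the arithmetic exact, leaving the model to do the qualitative reasoning each step asks for.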

The CoT paper sparked a wave of research, and follow-up work on reasoning in large models has steadily grown richer.


Framework 2: Self-Ask

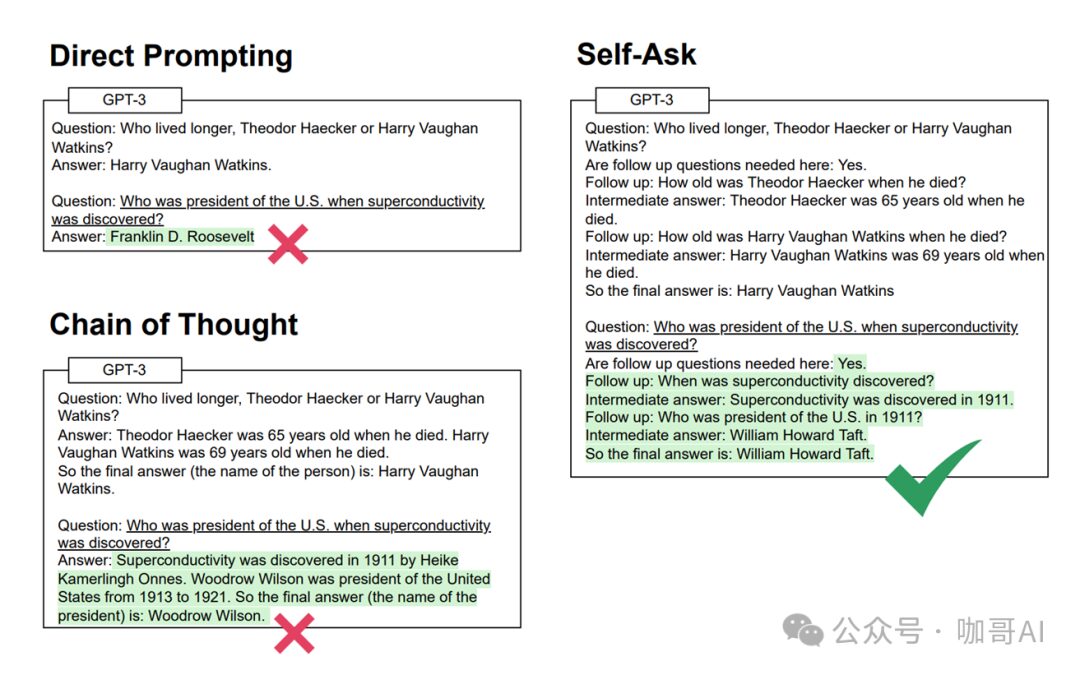

Self-Ask builds on the idea of Chain-of-Thought and is an extension of CoT.

Self-Ask lets the model generate its own follow-up questions, answer them to gather more information, and then combine that information into the final answer. This lets the model explore a problem from several angles in more depth, improving the quality and accuracy of its answers.
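In the Self-Ask paper (Press et al., 2022), the model writes each sub-question after a `Follow up:` marker, the sub-question is answered (optionally by a search engine) as an `Intermediate answer:`, and the model finishes with `So the final answer is:`. The loop below is a minimal sketch of that control flow. `scripted_model` and the `FACTS` lookup are hypothetical stand-ins for an LLM call and a search tool, scripted so the example runs offline.

```python
from typing import Callable


def self_ask(question: str,
             generate: Callable[[str], str],
             answer_follow_up: Callable[[str], str],
             max_steps: int = 5) -> str:
    """Drive a Self-Ask loop: the model asks follow-ups until it states a final answer."""
    transcript = f"Question: {question}\nAre follow up questions needed here: Yes.\n"
    for _ in range(max_steps):
        line = generate(transcript).strip()
        if line.startswith("So the final answer is:"):
            return line[len("So the final answer is:"):].strip()
        if line.startswith("Follow up:"):
            sub_question = line[len("Follow up:"):].strip()
            # Answer the sub-question and append it so the next step can build on it.
            transcript += f"{line}\nIntermediate answer: {answer_follow_up(sub_question)}\n"
        else:
            transcript += line + "\n"
    raise RuntimeError("No final answer within max_steps")


# Hypothetical lookup table standing in for a search engine.
FACTS = {
    "Who directed Jaws?": "Steven Spielberg",
    "When was Steven Spielberg born?": "1946",
}


def scripted_model(transcript: str) -> str:
    # Stand-in for an LLM: emits the next Self-Ask line based on progress so far.
    answered = transcript.count("Intermediate answer:")
    if answered == 0:
        return "Follow up: Who directed Jaws?"
    if answered == 1:
        return "Follow up: When was Steven Spielberg born?"
    return "So the final answer is: 1946"


print(self_ask("In what year was the director of Jaws born?",
               scripted_model,
               lambda q: FACTS.get(q, "unknown")))
```

The transcript grows with every follow-up, so each new question is generated with all earlier intermediate answers in view; that accumulation is what distinguishes Self-Ask from a single CoT pass.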

The Self-Ask cognitive framework is very useful in applications that require in-depth analysis or creative solutions, such as creative writing or complex queries.

Suppose we are designing a new smartwatch and need to consider diverse user needs and technological possibilities. We can set up the following Self-Ask framework.

```python
from openai import OpenAI

client = OpenAI()

completion = client.chat.completions.create(
    model="gpt-4",  # Use the GPT-4 model
    messages=[
        {"role": "system", "content": "You are an intelligent assistant specializing in product design innovation, capable of self-generating questions to explore innovative design solutions."},
        {"role": "user", "content": "We are developing a new smartwatch. Please analyze the main functions of smartwatches currently on the market and propose possible innovations."},
        # Follow-up questions supplied as system messages to steer the model's self-questioning
        {"role": "system", "content": "First, consider: what features are generally lacking in smartwatches on the current market?"},
        {"role": "system", "content": "Next, explore: what new features might attract health-conscious consumers?"},
        {"role": "system", "content": "Finally, analyze: which innovative features are technically feasible, and how could they be realized with wearable technology?"},
    ],
)

# Print only the text of the reply, not the whole message object
print(completion.choices[0].message.content)
```

In this example, the follow-up questions supplied as system messages steer the model toward an in-depth market and technical analysis and prompt it to think about potential innovations. This helps identify and integrate innovative elements early in product design. You can also use this template in a few-shot setup to push the model toward more creative thinking, often surfacing ideas we might not have considered at first.


Framework 3: Critique Revise or Reflection

The Critique-Revise cognitive framework, also known as Self-Reflection, is used in artificial intelligence and machine learning mainly to simulate and implement complex decision-making processes. It rests on two core steps, “Critique” and “Revise”, which are repeated to iteratively improve the system’s performance and decision quality.

· Revise: Based on the issues identified in the critique step, the system adjusts its decision-making process or behavior strategies to improve output quality. Revisions can involve adjusting existing algorithm parameters or adopting entirely new strategies or methods.

· Revise (marketing example): Based on the analysis from the critique phase, the system proposes improvement measures, such as adjusting target audiences, redesigning ad content, or optimizing budget allocation. It may also recommend testing new marketing channels or technologies to improve overall marketing effectiveness.

```python
from openai import OpenAI

client = OpenAI()

# Execute Critique phase (in practice, include the campaign's data in this prompt)
critique_completion = client.chat.completions.create(
    model="gpt-4",
    messages=[
        {"role": "system", "content": "You are a marketing analysis assistant."},
        {"role": "user", "content": "Analyze the effectiveness of the recent marketing campaign and identify existing problems."},
    ],
)
critique = critique_completion.choices[0].message.content

# Execute Revise phase, passing the critique in so the model can act on it
revise_completion = client.chat.completions.create(
    model="gpt-4",
    messages=[
        {"role": "system", "content": "You are a marketing strategy optimization assistant."},
        {"role": "user", "content": f"Based on the following critique, propose specific improvement measures.\n\nCritique:\n{critique}"},
    ],
)

print("Critique Results:", critique)
print("Revise Suggestions:", revise_completion.choices[0].message.content)
```
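The two calls above run Critique and Revise once each. Because the framework is meant to iterate, a common pattern is a loop: draft, critique, revise, and stop when the critic reports no further problems or a round limit is reached. The sketch below assumes a generic `llm(system, user)` function (hypothetical) and an assumed convention that the critic replies `NO ISSUES` when satisfied; a scripted stand-in replaces the model so the loop runs offline.

```python
from typing import Callable, List, Tuple

LLM = Callable[[str, str], str]  # hypothetical: (system prompt, user prompt) -> reply


def critique_revise(task: str, llm: LLM, max_rounds: int = 3) -> Tuple[str, List[str]]:
    """Iterate Critique -> Revise until the critic is satisfied or rounds run out."""
    draft = llm("You are a marketing strategy assistant.", task)
    critiques = []
    for _ in range(max_rounds):
        critique = llm(
            "You are a critical reviewer. Reply 'NO ISSUES' if the draft needs no changes.",
            f"Task: {task}\n\nDraft:\n{draft}\n\nList the problems with this draft.",
        )
        critiques.append(critique)
        if critique.strip().upper().startswith("NO ISSUES"):
            break  # the critic is satisfied, so stop revising
        draft = llm(
            "You are a marketing strategy optimization assistant.",
            f"Task: {task}\n\nDraft:\n{draft}\n\nCritique:\n{critique}\n\n"
            "Rewrite the draft to fix these problems.",
        )
    return draft, critiques


def scripted_llm(system: str, user: str) -> str:
    # Stand-in model: the reviewer flags a missing budget once, then approves.
    if system.startswith("You are a critical reviewer"):
        return "NO ISSUES" if "budget" in user else "The plan has no budget allocation."
    if "Rewrite the draft" in user:
        return "Target 25-34 year olds on social media; budget: 60% social, 40% search."
    return "Target 25-34 year olds on social media."


final_draft, history = critique_revise("Plan next quarter's ad campaign.", scripted_llm)
print(final_draft)
print(history)
```

The round limit matters in practice: a critic model can keep finding something to change, so capping `max_rounds` bounds cost even when it never replies `NO ISSUES`.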

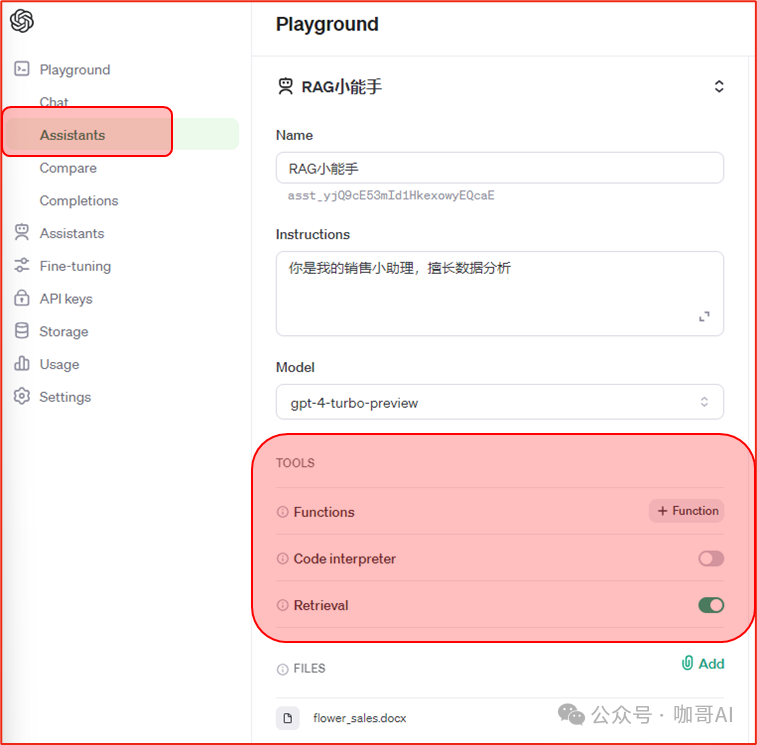

Framework 4: Function Calling/Tool Calls

Tools and toolboxes in LangChain
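With function calling, the model does not run a tool itself: it returns the name of a function plus JSON-encoded arguments, and application code executes the function and sends the result back. The dispatch half of that exchange can be sketched without any API access. The `get_weather` function and its JSON schema are hypothetical, and the tool call below is hand-written in the shape of an OpenAI-style tool call (an `id`, a function `name`, and an `arguments` JSON string), not a real model response.

```python
import json
from typing import Any, Callable, Dict


def get_weather(city: str, unit: str = "celsius") -> Dict[str, Any]:
    # Hypothetical tool; a real one would query a weather service.
    return {"city": city, "temperature": 22, "unit": unit}


# JSON-schema description of the tool, the form function-calling APIs expect.
TOOL_SPECS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {
                    "city": {"type": "string"},
                    "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                },
                "required": ["city"],
            },
        },
    }
]

REGISTRY: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch(tool_call: Dict[str, Any]) -> Dict[str, str]:
    """Run the requested function and build the 'tool' message to send back."""
    name = tool_call["function"]["name"]
    args = json.loads(tool_call["function"]["arguments"])
    result = REGISTRY[name](**args)
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": json.dumps(result)}


# Hand-written example of what the model might request (assumption).
model_tool_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Beijing"}'},
}
print(dispatch(model_tool_call))
```

In a live call with the OpenAI Python SDK, `TOOL_SPECS` would be passed as the `tools` parameter of `client.chat.completions.create`. Each entry in the reply's `message.tool_calls` is an object with `id`, `function.name`, and `function.arguments` attributes (read as attributes rather than dict keys), and the resulting `tool` message is appended to `messages` after the assistant message that requested it, for a second call that produces the final answer.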


Framework 5: ReAct (Reasoning and Acting)
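ReAct (Yao et al.) interleaves reasoning and acting: the model writes a `Thought`, chooses an `Action` with an input, receives an `Observation` from the environment, and repeats until it produces a final answer. The loop below is a minimal sketch of that cycle. The `Action: Tool[input]` text format and the `Finish[...]` action are modeled on the style of the ReAct paper’s prompts, while the `Search` tool, its knowledge base, and the scripted model are hypothetical stand-ins so the example runs without an LLM.

```python
import re
from typing import Callable, Dict

ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")


def react(question: str,
          llm: Callable[[str], str],
          tools: Dict[str, Callable[[str], str]],
          max_steps: int = 5) -> str:
    """Thought -> Action -> Observation loop until the model calls Finish[...]."""
    prompt = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(prompt)  # expected to contain a Thought line and an Action line
        prompt += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            prompt += "Observation: Invalid action format.\n"
            continue
        name, arg = match.group(1), match.group(2)
        if name == "Finish":
            return arg
        tool = tools.get(name)
        observation = tool(arg) if tool else f"Unknown tool {name}"
        prompt += f"Observation: {observation}\n"  # fed back for the next Thought
    raise RuntimeError("No answer within max_steps")


# Hypothetical knowledge base behind the Search tool.
KB = {"Eiffel Tower": "The Eiffel Tower is 330 metres tall and stands in Paris."}


def scripted_llm(prompt: str) -> str:
    # Stand-in for the model: search first, then answer from the observation.
    if "Observation:" not in prompt:
        return "Thought: I need the tower's height.\nAction: Search[Eiffel Tower]"
    return "Thought: The observation gives the height.\nAction: Finish[330 metres]"


tools = {"Search": lambda q: KB.get(q, "No result.")}
print(react("How tall is the Eiffel Tower?", scripted_llm, tools))
```

Unlike the Plan-and-Execute style, ReAct decides only one action at a time, so each observation can change what the model does next.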


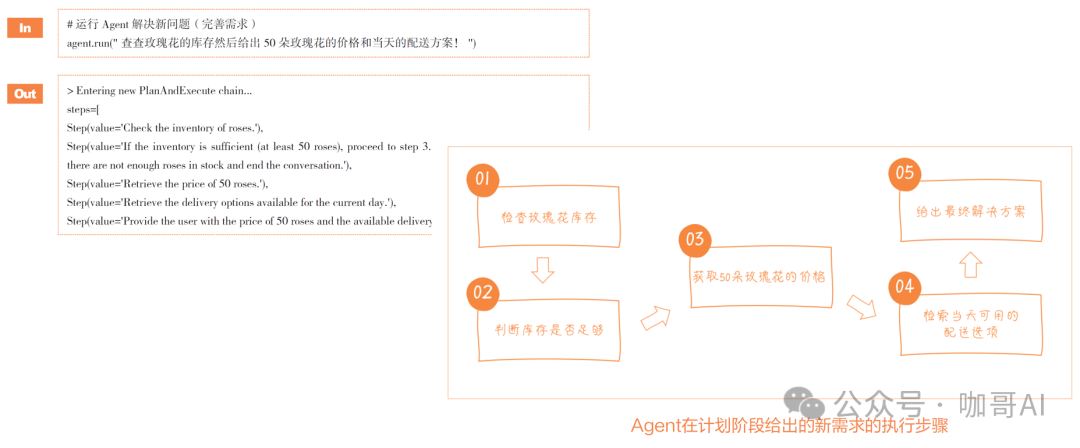

Framework 6: Plan-and-Execute

Implementation example of Plan-and-Solve
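Plan-and-Execute separates two jobs: a planner produces the full list of steps up front, and an executor carries them out one by one, with each step able to see earlier results. Plan-and-Solve prompting (Wang et al., 2023) applies the same idea inside a single prompt by asking the model to first devise a plan and then carry it out. The sketch below covers only the planner/executor split, reusing the credit example’s figures; `toy_planner` and `toy_executor` are hypothetical stand-ins for LLM or tool calls, and replanning is omitted.

```python
from typing import Callable, List, Tuple

History = List[Tuple[str, str]]  # (step, result) pairs completed so far


def plan_and_execute(goal: str,
                     plan: Callable[[str], List[str]],
                     execute_step: Callable[[str, History], str]) -> History:
    """Make the whole plan first, then execute each step with access to prior results."""
    steps = plan(goal)
    results: History = []
    for step in steps:
        output = execute_step(step, results)  # executor sees what has been done so far
        results.append((step, output))
    return results


APPLICANT = {"annual income": 50_000, "total debt": 10_000}  # figures from the credit example


def toy_planner(goal: str) -> List[str]:
    # Stand-in for an LLM planner (hypothetical fixed decomposition).
    return ["Look up annual income", "Look up total debt", "Compute debt-to-income ratio"]


def toy_executor(step: str, history: History) -> str:
    # Stand-in for an LLM/tool executor.
    if step.startswith("Look up "):
        return str(APPLICANT[step[len("Look up "):]])
    if step == "Compute debt-to-income ratio":
        values = {s[len("Look up "):]: float(out) for s, out in history if s.startswith("Look up ")}
        return f"{values['total debt'] / values['annual income']:.0%}"
    return "unsupported step"


for step, output in plan_and_execute("Assess the applicant's debt level", toy_planner, toy_executor):
    print(f"{step}: {output}")
```

Planning everything up front means fewer model calls than a step-by-step loop, at the cost of flexibility; a replanner that revisits the remaining steps after each result is the usual remedy.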


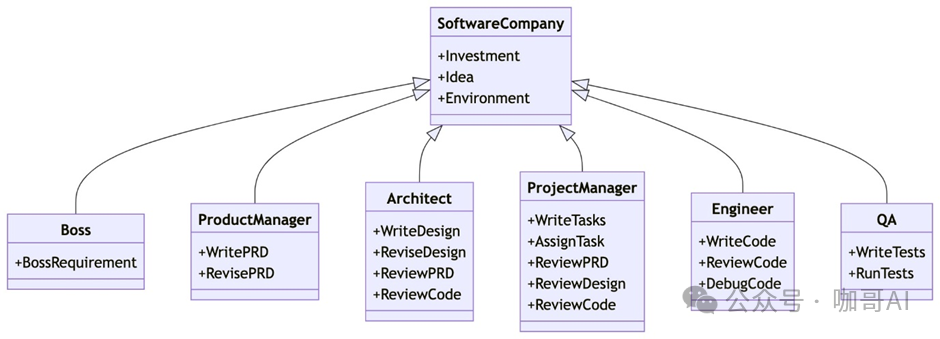

Framework 7: Multi-Agent Collaboration

· Boss: Sets the overall requirements for the project.

· Product Manager: Responsible for writing and revising the Product Requirements Document (PRD).

· Architect: Writes and revises designs, reviews product requirements documents and code.

· Project Manager: Writes tasks, assigns tasks, and reviews product requirements documents, designs, and code.

· Engineer: Writes, reviews, and debugs code.

· Quality Assurance: Writes and runs tests to ensure software quality.
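The roles above come from MetaGPT, where each role takes the artifact produced upstream (requirements, PRD, design, tasks, code) and passes its own output along. The sketch below imitates that hand-off in plain Python. It is not MetaGPT’s API: the `Role` class, the role instructions, and the `echo_llm` stand-in are hypothetical, and a real system would call a model inside `Role.act`.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

LLM = Callable[[str], str]  # hypothetical: prompt -> completion


@dataclass
class Role:
    name: str
    instruction: str  # what this role produces from the upstream artifact

    def act(self, upstream: str, llm: LLM) -> str:
        prompt = f"You are the {self.name}. {self.instruction}\n\nInput:\n{upstream}"
        return llm(prompt)


PIPELINE = [
    Role("Product Manager", "Write a PRD from these requirements."),
    Role("Architect", "Write a system design from this PRD."),
    Role("Project Manager", "Break this design into engineering tasks."),
    Role("Engineer", "Write code for these tasks."),
    Role("Quality Assurance", "Write tests for this code."),
]


def run_team(requirement: str, llm: LLM) -> List[Tuple[str, str]]:
    """The Boss's requirement flows through each role in order; every output is logged."""
    artifact = requirement
    log = [("Boss", requirement)]
    for role in PIPELINE:
        artifact = role.act(artifact, llm)
        log.append((role.name, artifact))
    return log


def echo_llm(prompt: str) -> str:
    # Stand-in model: reports which role produced the artifact.
    role = prompt.split(".", 1)[0].replace("You are the ", "")
    return f"<{role} output>"


for name, artifact in run_team("Build a 2048 game", echo_llm):
    print(f"{name}: {artifact}")
```

In MetaGPT the roles also review one another’s work (for example, the Architect reviews the PRD and code); those feedback loops are left out of this linear sketch for brevity.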

Combination of Various Cognitive Frameworks

References

1. https://36kr.com/p/2716201666246790 – Andrew Ng’s latest speech: The Future of AI Agent Workflows

2. https://lilianweng.github.io/posts/2023-06-23-agent/ – LLM Powered Autonomous Agents

3. Wei, J., et al. (2022). Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. 36th Conference on Neural Information Processing Systems (NeurIPS 2022).

4. Press, O., Zhang, M., Min, S., Schmidt, L., Smith, N. A., & Lewis, M. (2022). Measuring and Narrowing the Compositionality Gap in Language Models. arXiv preprint arXiv:2210.03350.

5. Wang, L., Xu, W., Lan, Y., Hu, Z., Lan, Y., Lee, R. K.-W., & Lim, E.-P. (2023). Plan-and-Solve Prompting: Improving Zero-Shot Chain-of-Thought Reasoning by Large Language Models. arXiv.

6. Yao, S., Zhao, J., Yu, D., Du, N., Shafran, I., Narasimhan, K., & Cao, Y. (2023). ReAct: Synergizing Reasoning and Acting in Language Models. arXiv preprint arXiv:2210.03629.

7. https://github.com/geekan/MetaGPT – MetaGPT: The Multi-Agent Framework
