
Written by X Tech Laboratory

Anyone who has used a smart speaker or another voice assistant will know the scenario: you ask a question clearly, yet the answer you get back is bewildering.

If you suspect that you have paid an “intelligence tax” (money spent on a product that does not deliver what it promises), you might ask: Why can’t smart speakers understand human speech? Can they become smarter?

To answer this question, we first need to understand how smart speakers comprehend speech.

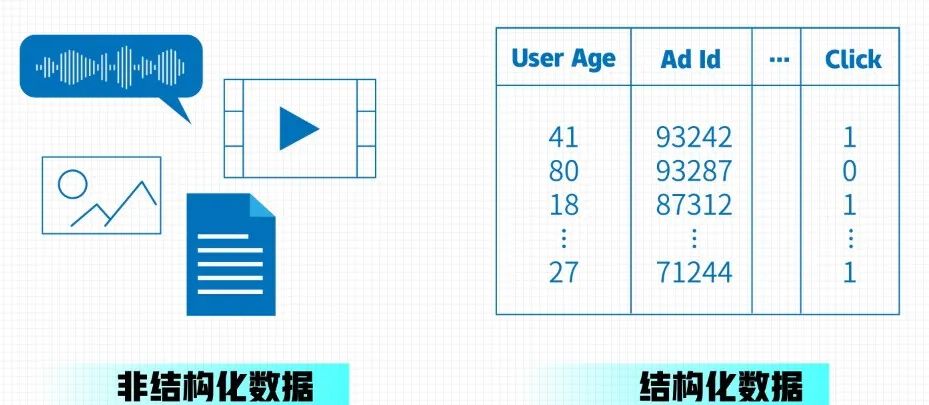

Speech, text, images, and video are considered “unstructured data” for machines and cannot be understood directly. What machines can understand and respond to is “structured data”.

In simple terms, the difference and relationship between the two can be understood as follows: unstructured data is like a Word document containing a paragraph of text, while structured data is like an Excel document summarizing that text.

When we ask a smart speaker a question, it first converts the speech into text, then turns that text into structured data so it can understand the request and compose a reply, and finally converts the reply text back into speech to deliver the answer.
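
The three steps above can be sketched as a small pipeline. This is only an illustrative toy, not how any particular product works: every function name is hypothetical, the "audio" is simply UTF-8 text so that the example runs without a real speech model, and the understanding step is a keyword check standing in for real NLP.

```python
from dataclasses import dataclass, field


@dataclass
class Intent:
    """Structured data extracted from one utterance (hypothetical schema)."""
    name: str
    slots: dict = field(default_factory=dict)


def speech_to_text(audio: bytes) -> str:
    # Stand-in for ASR: the "audio" here is just UTF-8 encoded text.
    return audio.decode("utf-8")


def understand(text: str) -> Intent:
    # Stand-in for NLP: turn unstructured text into an intent plus slots.
    lowered = text.lower()
    if "weather" in lowered:
        day = "today" if "today" in lowered else "unspecified"
        return Intent("query_weather", {"day": day})
    return Intent("unknown")


def respond(intent: Intent) -> str:
    # Compose a reply from the structured data, not from the raw text.
    if intent.name == "query_weather":
        return f"Looking up the weather for {intent.slots['day']}."
    return "Sorry, I did not understand that."


def text_to_speech(text: str) -> bytes:
    # Stand-in for TTS: encode the reply text back into bytes.
    return text.encode("utf-8")


def handle(audio: bytes) -> bytes:
    """Run the full loop: speech -> text -> structured data -> reply -> speech."""
    return text_to_speech(respond(understand(speech_to_text(audio))))
```

Calling `handle(b"What is the weather today?")` passes through all three stages. The `Intent` object in the middle plays the role of the "Excel summary" of the spoken sentence.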

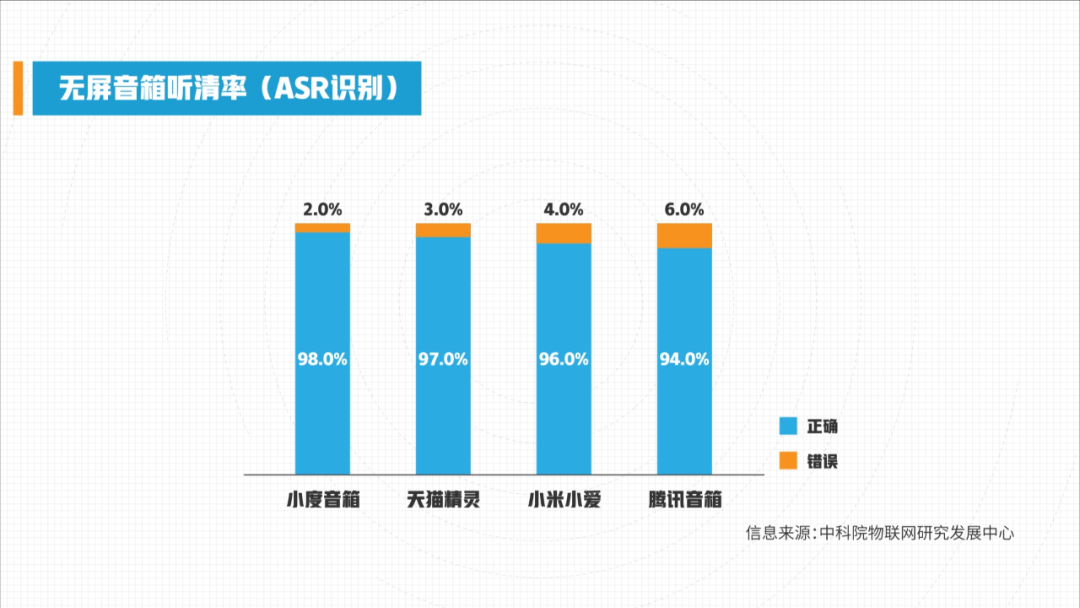

These three steps correspond to three technologies, of which ASR (Automatic Speech Recognition) and TTS (Text-to-Speech) are relatively mature. For example, ASR is already widely used in speech-to-text tools, voice input methods, and smart translation pens. According to a 2019 test by the Chinese Academy of Sciences, the ASR recognition rate of four major Chinese smart speaker brands exceeded 94%.

When smart speakers fail to understand us, the problem mainly lies in the NLP (Natural Language Processing) stage.

When AI receives the text, it needs to perform a series of operations such as corpus cleaning, word segmentation, part-of-speech tagging, named entity recognition, and removing stop words to correctly convert the text into structured data and respond.
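
As a rough illustration of a few of these operations, the sketch below chains cleaning, word segmentation, entity lookup, and stop-word removal on an English sentence. It is a simplification under stated assumptions: the stop-word list and the entity table are made up for the example, segmentation is a plain whitespace split (Chinese text would need a dedicated segmenter), and part-of-speech tagging is left out entirely.

```python
import re

# Hypothetical resources, invented for this example.
STOP_WORDS = {"the", "a", "an", "by", "please", "to", "me", "for"}
ENTITIES = {"jay chou": "SINGER", "beijing": "PLACE"}


def clean(text):
    # Corpus cleaning: lowercase, then replace anything that is not
    # a letter, digit, or space with a space.
    return re.sub(r"[^a-z0-9 ]+", " ", text.lower()).strip()


def tokenize(text):
    # Word segmentation: trivial for English, hard for Chinese.
    return text.split()


def tag_entities(tokens):
    """Greedy longest-match lookup (up to two tokens) in the entity table."""
    tagged, i = [], 0
    while i < len(tokens):
        for length in (2, 1):
            span = tokens[i:i + length]
            phrase = " ".join(span)
            if phrase in ENTITIES:
                tagged.append((phrase, ENTITIES[phrase]))
                i += len(span)
                break
        else:
            tagged.append((tokens[i], "O"))  # "O" marks a non-entity token
            i += 1
    return tagged


def remove_stop_words(tagged):
    # Drop non-entity tokens that carry little meaning on their own.
    return [(tok, label) for tok, label in tagged
            if label != "O" or tok not in STOP_WORDS]


def preprocess(text):
    return remove_stop_words(tag_entities(tokenize(clean(text))))
```

For the input `"Please play a song by Jay Chou!"`, the function keeps `play`, `song`, and the two-word entity `jay chou` tagged as `SINGER`, which is close to the structured form a music skill would need.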

Today, smart speakers handle clear, directive requests fairly “intelligently”, such as turning on the TV, checking today’s weather, or setting an alarm.

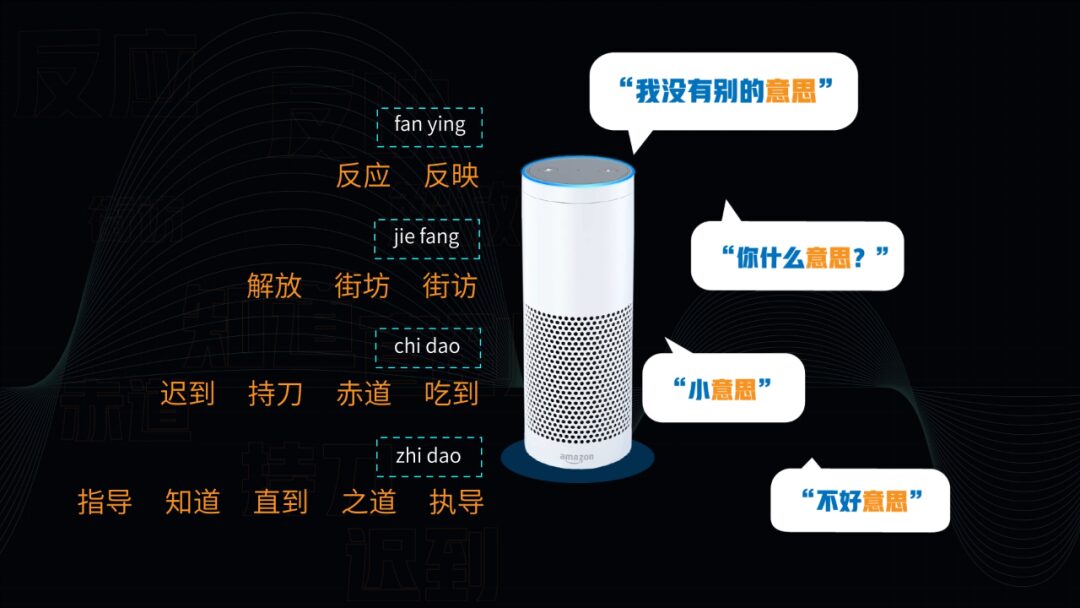

However, in open contexts, smart speakers make frequent mistakes. This is because spoken language often contains ambiguities that machines find hard to resolve. Ambiguity is especially common in Chinese, which has many homophones and complex grammar.

For example, when asked to set an alarm for “an hour and a half later,” the speaker can hardly tell whether the user means half an hour or an hour and a half, because the Chinese phrase can be segmented either way. Another example is “Help me book a restaurant, not Japanese food,” where the AI is likely to miss the meaning of “not” in the sentence.

Faced with complex natural language, AI can only distinguish, understand, and resolve ambiguities by analyzing a vast “corpus”, that is, by learning from instances of human language.

There are three main sources of corpus data for AI learning.
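
The restaurant example can be made concrete with a toy comparison. The sketch below is an assumption-laden illustration, not a description of any real assistant: the cuisine and negation word lists are invented, and production systems rely on statistical models rather than a one-word lookbehind. It shows why plain keyword spotting picks up "Japanese" as a request, and what a minimal negation check changes.

```python
# Hypothetical vocabularies for the example.
CUISINES = {"japanese", "sichuan", "italian"}
NEGATIONS = {"not", "no", "except"}


def split_words(text):
    return text.lower().replace(",", " ").split()


def naive_cuisine(text):
    """Keyword spotting: returns every cuisine mentioned, ignoring negation."""
    return [w for w in split_words(text) if w in CUISINES]


def cuisine_constraints(text):
    """Treats a cuisine as excluded when a negation word directly precedes it."""
    words = split_words(text)
    wanted, excluded = [], []
    for i, word in enumerate(words):
        if word in CUISINES:
            negated = i > 0 and words[i - 1] in NEGATIONS
            (excluded if negated else wanted).append(word)
    return {"wanted": wanted, "excluded": excluded}
```

With the sentence from the text, `naive_cuisine` reports `japanese` as if it were the requested cuisine, while `cuisine_constraints` files it under `excluded`. Even this small fix breaks as soon as the negation sits further away from the word it modifies, which hints at why open-ended speech is hard.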

The first is the “corpus” constructed by AI algorithm companies based on demand, such as creating databases for names, place names, singer names, song names, etc., for specific functions.

The second is a large amount of text extracted from the internet, most commonly from social networks, to help machines understand the latest spoken expressions.

Finally, there is data generated during user interaction with products, such as parts of our conversations with smart speakers being uploaded to the manufacturers’ corpus for AI learning.

So, if I talk to a smart speaker for a long time, will it understand me better?

The answer is likely no. Generally speaking, all training and learning processes are completed on the AI algorithm provider’s end. The algorithm provider will train and update the AI based on all user data, rather than targeting a specific user.

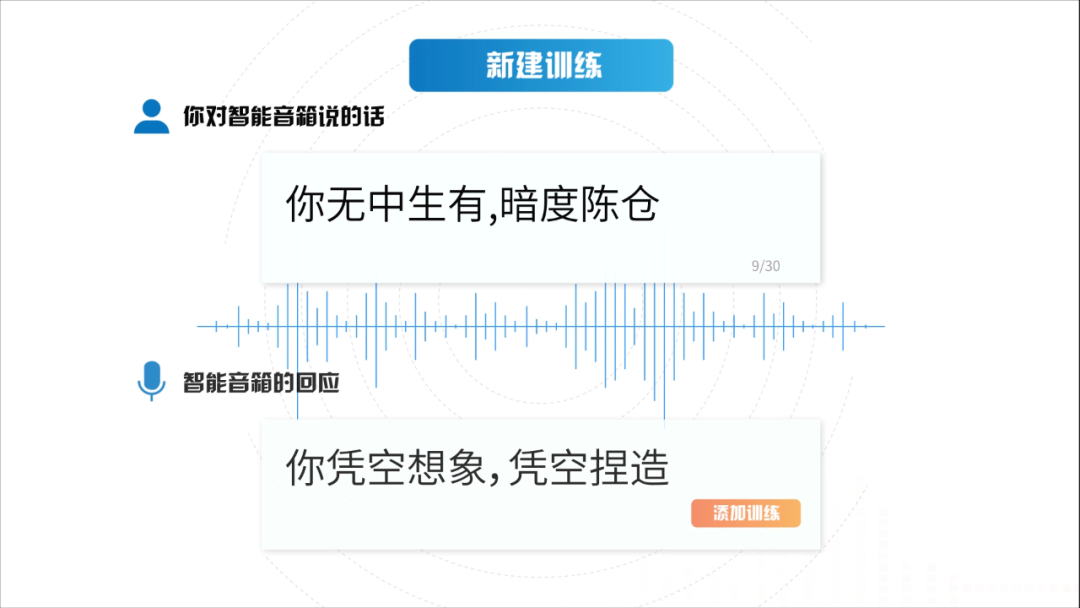

Therefore, to make a smart speaker understand your habitual speech errors, inversions, and colloquialisms, the best current method is to manually input what response it should give.
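
One way to picture such manual input is a per-user table of phrases that is checked before the general model is consulted. The class below is a hypothetical sketch of that idea; the name `CustomResponses`, its methods, and the exact-match rule are all assumptions for illustration, not a feature of any specific speaker.

```python
def normalize(text):
    # Lowercase and collapse whitespace so small variations still match.
    return " ".join(text.lower().split())


class CustomResponses:
    """User-defined phrase -> response overrides, checked before the fallback."""

    def __init__(self):
        self._rules = {}

    def add(self, phrase, response):
        self._rules[normalize(phrase)] = response

    def reply(self, utterance, fallback):
        key = normalize(utterance)
        if key in self._rules:
            return self._rules[key]
        # No personal rule matched: defer to the shared, general-purpose model.
        return fallback(utterance)
```

A user who habitually says "lights the turn on" could register that phrase once with `add`, and later utterances matching it would get the stored response, while everything else still goes to the model trained on all users' data.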

Although smart speaker technology is not yet fully mature, it is a territory that internet giants must conquer.

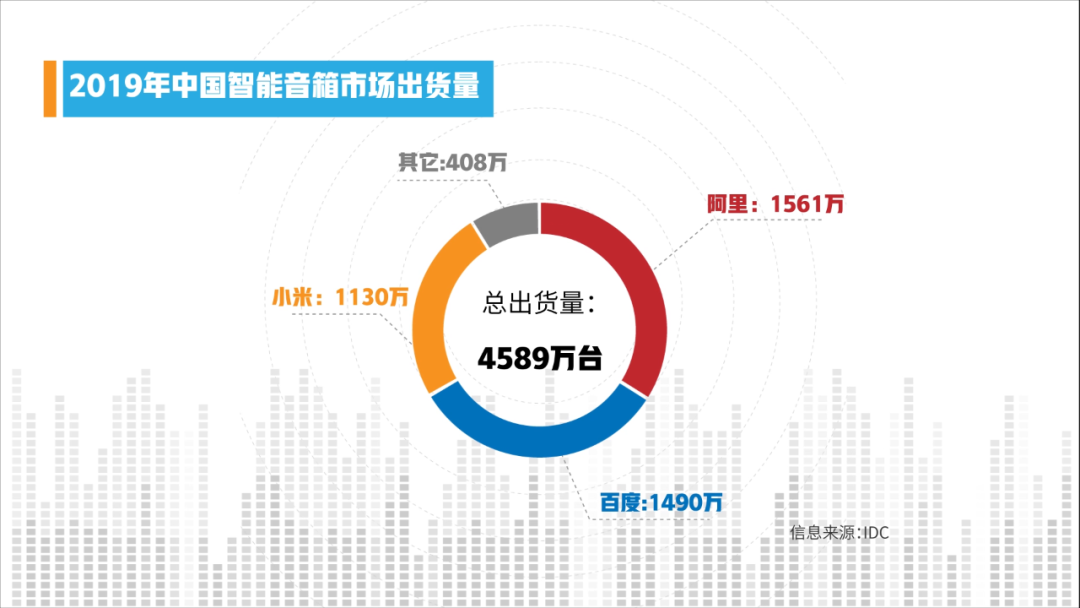

The Chinese smart speaker market experienced explosive growth in 2019, with total shipments reaching 45.89 million units, a year-on-year increase of 109.7%. Among them, Alibaba’s Tmall Genie, Baidu’s Xiaodu speaker, and Xiaomi’s Xiao Ai speaker accounted for over 90% of the market share.
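
As a quick check on what these figures imply, a 109.7% year-on-year increase means 2019 shipments were about 2.097 times those of 2018. The 2018 value below is derived from the two numbers above, not quoted from the source:

$$
S_{2018} = \frac{S_{2019}}{1 + 1.097} = \frac{45.89}{2.097} \approx 21.88 \text{ million units}
$$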

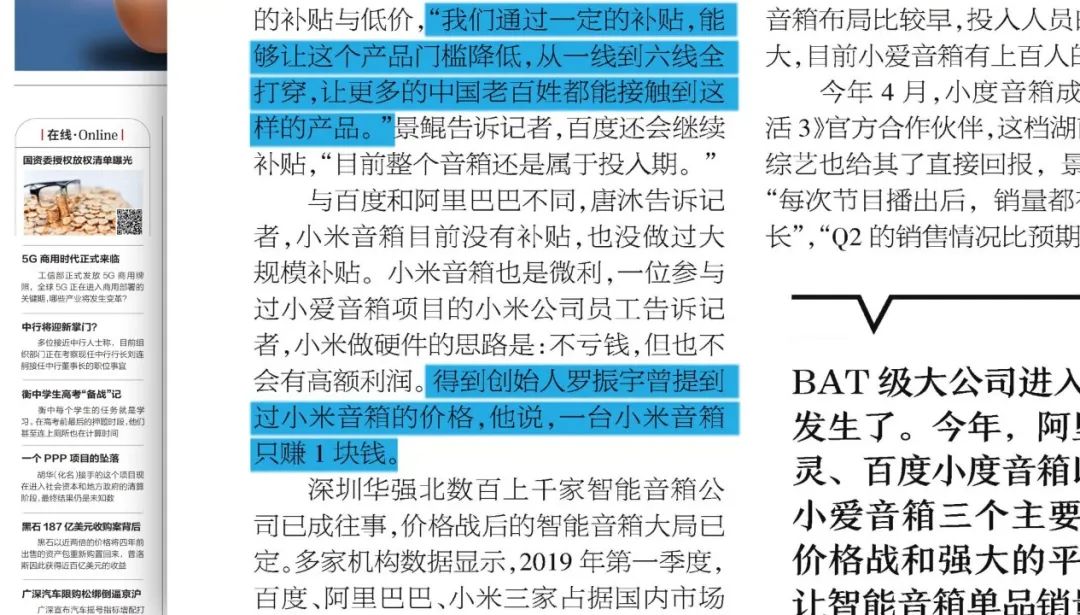

Shipments of smart speakers have increased, but they have not brought profits to the giants. In 2019, Baidu’s vice president Jing Kun stated that Baidu kept the price of the Xiaodu speaker low through subsidies. Additionally, according to industry estimates, for every Xiao Ai speaker sold, Xiaomi makes only 1 yuan.

The underlying logic for these giants is that smart speakers are likely to become the control center of future homes, so they are using a low-price strategy now to secure a ticket to the future IoT era. This strategy can indeed increase sales, but sales alone do not guarantee that the product gets used; that requires effectively addressing users’ pain points.

To enhance the irreplaceability of smart speakers in users’ lives, manufacturers are trying hard to add new functions, as no one wants to see their smart speakers gathering dust when the IoT era truly arrives.

(Thanks to Han Qinghong from Xiangnong Technology for their assistance in this episode)

References:

1. Analysis of Smart Speaker Intelligent Technology and Its Maturity Assessment, Chinese Academy of Sciences Internet of Things Research and Development Center, 2019.12.09

2. Open Course “Natural Language Processing (NLP)”, Wang Xiaojie, Beijing University of Posts and Telecommunications

3. The Evolution of Smart Speakers, Economic Observer, 2019.06.10

4. 2019 China Smart Speaker Shipment Volume, IDC, 2020.3.20
