Source: MedRobot; Edited by: Tang Zijie

One of the outstanding features of the Da Vinci system is its strong academic output: in 2022 alone, more than 3,000 papers on Da Vinci were published worldwide, bringing the total to over 35,000. MedRobot will continue to share research content to help clinicians find ideas and write strong papers.

Robotics and artificial intelligence are both highly forward-looking disciplines. In the 21st century, their interdisciplinary intersection is pushing medicine to new heights.

Today, MedRobot summarizes a high-impact, forward-looking article by Michael Yip et al. of the University of California and the University of British Columbia, published in Science Robotics (Q1, IF = 25) under the title “Artificial Intelligence Meets Medical Robotics.” The article discusses the applications, prospects, and challenges of artificial intelligence (AI) in medical robotics.

The application of artificial intelligence (AI) to medical robots is ushering in a new era of medicine. Advanced medical robots can perform diagnostics and surgery, assist in rehabilitation, and serve as prosthetics that replace lost limbs. The technologies behind these devices include computer vision, medical image analysis, haptics, navigation, precision manipulation, and machine learning (ML). Together they enable autonomous robots to perform diagnostic imaging, remote surgery, surgical subtasks, and even entire surgical procedures.

Furthermore, rehabilitation devices and advanced prosthetics can provide personalized support and improve functionality and mobility. In the future, significant advancements in robotics, medicine, materials science, and computer technology will lead to safer, more efficient, and broader patient care.

#Utilizing AI for Medical Imaging Guided Robotics

Medical-imaging-guided robotics combines medical imaging with robotics. Imaging determines the location of key anatomical structures, lesions, and objects, and the robot then places instruments or tools precisely at those locations. This combination offers significant advantages.

Common imaging modalities include:

● Ultrasound

● Magnetic Resonance Imaging

● Computed Tomography

● White light or fluorescence endoscopy

Robots can assist in anatomical imaging and guide instruments to critical targets. Here, AI work often focuses on steering mechanics and planning algorithms. Navigating through soft tissue involves curved paths, and those paths are constrained by two things: the minimum radius of curvature achievable in the tissue being traversed, and tissue displacement during instrument insertion.
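The curvature constraint described above can be made concrete with a small check. The sketch below makes several assumptions: the planned insertion path is planar and given as waypoints, and `min_radius` is a hypothetical instrument/tissue limit in the same length units. It reports whether the path ever bends more tightly than allowed. It ignores tissue displacement entirely, which a real planner would have to model.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def menger_curvature(p1: Point, p2: Point, p3: Point) -> float:
    """Curvature (1 / radius) of the circle through three points; 0 if collinear."""
    a = math.dist(p1, p2)
    b = math.dist(p2, p3)
    c = math.dist(p1, p3)
    if a == 0.0 or b == 0.0 or c == 0.0:
        return 0.0  # coincident points carry no bend information
    # 2D cross product = twice the triangle area spanned by the three points.
    cross = (p2[0] - p1[0]) * (p3[1] - p1[1]) - (p2[1] - p1[1]) * (p3[0] - p1[0])
    return 2.0 * abs(cross) / (a * b * c)


def path_is_feasible(path: List[Point], min_radius: float, tol: float = 1e-9) -> bool:
    """True if no three consecutive waypoints bend tighter than min_radius.

    min_radius is a hypothetical limit chosen for illustration, not a value from the article.
    """
    max_curvature = 1.0 / min_radius
    return all(
        menger_curvature(path[i - 1], path[i], path[i + 1]) <= max_curvature + tol
        for i in range(1, len(path) - 1)
    )


# Usage: a quarter-circle path of radius 80 (e.g. mm) checked against two limits.
arc = [(80 * math.cos(t * math.pi / 40), 80 * math.sin(t * math.pi / 40)) for t in range(21)]
print(path_is_feasible(arc, min_radius=60.0))   # True: radius 80 is gentler than 60
print(path_is_feasible(arc, min_radius=100.0))  # False: radius 80 is tighter than 100
```

Checking consecutive waypoint triples is the simplest discrete test. A planner would typically apply it to candidate paths before choosing among them.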

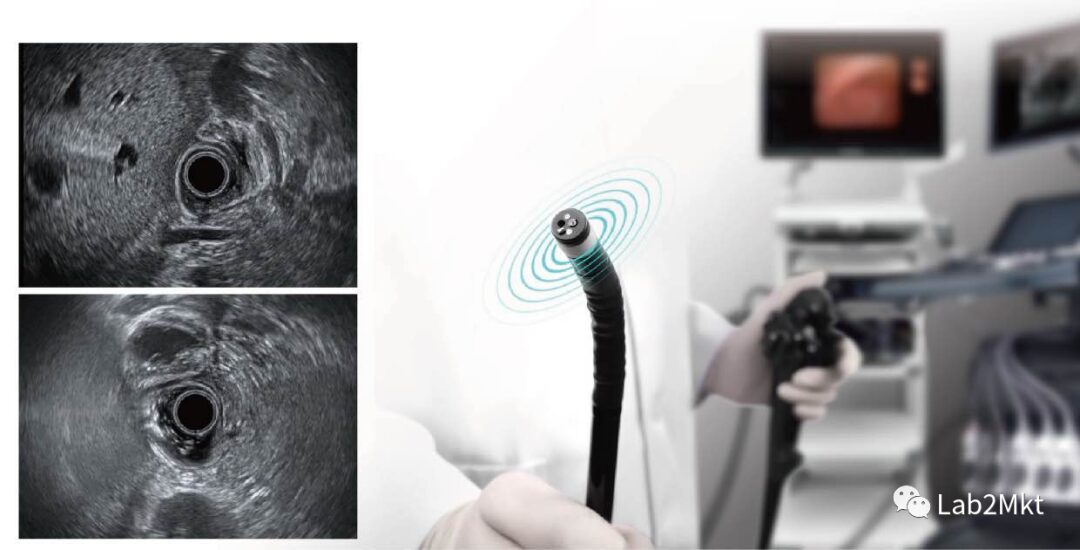

Robotic assistance is also used in endoscopy. Rigid or flexible endoscopes are used for surgical, gastrointestinal, and bronchoscopic imaging, and there are even magnetically driven capsules.

Operating an endoscope is challenging and takes considerable experience to master, which makes automation attractive. AI techniques can be used for endoscope localization and mapping, and for analyzing the large image sets involved in diagnosis. Intraoperative ultrasound and X-ray imaging can be used to register preoperative medical images to the patient for biopsies and surgeries. However, locating targets identified through intraoperative registration in the corresponding laparoscopic camera view remains a challenge.
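Registration means finding the transform that maps preoperative image coordinates onto the patient. The minimal sketch below makes two simplifying assumptions. First, paired fiducial points have already been identified in both spaces. Second, the transform is a 2D rigid one. Under those assumptions, the least-squares rotation and translation have a closed form. Real systems work in 3D and often must also handle tissue deformation. The point coordinates in the usage lines are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def _centroid(pts: List[Point]) -> Point:
    n = len(pts)
    return (sum(p[0] for p in pts) / n, sum(p[1] for p in pts) / n)


def rigid_register_2d(src: List[Point], dst: List[Point]) -> Tuple[float, Point]:
    """Least-squares rotation angle and translation mapping src fiducials onto dst."""
    cs, cd = _centroid(src), _centroid(dst)
    cross_sum = dot_sum = 0.0
    for (sx, sy), (dx, dy) in zip(src, dst):
        ax, ay = sx - cs[0], sy - cs[1]  # centred source point
        bx, by = dx - cd[0], dy - cd[1]  # centred target point
        cross_sum += ax * by - ay * bx
        dot_sum += ax * bx + ay * by
    theta = math.atan2(cross_sum, dot_sum)  # angle that best aligns the centred sets
    c, s = math.cos(theta), math.sin(theta)
    # Translation carries the rotated source centroid onto the target centroid.
    t = (cd[0] - (c * cs[0] - s * cs[1]), cd[1] - (s * cs[0] + c * cs[1]))
    return theta, t


def apply_rigid(theta: float, t: Point, p: Point) -> Point:
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1])


def fiducial_registration_error(src: List[Point], dst: List[Point], theta: float, t: Point) -> float:
    """Root-mean-square distance between transformed src points and dst points."""
    sq = [math.dist(apply_rigid(theta, t, p), q) ** 2 for p, q in zip(src, dst)]
    return math.sqrt(sum(sq) / len(sq))


# Usage: hypothetical image fiducials, and the same points as seen on the "patient".
image_pts = [(0.0, 0.0), (10.0, 0.0), (0.0, 5.0), (3.0, 7.0)]
patient_pts = [apply_rigid(0.3, (5.0, -2.0), p) for p in image_pts]
theta, t = rigid_register_2d(image_pts, patient_pts)
print(round(theta, 6), [round(v, 6) for v in t])  # 0.3 [5.0, -2.0]
```

A low fiducial registration error only shows a good fit at the fiducials themselves. It does not guarantee accuracy at a target such as a lesion, which is one reason locating targets in the camera view stays hard.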

Unlike building neural network models for everyday scenes, training models for medical robot applications is bottlenecked by the scarcity of labeled data. Real-world data are very expensive to obtain and do not scale. Synthetically generated images partly address this issue, but they can differ substantially from real images, so models trained on them risk overfitting to synthetic data.

#AI-Driven Development of Intelligent Medical Robots

The various applications of artificial intelligence (AI), including machine learning, machine vision, and haptic control, have significantly contributed to the development of robotic devices that can be used in various aspects of patient care, including diagnostics, surgery, rehabilitation, and limb replacement. The application of robotics in healthcare aims to ensure consistency, safety, and efficiency in treatment while allowing data collection to improve care and potentially increase access to underserved communities and populations affected by natural disasters.

The Best Example is Surgical Robots

Surgical robots come in various forms. Teleoperated devices, for example, allow surgeons to perform complex operations without hand tremor damaging tissue. Soft robotic devices for minimally invasive surgery are currently under development; these provide tactile feedback to surgeons and can operate and navigate safely in soft tissue. Semi-autonomous robots capable of performing surgical subtasks such as suturing and debridement are also in development.

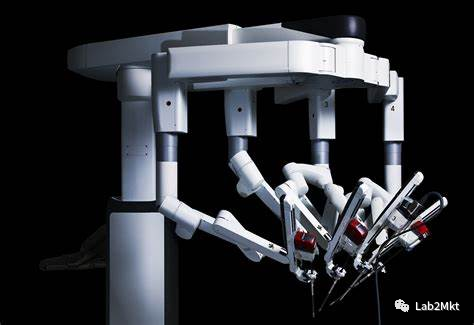

▲ Da Vinci SP (Image Source: Intuitive Surgical)

This could lead to the emergence of fully autonomous surgical robots.

#Supervised Autonomy in Robot-Assisted Surgery and Remote Surgery

Every year, more than a million surgeries are performed with surgical robots. These robots are highly precise but not autonomous: they are entirely controlled by surgeons. This is because surgery leaves no room for error, and there are numerous rare but potentially dangerous edge cases in which a single failure could be fatal.

1. Considering the Complexity of Debridement Tasks

Surgeons can easily overlook small fragments, which can lead to infection. Surgical robots and imaging systems can systematically identify and remove debris under the close supervision of a surgeon, who can then catch any misidentified fragments. Supervised autonomous debridement has been achieved under laboratory conditions, but further research is needed to extend these results and evaluate them in vivo.

▲ Surgical Debridement Kit

2. Another Example is Surgical Suturing

Suturing requires evenly placed stitches to balance tissue tension. Supervised autonomous suturing can make suturing more consistent, thereby reducing healing time and scarring.

Researchers are investigating how to utilize supervised autonomous systems for suturing, where surgeons can touch the wound’s edges with instruments to outline the wound’s contour, and the system will calculate and display the optimal entry and exit points for the needle, ensuring uniform tension distribution across the entire wound.
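The planning step can be sketched in a few lines. This is a minimal illustration built on simplifying assumptions made here, not the researchers' algorithm:

- The traced wound contour is a 2D polyline.
- "Uniform tension" is approximated by spacing stitches evenly along the contour's arc length.
- Each entry/exit pair sits a fixed, hypothetical `bite` distance on either side of the wound, perpendicular to it.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def point_at_arclength(contour: List[Point], s: float) -> Tuple[Point, Point]:
    """Return (position, unit tangent) at arc length s along a polyline."""
    segments = [(a, b) for a, b in zip(contour, contour[1:]) if math.dist(a, b) > 0.0]
    for a, b in segments:
        seg = math.dist(a, b)
        if s <= seg:
            f = s / seg
            tangent = ((b[0] - a[0]) / seg, (b[1] - a[1]) / seg)
            return (a[0] + f * (b[0] - a[0]), a[1] + f * (b[1] - a[1])), tangent
        s -= seg
    # s past the end (e.g. rounding): clamp to the last point of the last segment.
    a, b = segments[-1]
    seg = math.dist(a, b)
    return b, ((b[0] - a[0]) / seg, (b[1] - a[1]) / seg)


def plan_sutures(contour: List[Point], n_stitches: int, bite: float):
    """Evenly spaced (entry, exit) needle points straddling the traced wound."""
    total = sum(math.dist(a, b) for a, b in zip(contour, contour[1:]))
    spacing = total / n_stitches
    plan = []
    for i in range(n_stitches):
        # Stitch centres at the middle of each equal-length interval.
        (px, py), (tx, ty) = point_at_arclength(contour, (i + 0.5) * spacing)
        nx, ny = -ty, tx  # unit normal to the wound, pointing to its left
        entry = (px + bite * nx, py + bite * ny)
        exit_point = (px - bite * nx, py - bite * ny)
        plan.append((entry, exit_point))
    return plan


# Usage: an L-shaped wound traced as three points (hypothetical units: mm).
for entry, exit_point in plan_sutures([(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)], 4, 3.0):
    print(entry, exit_point)
```

Equal arc-length spacing is only a stand-in. A system aiming at genuinely uniform tension might also weigh local tissue thickness or wound-edge geometry.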

Initial results have been demonstrated in laboratory settings. Several issues must be resolved before clinical trials of autonomous suturing can be considered: safely grasping the needle during insertion, passing it back and forth between graspers, and managing suture slack.

▲ Robotic Autonomous Suturing

3. Supervised Autonomy Also Opens Doors for Remote Surgery

Experienced surgical experts can guide operations from afar, which has the potential to greatly increase access to skilled surgeons in remote areas or during natural disasters. However, experts cannot directly control every movement of the surgical instruments. The inherent delays in optical and telecommunication signals can make such direct control unstable. Supervised autonomous control can address this issue.
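The delay problem can be seen in a toy discrete-time model. This is an illustration under assumptions made here, not a model of any real telesurgery link. A tool position is corrected toward a target with a proportional gain, but the controller only sees the position from `delay` steps ago. With the same hypothetical gain, the loop converges when feedback is immediate, as when a local autonomous controller closes it at the robot. It diverges when feedback is delayed, as when a remote operator closes it across a network.

```python
from collections import deque


def final_error(gain: float, delay: int, steps: int = 200, target: float = 1.0) -> float:
    """Simulate x[k+1] = x[k] + gain * (target - x[k - delay]) starting from x = 0.

    Returns the absolute tracking error after `steps` updates.
    """
    # history[0] is the (possibly stale) position the controller sees this step.
    history = deque([0.0] * (delay + 1), maxlen=delay + 1)
    x = 0.0
    for _ in range(steps):
        x += gain * (target - history[0])
        history.append(x)  # maxlen drops the oldest sample automatically
    return abs(target - x)


# Same hypothetical gain, two feedback paths.
local_loop = final_error(gain=0.5, delay=0)   # loop closed at the robot
remote_loop = final_error(gain=0.5, delay=5)  # loop closed across a delayed link
print(f"local: {local_loop:.2e}, remote: {remote_loop:.2e}")
```

For this recurrence, the loop is stable only when the gain is below 2·cos(dπ/(2d+1)) for a delay of d steps. That bound is about 0.28 for d = 5, so a gain that works locally fails remotely. Supervised autonomy sidesteps the problem: only high-level commands cross the network, and the fast feedback loop stays local.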

In this new approach to remote surgery, surgical subtasks are controlled locally and monitored remotely. Remote surgery has been demonstrated over dedicated high-speed optical fiber networks, and researchers are actively exploring it, but it has not yet been approved for clinical use.

In recent years, advances in sensors and artificial intelligence have driven major progress in robotic technology, creating many opportunities for AI in the operating room. AI can be used to enhance digital camera images and to answer surgeons' spoken questions during surgery. Researchers worldwide are exploring how supervised autonomy can enhance robot-assisted surgery and remote surgery.

As the original patents for surgical robot technology expire, new commercial surgical robot systems are increasing the diversity of hardware and interfaces. This competition is driving the commercial development of new features, and supervised autonomous operations are likely to be applied clinically within the next decade.

#Soft Robotics Technology for Minimally Invasive Surgery

Over the past few decades, robotic-assisted minimally invasive surgery (RAMIS) has made significant progress. RAMIS systems use slender linear instruments to perform surgery through small incisions in the patient’s skin.

Robots simplify minimally invasive surgery by:

● Filtering out surgeons’ manual tremors

● Improving overall ergonomics

● Restoring three-dimensional (3D) views that manual minimally invasive surgery typically cannot achieve.
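Tremor filtering, the first item above, can be illustrated with the simplest possible low-pass filter. The sketch below makes three assumptions:

- The filter is first-order.
- The cutoff is a hypothetical 2 Hz.
- It is applied to hand positions sampled at 1 kHz.

Physiological tremor lies at higher frequencies (roughly 8 to 12 Hz), so it is attenuated while slower, deliberate motion passes through. The article does not describe the filters that commercial systems actually use.

```python
import math
from typing import List


def lowpass(samples: List[float], cutoff_hz: float, sample_rate_hz: float) -> List[float]:
    """First-order IIR low-pass: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)  # equivalent RC time constant
    alpha = dt / (rc + dt)
    out: List[float] = []
    y = samples[0] if samples else 0.0  # start at the first sample to avoid a jump
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


def peak(signal: List[float]) -> float:
    return max(abs(v) for v in signal)


# Usage: 2 s of a slow 0.5 Hz movement versus a 10 Hz tremor, both of amplitude 1.
fs = 1000.0
t = [n / fs for n in range(2000)]
slow = [math.sin(2 * math.pi * 0.5 * ti) for ti in t]
tremor = [math.sin(2 * math.pi * 10.0 * ti) for ti in t]
# Compare peaks over the last second, after the start-up transient has decayed.
print(round(peak(lowpass(slow, 2.0, fs)[1000:]), 2))    # most of the movement is kept
print(round(peak(lowpass(tremor, 2.0, fs)[1000:]), 2))  # the tremor is strongly reduced
```

A single-pole filter trades tremor suppression for a small lag in the filtered motion. That is why the cutoff has to sit well below tremor frequencies but above the speed of intended movements.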

Additionally, RAMIS can generate large amounts of data to enhance safety and perform some autonomous tasks. Although some RAMIS platforms have been successful, such as the Da Vinci surgical system for prostatectomy and abdominal corrective surgery, these systems are often limited by their rigid component designs, making it difficult to access certain areas of the body and potentially leading to tissue damage.

▲ Da Vinci Surgical Robot

Soft robotics technology is a promising avenue for developing more flexible and adaptable surgical robots, possessing the necessary dexterity and stiffness modulation capabilities to perform surgeries safely. The main feature of soft robots is the use of deformable, bendable, contractible, and stiffness-changing materials, pushing the paradigm of robotic surgery towards safer and softer directions.

▲ Magnetically Controlled Soft Guidewire Robot

These robots can be used in various parts of the body, including the ear, abdomen, and chest, for both diagnostic and interventional purposes. For instance, a fluid-driven soft robotic system has been developed to improve patient comfort during ear treatment and to safely guide needles to the desired injection sites. Diagnosis of gastrointestinal pathology is another significant application of soft robotics. These tissues are flexible and collapsible, so the robot must switch between soft and stiff operating modes. Recently, a capsule robot for endoscopy was demonstrated that uses eversion-based navigation and soft shape-changing mechanisms.

Between 2012 and 2015, the EU-funded STIFF-FLOP project (a stiffness-controllable, flexible, and learnable manipulator for surgical operations) explored soft robotics for RAMIS. The resulting soft robotic system is made of biocompatible silicone rubber and is pneumatically actuated.

Many technical challenges remain. In traditional surgical robots, motors move the robot's joints directly or via tendons, and the links and end effectors are rigid components that do not deform during operation. Soft robots, by contrast, deform as they move. Their motion is therefore much harder to model, which can reduce positional accuracy, a potentially critical issue in surgery. To overcome this challenge, advanced strategies based on AI, ML, and data-driven control are being developed to handle the highly nonlinear motion of soft robots.
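As a deliberately simple instance of data-driven control, the sketch below builds a lookup table with interpolation. It stands in for the learned models the article refers to and is not any of the methods it cites. The steps are:

1. Calibrate a hypothetical pneumatic soft actuator by recording its bending angle over a range of pressures.
2. Invert that data with piecewise-linear interpolation to choose the pressure for a desired angle.

The actuator function is invented for illustration. In practice, measurements from the real robot would replace it.

```python
import bisect
import math
from typing import Callable, List, Tuple


def hypothetical_actuator(pressure_kpa: float) -> float:
    """Invented nonlinear plant: bending angle (degrees) as a function of pressure."""
    return 90.0 * (1.0 - math.exp(-pressure_kpa / 40.0))


def calibrate(plant: Callable[[float], float], pressures: List[float]) -> List[Tuple[float, float]]:
    """Record (angle, pressure) pairs, sorted by angle."""
    return sorted((plant(p), p) for p in pressures)


def pressure_for_angle(table: List[Tuple[float, float]], desired_angle: float) -> float:
    """Data-driven inverse model: interpolate pressure between calibration samples."""
    angles = [a for a, _ in table]
    if desired_angle <= angles[0]:
        return table[0][1]   # clamp below the calibrated range
    if desired_angle >= angles[-1]:
        return table[-1][1]  # clamp above the calibrated range
    i = bisect.bisect_left(angles, desired_angle)
    (a0, p0), (a1, p1) = table[i - 1], table[i]
    return p0 + (p1 - p0) * (desired_angle - a0) / (a1 - a0)


# Usage: calibrate every 5 kPa from 0 to 200 kPa, then request a 45-degree bend.
table = calibrate(hypothetical_actuator, [5.0 * k for k in range(41)])
p = pressure_for_angle(table, 45.0)
print(f"{p:.1f} kPa -> {hypothetical_actuator(p):.1f} deg")
```

The model never needs an analytic description of the actuator, only samples of its behavior. That is the essential idea behind data-driven control, which more capable learners (regression models, neural networks) extend to many inputs and outputs.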

Recent advances in computing power, computer vision, ML, real-time modeling, and simulation could make soft robots feasible in surgery without cumbersome teleoperation or extensive surgeon training. Soft robots for RAMIS are unlikely to replace mature surgical robots outright. Rather, soft-robot design rules based on morphological computation will permeate the traditional technologies used for RAMIS.

#Bringing Highly Autonomous Surgical Robot Technology into Clinical Practice

Autonomous surgical robots are the future surgeons. They can standardize treatment outcomes independent of the surgeon’s experience and skills; they integrate AI and dexterous tools, capable of performing tasks with greater consistency and accuracy than specialized surgeons; they can also provide necessary care in environments without surgeons, such as human spaceflight.

Such robots could make high-quality surgery ubiquitous and minimize the need for corrective surgery, thereby reducing medical costs and democratizing healthcare. Although most of these systems have not yet received clinical approval, they are expected to play a role in the future.

Generally, autonomous surgical robots are classified according to their levels of autonomy (LoA) and employ algorithms responsible for enhancing surgical decision-making capabilities. As the level of autonomy increases, the complexity of the robot’s role in surgery and the amount of integrated AI in the system also rise.

Levels of autonomy (LoA) for medical robots range from pure teleoperation to full autonomy.

LoA includes:

● LoA 0: No autonomy (e.g., pure teleoperation: the Da Vinci surgical system is remotely operated by a surgeon, with no AI involved in the surgery);

● LoA 1: Robot assistance (the human retains continuous control while the robot provides some mechanical guidance or assistance through virtual fixtures or active constraints; e.g., the EndoAssist camera holder);

● LoA 2: Task autonomy (the robot autonomously performs specific tasks, initiated by humans through discrete control rather than continuous control);

● LoA 3: Conditional autonomy (a system generates task strategies but relies on humans to choose from different strategies or approve autonomously chosen strategies);

● LoA 4: Highly autonomous (the robot makes medical decisions but must be supervised by a qualified physician);

● LoA 5: Fully autonomous (a robot surgeon that can perform the entire surgery without human intervention).
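Software that must behave differently at each level (for example, deciding when to wait for a surgeon's approval) can represent the list above as an ordered enumeration. This is a minimal sketch. The helper functions and role descriptions are hypothetical, paraphrased from the list for illustration.

```python
from enum import IntEnum


class LoA(IntEnum):
    """Levels of autonomy for medical robots, in the order listed above."""
    NO_AUTONOMY = 0           # pure teleoperation
    ROBOT_ASSISTANCE = 1      # human in continuous control; robot guides or constrains
    TASK_AUTONOMY = 2         # robot performs a specific, human-initiated task
    CONDITIONAL_AUTONOMY = 3  # robot proposes strategies; human selects or approves
    HIGH_AUTONOMY = 4         # robot makes decisions under physician supervision
    FULL_AUTONOMY = 5         # entire procedure without human intervention


def human_in_continuous_control(level: LoA) -> bool:
    """Hypothetical helper: the surgeon drives the tools moment to moment."""
    return level <= LoA.ROBOT_ASSISTANCE


def needs_strategy_approval(level: LoA) -> bool:
    """Hypothetical helper: a human must choose or approve the robot's strategy."""
    return level == LoA.CONDITIONAL_AUTONOMY


# Usage: list each level with who is in continuous control.
for level in LoA:
    print(level.value, level.name, human_in_continuous_control(level))
```

`IntEnum` keeps the levels ordered, so a comparison such as `level <= LoA.ROBOT_ASSISTANCE` reads like the taxonomy itself.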

At the lower levels, robots use algorithms to restrict tool movements. At higher levels of autonomy, surgeons relinquish control to the robot, which uses AI to perform surgical tasks.
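Motion-restricting algorithms of this kind include virtual fixtures (active constraints). The minimal sketch below shows one kind, a forbidden-region fixture. It assumes a hypothetical spherical safety zone around a critical structure. Any commanded tool-tip position that would enter the sphere is projected back onto its surface before being sent to the robot.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def forbidden_region_fixture(command: Vec3, center: Vec3, radius: float) -> Vec3:
    """Keep the commanded tool tip outside a sphere around a protected structure."""
    offset = [c - o for c, o in zip(command, center)]
    dist = math.sqrt(sum(d * d for d in offset))
    if dist >= radius:
        return command  # already outside: pass the command through unchanged
    if dist == 0.0:
        # Degenerate case: no direction to push along, so choose +x arbitrarily.
        return (center[0] + radius, center[1], center[2])
    scale = radius / dist
    # Project radially outward onto the sphere surface.
    return (
        center[0] + offset[0] * scale,
        center[1] + offset[1] * scale,
        center[2] + offset[2] * scale,
    )


# Usage: a hypothetical 5 mm keep-out sphere centred at the origin.
print(forbidden_region_fixture((10.0, 0.0, 0.0), (0.0, 0.0, 0.0), 5.0))  # (10.0, 0.0, 0.0)
print(forbidden_region_fixture((1.0, 0.0, 0.0), (0.0, 0.0, 0.0), 5.0))   # (5.0, 0.0, 0.0)
```

Projecting positions is the simplest form of a fixture. Practical implementations often act on commanded velocities or forces instead, and render the constraint to the surgeon through the haptic interface.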

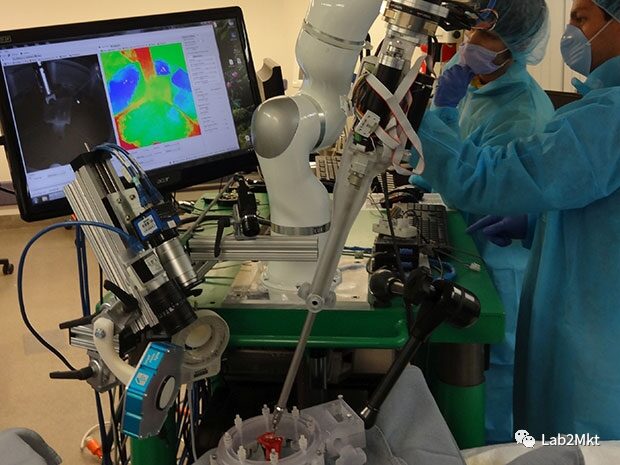

At LoA 2 (task autonomy), robots can cut tissue autonomously after learning from observation, as demonstrated on the Da Vinci Research Kit (dVRK). At LoA 3 (conditional autonomy), the Smart Tissue Autonomous Robot (STAR) uses ML to track soft-tissue deformation while executing a suturing plan.

LoA 4 (highly autonomous) and LoA 5 (fully autonomous) surgical systems are not feasible with today's technology, although they are on the horizon. To get there, robots must become better at detecting, handling, and responding to unpredictable changes in the surgical field.

In soft-tissue surgery these challenges are amplified further, because deep learning and scene understanding are needed to predict and respond to constantly changing surgical scenarios. The resulting technology will also face stricter regulation, as it remains unclear how the FDA or other medical device regulators will oversee a system capable of practicing medicine.

To gain public trust, autonomy may be introduced gradually, one subtask at a time, such as tissue recognition, endoscope control, and suturing. These subtasks could then be combined into complete procedures, paving the way for autonomous robotic surgery in future operating rooms.

#Increasing Importance of Rehabilitation Robots

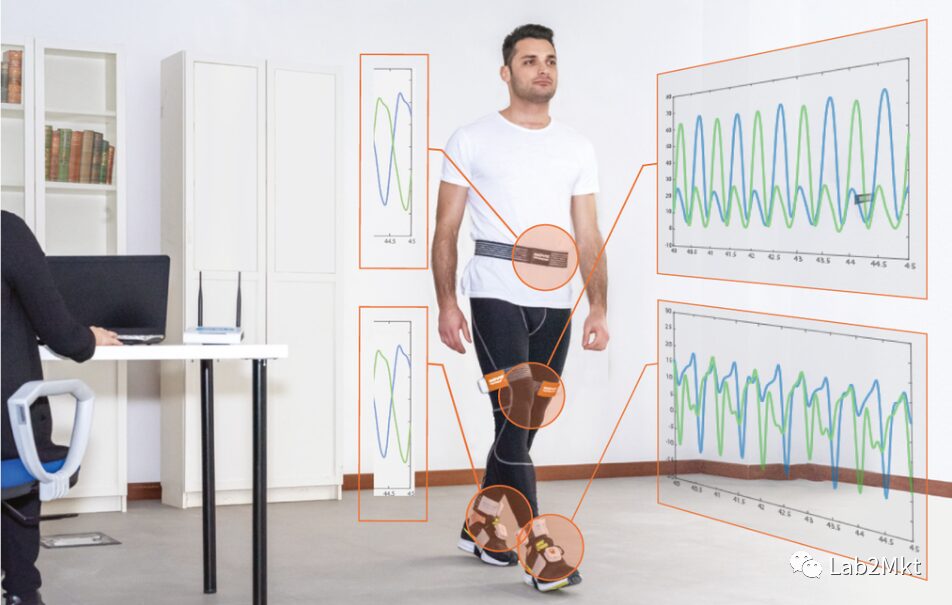

Rehabilitation therapy needs to extend from clinics to communities and homes, providing continuous care for patients. To achieve this, engineers, clinicians, and end-users have developed wearable robots that enable individuals with mobility impairments to practice and experience improved movement.

Although these systems have historically been viewed as assistive technologies, current work indicates that portable autonomous robots, and the data they capture, could enable fundamentally different approaches to rehabilitation. In one envisioned future, wearable robots would first be used in the clinic, relieving clinicians of physical burden while characterizing each patient's specific impairments. They would then be sent home with patients to track rehabilitation using personalized AI algorithms.

In addition to new mechanical designs, learning-based estimation algorithms can now use wearable sensors to detect and quantify movement, and control strategies can now bring users into the control loop to provide personalized interventions. Wearable, cloud-connected robots will usher in a data-driven era of physical telerehabilitation. Their integrated sensing will provide crucial biomechanical and physiological feedback to clinicians and users, much like smart fitness trackers.

However, developing generalizable data-driven AI algorithms for clinical populations is particularly complex, because data from any single individual are very limited and individuals differ significantly. The lack of ground-truth data in real-world environments also complicates validation of these methods. If these obstacles are overcome, patient and robot data could be combined with ML techniques to determine who is most likely to benefit from a specific device.

Such stratification would allow interventions to be prescribed effectively, minimizing costs for clinicians and patients. The field would then have new opportunities to investigate how longitudinal data can inform new ways of personalizing training parameters, such as optimizing biofeedback and device controllers. Real-world data from wearable robots will inform the design and experimental validation of computational models of human-robot interaction during rehabilitation. Ultimately, this should lead to adaptive systems that collaborate better with end users.

#AI Bionic Robot Prosthetics

Advanced robotic prosthetics, such as dexterous hands and powered prosthetic limbs, are bringing about a paradigm shift in restoring mobility to people with limb loss. These modern prosthetics embed AI in their operation so that they can adapt to the user's intentions, environment, and physical condition.

This is the symbiosis between humans and prosthetics.

In such a symbiosis, the intelligent prosthetic and its user operate seamlessly as one system in daily life. For example, AI has enabled neural control of prosthetics. Methods ranging from simple linear classifiers to deep learning can recognize the user's intended joint movements (such as wrist or knee flexion or extension) or grip strength.
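At the simple end of that spectrum, intent recognition can be sketched as feature extraction followed by a linear decision rule. The example below works as follows:

- It assumes hypothetical two-channel EMG windows.
- It computes each channel's mean absolute value, a common hand-crafted EMG feature.
- It classifies with a nearest-centroid rule, whose decision boundaries (perpendicular bisectors between class means) are linear.

Practical myoelectric systems more often use linear discriminant analysis or deep networks. The feature values in the usage lines are invented.

```python
from statistics import mean
from typing import Dict, List, Sequence


def mav_features(window: Sequence[Sequence[float]]) -> List[float]:
    """Mean absolute value of each EMG channel in one analysis window."""
    return [mean(abs(v) for v in channel) for channel in window]


class NearestCentroidIntent:
    """Linear intent classifier: assign the class whose mean feature vector is closest."""

    def fit(self, features: List[List[float]], labels: List[str]) -> "NearestCentroidIntent":
        groups: Dict[str, List[List[float]]] = {}
        for x, label in zip(features, labels):
            groups.setdefault(label, []).append(x)
        # Column-wise mean of each class's feature vectors.
        self.centroids = {lab: [mean(col) for col in zip(*rows)] for lab, rows in groups.items()}
        return self

    def predict(self, x: Sequence[float]) -> str:
        def sq_dist(label: str) -> float:
            return sum((a - b) ** 2 for a, b in zip(x, self.centroids[label]))
        return min(self.centroids, key=sq_dist)


# Usage with invented MAV features (channel 0: flexor, channel 1: extensor).
X = [[0.8, 0.1], [0.9, 0.2], [0.1, 0.9], [0.2, 0.8], [0.05, 0.05], [0.1, 0.1]]
y = ["flex", "flex", "extend", "extend", "rest", "rest"]
clf = NearestCentroidIntent().fit(X, y)
print(clf.predict([0.85, 0.15]))  # flex
```

In use, `mav_features` would be computed on each incoming sensor window and passed to `predict`. The predicted class would then select the joint motion to drive.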

Human hands can dexterously interact with objects of different sizes and materials, so symbiotic prosthetics should also adapt to their environment. Machine vision has been used to give prosthetics this environmental adaptability.

Robotic hand SPs (symbiotic prosthetics) can identify the object to be grasped, allowing the prosthetic arm to prepare the appropriate wrist posture and grasp pattern or force. Similarly, visual sensors mounted on prosthetic legs can identify the terrain ahead of the user, so that prosthetic control adjusts automatically for seamless transitions between terrains.

▲ Robotic Hand SP

Because amputees vary widely in physical condition and movement impairment, symbiotic prosthetics need to be tuned to each user. In current clinical practice, personalized control of robotic lower-limb prosthetics relies on manual, heuristic tuning, which is imprecise as well as time-consuming and labor-intensive. To automate this process, researchers have developed algorithms that tune prosthetic control with the human in the loop to provide personalized walking assistance. These include data-driven optimization methods such as Bayesian optimization.
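Human-in-the-loop tuning can be sketched with a small Bayesian optimization loop. Everything specific here is an assumption for illustration:

- a single normalized control parameter in [0, 1];
- a made-up cost function standing in for a measured outcome such as the user's effort;
- a Gaussian-process surrogate with an RBF kernel;
- a lower-confidence-bound rule for choosing the next setting to try.

In a real study, each cost evaluation would be a walking trial with the user.

```python
import math
from typing import Callable, List, Tuple

Matrix = List[List[float]]


def rbf(a: float, b: float, length: float = 0.2) -> float:
    """Squared-exponential kernel with unit prior variance (length scale assumed)."""
    return math.exp(-((a - b) ** 2) / (2.0 * length ** 2))


def cholesky(A: Matrix) -> Matrix:
    """Lower-triangular L with L @ L^T == A, for a symmetric positive-definite A."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(A[i][i] - s) if i == j else (A[i][j] - s) / L[j][j]
    return L


def forward(L: Matrix, b: List[float]) -> List[float]:
    """Solve L x = b by forward substitution."""
    x: List[float] = []
    for i, bi in enumerate(b):
        x.append((bi - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x


def backward(L: Matrix, b: List[float]) -> List[float]:
    """Solve L^T x = b by back substitution."""
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def bayes_opt(cost: Callable[[float], float], n_trials: int = 12,
              kappa: float = 2.0, noise: float = 1e-6) -> Tuple[float, float]:
    """Minimise cost over a grid on [0, 1] with a GP surrogate and a lower confidence bound."""
    grid = [i / 100 for i in range(101)]  # candidate parameter settings
    xs = [0.0, 1.0]                       # two initial trials at the extremes
    ys = [cost(x) for x in xs]
    for _ in range(n_trials):
        mu = sum(ys) / len(ys)
        sd = math.sqrt(sum((y - mu) ** 2 for y in ys) / len(ys)) or 1.0
        zs = [(y - mu) / sd for y in ys]  # standardised costs
        K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
             for i, a in enumerate(xs)]
        L = cholesky(K)
        alpha = backward(L, forward(L, zs))  # K^-1 z

        def lower_bound(g: float) -> float:
            k = [rbf(g, a) for a in xs]
            mean = sum(ki * ai for ki, ai in zip(k, alpha))
            v = forward(L, k)
            std = math.sqrt(max(1.0 - sum(t * t for t in v), 1e-12))
            return mean - kappa * std  # optimistic (low) estimate of the cost

        x_next = min((g for g in grid if g not in xs), key=lower_bound)
        xs.append(x_next)
        ys.append(cost(x_next))  # in practice: one trial with the user
    best = min(range(len(xs)), key=ys.__getitem__)
    return xs[best], ys[best]


# Usage: an invented cost curve whose minimum lies at a setting of 0.62.
def trial_cost(setting: float) -> float:
    return 1.0 + (setting - 0.62) ** 2


best_setting, best_cost = bayes_opt(trial_cost)
print(f"best setting {best_setting:.2f}, cost {best_cost:.4f}")
```

The appeal of Bayesian optimization here is sample efficiency. Each evaluation is a tiring trial for the user, so the surrogate model decides which single setting is most informative to try next, rather than sweeping the whole range.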

Furthermore, whether human users cognitively accept and trust AI-supported prosthetics is also an unresolved issue. These challenges should guide future research efforts to make AI-supported symbiotic robotic prosthetics multifunctional, safe to use, and cognitively acceptable to amputee users.

Well-Known Surgical Robot Companies in China:

Tianzhihang | Kangnuo Siten | Shuri | Rosenbot

Aibo Medical Robot | Yuanhua Intelligence | Bornsi

Jingfeng Medical | Jianjia
