This article pays tribute to virtualization by tracing its history from the 1960s to today, examining some currently popular IT trends, and considering virtualization's impact.

The importance and application of virtualization extend far beyond virtual machines.

No development in information technology over the past 60 years has been as valuable as virtualization. Many IT professionals equate virtualization with virtual machines (VMs) and their associated hypervisors and operating systems, but that is only the tip of the iceberg. An increasingly broad set of virtualization technologies, strategies, and capabilities is redefining the key elements of IT in organizations worldwide.

Definition of Virtualization

Broadly defined, virtualization is the science of making an object or resource that is simulated or emulated in software functionally equivalent to its physical implementation.

In other words, we use abstraction to make software look and behave like hardware, which brings significant advantages in flexibility, cost, scalability, overall performance, and breadth of application. Virtualization thus delivers real functionality by substituting the flexibility and convenience of software-based capabilities and services for the equivalent implementation in hardware.

Virtual Machines (VM)

Virtual machines date back to a small number of mainframes in the 1960s, most notably the IBM 360/67, and became common in the mainframe world in the 1970s. With the arrival of the Intel 386 in 1985, virtual machines found a home in the microprocessors at the heart of personal computers. Modern virtual machines, implemented in microprocessors with the necessary hardware support and aided by both hypervisors and operating-system-level implementations, are crucial to computational performance because they capture machine cycles that would otherwise go unused.

Virtual machines also provide additional security, integrity, and convenience, and they do so without significant computational overhead. Furthermore, their capabilities can be extended with emulation, through interpreters (such as the Java Virtual Machine) or even full emulators.
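To illustrate what an interpreter-style virtual machine does, here is a minimal Python sketch of a made-up stack machine. The opcodes (`PUSH`, `ADD`, `MUL`) and the `run` function are hypothetical and chosen only for illustration; they are not the Java Virtual Machine's actual bytecode.

```python
def run(program):
    """Execute a list of (opcode, operand) pairs on a tiny stack machine."""
    stack = []
    for op, arg in program:
        if op == "PUSH":
            stack.append(arg)
        elif op == "ADD":
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == "MUL":
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        else:
            raise ValueError(f"unknown opcode: {op}")
    # The value left on top of the stack is the program's result.
    return stack.pop()


# Hypothetical program computing (2 + 3) * 4
program = [("PUSH", 2), ("PUSH", 3), ("ADD", None), ("PUSH", 4), ("MUL", None)]
result = run(program)  # result is 20
```

The program never touches real hardware registers; it only sees the abstract machine that `run` provides, which is the same separation a full emulator offers at a much larger scale.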

Running Windows under macOS? Easy. Running Commodore 64 code on your modern Windows PC? No problem.

Most importantly, software running on a virtual machine is unaware of this fact. Even an operating system originally designed for bare metal treats the virtual machine as its “hardware” platform. This is the most critical element of virtualization: the isolation of information systems provided by APIs and protocols.

In fact, the roots of virtualization can be traced to the time-sharing era, which also began to emerge in the late 1960s. Mainframes were certainly not portable, so rapid improvements in the quality and availability of dial-up and leased telephone lines, along with advances in modem technology, allowed a mainframe to be virtually present at a remote terminal (often a simple alphanumeric one). That was, indeed, a virtual machine. Thanks to technological advances and the affordability of microprocessors, this model of computing led directly to the personal computers of the 1980s, alongside data communication that moved from telephone lines to local networks and ultimately to continuous access to the internet.

Virtual Memory

The concept of virtual memory also developed rapidly in the 1960s and rivals virtual machines in importance. The mainframe era was characterized by the high cost of core memory, and mainframes with more than one megabyte of memory were still rare in the 1970s. Like virtual machines, virtual memory was enabled by relatively minor additions to hardware and instruction sets. These allow portions of memory, commonly called segments and/or pages, to be written out to auxiliary storage and loaded back from disk dynamically when those blocks are needed.

For example, a single real megabyte of RAM on the IBM 360/67 could support the entire 24-bit address space (16 MB) defined by the computer's architecture, and with a proper implementation each virtual machine could have its own complete virtual memory. As a result of these innovations, hardware designed for a single program or operating system could be shared by multiple users, even if they ran different operating systems or required more memory than was physically installed. The advantages of virtual memory, like those of virtual machines, are numerous: separation of users and applications, enhanced security and data integrity, and significant improvements in ROI. Sound familiar?
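The paging mechanism described above can be sketched in a few lines of Python. This is a simplified model, not the IBM 360/67's actual design: the 4 KB page size, the FIFO replacement policy, and the names `PagedMemory` and `translate` are assumptions made for illustration.

```python
from collections import OrderedDict

PAGE_SIZE = 4096                # assumed page size in bytes
VIRTUAL_BITS = 24               # 24-bit address space, i.e. 16 MB
PHYSICAL_BYTES = 1 << 20        # 1 MB of real memory
NUM_FRAMES = PHYSICAL_BYTES // PAGE_SIZE  # 256 physical page frames


class PagedMemory:
    """Toy demand-paging model with FIFO replacement (an assumed policy)."""

    def __init__(self, num_frames=NUM_FRAMES):
        self.page_table = OrderedDict()   # virtual page -> frame, in load order
        self.free_frames = list(range(num_frames))
        self.page_faults = 0

    def translate(self, vaddr):
        """Map a virtual address to a physical address, loading on demand."""
        if not 0 <= vaddr < (1 << VIRTUAL_BITS):
            raise ValueError("address outside the 24-bit virtual space")
        page, offset = divmod(vaddr, PAGE_SIZE)
        if page not in self.page_table:
            self.page_faults += 1
            if self.free_frames:
                frame = self.free_frames.pop(0)
            else:
                # Evict the oldest resident page; a real system would write
                # it to auxiliary storage before reusing the frame.
                _, frame = self.page_table.popitem(last=False)
            self.page_table[page] = frame
        return self.page_table[page] * PAGE_SIZE + offset


mem = PagedMemory()
paddr = mem.translate(0xFFFFFF)  # last byte of the 16 MB virtual space
```

Even though only 256 frames of real memory exist, any of the 4096 virtual pages can be addressed; the cost is a page fault whenever a page is not currently resident.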

Virtual Desktops

Once machines and memory had been virtualized and brought to affordable microprocessors and personal computers, the next step was desktop virtualization, which delivers both single-user and shared applications. Here we return to the time-sharing model mentioned earlier, but in this case PC desktops are simulated on servers, and client software presents graphics and other user-interface elements over a network connection, typically on inexpensive, easy-to-manage, and secure thin-client devices. Today every leading operating system supports this functionality in some form, including VDI, the X Window System, and very popular (and free) products like VNC.

Virtual Storage

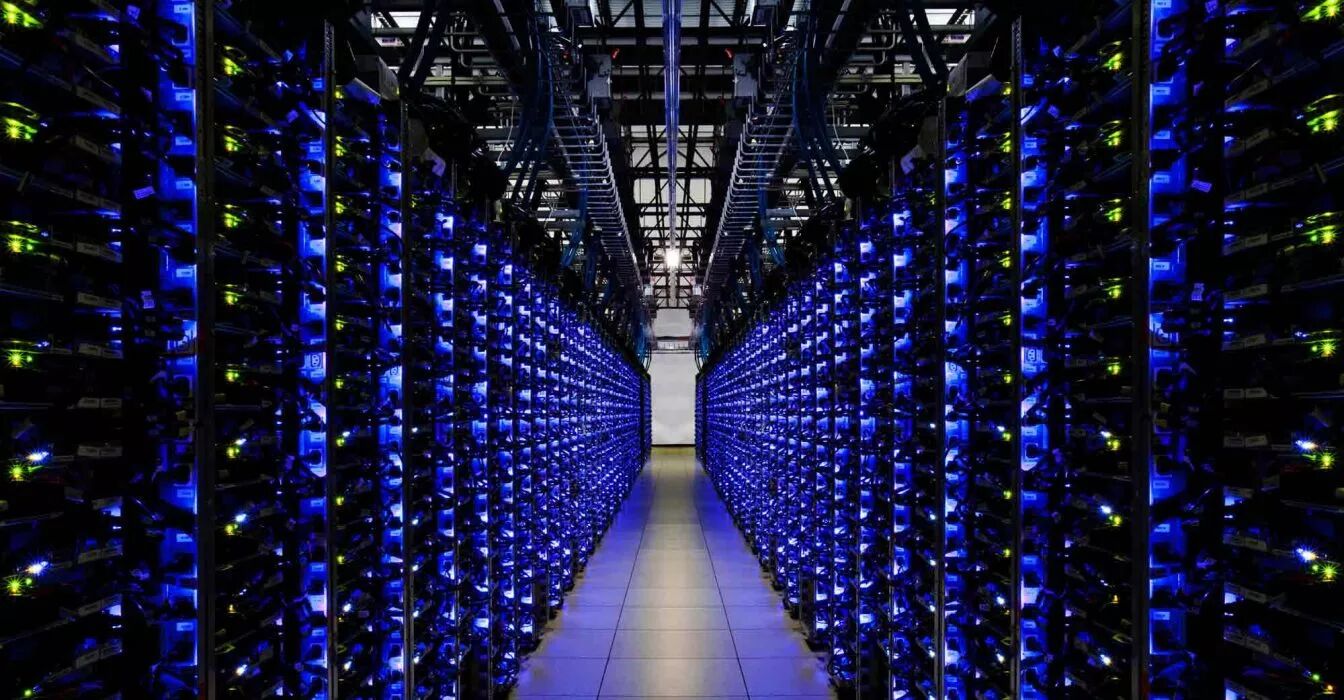

The next significant achievement, and a very popular one today, is the virtualization of processors, storage, and applications in the cloud: the ability to draw on whatever resources are needed at any time, with little or no effort from IT staff to build out capacity. Savings in physical space, capital costs, maintenance, downtime, time-consuming troubleshooting, severe performance problems and outages, and many other costs can offset the cost of cloud-based services. Storage virtualization, for example, can offer many opportunities here.

With wired and wireless networks delivering data rates of 1 Gbit/s and above, cloud storage will be adopted more and more widely, not only for backup but also as primary storage. Such rates are already available with Ethernet and 802.11ac Wi-Fi, and they are expected from one of the most anticipated high-speed networks, 5G, which is currently being tested in many countries.

Virtual Networks

Virtualization is becoming increasingly popular in the networking world as well, where “Network as a Service” (NaaS) is now a promising and popular option in many cases. This trend will only be amplified by the continued rise of Network Function Virtualization (NFV), which is certain to be of particular interest to operators and carriers, especially in mobile communications. Notably, network virtualization gives mobile operators real opportunities to expand their business and increase bandwidth, thereby enhancing the value and appeal of their services to enterprise customers. In the coming years, more organizations may deploy NFV on their own, or even in hybrid networks (another attractive factor for customers). Meanwhile, VLANs (802.1Q) and Virtual Private Networks (VPNs) have already made significant contributions to the use of modern virtualization methods.
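As a concrete look at one of these mechanisms, an 802.1Q VLAN is carried in a 4-byte tag inserted into the Ethernet frame: a 16-bit Tag Protocol Identifier (0x8100) followed by a 3-bit priority, a 1-bit drop-eligible indicator, and a 12-bit VLAN ID. The Python sketch below packs and unpacks that tag; the helper names `build_vlan_tag` and `parse_vlan_tag` are hypothetical.

```python
import struct

TPID_8021Q = 0x8100  # Tag Protocol Identifier that marks an 802.1Q tag


def build_vlan_tag(vlan_id, priority=0, dei=0):
    """Return the 4-byte 802.1Q tag for the given fields."""
    # IDs 0 and 4095 are reserved by the standard but still fit in 12 bits.
    if not 0 <= vlan_id <= 0xFFF:
        raise ValueError("VLAN ID must fit in 12 bits")
    if not 0 <= priority <= 7:
        raise ValueError("priority must fit in 3 bits")
    if dei not in (0, 1):
        raise ValueError("DEI must be 0 or 1")
    tci = (priority << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)  # network byte order


def parse_vlan_tag(tag):
    """Decode a 4-byte 802.1Q tag into its fields."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return {"priority": tci >> 13, "dei": (tci >> 12) & 1, "vlan_id": tci & 0xFFF}


tag = build_vlan_tag(100, priority=5)  # bytes 81 00 a0 64
fields = parse_vlan_tag(tag)
```

Because the VLAN ID is only 12 bits wide, many logical networks can share one physical link simply by carrying different tag values.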

Cost Reduction through Virtualization

Even considering the many important functional benefits that virtualization can provide, the economics of large-scale virtualization remain front and center. The competitiveness of the rapidly developing cloud-services business model means that the traditional, labor-intensive operating costs borne by customer organizations will keep falling, as service providers build on their experience to develop new offerings that significantly cut costs, and as market competition pushes prices lower for end users.

In addition, reliability and fault tolerance are readily improved by using multiple cloud service providers in a fully redundant or hot-standby mode, which practically eliminates single points of failure. As you can see, many elements of IT capital expenditure have shifted to operating expenditure: most of the money goes not to adding equipment, capacity, and staff within the organization, but to service providers. Likewise, given the capabilities of modern microprocessors, improvements in system and architecture solutions, and the dramatic increase in the performance of local networks and WANs (including wireless), virtualization itself is not a paradigm shift, although it is often described as such.
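The redundancy argument made earlier can be expressed with simple arithmetic. Assuming providers fail independently (a strong assumption that real deployments may not satisfy), the chance that all of them are down at once is the product of their individual downtime probabilities. The function name and the 99% availability figures in this Python sketch are hypothetical.

```python
def combined_availability(availabilities):
    """Probability that at least one provider is up, assuming independence."""
    p_all_down = 1.0
    for a in availabilities:
        if not 0.0 <= a <= 1.0:
            raise ValueError("availability must be between 0 and 1")
        p_all_down *= 1.0 - a
    return 1.0 - p_all_down


# Two hypothetical providers, each available 99% of the time
both = combined_availability([0.99, 0.99])  # approximately 0.9999
```

Under these assumptions, adding a second provider cuts expected unavailability from 1% to roughly 0.01%, which is the sense in which redundancy reduces the risk of a single point of failure.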

As mentioned above, the significance of any form of virtualization lies in making IT processes more flexible, efficient, convenient, and productive across a wide range of capabilities.

Given that most cloud services in IT are built on virtualization strategies, one could argue that virtualization is the best option so far for an alternative operating model, one whose economic advantages will make traditional ways of working untenable.

The growth of virtualization in this area is driven by a significant economic transformation of IT operating models, which in turn stems from the commercialization of information technology.

In the early days of computing, our interest was focused on expensive and often overloaded hardware, such as mainframes. Their enormous cost motivated the first attempts at virtualization, as described above.

As hardware became cheaper, more powerful, and easier to use, the focus shifted to applications running in largely standardized and virtualized environments, from PCs to browsers.

The result of this evolution is what we see today. With computers and computing as the backbone of IT, we have turned our attention to processing information and to making it available anytime and anywhere. This “information-centric” evolution, driven by the mobile and wireless era, has given end users access to that information wherever they are.

What began as a search for ways to work more efficiently with slow and very expensive mainframes has led to virtualization becoming the primary strategy for the entire future of IT. No IT innovation has had as significant an impact as virtualization, and as we transition to virtualized cloud infrastructure, we are really just getting started on a global scale.

Original link:

https://dzone.com/articles/the-history-and-state-of-virtualization?utm_medium=feed&utm_source=feedpress.me&utm_campaign=Feed:%20dzone
