Computer memory, is the temporary storage area of a computer, which holds the data and instructions needed by the Central Processing Unit (CPU). The instructions, which refer to specific operations of the processor, are defined by the Instruction Set Architecture, which typically includes four types of instructions: arithmetic, logic, data movement, and control flow.

Before a program runs, it is loaded from the hard disk into memory, allowing the CPU to access the program directly. Memory is an indispensable module. The operating system and various software exist in memory in different forms, namely independent segments, which are divided into data segments and program segments (we will discuss this in detail in the future, but not this time).

However, before discussing memory, let’s first look at what a Multitasking Operating System is, as this concept is related to memory and involves virtual memory address spaces of different processes during multitasking. Alright, let’s get started.

1. Knowledge Foundation: Multitasking Operating Systems

A multitasking operating system is one that automatically shares available CPU time among multiple tasks.

This article vividly illustrates the difference between single-tasking and multitasking, stating that a single-core CPU can only be occupied by one process at a time, where a process can be seen as a single task. The “multitasking” in a multitasking operating system is actually a macro concept; for example, singing and eating can happen simultaneously, where one can take a bite of food while singing a line. During a certain time period, both eating and singing tasks are performed. In relation to the CPU, for a short period, both task A and task B are processed simultaneously, and due to the CPU’s fast processing speed, users feel as if multiple tasks are being done at the same time, hence the term multitasking operating system.

1.1 Two Implementations of Multitasking: Cooperative and Preemptive

Multitasking operating systems can be divided into various implementation methods. Based on how multitasking shares CPU time, they can be categorized into two types:

-

Cooperative multitasking: Tasks use the CPU until they voluntarily relinquish CPU control. This voluntary relinquishment can occur through system calls such as yields (which temporarily gives up the kernel space occupied by the current process, allowing other processes to execute, and after a brief pause, the current process continues execution) or exits. In this model, the process that relinquishes control is predetermined, as it is dependent on others voluntarily giving up their CPU usage. Examples of cooperative multitasking systems include pre-X MacOS or Windows 3.x, which are early systems. In some single-language cooperative multitasking systems, such as Oberon or Ruby, the compiler or interpreter periodically checks for control (periodically yield control), ensuring that programs run multi-threaded under operating systems similar to DoS. The key point you need to know is that the compiler or interpreter also verifies the control held by the code.

-

Preemptive multitasking: In preemptive multitasking systems, some tasks start and end without voluntarily obtaining or relinquishing the CPU. Instead, the operating system forcibly switches tasks due to one or more reasons, such as when a task consumes its allocated CPU time, or when a higher priority task requires the CPU. This switching is mandatory.

For the comparison of the two implementation methods, an example is given: multitasking systems before Windows 95 used cooperative multitasking, whereas now most systems use preemptive multitasking. In the latter, when a new process begins, the CPU usage is in the hands of that process during its time slice. When the time slice ends, the system reclaims the CPU for the next round of allocation, which means preemptive scheduling allocates based on task priority.

1.2 Comparison of Cooperative and Preemptive Scheduling

Returning to the scheduling strategies of preemptive and cooperative multitasking, further comparisons can be made, as described in the Slide Process and Thread Scheduling:

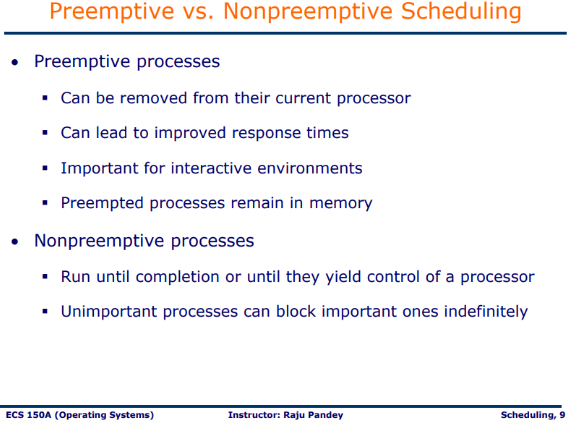

Figure 1: Preemptive vs. Non-preemptive Scheduling

Current mainstream operating systems are preemptive, such as Linux and Windows. Preemptive multitasking systems can be further subdivided: into preemptive task-based like Linux, and preemptive kernel-based like AmigaOS.

The biggest difference between preemptive and cooperative multitasking is that, as shown in the above slides, <span>Can</span><span>lead to improve response time</span>, <span>Unimportant</span><span>processes can block important ones indefinitely</span>. This highlights the advantages and disadvantages of both; cooperative multitasking can lead to an unimportant but time-consuming process blocking others. An example is provided: long ago, users of cooperative multitasking systems often experienced system crashes when multiple programs were opened, as one program could become “unresponsive” due to occupying the CPU for an extended period. To ensure smooth execution of programs, preemptive multitasking emerged.

Here is an article that compares the two in more detail:

Table 1: Preemptive Vs Non-Preemptive Scheduling

| Preemptive Scheduling | Non-Preemptive Scheduling |

|---|---|

| Resources are allocated according to the cycles for a limited time. | Resources are used and then held by the process until it gets terminated. |

| The process can be interrupted, even before completion. | The process is not interrupted until its life cycle is complete. |

| Starvation may be caused due to the insertion of priority processes in the queue. | Starvation can occur when a process with large burst time occupies the system. |

| Maintaining queue and remaining time needs storage overhead. | No such overheads are required. |

In fact, to determine whether it is Preemptive or Non-preemptive, one only needs to remember that Non-preemptive Scheduling allows a process to hold CPU resources until it terminates or is pushed to the waiting state.

Non-preemptive Scheduling is a CPU scheduling technique where the process takes the resource (CPU time) and holds it until the process gets terminated or is pushed to the waiting state. No process is interrupted until it is completed, and after that, the processor switches to another process.

As mentioned above, not only <span>gets terminated</span> but also <span>pushed to the waiting state</span>:

-

The first means completion or abnormal termination;

-

For the second, the

<span>waiting state</span>refers to the difference between<span>sleep</span>and<span>wait</span>functions.

1.3 Concepts: Time Slice and Task Priority

Since 1.1 mentioned time slice (which may not be written this way, but it is still a time slice) and task priority, let’s discuss time slice first.

1.3.1 Concept: Time Slice

The time slice comes from multi-threaded execution (where each task corresponds to a thread). When multitasking runs on the CPU, it appears that multiple tasks are running simultaneously, but in reality, the CPU allocates fixed time to different programs, referred to as a time slice. This can also be understood from the previous text.

From the perspective of the Round Robin scheduling method, each process receives a small unit of CPU time, known as time quantum, typically ranging from 10 to 100 ms. When this small time unit elapses, the current process is preempted and added to the end of a ready queue.

1.3.2 Concept: Task Priority

Here, we start with the priority terms ProcessPriorityClass and ThreadPriorityLevel in Windows. While researching this concept, I came across an article discussing how to retrieve thread information in PowerShell using the <span>Get</span><span>-</span><span>Process</span> command, which includes performance-related fields such as <span>TotalProcessorTime</span>, <span>ThreadState</span>, <span>WaitReason</span>, and a priority field <span>PriorityLevel</span>:

C:\> (Get-Process -Id 10080).Threads | Get-Member

TypeName: System.Diagnostics.ProcessThread

Name MemberType Definition

---- ---------- ----------

PriorityLevel Property System.Diagnostics.ThreadPriorityLevel PriorityLevel {get;set;}

PrivilegedProcessorTime Property timespan PrivilegedProcessorTime {get;}

ProcessorAffinity Property System.IntPtr ProcessorAffinity {set;}

ThreadState Property System.Diagnostics.ThreadState ThreadState {get;}

TotalProcessorTime Property timespan TotalProcessorTime {get;}

UserProcessorTime Property timespan UserProcessorTime {get;}

WaitReason Property System.Diagnostics.ThreadWaitReason WaitReason {get;}

At this point, I am curious about how Windows orders the <span>PriorityLevel</span>. Microsoft Docs mentions that <span>ThreadPriorityLevel</span> is an enumeration that indicates the priority of the specified thread. I found the documentation on Windows priority somewhat confusing, but I summarized three points:

-

Every thread has a base priority, which is determined by the thread’s priority value and its process’s priority class. The operating system sorts the basic priority levels of all executable threads to determine which thread gets the processor next.

-

When thread scheduling priority is higher, it indicates a higher priority. The values range from

<span>1</span><span>~</span><span>15</span>representing dynamic priority,<span>16</span><span>~</span><span>31</span>for real-time priority, while<span>0</span>indicates the zero-page thread priority, reserved for zeroing memory pages to prevent 0 page faults, which is lower than all other priorities. -

Each thread has a base priority and a current priority:

-

Base priority: inherited from the thread’s process. For example, kernel-mode driver code runs in the context of user threads; its base priority is that of the current user process. Another kernel-mode driver code running in a system worker thread has a base priority based on the service it provides.

-

Current priority: the current thread priority at a given time, which is dynamic.

The system continuously adjusts thread priorities to optimize throughput in completing tasks.

Figure: Basic Concept && Scheduling Criteria | Process and Thread Scheduling

Although Windows documentation does not clarify its scheduling policy, typical scheduling designs include the following concepts:

-

Maximizing CPU utilization during multiprogramming. Multiprogramming refers to storing several independent programs in memory that can run concurrently under the management of a controller, appearing to be in a state of beginning and ending simultaneously.

-

CPU – I/O Burst Cycle: The number of CPU execution cycles and I/O wait cycles involved in the process execution.

-

CPU burst distribution: The distribution of time spent executing tasks on the CPU.

Although the scheduling granularity is not specified as thread or process, scheduling granularity can also be at the thread level, and the operating system’s context switch occurs at the process level, as well as at the thread granularity.

Scheduling also has Scheduling Criteria, which typically include the following parameters:

-

CPU utilization: usually ranges from 0 to 100%, commonly between 40% to 90% in practice;

-

Throughput: the number of processes completed in a unit of time;

-

Turnaround time: the total time from process submission to completion, including waiting for data retrieval from memory, waiting in the ready queue, executing on the CPU, and performing I/O;

-

Waiting time: time spent waiting in the ready queue;

-

Response time: the time from the request submission to the first response.

Process (thread) scheduling algorithms are designed based on these parameters as optimization goals, such as minimizing response time/turnaround time and maximizing CPU utilization/throughput. For example, First-Come First-Served strategy serves requests in a FIFO manner, although simple, it does not allow for time slice sharing among multiple processes, which I believe is a typical non-preemptive strategy.

There is also the Shortest-Job First strategy, which considers waiting time. SJF is a special case of Priority Scheduling, which can be either Preemptive or Non-preemptive.

How can one determine if it is preemptive or non-preemptive? I mentioned earlier that non-preemptive scheduling allows a process to hold CPU resources until it terminates.

However, Priority Scheduling also has blocking and starvation issues. Understanding both:

-

Blocking: This refers to the queue of submitted process tasks, which can be blocked due to the current CPU being slow or overloaded, or I/O resources being occupied.

-

Starvation: This is a more severe form of blocking, where a later process with a higher priority has to wait for an earlier low-priority process to complete.

To solve the problem of low-priority processes waiting indefinitely, aging is implemented, where processes in the waiting state increase their priority over time, thus getting a chance to execute.

Returning to SJF and Priority Scheduling, Shortest-Job First, or Priority, considers the CPU burst time required by each process, adjusting the execution order of processes based on their required time in ascending order, thus minimizing average waiting time. SJF can be used for both Preemptive and Non-preemptive Scheduling.

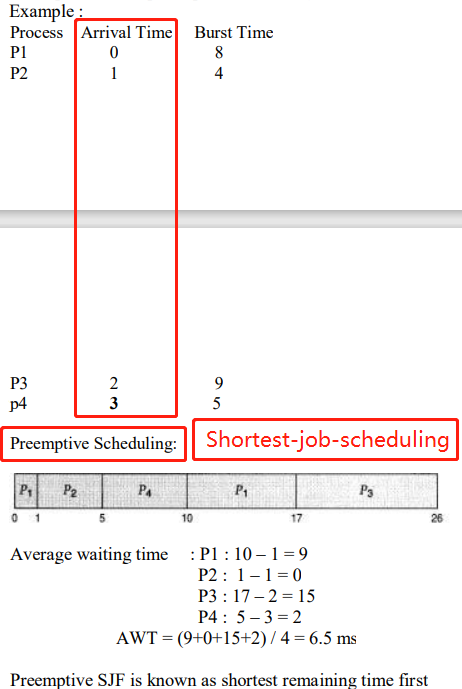

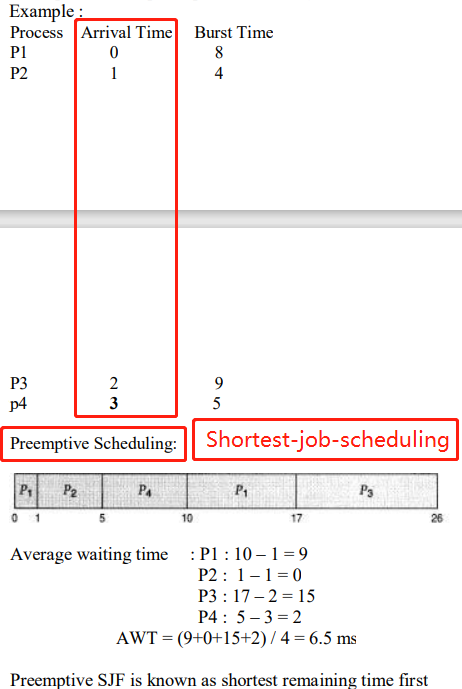

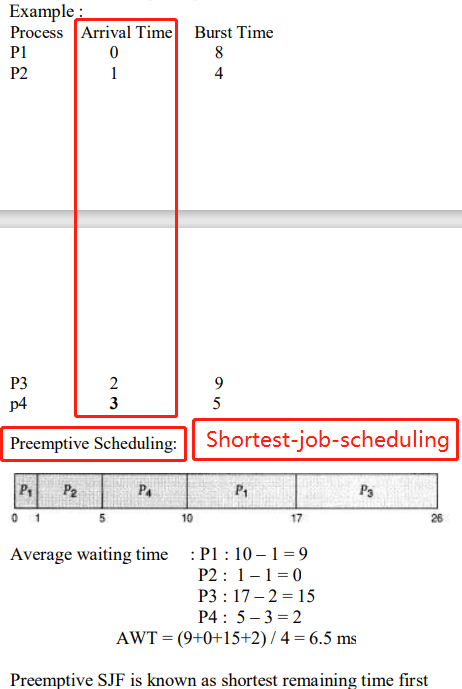

In the case of Preemptive Scheduling, it requires considering the arrival time of each process, as shown in the following example:

Figure: SJF Preemptive Scheduling

At this point, calculating the Average waiting time must consider the arrival time (which refers to the time a task arrives, unrelated to the scheduling algorithm). P1, although having a longer burst time of 8, arrives first, while P2/P3/P4 have not yet arrived:

-

P1 executes until P2 arrives, at which point P1 and P2 are compared; since P1 has executed for 1 time unit, it now has a burst time of 7, which is longer than P2’s, so P1 is paused and P2 executes.

-

When P3 arrives, P1/P2/P3’s burst times are updated, and since P2 is the shortest, it continues executing.

-

When P4 arrives, P3 is the shortest, so it executes next.

-

After P3 finishes, P4 executes, followed by P1.

Among these, two key points are:

-

How can one know the burst times before execution to sort? This is a drawback of SJF, as estimating burst times beforehand is difficult.

-

When switching from P1 to P2, what actions are required to design thread context switching, primarily reading and writing register values.

1.3.2.1 Estimating Next CPU Burst

The basic idea for this estimation is to use exponential averaging based on previously completed CPU burst lengths to predict the next CPU burst.

Figure: Determining Length of Next CPU Burst

Based on the previous page, we can see the actual CPU bursts at different times, and using exponential averaging, we can derive a formula for estimating the next CPU burst length.

However, I have two questions regarding this formula:

-

How to guess the initial burst time? This is a cold start problem; one solution is to use the median or mode of historical data, while another is to establish a relationship model based on static attributes of the task.

-

Does the prediction formula align with the actual CPU burst? I suspect there may be an error in the PPT.

References

-

Computer memory – Simple English Wikipedia, the free encyclopedia https://simple.wikipedia.org/wiki/Computer_memory

-

Instruction set – Simple English Wikipedia, the free encyclopedia https://simple.wikipedia.org/wiki/Instruction_set

-

Understanding Memory Layout. The memory refers to the computer… | by Shohei Yokoyama | Medium https://medium.com/@shoheiyokoyama/understanding-memory-layout-4ef452c2e709

-

Microsoft Word – Oberon07.Report.doc (ethz.ch) http://people.inf.ethz.ch/wirth/Oberon/Oberon07.Report.pdf

-

Linux内核 yield()|酷客网 https://www.coolcou.com/linux-kernel/linux-process-scheduling-kernel-api/the-linux-kernel-yield.html

-

多任务 – nianjun – 博客园 (cnblogs.com) https://www.cnblogs.com/sengnj/p/3559835.html

-

Microsoft PowerPoint – scheduling.ppt [Compatibility Mode] (ucdavis.edu) https://web.cs.ucdavis.edu/~pandey/Teaching/ECS150/Lects/05scheduling.pdf

-

Getting the CPU time and status of threads in a process with PowerShell – TheShellNut (mathieubuisson.github.io) https://mathieubuisson.github.io/cpu-time-threads-status-powershell/

-

Linux写时拷贝技术(copy-on-write) – as_ – 博客园 (cnblogs.com) https://www.cnblogs.com/biyeymyhjob/archive/2012/07/20/2601655.html

-

Virtual address spaces – Windows drivers | Microsoft Docs https://docs.microsoft.com/en-us/windows-hardware/drivers/gettingstarted/virtual-address-spaces

-

Memory Management : Paging. Paging is a method of writing and… | by Esmery Corniel | Medium https://medium.com/@esmerycornielle/memory-management-paging-43b85abe6d2f#:~:text=The physical part of the memory containing a,facilitates more efficient and faster use of storage.

-

CS 4410 Operating System, Memory: Paging | cornell.edu http://www.cs.cornell.edu/courses/cs4410/2016su/slides/lecture11.pdf

-

Introduction to the page file – Windows Client Management | Microsoft Docs https://docs.microsoft.com/en-us/windows/client-management/introduction-page-file

-

memory – Does Linux have a page file? – Stack Overflow https://stackoverflow.com/questions/35054783/does-linux-have-a-page-file

-

What Is the Windows Page File, and Should You Disable It? (howtogeek.com) https://www.howtogeek.com/126430/htg-explains-what-is-the-windows-page-file-and-should-you-disable-it/

-

Converting Virtual Addresses to Physical Addresses – Windows drivers | Microsoft Docs https://docs.microsoft.com/en-us/windows-hardware/drivers/debugger/converting-virtual-addresses-to-physical-addresses

-

Disable Windows Pagefile and Hibernation To Free Up Space – TechCult https://techcult.com/disable-windows-pagefile-and-hibernation-to-free-up-space/

-

How to disable and re-enable hibernation – Windows Client | Microsoft Docs https://docs.microsoft.com/en-us/troubleshoot/windows-client/deployment/disable-and-re-enable-hibernation

-

intro-to-memory-management (elinux.org) https://elinux.org/images/b/b0/IntroductiontoMemoryManagementin_Linux.pdf

-

[教學] 關機、待命、睡眠、休眠和交互式睡眠的分別 « FoolEgg.com https://www.foolegg.com/what-are-the-differences-between-shut-down-standby-sleep-hibernate-and-hybrid-sleep/

-

Understanding-linux-virtual-memory.pdf (cmu.edu) http://linuxclass.heinz.cmu.edu/doc/Open-Source-books/Understanding-linux-virtual-memory.pdf

-

What are types of kernel objects? (fyicenter.com) http://dev.fyicenter.com/Interview-Questions/Windows/Whataretypesofkernelobjects.html

-

A Process s Kernel Object Handle Table | Programming Applications for Microsoft Windows (Microsoft Programming Series) (flylib.com) https://flylib.com/books/en/4.419.1.29/1/

-

Memory Limits for Windows and Windows Server Releases – Win32 apps | Microsoft Docs https://docs.microsoft.com/en-us/windows/win32/memory/memory-limits-for-windows-releases#memory-and-address-space-limits

-

How PAE X86 Works: System Reliability | Microsoft Docs https://docs.microsoft.com/en-us/previous-versions/windows/it-pro/windows-server-2003/cc736309(v=ws.10)

-

High Non-Paged Pool Memory Usage (Leak) in Windows | Windows OS Hub (woshub.com) http://woshub.com/huge-memory-usage-non-paged-pool-windows

-

Memory Management — The Linux Kernel documentation (linux-kernel-labs.github.io) https://linux-kernel-labs.github.io/refs/heads/master/lectures/memory-management.html#physical-memory-management

-

What is a Hardware Abstraction Layer (HAL)? – Definition from Techopedia https://www.techopedia.com/definition/4288/hardware-abstraction-layer-hal

-

Unit OS4: Windows OS Thread Scheduling https://www.cs.sjtu.edu.cn/~kzhu/cs490/8/8_Scheduling.pdf

-

unit2 Process Scheduling and Synchronization.pdf http://www.notesengine.com/main/notes/eee/7sem/os/notes/unit2.pdf

-

Preemptive and Non-Preemptive Scheduling (tutorialspoint.com) https://www.tutorialspoint.com/preemptive-and-non-preemptive-scheduling

-

Chapter 5: CPU Scheduling | 2.01 (cmu.edu) https://www.andrew.cmu.edu/course/14-712-s20/applications/ln/14712-l6.pdf

-

Operating Systems: CPU Scheduling https://www.cs.uic.edu/~jbell/CourseNotes/OperatingSystems/6CPUScheduling.html

-

c++11 yield函数的使用 – 小怪兽&奥特曼 – 博客园 https://www.cnblogs.com/jinxiang1224/p/8468164.html

-

C++ yield()与sleepfor()weixin34029680的博客-CSDN博客 https://blog.csdn.net/weixin34029680/article/details/91871163

-

如何编写 C++ 20 协程(Coroutines)【图文】程序喵大人51CTO博客 https://blog.51cto.com/u_12444109/3031169

-

C/C++ Sleep()函数和wait()函数的区别苞米地里捉小鸡的博客-CSDN博客c++ wait https://blog.csdn.net/weixin_42709632/article/details/105175396