Linux | Red Hat Certified | IT Technology | Operations Engineer


Why Docker Needs Network Management

By default, a container's network is isolated from the host and from other containers, yet containers often need to communicate with both.

Demand for Communication Between Containers and External Communication

Communication Between Containers: In a Docker environment, containers are isolated from the host and other containers by default. However, in practical applications, multiple containers may need to communicate with each other to achieve data sharing, service calls, and other functions. This requires proper configuration and management of the container network to ensure they can communicate as expected.

Communication Between Containers and the Outside World: Some network applications, such as web applications and databases, may run inside containers and need to be reachable from outside. To achieve this, appropriate network settings, such as port mapping and IP address configuration, must be in place so that external users can reach the applications inside the containers.

Need for Network Isolation and Security

Network Isolation: Docker containers provide strong isolation: each container has its own filesystem, process space, and network space. Network isolation is an important part of this. It keeps each container's network stack independent, which reduces exposure to network attacks and interference from other containers.

Security: Proper network management makes it possible to apply access control, firewall rules, and other security measures to containers. For example, a container's access to external networks can be restricted to reduce the risk of malicious attacks and data leaks.

Need for Customized Networks

Special Network Requirements: In certain scenarios, containers may require more customized network configurations. For example, special cluster networks or local area networks between containers may need to be customized. These customization needs must be implemented through Docker’s network management features.

Introduction to Docker Network Architecture

Docker’s network architecture is a complex and powerful system based on the Container Network Model (CNM), Libnetwork, and a series of drivers.

CNM

CNM (Container Network Model) is a design specification that aims to standardize and simplify container network management.

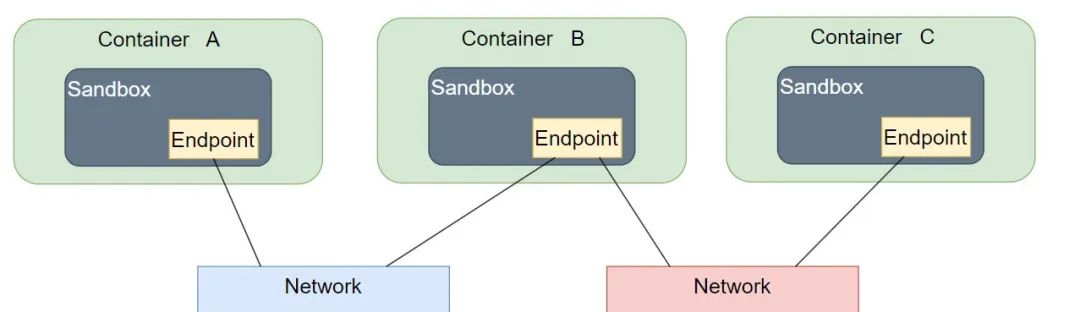

CNM mainly consists of the following three components:

Sandbox: The sandbox holds a container's network stack configuration, including its interfaces, ports, sockets, IP routing table, firewall rules, and DNS configuration. It isolates the container network from the host network, forming an independent network environment for the container. On Linux, a sandbox is typically implemented as a network namespace.

Endpoint: An endpoint is a virtual network interface, similar to a regular network interface or network adapter. Its job is to connect a sandbox to a network. An endpoint can belong to only one network, so a container that joins multiple networks needs one endpoint per network.

Network: The network is a virtual subnet within Docker that allows participants within the network to communicate. It can be a bridge network, overlay network, etc., with specific types implemented by drivers.

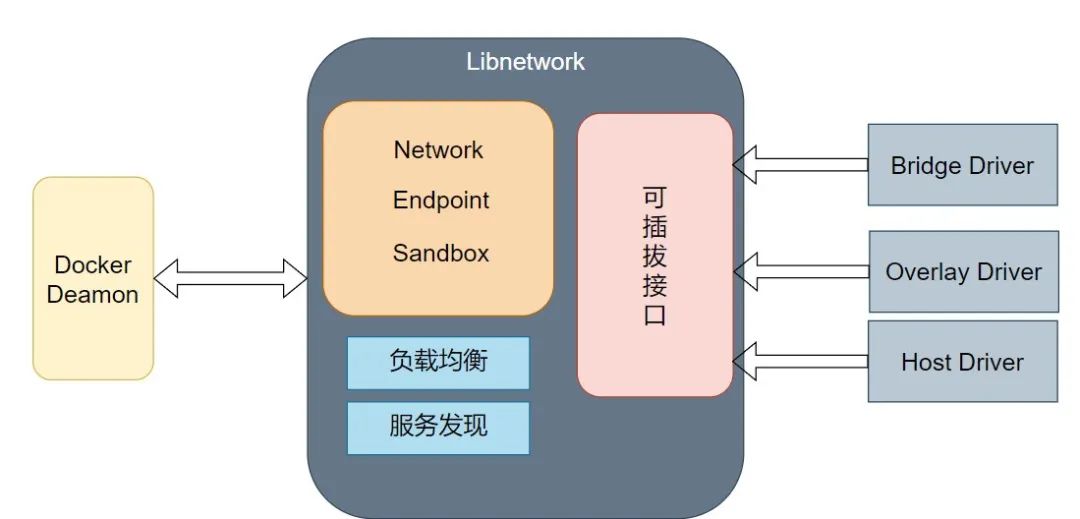
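To make the one-endpoint-per-network rule concrete, here is a toy Python model of the three CNM components. It is a teaching sketch under our own assumptions, not Libnetwork's API: the classes `Sandbox`, `Endpoint`, and `Network` and the helpers `join` and `can_talk` are hypothetical and only mirror the concepts described above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(eq=False)
class Network:
    """Toy CNM network: a group of endpoints that can reach each other directly."""
    name: str
    driver: str = "bridge"
    endpoints: List["Endpoint"] = field(default_factory=list, repr=False)


@dataclass(eq=False)
class Endpoint:
    """Toy CNM endpoint: links one sandbox to exactly one network."""
    sandbox: "Sandbox"
    network: Network


@dataclass(eq=False)
class Sandbox:
    """Toy CNM sandbox: one container's isolated network stack."""
    container: str
    endpoints: List[Endpoint] = field(default_factory=list, repr=False)

    def join(self, network: Network) -> Endpoint:
        # An endpoint belongs to a single network, so every network
        # the container joins gets a new endpoint of its own.
        if network in self.networks():
            raise ValueError(f"{self.container} is already on {network.name}")
        endpoint = Endpoint(self, network)
        self.endpoints.append(endpoint)
        network.endpoints.append(endpoint)
        return endpoint

    def networks(self) -> List[Network]:
        return [ep.network for ep in self.endpoints]


def can_talk(a: Sandbox, b: Sandbox) -> bool:
    """Two sandboxes can communicate directly only if they share a network."""
    return any(net in b.networks() for net in a.networks())


frontend, backend = Network("frontend"), Network("backend")
web, db = Sandbox("web"), Sandbox("db")
web.join(frontend)
web.join(backend)
db.join(backend)
print(len(web.endpoints), can_talk(web, db))  # 2 True
```

The container `web` sits on two networks and therefore owns two endpoints, while `db` reaches `web` only through the shared `backend` network.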

Libnetwork

Libnetwork is the reference implementation of CNM and the core component of Docker’s network architecture. It is an open-source library written in Go that implements all three CNM components and provides the following additional features:

Local Service Discovery: Allows containers to discover other services within the same network.

Ingress-Based Container Load Balancing: Implements load balancing between containers, improving application availability and performance.

Network Control Plane and Management Plane Features: Provides rich network configuration and management options, allowing users to customize network topology and policies.

Drivers

Drivers implement the data plane of Docker’s network architecture and handle core tasks such as network connectivity and isolation. Docker ships with several drivers to meet different network needs:

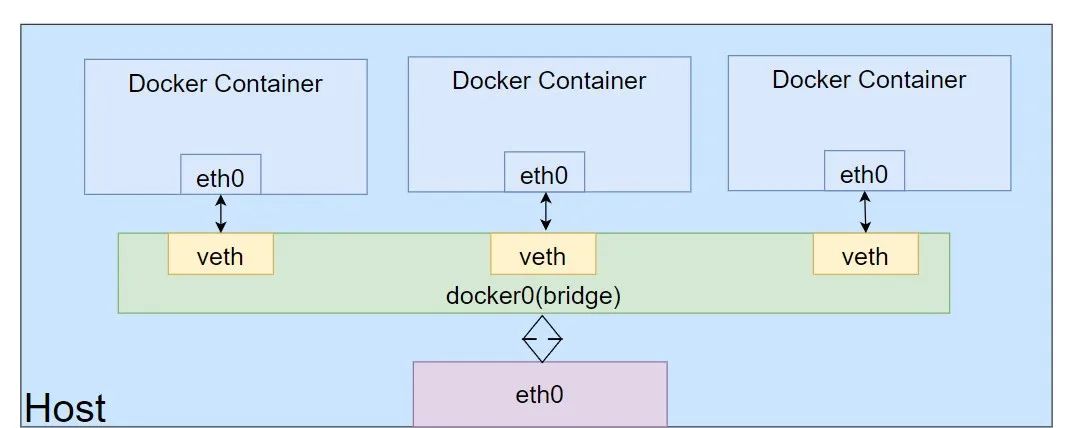

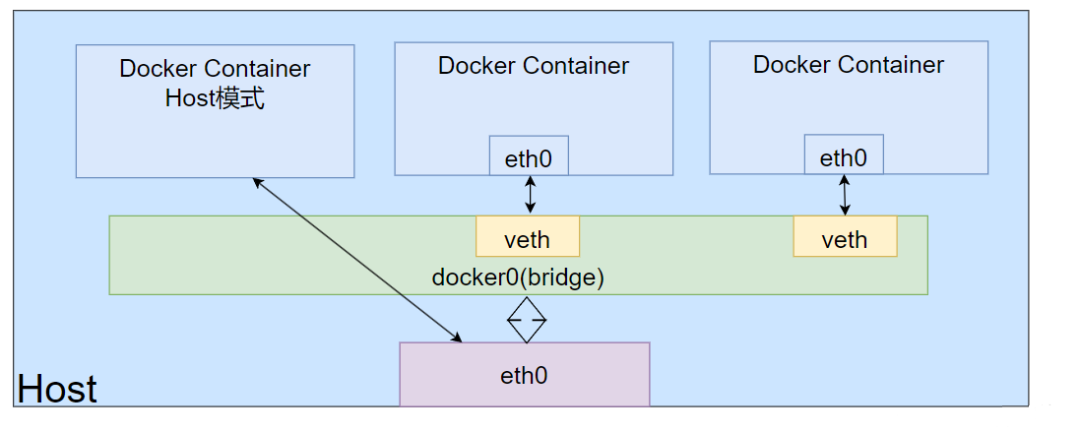

Bridge Driver: The bridge driver creates a Linux bridge on the host that Docker manages (docker0 for the default network). By default, containers on the same bridge can communicate with each other, and they can reach external networks through the host via NAT. Bridge networks are the usual choice when multiple containers on a single host need to communicate.

Host Driver: The host driver allows containers to share the same network namespace as the host. In this mode, containers will directly use the host’s network stack, routing tables, and iptables rules, achieving seamless integration with the host network.

Overlay Driver: The overlay driver builds networks that span multiple hosts. It is implemented mainly with Linux bridges and VXLAN tunnels. Older Docker releases synchronized state between hosts through external key-value stores such as etcd or Consul; current releases rely on Swarm mode's built-in distributed store instead. Overlay networks are the usual choice for cross-host container networking.

None Driver: The none driver completely isolates Docker containers, preventing access to external networks. In this mode, containers are not assigned IP addresses and cannot communicate with other containers or the host.

Docker Network Management Commands

docker network create

Create a Custom Network

Syntax:

docker network create [OPTIONS] NETWORK

Key Parameters:

-d, --driver: Network driver (defaults to bridge)

--gateway: Gateway address for the subnet

--subnet: Subnet in CIDR format

--ipv6: Enable IPv6
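The --subnet and --gateway values must agree with each other: the gateway has to fall inside the subnet. The Python sketch below checks that relationship with the standard `ipaddress` module before you run docker network create. The helper name `check_network_args` is hypothetical. Defaulting the gateway to the first usable address is an assumption that matches what Docker typically does (for example 172.17.0.1 on the default bridge). The Docker daemon still performs its own validation.

```python
import ipaddress
from typing import Optional


def check_network_args(subnet: str, gateway: Optional[str] = None) -> dict:
    """Sanity-check --subnet/--gateway values (hypothetical helper)."""
    # ip_network is strict by default: it rejects subnets with host bits set,
    # e.g. 192.168.1.0/16
    net = ipaddress.ip_network(subnet)
    if gateway is None:
        # Assumption: the gateway defaults to the first usable address of the subnet
        gw = next(net.hosts())
    else:
        gw = ipaddress.ip_address(gateway)
        if gw not in net:
            raise ValueError(f"gateway {gw} is not inside {net}")
    return {
        "subnet": str(net),
        "gateway": str(gw),
        # Network and broadcast addresses are excluded; the gateway uses one of the rest
        "usable_hosts": net.num_addresses - 2,
    }


print(check_network_args("192.168.0.0/16"))
# {'subnet': '192.168.0.0/16', 'gateway': '192.168.0.1', 'usable_hosts': 65534}
```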

Example:

docker network create --driver=bridge --subnet=192.168.0.0/16 br0

docker network inspect

View Network Details

Syntax:

docker network inspect [OPTIONS] NETWORK [NETWORK...]

Example:

docker network inspect br0

docker network connect

Connect Container to Network

Syntax:

docker network connect [OPTIONS] NETWORK CONTAINER

Example:

docker network connect br0 mynginx1

docker network disconnect

Disconnect a Container from a Network

Syntax:

docker network disconnect [OPTIONS] NETWORK CONTAINER

Example:

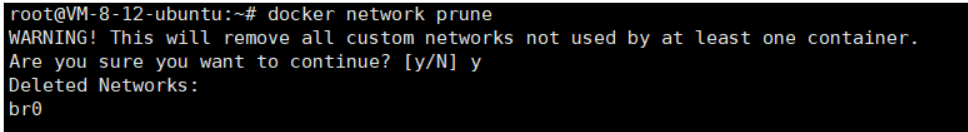

docker network disconnect br0 mynginx1

docker network prune

Remove All Unused Networks

Syntax:

docker network prune [OPTIONS]

Example:

docker network prune

docker network rm

Delete One or More Networks

Syntax:

docker network rm NETWORK [NETWORK...]

Example:

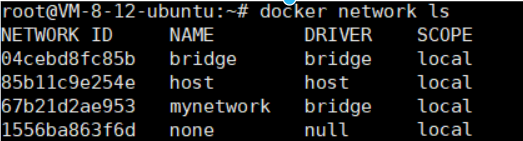

docker network ls

List Networks

Syntax:

docker network ls [OPTIONS]
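`docker network ls` also accepts a Go-template `--format` flag. With `{{json .}}` it prints one JSON object per network. The Python sketch below parses that output into a name-to-driver map. The helper names are hypothetical, and `list_networks` assumes the `docker` CLI is on PATH and can reach a running daemon. On a fresh installation you would typically see the three predefined networks: `bridge` (driver `bridge`), `host` (driver `host`), and `none` (driver `null`).

```python
import json
import subprocess


def parse_network_ls(output: str) -> dict:
    """Map network name -> driver from `docker network ls --format '{{json .}}'` output."""
    networks = {}
    for line in output.splitlines():
        line = line.strip()
        if line:  # one JSON object per line
            row = json.loads(line)
            networks[row["Name"]] = row["Driver"]
    return networks


def list_networks() -> dict:
    """Run the docker CLI (assumes a reachable Docker daemon)."""
    out = subprocess.run(
        ["docker", "network", "ls", "--format", "{{json .}}"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_network_ls(out)
```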

Basic Operations for Network Management

Create a Network and View It:

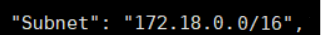

docker network create --subnet=172.18.0.0/16 mynetwork

Create a Container and Join the Network:

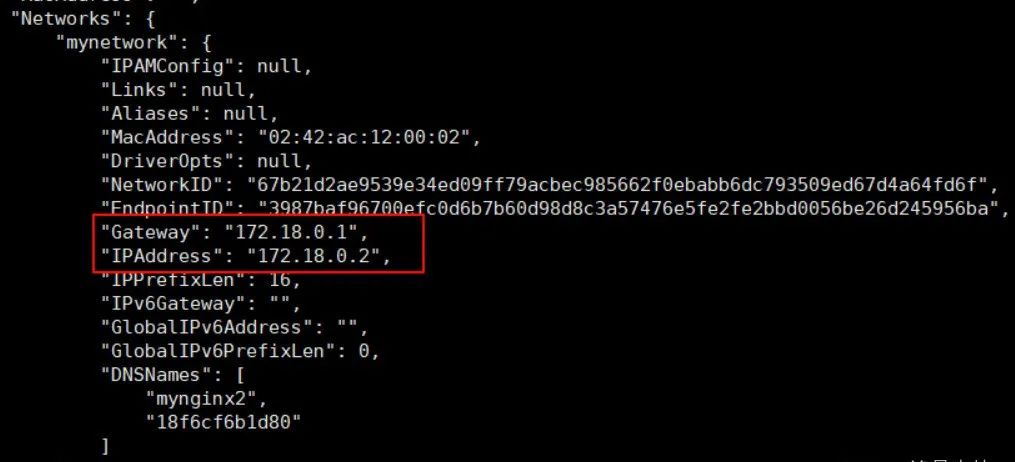

docker run -itd --name mynginx2 --network mynetwork nginx:1.23.4

View Container Details:

docker inspect mynginx2

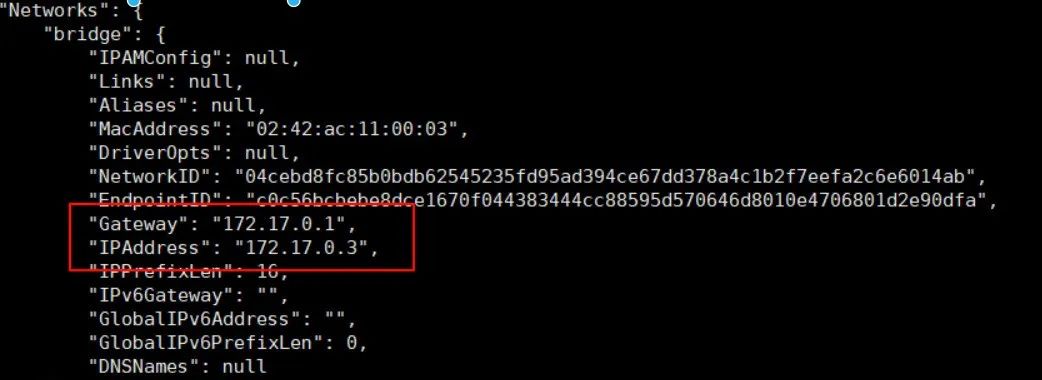

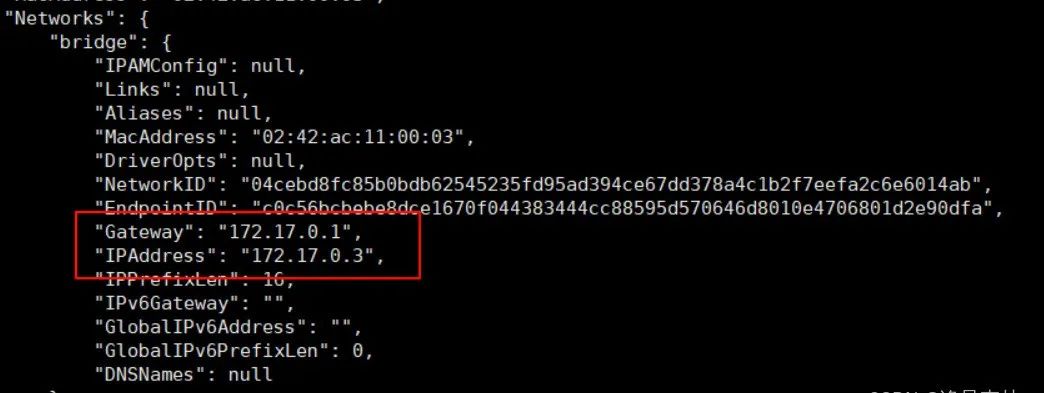
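Instead of reading the full `docker inspect` output, you can pull out only the networks a container is attached to. The Python sketch below assumes the JSON layout `docker inspect` prints for containers: an array whose entries contain `NetworkSettings.Networks`, keyed by network name. The helper name `container_networks` is hypothetical, and the sample values are illustrative rather than taken from the screenshots. Running it before and after `docker network connect` or `docker network disconnect` shows the extra network entry appearing and disappearing.

```python
import json


def container_networks(inspect_output: str) -> dict:
    """Map network name -> IPv4 address from `docker inspect CONTAINER` JSON."""
    container = json.loads(inspect_output)[0]  # docker inspect prints a JSON array
    networks = container["NetworkSettings"]["Networks"]
    return {name: cfg.get("IPAddress", "") for name, cfg in networks.items()}


# Trimmed, illustrative sample; real output has many more fields.
sample = """[{"Name": "/mynginx2",
  "NetworkSettings": {"Networks": {
    "mynetwork": {"IPAddress": "172.18.0.2", "Gateway": "172.18.0.1"}}}}]"""
print(container_networks(sample))  # {'mynetwork': '172.18.0.2'}
```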

Create a Container Without Specifying a Network (it joins the default bridge network):

docker run -itd --name mynginx3 nginx:1.23.4

docker inspect mynginx3

Connect mynginx3 to mynetwork; its details now list a second network:

docker network connect mynetwork mynginx3

Disconnect it again; checking its details shows that mynetwork is gone:

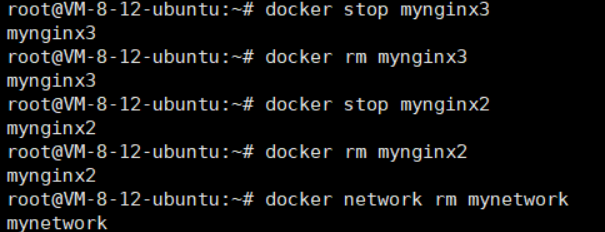

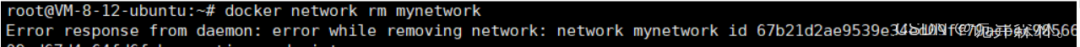

docker network disconnect mynetwork mynginx3

At this point the network still cannot be deleted, because mynginx2 is still connected to it. Disconnect or remove the attached container first, then delete the network:

docker network rm mynetwork
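The rule illustrated here, that a network with attached containers cannot be removed, also governs `docker network prune`. Prune deletes only networks that no container is using, and it never removes the predefined `bridge`, `host`, and `none` networks. The Python sketch below approximates that decision from the array that `docker network inspect $(docker network ls -q)` prints. This is a simplification: it relies on the `Containers` field, which lists only containers currently attached, and the daemon remains the authority on what can actually be removed. The helper names and sample are hypothetical.

```python
import json

PREDEFINED = {"bridge", "host", "none"}  # never removed by prune


def removable(network: dict) -> bool:
    """Approximation: nothing attached and not a predefined network."""
    return network["Name"] not in PREDEFINED and not network.get("Containers")


def prune_candidates(inspect_output: str) -> list:
    """Names of networks that `docker network prune` would likely remove."""
    return [n["Name"] for n in json.loads(inspect_output) if removable(n)]


# Illustrative sample mirroring this walkthrough: mynginx2 is still on mynetwork.
sample = """[
  {"Name": "bridge", "Containers": {}},
  {"Name": "br0", "Containers": {}},
  {"Name": "mynetwork", "Containers": {"9c2e": {"Name": "mynginx2"}}}
]"""
print(prune_candidates(sample))  # ['br0']
```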

Docker Bridge

The Docker Bridge network uses the built-in bridge driver, which is based on the Linux bridge technology in the Linux kernel.

Below are a few operation examples:

Network Communication Between Containers

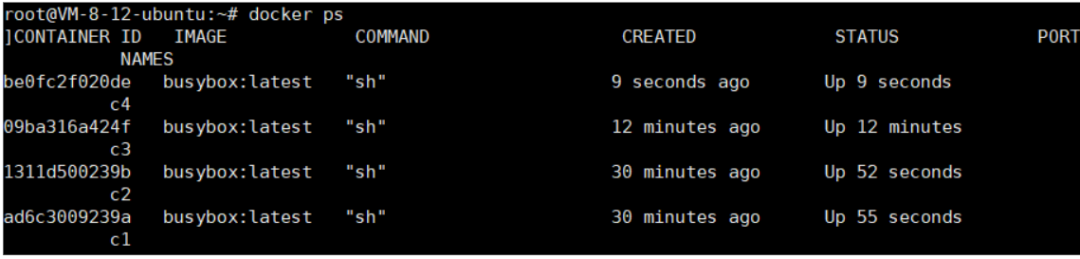

Use busybox to create two containers and keep them running in the background

docker run -itd --name c1 busybox:latest

docker run -itd --name c2 busybox:latest

Check the IP addresses of the two containers:

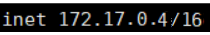

docker exec -it c1 ip a

docker exec -it c2 ip a

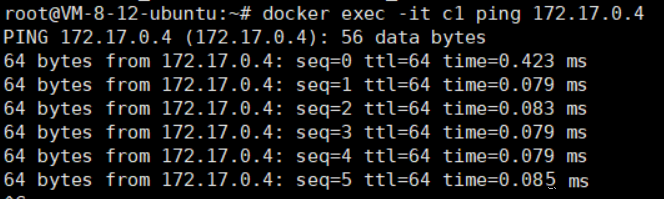

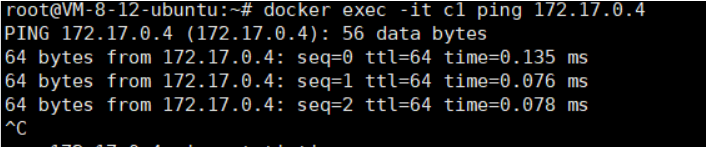
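c1 can reach c2 directly because both addresses fall in the default bridge subnet (172.17.0.0/16). The Python sketch below extracts IPv4 addresses from `ip a` output and checks whether two addresses share a subnet, using only the standard library. The function names are hypothetical. The sample output is illustrative: it is modeled on the iproute2-style format that busybox prints, not copied from the screenshots.

```python
import ipaddress


def ipv4_by_interface(ip_a_output: str) -> dict:
    """Map interface label -> 'address/prefix' from `ip a` output."""
    result = {}
    for line in ip_a_output.splitlines():
        parts = line.split()
        # Lines such as "inet 172.17.0.3/16 brd ... scope global eth0":
        # the address is the second token, the interface label the last one.
        if parts and parts[0] == "inet":
            result[parts[-1]] = parts[1]
    return result


def same_subnet(a: str, b: str) -> bool:
    """True if two 'address/prefix' strings belong to the same network."""
    return ipaddress.ip_interface(a).network == ipaddress.ip_interface(b).network


c1_output = """1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
    inet 127.0.0.1/8 scope host lo
2: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0"""

c1_eth0 = ipv4_by_interface(c1_output)["eth0"]
print(c1_eth0, same_subnet(c1_eth0, "172.17.0.4/16"))  # 172.17.0.3/16 True
```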

Test whether c1 can reach c2 (172.17.0.4 is c2's address in this example):

docker exec -it c1 ping 172.17.0.4

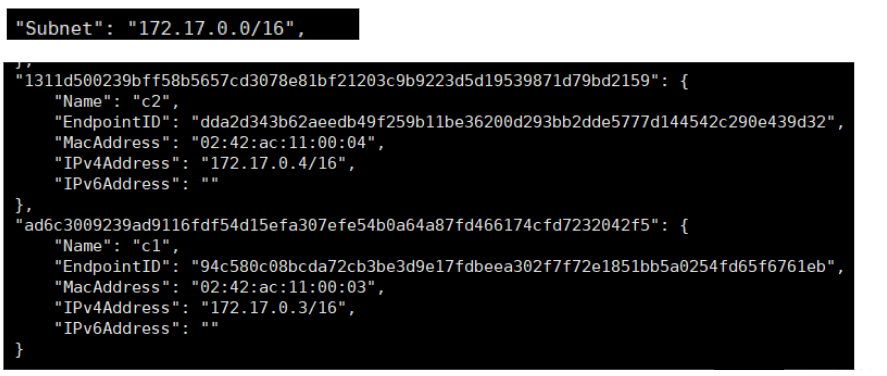

View bridge network details:

docker network inspect bridge

The output shows that both c1 and c2 are attached to this network.

After the c1 container is stopped, it no longer appears among the containers attached to the bridge:
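The JSON that `docker network inspect` prints can also be summarized programmatically, which is handy for checking which containers are attached before and after a step such as stopping c1. The Python sketch below assumes the shape of that output: a JSON array whose entries carry `Name`, `Driver`, `IPAM.Config`, and a `Containers` map keyed by container ID. The helper name and sample values are hypothetical. `Containers` lists only containers whose endpoints are currently attached, which is why a stopped container drops out of it.

```python
import json


def summarize_network(inspect_output: str) -> dict:
    """Summarize `docker network inspect NETWORK` JSON (hypothetical helper)."""
    net = json.loads(inspect_output)[0]  # inspect prints a JSON array
    ipam_configs = (net.get("IPAM") or {}).get("Config") or []
    return {
        "name": net["Name"],
        "driver": net["Driver"],
        "subnets": [cfg.get("Subnet") for cfg in ipam_configs],
        # Containers is keyed by container ID; re-key it by container name
        "containers": {
            c["Name"]: c["IPv4Address"]
            for c in (net.get("Containers") or {}).values()
        },
    }


sample = """[{"Name": "bridge", "Driver": "bridge",
  "IPAM": {"Driver": "default", "Config": [{"Subnet": "172.17.0.0/16", "Gateway": "172.17.0.1"}]},
  "Containers": {
    "a1b2": {"Name": "c1", "IPv4Address": "172.17.0.3/16"},
    "c3d4": {"Name": "c2", "IPv4Address": "172.17.0.4/16"}}}]"""
print(summarize_network(sample)["containers"])
# {'c1': '172.17.0.3/16', 'c2': '172.17.0.4/16'}
```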

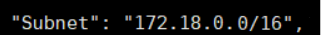

docker stop c1

Create a Custom Bridge

Use the create command to create a new bridge:

docker network create -d bridge new-bridge

View the details of the new network:

docker network inspect new-bridge
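When `docker network create` is run without `--subnet`, as here, Docker picks a free subnet from its default address pools. That is most likely why new-bridge received 172.18.0.0/16 in this walkthrough: 172.17.0.0/16 belongs to the default bridge, and mynetwork's 172.18.0.0/16 had been freed when that network was deleted. The Python sketch below models this choice. The pool list (172.17.0.0/16 through 172.31.0.0/16, then /20 blocks carved from 192.168.0.0/16) is our assumption about Docker's default local pools. The real allocator also considers host routes and can be reconfigured with the daemon's default-address-pools setting.

```python
import ipaddress
from typing import Iterable, Iterator


def default_pool() -> Iterator[ipaddress.IPv4Network]:
    """Assumed default local pools: 172.17-31.0.0/16, then 192.168.0.0/16 split into /20s."""
    for second_octet in range(17, 32):
        yield ipaddress.ip_network(f"172.{second_octet}.0.0/16")
    yield from ipaddress.ip_network("192.168.0.0/16").subnets(new_prefix=20)


def next_free_subnet(in_use: Iterable[str]) -> str:
    """Return the first pool subnet that overlaps none of the subnets in use."""
    used = [ipaddress.ip_network(s) for s in in_use]
    for candidate in default_pool():
        if not any(candidate.overlaps(u) for u in used):
            return str(candidate)
    raise RuntimeError("default address pools exhausted")


# Default bridge on 172.17.0.0/16, br0 on 192.168.0.0/16 (from the earlier example)
print(next_free_subnet(["172.17.0.0/16", "192.168.0.0/16"]))  # 172.18.0.0/16
```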

When running a new container, specify a network:

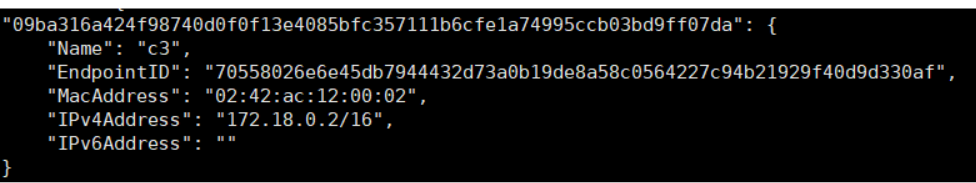

docker run -itd --name c3 --network new-bridge busybox:latest

docker inspect c3 | grep "Networks" -A 17

View Network Details

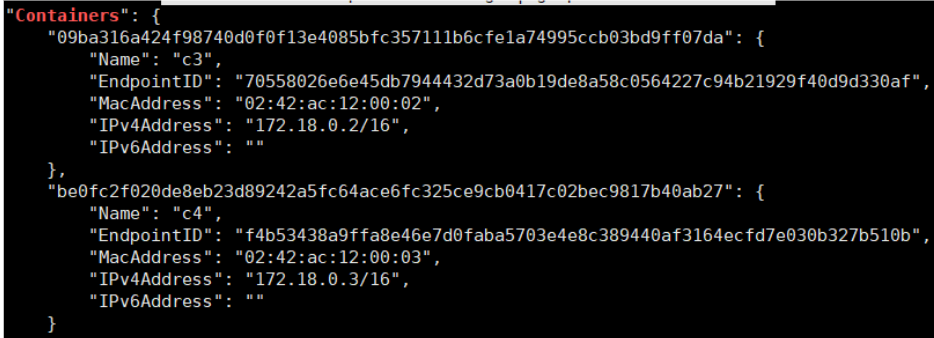

docker network inspect new-bridge

DNS Resolution

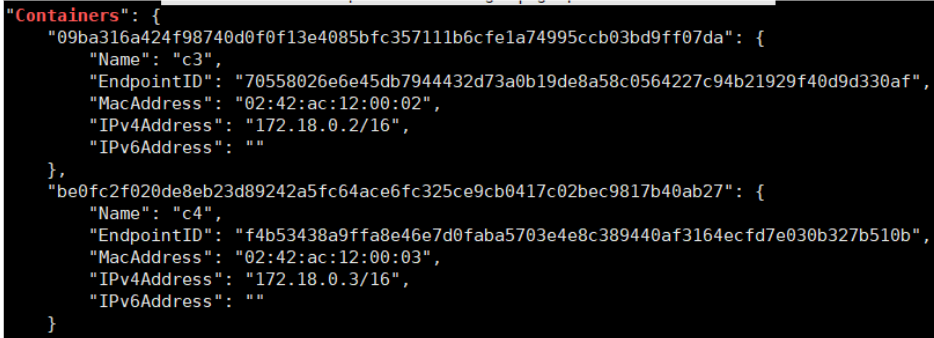

Keep c1 and c2 on the default bridge, and attach c3 and c4 to new-bridge (c3 is already attached, so only c4 needs to be created):

docker run -itd --name c4 --network new-bridge busybox:latest

docker network inspect bridge | grep "Containers" -A 20

docker network inspect new-bridge | grep "Containers" -A 20

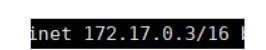

Check the IPs of c1 and c2:

docker exec -it c1 ip a

docker exec -it c2 ip a

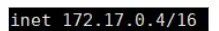

Check whether they can communicate, first by IP address and then by container name:

docker exec -it c1 ping 172.17.0.4

docker container exec -it c1 ping c2

Pinging c2 by container name from c1 fails because the name cannot be resolved, confirming that the default bridge network does not provide DNS resolution of container names.

Verify whether c3 and c4 can resolve each other by name:

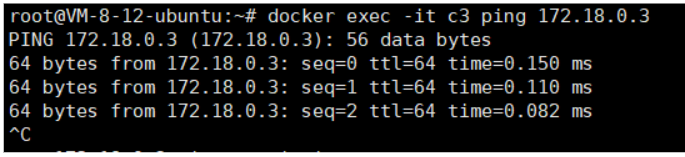

docker exec -it c3 ping 172.18.0.3

docker container exec -it c3 ping c4

Pinging c4 by container name from c3 succeeds, confirming that a custom (user-defined) bridge network provides DNS resolution of container names.
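The difference comes from Docker's embedded DNS server. On user-defined networks, Docker points the container's /etc/resolv.conf at 127.0.0.11, an embedded resolver that answers for container names. On the default bridge, the container normally gets resolver settings derived from the host's configuration instead. You can compare `docker exec c1 cat /etc/resolv.conf` with `docker exec c3 cat /etc/resolv.conf` by eye, or run captured copies through the Python sketch below. The helper names and the sample file contents are hypothetical.

```python
EMBEDDED_DNS = "127.0.0.11"  # Docker's embedded DNS address on user-defined networks


def nameservers(resolv_conf: str) -> list:
    """Return the nameserver addresses listed in resolv.conf text."""
    servers = []
    for line in resolv_conf.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0] == "nameserver":
            servers.append(parts[1])
    return servers


def uses_embedded_dns(resolv_conf: str) -> bool:
    return EMBEDDED_DNS in nameservers(resolv_conf)


# Illustrative contents only
c3_resolv = "nameserver 127.0.0.11\noptions ndots:0\n"
c1_resolv = "nameserver 192.168.1.1\n"
print(uses_embedded_dns(c3_resolv), uses_embedded_dns(c1_resolv))  # True False
```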

Docker Host

By default, each Docker container runs in its own network namespace, isolated from the host.

In host network mode, the container does not get its own network namespace; it shares the host's network namespace instead.

We can verify:

Run container c1 in bridge mode and container c2 in host mode:

docker run --name c1 -itd busybox:latest

docker run --name c2 -itd --network=host busybox:latest

Check the IPs of c1 and c2:

docker exec c1 ip a

docker exec c2 ip a

It can be seen that the c1 container has an independent network configuration, while the c2 container shares its network configuration with the host.

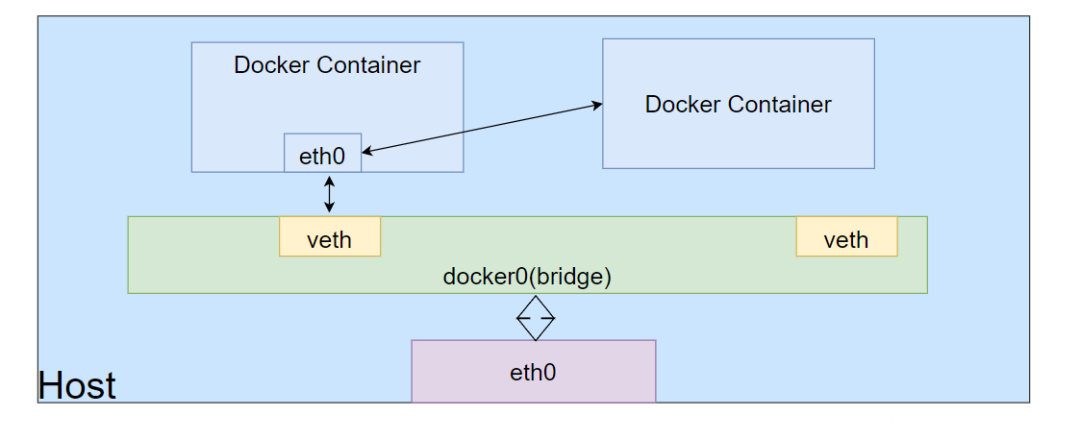
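Another way to confirm that c2 shares the host's network namespace is to compare namespace identifiers. On Linux, /proc/PID/ns/net is a symbolic link such as net:[4026531992], and two processes share a network namespace when these links match. The Python sketch below compares a container's main process (its PID is available from `docker inspect -f '{{.State.Pid}}' c2`) with the host's PID 1. It assumes it runs directly on the Docker host, usually as root, since reading another process's namespace link needs privileges. The function names are hypothetical.

```python
import os
import re


def parse_ns_link(link: str) -> int:
    """Extract the inode number from a link target such as 'net:[4026531992]'."""
    match = re.fullmatch(r"net:\[(\d+)\]", link)
    if match is None:
        raise ValueError(f"unexpected namespace link: {link!r}")
    return int(match.group(1))


def netns_inode(pid="self") -> int:
    """Network-namespace inode of a process (Linux only)."""
    return parse_ns_link(os.readlink(f"/proc/{pid}/ns/net"))


def shares_host_netns(container_pid: int) -> bool:
    """Compare a container process's namespace with that of the host's PID 1."""
    return netns_inode(container_pid) == netns_inode(1)


# Usage on the Docker host (as root), with PIDs taken from docker inspect:
#   shares_host_netns(<PID of c2>)  expected True  (host mode)
#   shares_host_netns(<PID of c1>)  expected False (bridge mode)
```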

Docker Container

Container mode is a relatively special network mode in Docker.

In this mode, the container's level of network isolation sits between bridge mode and host mode: it is isolated from the host but not from one chosen container.

A container in this mode shares the network namespace of another specified container, so there is no network isolation between the two: they share the same interfaces, IP address, and port space.

Below we will verify:

Create a busybox container

docker run -itd --name netcontainer1 busybox:latest

Create another container that uses netcontainer1’s network:

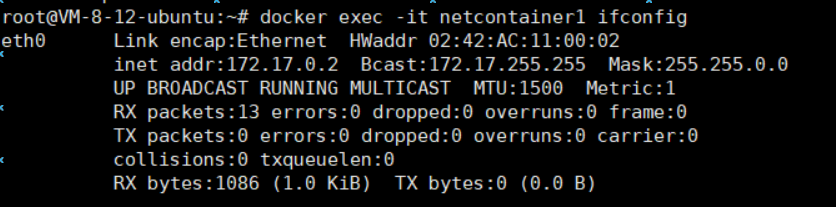

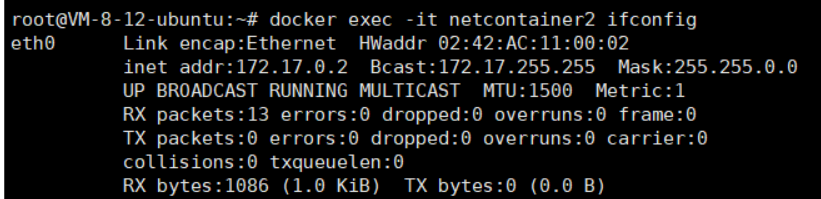

docker run -itd --name netcontainer2 --network container:netcontainer1 busybox

Check the network configurations of netcontainer1 and netcontainer2:

docker exec -it netcontainer1 ifconfig

docker exec -it netcontainer2 ifconfig

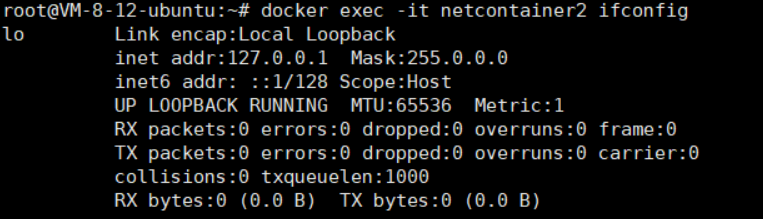
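Because netcontainer2 joins netcontainer1's network namespace, the two `ifconfig` listings should show the same interfaces with the same IPv4 and MAC addresses. The Python sketch below parses busybox-style `ifconfig` output and compares two listings. The function names and the sample output are hypothetical and illustrative. Identical results are consistent with a shared namespace, although comparing namespace identifiers is the more direct check.

```python
def parse_ifconfig(output: str) -> dict:
    """Map interface -> {'ipv4': ..., 'mac': ...} from busybox-style ifconfig output."""
    interfaces, current = {}, None
    for line in output.splitlines():
        if line and not line[0].isspace():
            # Header line, e.g. "eth0      Link encap:Ethernet  HWaddr 02:42:AC:11:00:05"
            tokens = line.split()
            current = tokens[0]
            mac = tokens[tokens.index("HWaddr") + 1] if "HWaddr" in tokens else None
            interfaces[current] = {"ipv4": None, "mac": mac}
        elif current is not None and "inet addr:" in line:
            # Detail line, e.g. "inet addr:172.17.0.5  Bcast:172.17.255.255  Mask:255.255.0.0"
            interfaces[current]["ipv4"] = line.split("inet addr:")[1].split()[0]
    return interfaces


def same_network_view(output_a: str, output_b: str) -> bool:
    """True if both containers report identical interfaces, addresses, and MACs."""
    return parse_ifconfig(output_a) == parse_ifconfig(output_b)


sample = """eth0      Link encap:Ethernet  HWaddr 02:42:AC:11:00:05
          inet addr:172.17.0.5  Bcast:172.17.255.255  Mask:255.255.0.0

lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
"""
print(parse_ifconfig(sample)["eth0"])  # {'ipv4': '172.17.0.5', 'mac': '02:42:AC:11:00:05'}
```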

If we stop the netcontainer1 container and check the network configuration of netcontainer2 again:

netcontainer2's eth0 interface is gone, leaving only the loopback interface (lo).

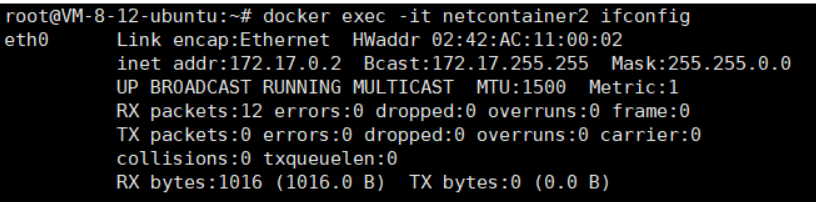

Restart both containers, starting netcontainer1 first:

docker restart netcontainer1

docker restart netcontainer2

The network configuration is restored:

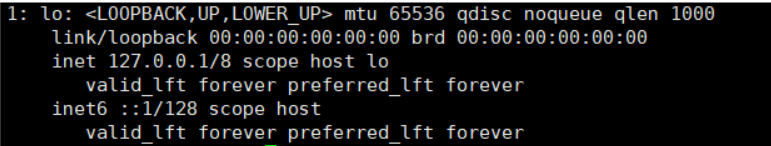

None Network

The none network provides no networking at all.

A container attached to it has no network interfaces other than the loopback interface lo.

Below we will verify:

docker run -itd --name c1 --network none busybox:latest

docker exec -it c1 ip a
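A quick way to confirm that a container is on the none network is to check that `ip a` lists only the loopback interface. The Python sketch below reads the interface names from the numbered header lines of `ip a` output. The function names and the sample output are hypothetical.

```python
import re

# Header lines look like "1: lo: <LOOPBACK,UP,LOWER_UP> ..." or "2: eth0@if7: <...>"
HEADER = re.compile(r"^\d+:\s+([^:@\s]+)")


def interface_names(ip_a_output: str) -> list:
    """Interface names from `ip a` output, in the order listed."""
    names = []
    for line in ip_a_output.splitlines():
        match = HEADER.match(line)
        if match:
            names.append(match.group(1))
    return names


def only_loopback(ip_a_output: str) -> bool:
    """True if the only interface present is lo, as expected with --network none."""
    return interface_names(ip_a_output) == ["lo"]


none_output = """1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo"""
print(only_loopback(none_output))  # True
```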
