Author: Peng Zhao (Founder of Zhici Fang and Co-founder of Yunhe Capital). IoT Think Tank Original

This is my 376th column article.

We seem to be on the verge of scenes like this: one night, a drone flies low, its camera locked precisely onto the mechanical vibrations of the main control pump room. Meanwhile, a quadruped robot on the ground receives an anomaly code and swiftly avoids obstacles to reach the site. The two are not coordinated through cloud scheduling; they self-organize locally through an “Edge Agent Operating System” to collaborate on the task: the drone handles visual recognition and path analysis, while the ground robot executes and provides feedback. The entire process requires no human intervention and no connection to a remote cloud platform.

This is not science fiction, but a real evolution of edge AI from inference engines to collaborative agents.

Over the past few years, the evolution path of edge AI has been clear: from the initial exploration of low-power AI inference with TinyML, to the practical application of edge inference frameworks, to the rise of platform-level AI deployment tools, and most recently to the popularity of vertical models. Together, these have accomplished the task of “getting models to run.”

However, the next step for edge AI is not to stack more models and parameters, but to answer a more fundamental question: Once AI models are running, can they collaborate?

Today, most edge models still run in isolation. This inability to collaborate is the “invisible ceiling” that edge AI faces in moving towards a higher form of intelligence.

True edge intelligence goes beyond making judgments; it involves making decisions, forming systems, and executing tasks. This is the starting point for the evolution of edge AI from static inference to dynamic agents.

What we need is not a larger model, but a group of collaborative models. Models allow devices to see the world, while agents enable devices to participate in it.

In this article, drawing on the latest market data, technological advancements, and platform trends, we will explore how edge AI evolves from model deployment to an agent operating system, and how this trend will reshape the interaction methods, system architecture, and commercial value of smart terminals.

From Model Deployment to System Autonomy, AI Agents are Landing on the Edge

In the past, the mainstream way for enterprises to deploy edge AI was a combined paradigm of “model-driven + platform scheduling”: develop a model, deploy it to a terminal, and complete resource allocation and state visualization through an edge platform.

This method did solve the early-stage problem of whether models could run, and it brought a large number of AI capabilities to the edge. But it also exposed an increasingly prominent structural bottleneck: as deployment scales expand and scene complexity increases, this approach fails to answer a more essential question: Can models collaborate? Does the system have autonomous capabilities?

This shift in focus has already become clear at the enterprise decision-making level.

According to a global CIO survey report released by ZEDEDA at the beginning of 2025, 97% of the CIOs surveyed indicated that their enterprises have deployed or plan to deploy edge AI internally in the next two years; 54% of enterprises explicitly hope that edge AI will become part of system-level capabilities rather than isolated single-point functions; more notably, 48% of enterprises listed “reducing dependence on the cloud and enhancing local autonomous response capabilities” as a key goal for the next stage.

This set of data reflects that an industry-wide consensus is forming: The future of edge AI is no longer just about whether models can run, but about whether systems can achieve self-organization, self-perception, and self-response capabilities.

The core carrier of this capability leap is “edge AI agents.”

Compared to traditional model deployment paradigms, edge agents are no longer passive inference engines but the smallest intelligent units with perception, decision-making, action, and collaboration capabilities. They can not only run models but also initiate actions, negotiate roles, and allocate resources locally based on environmental states, system rules, and task objectives, becoming proactive intelligent nodes in edge systems.

For example, in a smart manufacturing scenario, the value chain of edge agents can be intuitively understood: when a camera on a conveyor belt identifies a defect in materials, the visual inspection agent immediately generates an event signal; this signal triggers the material handling agent to automatically schedule mobile robots for the transfer of problematic materials; subsequently, the quality inspection agent conducts a secondary review upon receiving the signal; finally, the MES system agent synchronously updates the production schedule and the next process plan.

The entire process from anomaly identification to task execution no longer relies on a centralized scheduling system but is completed through multiple edge agents collaborating autonomously on-site. This “perception—decision—collaboration—feedback” closed loop not only enhances response efficiency but also gives the system a high degree of resilience and adaptability.
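As a rough illustration of this closed loop, the sketch below chains the four agents through a local, in-process publish/subscribe bus with no central scheduler. Everything in it is hypothetical: the `LocalEventBus` class, the topic names, and the `amr-1` robot identifier are assumptions made for this example, not part of any real edge platform.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class LocalEventBus:
    """In-process publish/subscribe bus standing in for on-site agent messaging."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)
        self.log: List[str] = []  # topics in the order they were published

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        self.log.append(topic)
        for handler in list(self._handlers[topic]):
            handler(event)


def build_line(bus: LocalEventBus) -> None:
    """Wire up the agents; each one reacts to an event and emits the next."""

    def material_handling_agent(event: dict) -> None:
        # Schedule a (hypothetical) mobile robot to move the defective item.
        bus.publish("material.transferred", {**event, "robot": "amr-1"})

    def quality_inspection_agent(event: dict) -> None:
        # Secondary review; here it simply confirms the defect.
        bus.publish("quality.reviewed", {**event, "confirmed": True})

    def mes_agent(event: dict) -> None:
        # Update the production schedule only if the defect was confirmed.
        bus.publish("schedule.updated", {**event, "rescheduled": event["confirmed"]})

    bus.subscribe("defect.detected", material_handling_agent)
    bus.subscribe("material.transferred", quality_inspection_agent)
    bus.subscribe("quality.reviewed", mes_agent)


bus = LocalEventBus()
build_line(bus)
# The visual inspection agent raises the initial event signal.
bus.publish("defect.detected", {"item_id": "part-42"})
print(bus.log)
```

No component in this sketch knows the whole workflow; each agent only knows which event it reacts to and which event it emits, which is the property the paragraph above attributes to on-site collaboration.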

If model deployment solves the question of “whether devices have the ability to think,” then agent deployment further answers the proposition of “whether devices have the ability to participate.” To truly achieve this participatory capability, edge agents must possess a complete capability system.

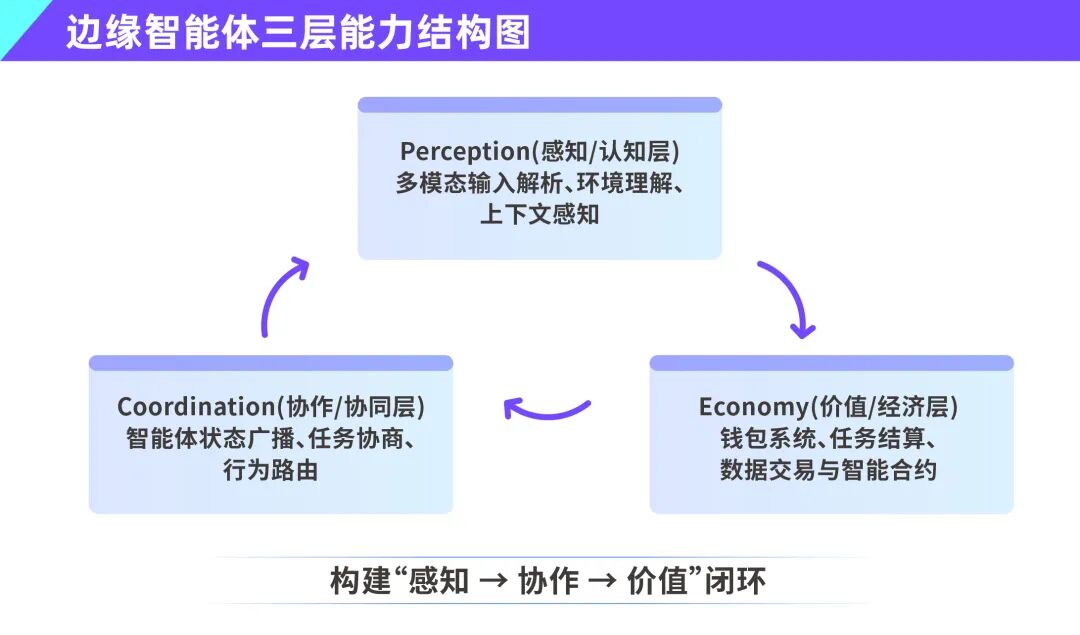

We can summarize it as the PCE model: a three-layer capability stack of Perception, Coordination, and Economy.

First is the perception layer.

Agents must be able to understand their environment, read and interpret data from multimodal sensors such as images, sounds, temperature, humidity, and vibrations, and make task judgments based on contextual information. ZEDEDA’s research shows that over 60% of enterprises have deployed multimodal AI models in edge devices, providing a rich foundation for environmental perception for agents.

Second is the coordination layer.

An agent cannot complete all tasks alone; a true intelligent system relies on efficient collaboration among multiple agents. This collaboration is not merely data exchange but an intelligent agent network based on state sharing, role negotiation, and task division. Coordination capability upgrades edge systems from device interconnection to intelligent mutual assistance.
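To make state sharing, role negotiation, and task division a little more concrete, here is a minimal sketch using the drone and quadruped robot from the opening scene. The `AgentState` fields, the capability names, and the greedy "most battery wins" rule are all assumptions chosen for illustration; a real coordination layer would likely use a richer negotiation protocol.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional


@dataclass
class AgentState:
    """State each agent shares with its peers (fields are illustrative)."""
    name: str
    capabilities: FrozenSet[str]
    battery: float  # 0.0 (empty) to 1.0 (full)
    busy: bool = False


def negotiate_roles(tasks: Dict[str, str],
                    agents: List[AgentState]) -> Dict[str, Optional[str]]:
    """Give each task to the free, capable agent with the most battery.

    `tasks` maps a task name to the single capability it requires.
    A task with no eligible agent is mapped to None.
    """
    assignment: Dict[str, Optional[str]] = {}
    for task, required in tasks.items():
        candidates = [a for a in agents
                      if required in a.capabilities and not a.busy]
        if not candidates:
            assignment[task] = None
            continue
        winner = max(candidates, key=lambda a: a.battery)
        winner.busy = True  # one task per agent in this simple sketch
        assignment[task] = winner.name
    return assignment


agents = [
    AgentState("drone", frozenset({"vision", "flight"}), battery=0.6),
    AgentState("quadruped", frozenset({"ground_mobility", "manipulation"}), battery=0.8),
]
tasks = {"inspect_pump_room": "vision", "reach_site": "ground_mobility"}
roles = negotiate_roles(tasks, agents)
print(roles)
```

The point of the sketch is only that the division of labor falls out of shared state rather than a fixed, pre-configured mapping: change an agent's battery level or capabilities and the roles change with it.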

Third is the economy layer.

When edge agents begin to possess capabilities such as task acceptance, resource negotiation, and cost control, they naturally become participants in the machine economy. This layer is built on device wallets, encrypted identities, and programmable contract mechanisms. As I argued in the article “The Explosion of Edge AI + The Transformation of Virtual Currency, Device Wallets Open the Door to AI Agency Economy,” the total volume of M2M transactions between AI devices is expected to exceed the total volume of transactions between humans, and agents will become active nodes in the edge economic network. Economic capability not only enables agents to execute tasks but also gives them collaborative value.

The three layers of perception, coordination, and economy together constitute the “PCE capability stack” of edge agents. It not only defines the capability modules that an agent should possess but also provides a reference framework for the future system design of edge AI platforms.

Why Do Edge Agents Need an AI Operating System?

Although edge AI has made a leap from model deployment to platform management in recent years, today's mainstream edge AI platforms remain at the level of a “model runtime environment.” As AI evolves from models to agents, this traditional platform paradigm becomes inadequate.

The reason is that agents are not static inference services but dynamic services with state perception, task negotiation, and autonomous action capabilities. They require not only execution space but also a complete operating system.

We call it the “Edge AI Operating System.”

Compared to traditional AI platforms, the edge AI operating system needs to meet three core capability requirements from the underlying architecture.

First, it must have the capability to schedule heterogeneous computing resources. In edge devices, AI models may run on various computing units such as CPUs, GPUs, NPUs, or even ASICs. How to dynamically allocate and balance loads among these heterogeneous computing resources becomes a technical challenge at the operating system level.
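One simple way to picture this kind of load balancing is a placement rule that sends each inference job to the compatible compute unit that would end up least utilized. The sketch below is an assumption-laden toy: the `ComputeUnit` and `Job` types, the abstract "capacity" numbers, and the least-utilization rule are illustrative choices, not a description of how any particular operating system schedules work.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class ComputeUnit:
    name: str         # e.g. "cpu", "gpu", "npu"
    capacity: float   # abstract units of work the unit can hold at once
    load: float = 0.0


@dataclass
class Job:
    name: str
    cost: float                     # abstract units of work the job consumes
    supported_on: Tuple[str, ...]   # unit types the model can run on


def place(job: Job, units: List[ComputeUnit]) -> Optional[str]:
    """Place the job on the compatible unit with the lowest resulting utilization.

    Returns the chosen unit's name, or None if no compatible unit has room.
    """
    feasible = [u for u in units
                if u.name in job.supported_on and u.load + job.cost <= u.capacity]
    if not feasible:
        return None
    target = min(feasible, key=lambda u: (u.load + job.cost) / u.capacity)
    target.load += job.cost
    return target.name


units = [ComputeUnit("cpu", 4.0), ComputeUnit("gpu", 10.0), ComputeUnit("npu", 8.0)]
jobs = [
    Job("vision-a", 4.0, ("gpu", "npu")),
    Job("audio", 2.0, ("cpu", "npu")),
    Job("vision-b", 4.0, ("gpu", "npu")),
    Job("oversized", 20.0, ("gpu",)),
]
placements = [place(job, units) for job in jobs]
print(placements)
```

A production scheduler would also have to weigh latency, power draw, and memory bandwidth, which differ sharply across CPUs, GPUs, NPUs, and ASICs; the sketch captures only the balancing idea.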

Secondly, a true edge AI operating system should support multi-agent runtime management. This means the system must not only run models but also schedule agents, including state perception among agents, task scheduling, permission control, and behavior coordination.

This leads to the third core capability of the edge AI operating system: a triadic regulation mechanism of tasks, resources, and states. In traditional platforms, tasks are usually statically configured, resource allocation is demand-driven, and state management relies on external monitoring. In agent systems, however, these three are dynamically coupled: whether an agent can execute a task depends on its current state, available resources, and feedback from the behaviors of other agents in the system.
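The coupling of tasks, resources, and states can be sketched as a single admission check evaluated at the moment a task is offered to an agent. The `Task` fields, the state names, and the idea of peers "claiming" a physical zone are hypothetical details introduced for this example only.

```python
from dataclasses import dataclass
from typing import Set, Tuple


@dataclass(frozen=True)
class Task:
    name: str
    required_state: str  # state the agent must be in, e.g. "idle"
    cpu_needed: float    # share of local compute the task needs
    zone: str            # physical zone the task needs exclusively


def can_execute(task: Task,
                agent_state: str,
                free_cpu: float,
                zones_claimed_by_peers: Set[str]) -> Tuple[bool, str]:
    """Check state, resources, and peer feedback together.

    Returns (allowed, reason), where reason names the first failed condition.
    """
    if agent_state != task.required_state:
        return False, "state"
    if free_cpu < task.cpu_needed:
        return False, "resources"
    if task.zone in zones_claimed_by_peers:
        return False, "peer conflict"
    return True, "ok"


task = Task("transfer_defective_part", "idle", 0.3, "conveyor-2")
print(can_execute(task, "idle", 0.5, set()))            # all three conditions hold
print(can_execute(task, "charging", 0.5, set()))        # blocked by the agent's own state
print(can_execute(task, "idle", 0.1, set()))            # blocked by available resources
print(can_execute(task, "idle", 0.5, {"conveyor-2"}))   # blocked by a peer's behavior
```

Because all three inputs can change from one moment to the next, the same task may be admissible now and inadmissible a second later, which is what distinguishes this from static task configuration.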

These trends point to a fact: The rise of edge agents is forcing a paradigm reconstruction of operating systems.

If traditional operating systems were born for programs, then the upcoming edge AI operating systems will be born for agents; they must understand hardware, models, behaviors, collaboration, and ecosystems.

Currently, CIOs are facing not the question of “whether to deploy AI” but the challenge of “how to systematically plan AI.” The emergence of agents is gradually transforming AI from a “project expenditure” to a “systemic infrastructure expenditure.”

Research from ZEDEDA indicates that over 54% of enterprises have adopted a “cloud + edge” hybrid deployment model, and in the next two years, over 60% of new AI budgets are expected to be allocated to edge deployment, with nearly half explicitly targeting the construction of “autonomous AI capabilities.” This reflects a fundamental change in the structure of enterprise AI spending: from CAPEX-dominated “model procurement + deployment costs” to OPEX-dominated “intelligent services + agent subscriptions.”

Enterprises will no longer pay based on the “number of models” but will manage budgets based on the “lifecycle of agents.” Rather than purchasing a specific model outright, they will subscribe to agent functionalities and be billed based on effectiveness. All of this means that the industrialization of edge agent systems is about to accelerate.

From “Models Can Run” to “Agents Can Live”—Four Thresholds

Although the future of edge agents is becoming clearer and the technical path is gradually unfolding, the transition from “models can run” to “agents can live” is not a linear evolution but a systemic upgrade that crosses four thresholds.

First, scheduling complexity is one of the most practical and challenging issues today.

Edge scenarios are inherently heterogeneous, with diverse device types, varying computing structures, and intermittent network conditions. The models, resources, and sensor interfaces that agents rely on differ, making unified scheduling strategies difficult to implement. More complex still, agents themselves have dynamic states, and their behaviors depend on the environment and fluctuate over time; the scheduling system must not only allocate resources but also understand the current intentions and feasibility of the agents.

Model diversity constitutes the second threshold.

In practical applications of edge AI, an increasing number of tasks require collaboration between general language models and vertical industry models. However, these two types of models differ significantly in their operating mechanisms, input structures, computing power requirements, and response times, making traditional centralized model scheduling inadequate for the collaborative operation of agents.

More challenging is the third threshold: data privacy and compliance.

The defining feature of edge AI is localized intelligence, which also means that the data it relies on is highly private and sensitive, involving core assets such as enterprise operational metrics, user behavior trajectories, and production chain statuses. In traditional AI, data is uploaded to the cloud for unified training and inference, but in agent systems, data is often generated, processed, and acted on locally. How the system can achieve collaboration and learning among agents without violating data privacy becomes a difficult problem.

Finally, the issue of agent governance is gradually coming to the forefront.

Multiple agents collaborating in the same system inevitably lead to resource competition, task conflicts, strategy competition, and even information deception. The traditional task priority system becomes complex within the agent framework, especially when agents possess learning or self-updating capabilities, making their behavior paths unpredictable and increasing system risks.

Only by overcoming these four thresholds can agents truly “come to life,” capable not only of running and collaborating but also of continuously evolving, self-repairing, and operating safely within complex systems.

Final Thoughts

The future of edge AI lies not in deploying more models but in activating more “understanding, acting, and collaborating” agents. So-called intelligence is no longer about stacking computing power and model inference in the cloud, but about machines possessing perception and purpose in the physical world, having the ability to react and judge in local environments. In this upcoming new phase, enterprises will no longer just deploy models but will schedule agents.

AI is no longer merely running at the edge; it is beginning to think from the edge.

For enterprises, this is no longer a question of “whether to adopt AI” but a strategic decision of “whether they can build their own agent ecosystem.”

The future of edge agents is not as tools but as partners. They will make decisions, collaborate in execution, and coexist with humans. We are not training models; we are shaping new organizational boundaries, new system intelligence, and new industrial orders.

References:

1. Edge AI Matures: Widespread Adoption, Rising Budgets, and New Priorities Revealed in ZEDEDA’s CIO Survey, Source: ZEDEDA
2. 16 Changes to AI in the Enterprise: 2025 Edition, Source: a16z.com
3. Why is EDGE AI growing so fast, Source: imaginationtech.com