With the explosive growth of information in modern society, ever more memory and AI computing resources are devoted to storing and processing that information. Even powerful cloud computing struggles to keep up with the resulting demands on data bandwidth and transmission speed. At the same time, growing concern for data privacy has made edge AI computing an attractive breakthrough that can support both industry and daily life in the era of big data. Embedded memory is a key part of edge computing. This article introduces how edge AI and embedded memory enable computation in big-data networks.

What Is Edge AI Computing

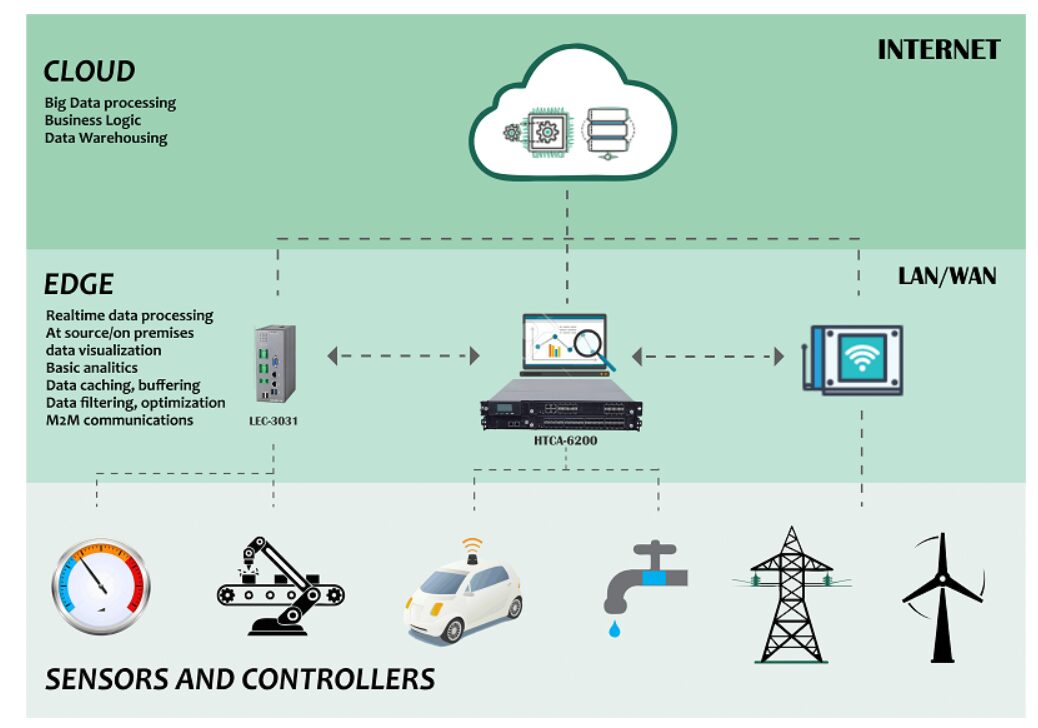

Edge computing is a computing model that moves computation closer to where data is generated. Unlike cloud computing, which relies on remote servers for large-scale computation, edge computing does not require data to be uploaded to the cloud; instead, data is processed on or near the device that produces it (the "edge"). The focus is on moving basic computations onto edge terminal devices, which reduces the power spent on data transmission, improves data timeliness, and strengthens user privacy.

Figure 1. The computational architecture model and relationship between Edge AI and Cloud AI ref[1]

Edge AI extends edge computing. Driven by rapid gains in cloud-server computing capability, artificial intelligence and machine learning have advanced significantly, producing increasingly sophisticated artificial neural networks. In the era of big data, massive amounts of information are generated every day and must be analyzed and processed. As machine learning frameworks, neural network architectures, and related tools are adapted to embedded systems, more and more AI applications can run directly on edge devices, making edge AI a hot topic of discussion and research.

Edge AI refers to AI algorithms that run on local hardware and can process data without a network connection. To achieve this, the neural network weights are typically trained with deep learning in the cloud, while inference and prediction are executed on the device where the data is generated (the edge). Major vendors are already exploring this field: cloud providers such as Google, Amazon, and Microsoft have launched edge AI service platforms that run pre-trained models locally on terminal devices for machine learning inference, bringing intelligent services to the edge. Many other companies are also active in research, and a variety of edge AI chips are already on the market.
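The cloud-training, edge-inference split described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any vendor platform works: a tiny logistic-regression model stands in for cloud-side deep learning, `train_in_cloud` and `EdgeModel` are hypothetical names, the sensor data is made up, and JSON stands in for whatever format actually ships the weights to the device.

```python
import json
import math


# Hypothetical "cloud side": tiny logistic regression standing in for deep learning.
def train_in_cloud(samples, labels, epochs=500, lr=0.5):
    """Fit weights with stochastic gradient descent and export only the parameters."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability
            err = p - y                      # gradient of the log-loss w.r.t. z
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    # Only the serialized parameters are shipped to the edge device.
    return json.dumps({"w": w, "b": b})


# Hypothetical "edge side": load pre-trained weights and infer locally.
class EdgeModel:
    def __init__(self, exported: str):
        params = json.loads(exported)
        self.w = params["w"]
        self.b = params["b"]

    def predict(self, x) -> int:
        """Local inference: one dot product and a threshold, no network access."""
        z = sum(wi * xi for wi, xi in zip(self.w, x)) + self.b
        return 1 if z > 0 else 0


# Made-up normalized sensor readings (e.g. temperature, vibration) and alarm labels.
X = [[0.1, 0.2], [0.2, 0.1], [0.9, 0.8], [0.8, 0.9]]
y = [0, 0, 1, 1]
model = EdgeModel(train_in_cloud(X, y))
print(model.predict([0.9, 0.9]), model.predict([0.1, 0.1]))
```

Only the exported weights cross the network; the training data stays in the cloud and every later inference stays on the device. Real deployments use far larger networks and dedicated inference runtimes, but the division of labour is the same.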

Advantages of Edge AI Computing

With the explosive growth of the Internet of Things (IoT), smart devices such as smartphones, home appliances, cars, and wearables have gradually joined the IoT, and they generate massive amounts of data during operation. Existing cloud bandwidth and computing resources cannot process all of this data effectively in real time, and uploading it to the cloud also risks data loss and leakage. Moreover, terminal devices consume considerable energy sending and receiving wireless data, which limits the large-scale deployment of battery-powered devices, raises maintenance costs, and slows IoT adoption. As a result, big data and AI devices in many industries are shifting from centralized, cloud-centered processing toward edge processing built around the Internet of Everything. Reducing data transmission to and from the cloud improves real-time updating, processing, and analysis, complements the cloud in the overall computing chain, and makes the most efficient use of computing resources.

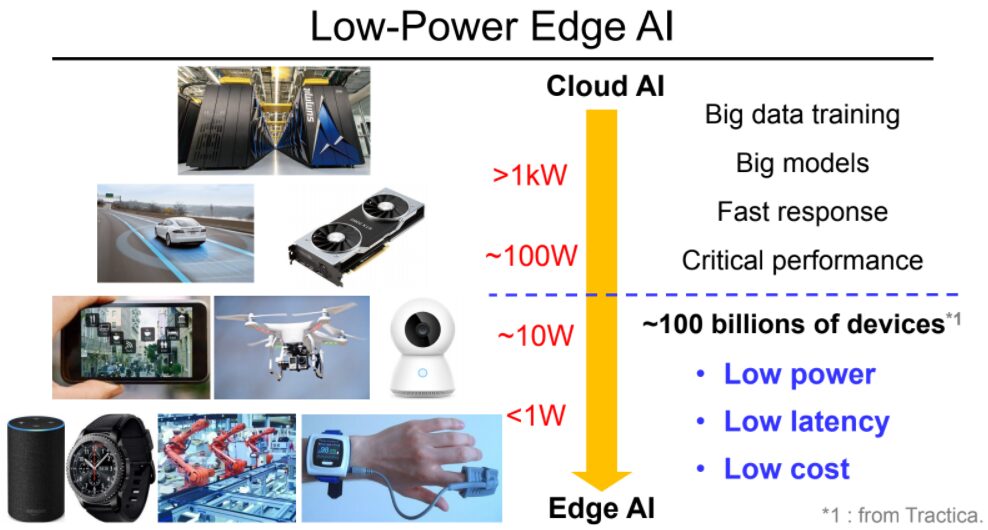

Figure 2. Edge AI can provide lower power consumption, latency, and costs compared to cloud AI technology ref[2]

Edge AI computing has several advantages over cloud computing:

First, edge AI computing eases bandwidth pressure. Because large volumes of raw data are processed locally instead of being uploaded to the cloud, the network bandwidth load drops significantly, and so does the energy spent on network transmission.

Second, edge AI computing reduces system and data latency. Processing data on the device shortens response times considerably. For example, in an emergency a smart car can capture signals with its onboard sensors, analyze them, and react immediately. Because the signals are handled close to their source rather than in the cloud or an external processor, latency falls and the vehicle can respond quickly.

Third, edge AI computing helps protect user privacy. Sensitive data, such as passwords from smart locks, footage from smart cameras, personal health data from wearables, and conversations captured by voice assistants, does not need to be uploaded to the cloud, which reduces exposure to hacking and malware and improves data security.
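The bandwidth and latency advantages above come down to simple arithmetic. The sketch below uses made-up figures: a camera streaming 4 Mbit/s, 240 event messages of 200 bytes per day, a car at 100 km/h, and a 100 ms network round trip plus 20 ms of cloud inference versus 10 ms of on-board inference. All function names are hypothetical and the numbers are assumptions, not measurements.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def cloud_upload_bytes_per_day(stream_mbit_per_s: float) -> float:
    """Bytes uploaded per day if a sensor streams its raw data to the cloud."""
    return stream_mbit_per_s * 1e6 / 8 * SECONDS_PER_DAY


def edge_upload_bytes_per_day(events_per_day: int, bytes_per_event: int) -> int:
    """Bytes uploaded per day if inference runs locally and only results are sent."""
    return events_per_day * bytes_per_event


def metres_travelled(speed_km_per_h: float, latency_ms: float) -> float:
    """Distance a vehicle covers while waiting for a decision."""
    return speed_km_per_h / 3.6 * latency_ms / 1000.0


# Hypothetical camera: 4 Mbit/s stream vs 240 event messages of 200 bytes each.
cloud = cloud_upload_bytes_per_day(4)
edge = edge_upload_bytes_per_day(240, 200)
print(f"cloud: {cloud / 1e9:.1f} GB/day, edge: {edge / 1e3:.0f} kB/day")

# Hypothetical car at 100 km/h: 100 ms round trip + 20 ms cloud inference,
# versus 10 ms of on-board inference.
print(f"cloud path: {metres_travelled(100, 100 + 20):.2f} m")
print(f"edge path:  {metres_travelled(100, 10):.2f} m")
```

With these assumed figures, streaming uploads about 43.2 GB per day versus 48 kB for event messages, and the car covers about 3.33 m before a cloud decision arrives versus 0.28 m with on-board inference.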

Edge AI Computing and Embedded Memory

Memory is a crucial component of any computer architecture, and edge devices and edge AI computing depend on advanced storage technology to support their development. This section introduces the relationship between edge AI computing and embedded memory.

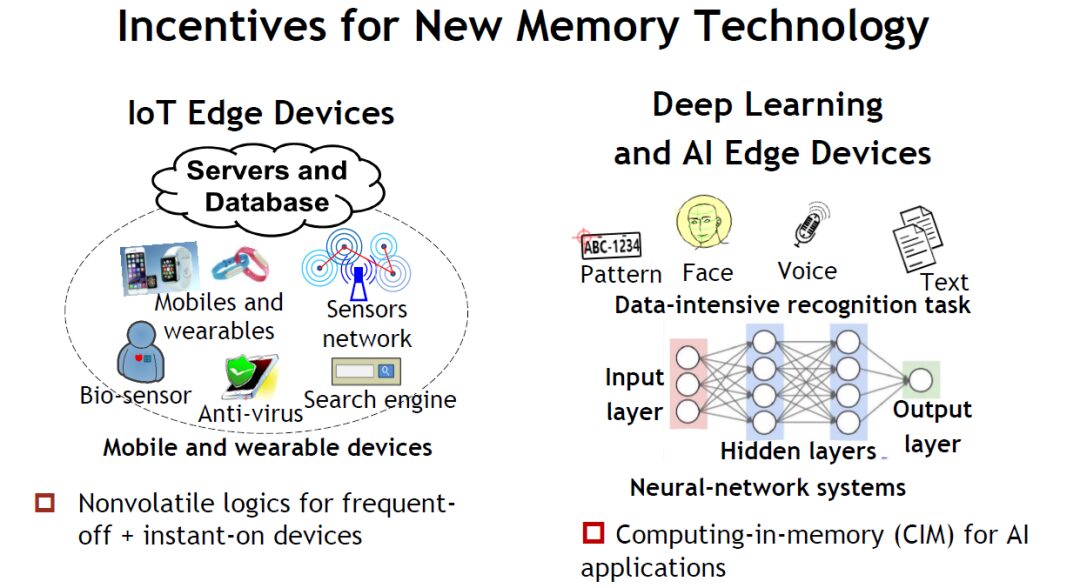

Figure 3. With the development of IoT devices and edge AI computing, new storage technologies are applied in various scenarios ref[3]

Although edge AI computing offers significant advantages in bandwidth usage, latency, and privacy protection, it also faces challenges such as power consumption and weight storage. Edge computing is increasingly used in industrial IoT, robotics, wearables, and smart cars. In these applications, terminal devices collect data continuously; the weights are trained and updated in the cloud and then sent back to the terminal. As this demand grows, program storage, data backup, and data logging increasingly require memory that is fast, low-latency, non-volatile, low-power, and low-cost.
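The weight-storage challenge can be checked with back-of-the-envelope arithmetic: every time the cloud sends updated weights, the terminal must hold them on chip. The sketch below assumes a hypothetical model with 200,000 weights, a hypothetical 4 Mbit embedded memory budget, and either 8 or 32 bits per weight; the function names are illustrative.

```python
MBIT = 2**20  # bits per megabit, using the binary convention common for memory sizes


def weight_storage_mbit(num_weights: int, bits_per_weight: int) -> float:
    """Storage needed for a model's weights, in megabits."""
    return num_weights * bits_per_weight / MBIT


def fits(num_weights: int, bits_per_weight: int, budget_mbit: float) -> bool:
    """True if the weights fit in the given on-chip memory budget."""
    return weight_storage_mbit(num_weights, bits_per_weight) <= budget_mbit


# Hypothetical model with 200,000 weights and a 4 Mbit embedded memory budget.
for bits in (8, 32):
    need = weight_storage_mbit(200_000, bits)
    print(f"{bits}-bit weights: {need:.2f} Mbit, fits: {fits(200_000, bits, 4.0)}")
```

Under these assumptions, 8-bit weights need about 1.53 Mbit and fit, while 32-bit weights need about 6.10 Mbit and do not, which is why the bit width of stored weights matters as much as the parameter count.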

Data logging in terminal devices generally requires several megabits of memory to hold data accumulated over long periods. Data loggers are typically battery-powered, so the memory must also be low-power. And because recorded data must survive system standby and power-off for long periods, the memory must be non-volatile (NVM).
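To see why "several megabits" is the right order of magnitude, the sketch below estimates how long a logger can record before its memory fills. The record layout (a 32-bit timestamp plus six 16-bit sensor channels), the 4 Mbit capacity, and the one-record-per-minute rate are all hypothetical assumptions; `struct` is used only to pin down the record size.

```python
import struct

# Hypothetical record: 32-bit timestamp + six 16-bit channels, little-endian, no padding.
RECORD_FORMAT = "<I6h"
RECORD_BYTES = struct.calcsize(RECORD_FORMAT)  # 4 + 6 * 2 = 16 bytes

MBIT = 2**20  # binary megabit


def capacity_records(memory_mbit: float, record_bytes: int = RECORD_BYTES) -> int:
    """Number of whole records that fit in the memory."""
    total_bytes = int(memory_mbit * MBIT) // 8
    return total_bytes // record_bytes


def logging_days(memory_mbit: float, records_per_minute: float) -> float:
    """Days of data that fit before the memory is full."""
    return capacity_records(memory_mbit) / records_per_minute / (60 * 24)


def pack_record(timestamp: int, channels) -> bytes:
    """Serialize one record as it would be written to the NVM."""
    return struct.pack(RECORD_FORMAT, timestamp, *channels)


print(RECORD_BYTES, capacity_records(4), round(logging_days(4, 1), 1))
```

Under these assumptions, 4 Mbit holds 32,768 records, or roughly three weeks of one-per-minute logging; a faster sampling rate or more channels pushes the requirement up quickly.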

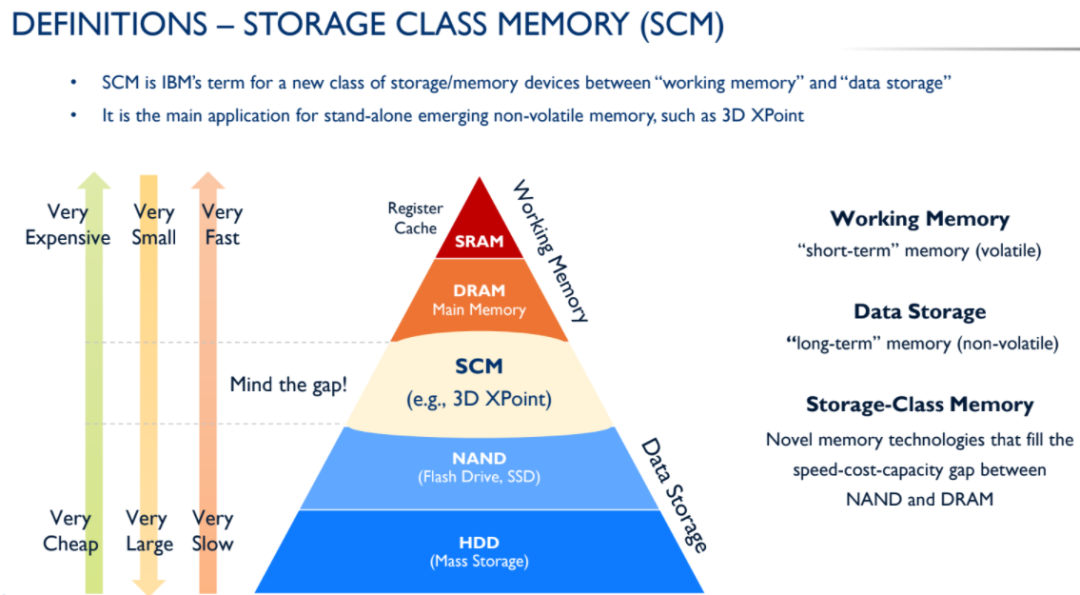

Figure 4. NVM achieves a balance in cost, area, and speed, filling the gap between Memory and Storage ref[4]

Magnetoresistive random-access memory (MRAM), a type of non-volatile memory, meets these requirements well. In edge AI applications that do not demand peak performance, MRAM can operate at lower voltages to improve energy efficiency. Because many IoT and wearable devices are battery-powered, they need memory with low power consumption and low leakage. GlobalFoundries’ MRAM, built on its 22FDX technology, offers low power consumption and a Deep Sleep Mode that reduces leakage, significantly extending the standby time of terminal devices. Its long data retention preserves data correctly over extended periods, it operates normally across extreme temperatures (-40℃ to 125℃), and its short access times enable fast data retrieval. Furthermore, compared with external memory, embedded MRAM reduces PCB area and cost.
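The effect of a low-leakage sleep mode on standby time can be estimated with a duty-cycle model: average current is the time-weighted mix of active and sleep current, and ideal battery life is capacity divided by that average. Every figure below (coin-cell capacity, active current, 0.1% duty cycle, memory standby currents) is a hypothetical assumption chosen to show the trend, not a GlobalFoundries specification.

```python
def average_current_ma(active_ma: float, sleep_ma: float, active_fraction: float) -> float:
    """Time-weighted average current of a duty-cycled device."""
    return active_fraction * active_ma + (1.0 - active_fraction) * sleep_ma


def battery_life_days(capacity_mah: float, avg_ma: float) -> float:
    """Ideal battery life, ignoring self-discharge and temperature effects."""
    return capacity_mah / avg_ma / 24.0


# All figures below are hypothetical, chosen only to illustrate the trend.
CAPACITY_MAH = 225.0      # small coin cell
ACTIVE_MA = 2.0           # current while sensing/computing
ACTIVE_FRACTION = 0.001   # device is active 0.1% of the time
BASE_SLEEP_MA = 0.003     # rest of the system while asleep

for label, memory_standby_ma in (("normal standby", 0.010), ("deep sleep", 0.0005)):
    avg = average_current_ma(ACTIVE_MA, BASE_SLEEP_MA + memory_standby_ma, ACTIVE_FRACTION)
    print(f"{label}: {battery_life_days(CAPACITY_MAH, avg):.0f} days")
```

Because the device sleeps 99.9% of the time in this scenario, sleep current dominates the average, so cutting memory leakage from 10 µA to 0.5 µA stretches the estimated life from about 626 to about 1706 days.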

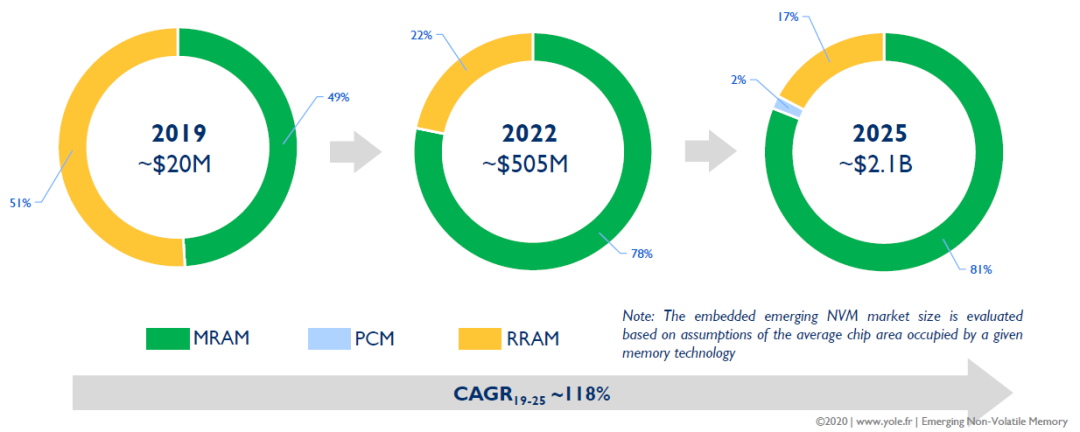

Figure 5. MRAM’s market share in embedded applications is expected to increase and evolve from 2019 to 2025 ref[4]

Near-Memory Computing in Edge AI

To further improve edge AI, the energy and latency spent moving data must be reduced, which means shrinking the physical distance between memory and computing units. Near-memory computing emerged to address exactly this.

Near-memory computing, as the name suggests, processes data close to where it is stored by placing the computing units physically near the storage units. This reduces the load on the CPU and memory of the computing nodes, greatly eases the data-transfer load, and significantly shortens application response times. It offers a new way to optimize data-intensive workloads, such as data-center-based big data and artificial intelligence applications.
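The benefit of moving compute next to memory shows up clearly in an energy budget, because fetching an operand from distant memory can cost far more than the arithmetic itself. The sketch below uses hypothetical per-operation energies (640 pJ per far fetch, 5 pJ per near fetch, 4 pJ per MAC) purely for illustration; real values depend heavily on process node and memory type, and `mac_energy_pj` is an illustrative name.

```python
def mac_energy_pj(num_macs: int, fetch_pj: float, mac_pj: float,
                  fetches_per_mac: int = 2) -> float:
    """Energy for a batch of MACs when every operand (weight and input) is fetched."""
    return num_macs * (fetches_per_mac * fetch_pj + mac_pj)


# Hypothetical per-operation energies in picojoules, for illustration only.
MAC_PJ = 4.0          # one multiply-accumulate
FAR_FETCH_PJ = 640.0  # operand fetched from distant, off-chip memory
NEAR_FETCH_PJ = 5.0   # operand fetched from memory next to the compute unit

far = mac_energy_pj(1_000_000, FAR_FETCH_PJ, MAC_PJ)
near = mac_energy_pj(1_000_000, NEAR_FETCH_PJ, MAC_PJ)
print(f"far: {far / 1e6:.1f} uJ, near: {near / 1e6:.1f} uJ, ratio: {far / near:.0f}x")
```

With these assumed figures, one million MACs cost about 1284 µJ when operands come from far memory but only about 14 µJ when they come from nearby memory, even though the arithmetic energy is identical in both cases.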

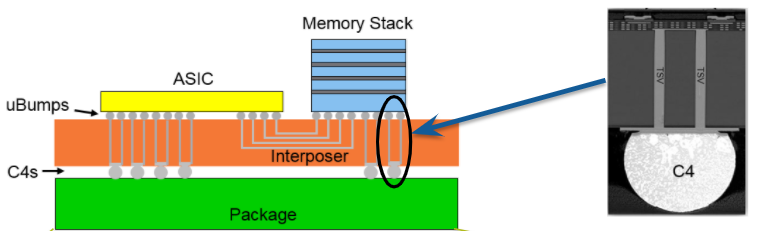

One approach to near-memory computing is 2.5D packaging with High Bandwidth Memory (HBM). 2.5D packaging places multiple small dies side by side on a single substrate, typically a silicon interposer. Its main advantages are higher computing throughput, higher bandwidth, and relatively low cost and power consumption, giving a good balance among cost, performance, and reliability.

Figure 6. GlobalFoundries’ 2.5D packaging technology helps reduce the physical distance between memory and computing units ref[5]
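The bandwidth advantage of HBM comes from interface width: peak bandwidth is bus width times per-pin data rate, and 2.5D packaging makes a very wide bus practical because the interposer provides dense, short wiring between memory and logic. The sketch below compares one HBM2 stack (1024-bit interface at 2.0 Gbit/s per pin) with one DDR4-3200 channel (64-bit at 3.2 Gbit/s per pin). These are theoretical peak interface figures, not measurements of any GlobalFoundries product, and the function name is illustrative.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, gbit_per_s_per_pin: float) -> float:
    """Peak interface bandwidth in GB/s = bus width x per-pin data rate / 8."""
    return bus_width_bits * gbit_per_s_per_pin / 8


# One HBM2 stack: 1024-bit interface at 2.0 Gbit/s per pin.
hbm2 = peak_bandwidth_gb_s(1024, 2.0)
# One DDR4-3200 channel: 64-bit interface at 3.2 Gbit/s per pin.
ddr4 = peak_bandwidth_gb_s(64, 3.2)
print(f"HBM2 stack: {hbm2:.1f} GB/s, DDR4-3200 channel: {ddr4:.1f} GB/s")
```

The HBM2 stack reaches 256 GB/s at a lower per-pin rate than DDR4, roughly ten times the single channel's 25.6 GB/s, simply because its interface is 16 times wider.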

GlobalFoundries’ 2.5D packaging technology entered commercial production in 2017 and has since been used in high-end chips from system manufacturers in China and abroad. It supports a range of product sizes and uses a three-metal-layer process for cost advantages. For 2.5D advanced-packaging design services, GlobalFoundries has completed the full development flow from physical implementation through simulation analysis to physical verification, and it continues to add support for the design flows of several mainstream EDA vendors. GlobalFoundries has also established long-term partnerships with third-party IP vendors and OSATs (outsourced semiconductor assembly and test providers) such as Amkor and ASE, offering a one-stop service from design to production. This gives customers fast, high-quality, cost-effective design and production, helping their products reach the market sooner while retaining their own distinctive technology and solutions.

In-Memory Computing in Edge AI

Demand for computing power in big data processing and machine learning will keep rising, and edge AI will also need greater computing capacity and efficiency. Such demand collides with the memory wall of the von Neumann architecture, in which shuttling data between separate memory and processing units limits performance and energy efficiency and constrains the development of the electronic information field. Recently introduced compute-in-memory (in-memory computing) chips are a promising answer. Compute-in-memory technology aims to shift from a compute-centric to a data-centric architecture by processing data directly in memory, integrating storage and computation on the same chip and thereby sidestepping the von Neumann bottleneck. Because edge AI workloads such as convolutional neural networks (CNNs) consist mainly of simple multiply-accumulate (MAC) operations, performing these MACs inside memory is highly feasible.
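To see how heavily a CNN leans on MAC operations, the sketch below counts them for a single convolution layer: each output value needs kernel height × kernel width × input channels MACs. The layer shape (56×56 input, 64 to 64 channels, 3×3 kernel, stride 1, padding 1) is a hypothetical example, and the function names are illustrative.

```python
def conv_out_size(in_size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Standard output-size formula for a 2-D convolution along one axis."""
    return (in_size + 2 * padding - kernel) // stride + 1


def conv2d_macs(h: int, w: int, c_in: int, c_out: int, k: int,
                stride: int = 1, padding: int = 0) -> int:
    """MACs for one conv layer: every output value needs k * k * c_in MACs."""
    h_out = conv_out_size(h, k, stride, padding)
    w_out = conv_out_size(w, k, stride, padding)
    return h_out * w_out * c_out * (k * k * c_in)


def conv2d_weights(c_in: int, c_out: int, k: int) -> int:
    """Number of weights (bias ignored) the memory must hold for the layer."""
    return k * k * c_in * c_out


# Hypothetical layer: 56x56 input, 64 -> 64 channels, 3x3 kernel, stride 1, padding 1.
macs = conv2d_macs(56, 56, 64, 64, 3, stride=1, padding=1)
weights = conv2d_weights(64, 64, 3)
print(macs, weights, macs // weights)
```

This one layer needs about 115.6 million MACs but only 36,864 weights, so each weight is reused 3,136 times (once per output position). That heavy reuse of a small, fixed set of weights is what makes keeping the weights in memory and computing there attractive.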

In a conventional architecture running a CNN, the memory bit-cells only store the weights. To compute, each weight must be read out of memory and combined with the input data in a digital processor, which then outputs the result. In-memory computing instead performs the weight-input multiply-accumulate directly inside the memory array: multiple word-lines are activated simultaneously, rather than the single word-line selected by the address decoder in a conventional memory read, which greatly improves computational efficiency and throughput. This makes in-memory computing particularly well suited to deep neural networks, which are massively parallel and data-intensive.
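The contrast between reading one word-line at a time and activating many at once can be modeled in software. In the sketch below, a weight matrix stands for the memory array (rows are word-lines, columns are bit-lines) and each input value drives one word-line. It is an idealized dataflow model under stated assumptions: it counts array accesses only and ignores the analog summation, ADCs, and non-idealities of real compute-in-memory circuits; the function names and values are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[int]]


def conventional_mac(weights: Matrix, inputs: List[int]) -> Tuple[List[int], int]:
    """Read one word-line (row) per access; a digital unit does the MACs."""
    acc = [0] * len(weights[0])
    accesses = 0
    for row, x in zip(weights, inputs):
        accesses += 1  # the address decoder selects a single row per read
        for j, w in enumerate(row):
            acc[j] += w * x
    return acc, accesses


def in_memory_mac(weights: Matrix, inputs: List[int]) -> Tuple[List[int], int]:
    """Idealized CIM: all word-lines driven at once; each bit-line sums its column."""
    n_rows, n_cols = len(weights), len(weights[0])
    acc = [sum(weights[i][j] * inputs[i] for i in range(n_rows)) for j in range(n_cols)]
    return acc, 1  # one array access produces every column's dot product


# Hypothetical 4x3 weight array and binary input activations.
W = [[1, 2, 0], [3, 1, 1], [0, 5, 2], [2, 0, 4]]
x = [1, 0, 1, 1]
print(conventional_mac(W, x), in_memory_mac(W, x))
```

Both paths produce the same dot products ([3, 7, 6] here), but the conventional path needs one array access per row while the idealized in-memory path needs a single access, and that gap grows with the number of rows in the array.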

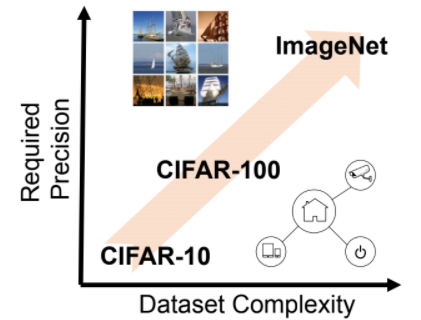

Figure 7. Utilizing SRAM’s storage and computation characteristics to address bottlenecks in computing architecture and recognize high-precision datasets ref[6]

Edge-device solutions are highly cost-sensitive, so many edge AI chip suppliers deliver products on mature nodes around 40nm. Today these processes are a good fit and inexpensive, but looking ahead, suppliers want to achieve higher performance at lower power.

GlobalFoundries’ new 12LP+ technology offers an alternative path: it lowers power consumption by reducing the operating voltage rather than the transistor size. GlobalFoundries has also developed new SRAM and multiply-accumulate (MAC) circuits optimized specifically for AI acceleration. The SRAM cells in the 12LP+ process include dual-function transistors that reduce the required voltage margin, allowing the operating voltage to be lowered.
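Why lowering voltage is such an effective lever follows from the standard CMOS dynamic-power estimate, P = α·C·V²·f: power scales with the square of the supply voltage. The sketch below compares 0.80 V and 0.55 V for a hypothetical block (10% activity, 1 nF switched capacitance, 500 MHz); these voltages and parameters are assumptions, not 12LP+ specifications.

```python
def dynamic_power_w(activity: float, capacitance_f: float, vdd_v: float, freq_hz: float) -> float:
    """Classic CMOS switching-power estimate: P = alpha * C * V^2 * f."""
    return activity * capacitance_f * vdd_v ** 2 * freq_hz


# Hypothetical block: 10% activity, 1 nF switched capacitance, 500 MHz.
p_high = dynamic_power_w(0.1, 1e-9, 0.80, 500e6)
p_low = dynamic_power_w(0.1, 1e-9, 0.55, 500e6)
print(f"{p_high * 1e3:.1f} mW -> {p_low * 1e3:.1f} mW ({p_low / p_high:.0%} of original)")
```

Dropping the supply from 0.80 V to 0.55 V cuts dynamic power to about 47% at the same frequency. In practice a lower voltage usually also lowers the maximum clock frequency and affects leakage, which this simple model ignores.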

Conclusion

Cloud computing has long been the preferred choice for data management, processing, and storage, but it has limitations. Edge computing offers an alternative, yet because the technology is still in its early stages it faces many challenges, such as developing software and hardware that can take on tasks offloaded from the cloud, and enabling machines to decide which computing tasks can run at the edge and which must run in the cloud.

Nevertheless, as edge AI computing becomes more widely adopted, enterprises will have more opportunities to test and deploy it across many fields, and embedded memory will gain new momentum along with it.

Wang Ruizhe, Senior R&D Engineer, China Chip Design Center

Liu Lei, Department of Micro and Nano Electronics, Shanghai Jiao Tong University

Meng Chao, Senior Design Manager, China Chip Design Center

Li Qing, Director, China Chip Design Center
