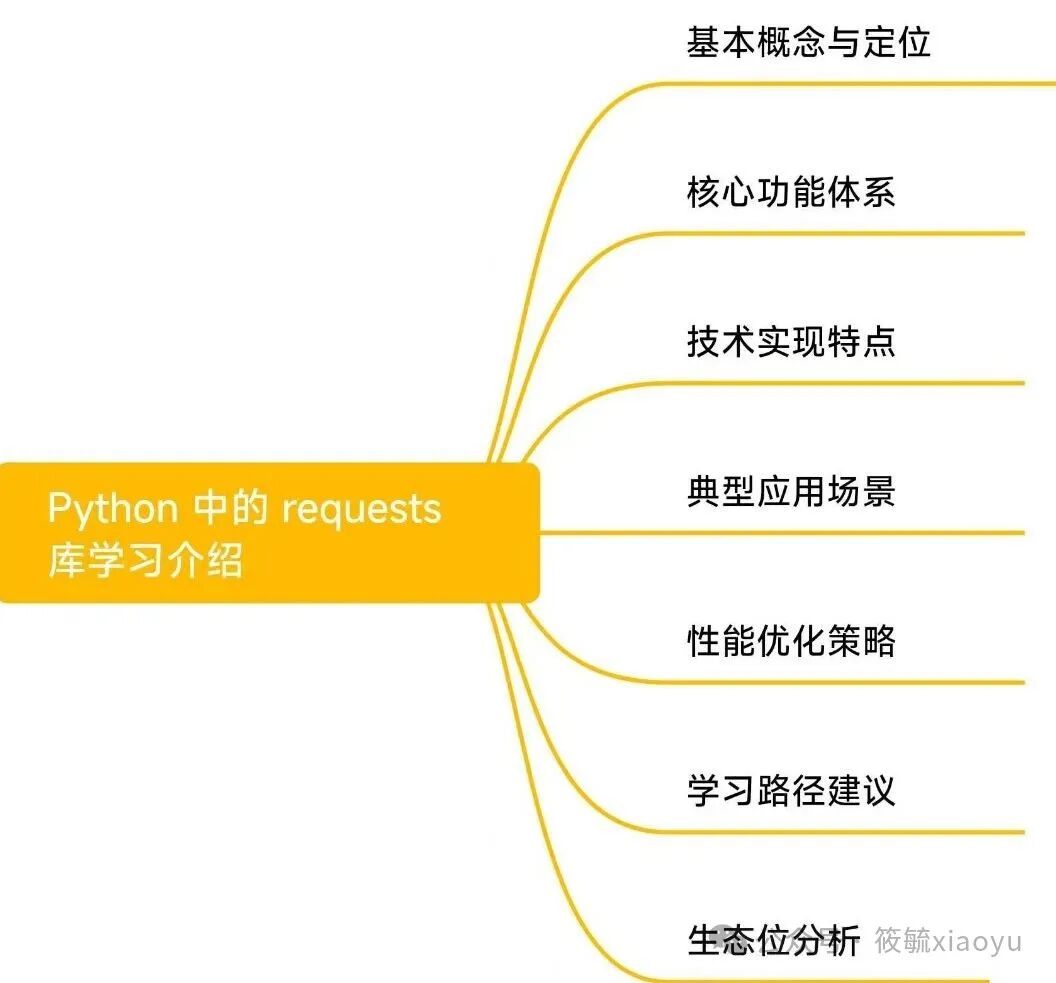

What would a Python web scraper do without Requests? This HTTP library is the Swiss Army knife of data acquisition. Whether you’re scraping a webpage, calling an API, or downloading a file, a few lines of code get the job done without touching the low-level details. Today, let’s take a look at what makes this package so powerful.

Installation is super simple

pip install requests

Once installed, it is ready to use right away: pip pulls in its handful of dependencies automatically, so there is nothing else to set up.

Sending requests is straightforward

The most basic GET request can be done in one line:

import requests

response = requests.get('https://www.python.org')

print(response.status_code) # 200 means success

print(response.text[:100]) # Print the first 100 characters
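In a real scraper the request will not always succeed, so it helps to wrap the one-liner above. Here is a minimal sketch, assuming you want `None` back on any failure; `fetch_text` is a made-up helper name, not part of Requests, and the 5-second default timeout is an arbitrary choice.

```python
import requests


def fetch_text(url, timeout=5):
    """Return the response body as text, or None if the request fails.

    Hypothetical helper for illustration; not part of the Requests API.
    """
    try:
        response = requests.get(url, timeout=timeout)
        # Turn 4xx/5xx status codes into requests.exceptions.HTTPError
        response.raise_for_status()
    except requests.exceptions.RequestException as exc:
        # Base class for invalid URLs, connection errors, timeouts, HTTP errors
        print(f"Request to {url} failed: {exc}")
        return None
    return response.text
```

Calling `fetch_text('https://www.python.org')` should then give you either the page text or `None`, without an unhandled exception in between.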

Want to send a GET request with parameters? Just pass a params argument:

params = {'key1': 'value1', 'key2': 'value2'}

response = requests.get('https://httpbin.org/get', params=params)

print(response.url) # Check the actual request URL

Sending data with a POST request is also very intuitive:

data = {'username': 'admin', 'password': '123456'}

response = requests.post('https://httpbin.org/post', data=data)

print(response.json()) # Directly parse the JSON response

Handling responses in various ways

Requests makes handling response content super convenient:

# Text content

print(response.text)

# JSON data (automatically parsed)

print(response.json())

# Binary content

print(response.content)

# Response headers

print(response.headers)

# Response status code

print(response.status_code)

Friendly reminder: confirm that the response really is JSON before calling `response.json()`, or it will raise an exception. You can check whether `response.headers['Content-Type']` contains 'application/json'.
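The reminder above can be turned into a small guard. This is only a sketch: `json_or_none` is a hypothetical helper name, and it assumes the decoding error raised by `response.json()` is a `ValueError` subclass, which is the case for the Requests releases I know of.

```python
def json_or_none(response):
    """Return the parsed JSON body, or None if the response is not JSON.

    Hypothetical helper; works with any object that has `.headers`
    and `.json()`, such as a requests.Response.
    """
    content_type = response.headers.get("Content-Type", "")
    # The header may carry extras, e.g. "application/json; charset=utf-8"
    if "application/json" not in content_type.lower():
        return None
    try:
        return response.json()
    except ValueError:
        # The header claimed JSON but the body could not be decoded
        return None
```

With this in place, `data = json_or_none(response)` lets you branch on `data is None` instead of wrapping every call in try/except.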

Customizing requests in various ways

Add custom headers to dress up your requests:

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36'}

response = requests.get('https://httpbin.org/get', headers=headers)

Set a timeout to avoid letting your program hang:

response = requests.get('https://httpbin.org/get', timeout=3) # Raises an exception if no response after 3 seconds
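One detail worth knowing: the timeout is not a limit on the whole download. It applies to establishing the connection and to each wait for data from the server. Requests also accepts a `(connect, read)` tuple if you want different limits for the two phases. A rough sketch, where `get_or_none_on_timeout` and its default values are my own illustrative choices:

```python
import requests


def get_or_none_on_timeout(url, connect_timeout=3, read_timeout=10):
    """Send a GET request; return None if either timeout is exceeded.

    Hypothetical helper for illustration; other errors still propagate.
    """
    try:
        # timeout=(connect, read): seconds allowed to connect, then
        # seconds allowed for each wait on data from the server
        return requests.get(url, timeout=(connect_timeout, read_timeout))
    except requests.exceptions.Timeout:
        # Parent class of both ConnectTimeout and ReadTimeout
        return None
```

Whether to return `None`, retry, or re-raise on a timeout depends on your scraper; returning `None` here just keeps the example short.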

Handling cookies from websites:

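# This endpoint sets the cookie on a redirect response, so don't follow the redirect

response = requests.get('https://httpbin.org/cookies/set/sessionid/123456789', allow_redirects=False)

print(response.cookies['sessionid']) # Get the cookie set by the server

Session objects to maintain connections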

Want to maintain session state? The Session object can help:

session = requests.Session()

session.get('https://httpbin.org/cookies/set/sessionid/123456789')

response = session.get('https://httpbin.org/cookies') # Automatically carries over the previous cookie

print(response.json())

When scraping, the Session object is very useful for maintaining login state and reusing connections, which improves efficiency.
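A Session can also carry default headers for every request, and it works as a context manager so its connections are closed when you are done. The sketch below stays offline by using `prepare_request()` to show what the session would send; `make_session` and the `my-scraper/0.1` User-Agent are made-up names for illustration.

```python
import requests


def make_session(user_agent="my-scraper/0.1"):
    """Create a Session whose headers apply to every request it sends.

    Hypothetical helper; the User-Agent value is just a placeholder.
    """
    session = requests.Session()
    session.headers.update({"User-Agent": user_agent})
    return session


# Leaving the `with` block closes the session and its pooled connections
with make_session() as session:
    # prepare_request() merges the session defaults into the request
    # without sending anything over the network
    prepared = session.prepare_request(
        requests.Request("GET", "https://httpbin.org/get", params={"page": 1})
    )
    print(prepared.url)                    # https://httpbin.org/get?page=1
    print(prepared.headers["User-Agent"])  # my-scraper/0.1
```

In an actual scraper you would call `session.get(...)` inside the `with` block instead of preparing the request by hand.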

Downloading large files is also a breeze:

response = requests.get('https://example.com/large-file.zip', stream=True)

with open('large-file.zip', 'wb') as f:

    # Write the body in 8 KB chunks instead of loading it all into memory

    for chunk in response.iter_content(chunk_size=8192):

        f.write(chunk)

With this, you can handle most scraping and download jobs with very concise code. If you’re still using urllib, it’s time to switch! Your next scraper written with Requests will get twice the result with half the effort.
