MCP has been widely discussed recently, yet one of its most important implications is often overlooked: by decoupling tool providers from application developers through a standardized protocol, MCP is shifting the development paradigm of AI Agent applications, much as front-end/back-end separation did for web development.

This article uses the development of Agent TARS (https://agent-tars.com/) as an example to explain MCP's role in this development paradigm and in expanding the tool ecosystem.

Terminology Explanation

AI Agent: In the context of LLMs, an AI Agent is an entity that can autonomously understand intentions, make plans and decisions, and execute complex tasks. An Agent is not just an upgraded ChatGPT: it not only tells you “how to do it” but also does it for you. If Copilot is the co-pilot, the Agent is the pilot. Much like the way humans get things done, an Agent's core functions can be summarized as a three-step cycle: Perception, Planning, and Action.

Copilot: Copilot refers to an AI-based assistant tool, typically integrated with specific software or applications, designed to help users improve work efficiency. Copilot systems analyze user behavior, input, data, and history to provide real-time suggestions, automate tasks, or enhance functionality, assisting users in decision-making or simplifying operations.

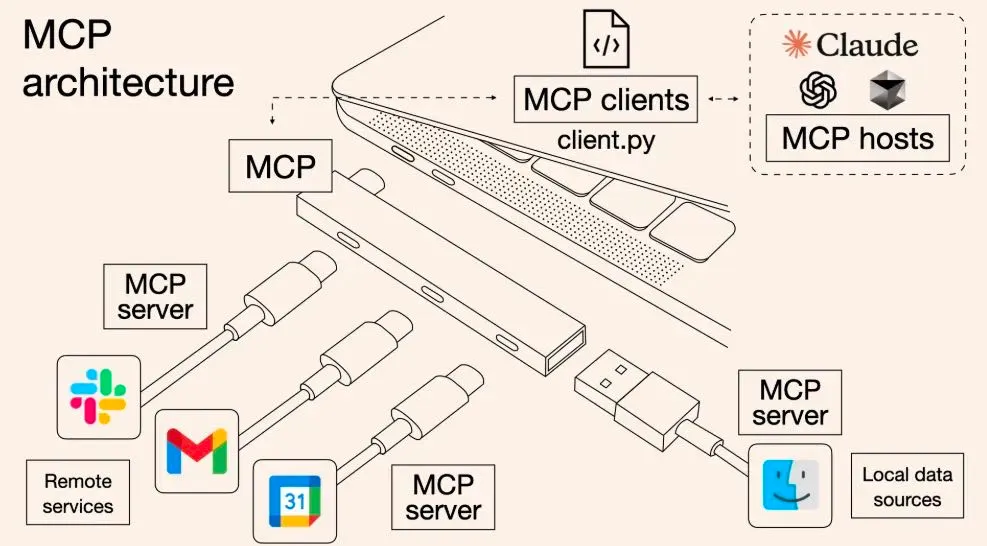

MCP: Model Context Protocol is an open protocol that standardizes how applications provide context for LLMs. You can think of MCP as the USB-C port for AI applications. Just as USB-C provides a standard way for your devices to connect to various peripherals and accessories, MCP provides a standard way for your AI models to connect to different data sources and tools.

Agent TARS: An open-source multimodal AI agent that provides seamless integration with various real-world tools.

RESTful API: REST is a software architectural style rather than a standard, i.e., a set of design principles and constraints. It is used primarily for client-server interaction.

Background

AI has evolved from the initial chatbots that could only converse, to copilots that assist human decision-making, and now to agents that can autonomously perceive and act. This requires AI to have richer task context (Context) and a toolset (Tools) necessary for executing actions.

Pain Points

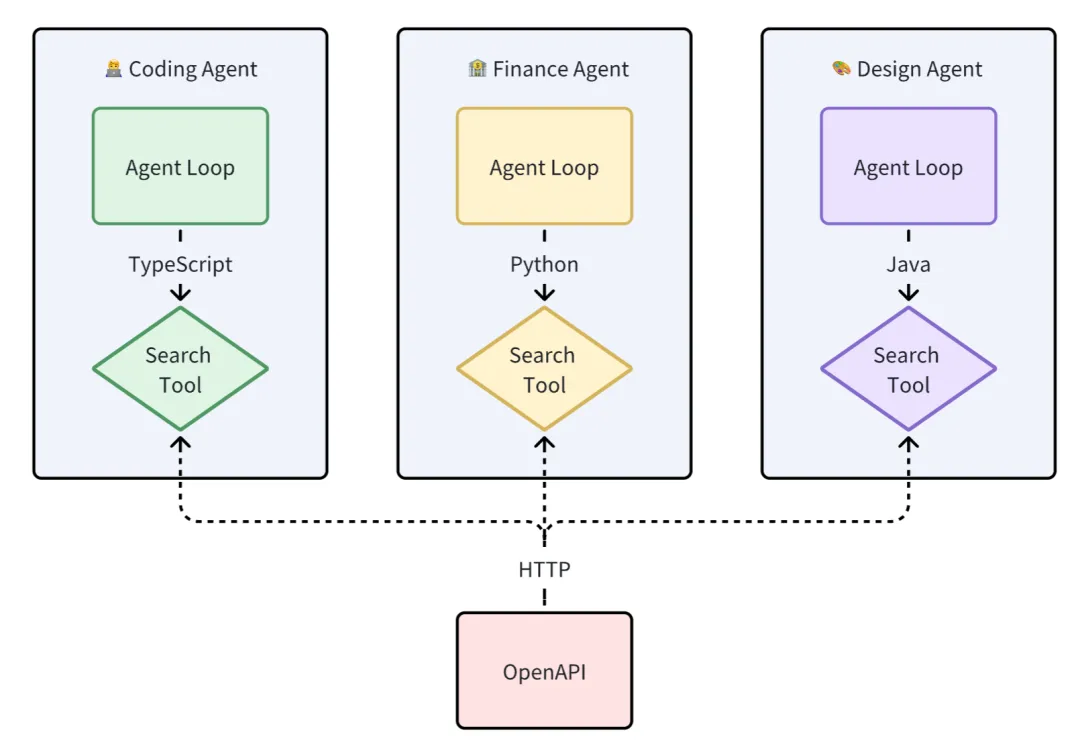

The lack of standardized context and toolsets leads to three major pain points for developers:

- High coupling in development: Tool developers need an in-depth understanding of the Agent's internal implementation and must write tool code in the Agent layer, which makes tools hard to develop and debug.

- Poor tool reusability: Because each tool implementation is embedded in the Agent application code, the input and output parameters exposed to the LLM differ from Agent to Agent, even when an API adaptation layer is added. Tools also cannot be reused across programming languages.

- Fragmented ecosystem: Tool providers can only offer OpenAPI specifications, and without a common standard, tools built for different Agent ecosystems are incompatible.

Goals

“All problems in computer science can be solved by another level of indirection.” (Butler Lampson)

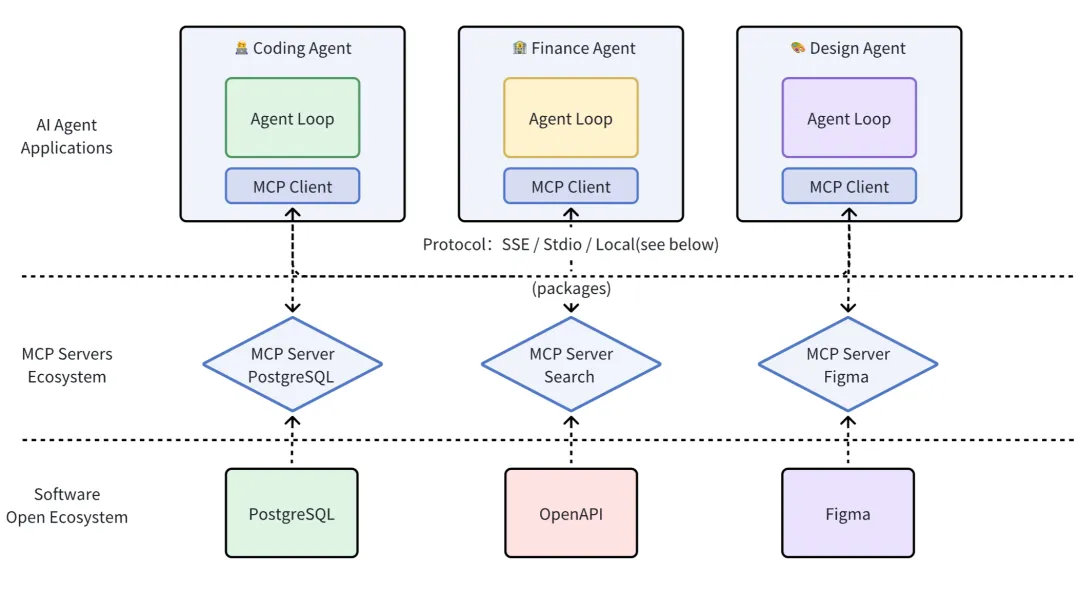

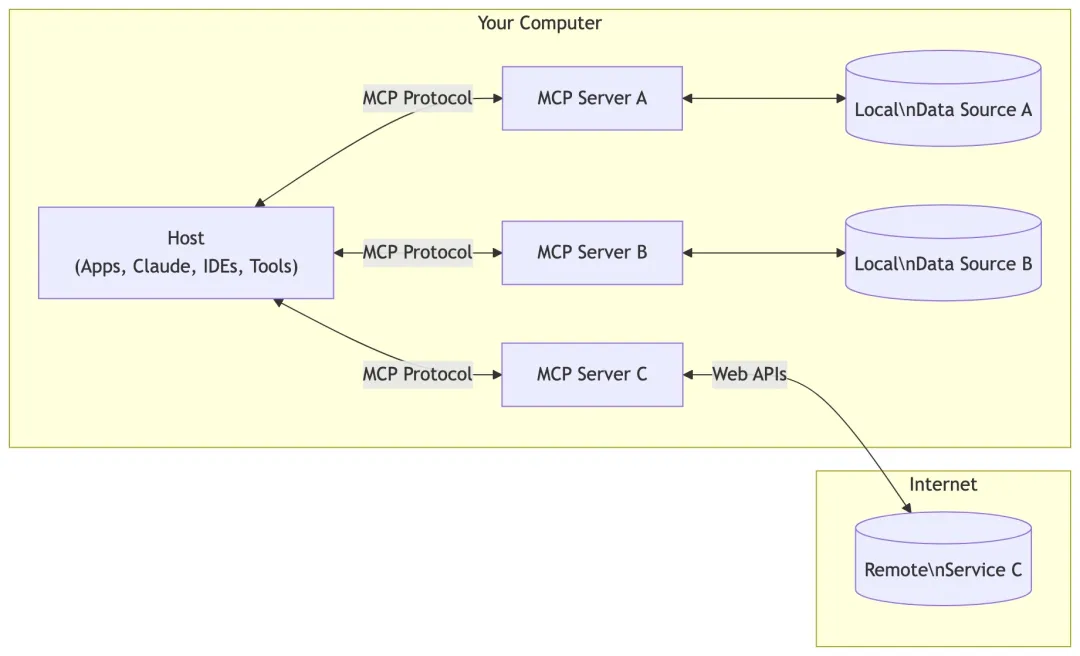

Decouple tools from the Agent layer into a separate MCP Server layer, and standardize how tools are developed and invoked. The MCP Server provides the upper-layer Agent with tool context and a standardized way to invoke tools.

Demonstration

Let’s look at a few examples of how MCP plays a role in AI Agent applications.

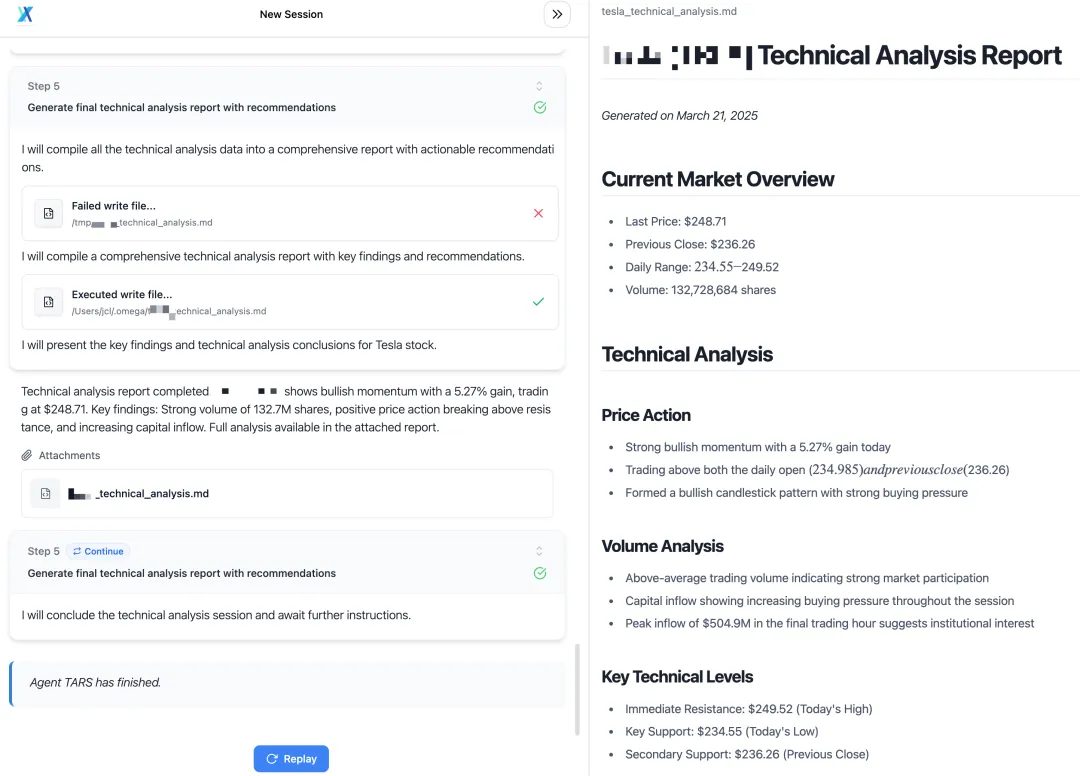

Example 1 (not investment advice; orders were placed with a simulated brokerage account)

- Instruction: Analyze the stock from a technical perspective, then buy 3 shares at market price


- Used MCP Servers:

  - Brokerage MCP: https://github.com/longportapp/openapi/tree/main/mcp

  - File System MCP: https://github.com/modelcontextprotocol/servers/tree/main/src/filesystem

Example 2

- Instruction: What are the CPU, memory, and network speeds of my machine?


- Used MCP Servers:

  - Command Line MCP: https://github.com/g0t4/mcp-server-commands

  - Code Execution MCP: https://github.com/formulahendry/mcp-server-code-runner

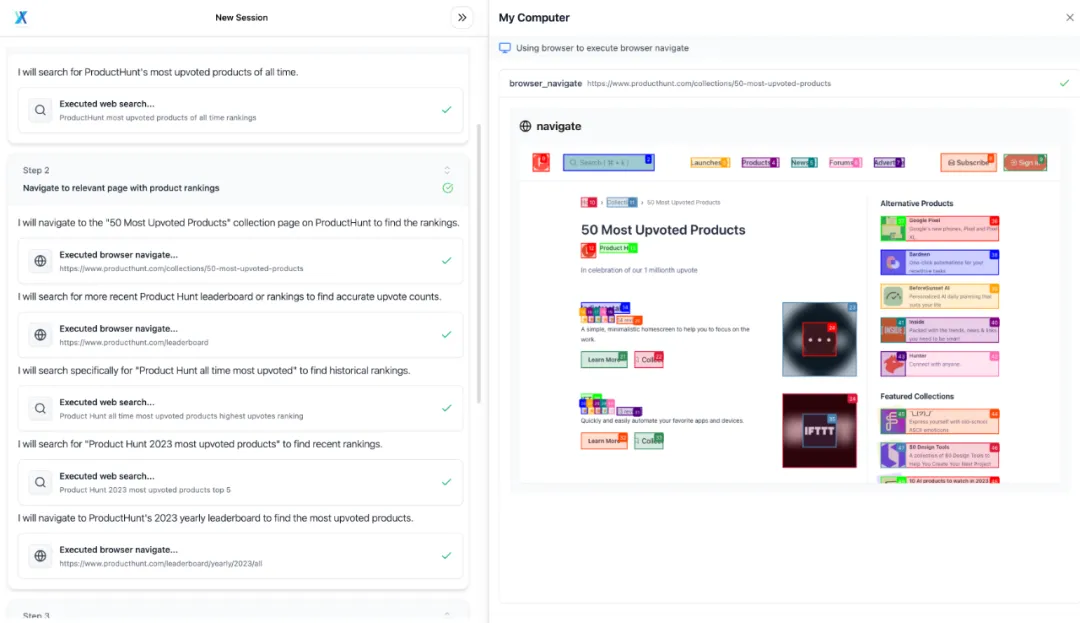

Example 3

- Instruction: Find the top 5 products with the most likes on ProductHunt


- Used MCP Servers:

  - Browser Operation MCP: https://github.com/bytedance/UI-TARS-desktop/tree/fb2932afbdd54da757b9fae61e888fc8804e648f/packages/agent-infra/mcp-servers/browser

Example 4

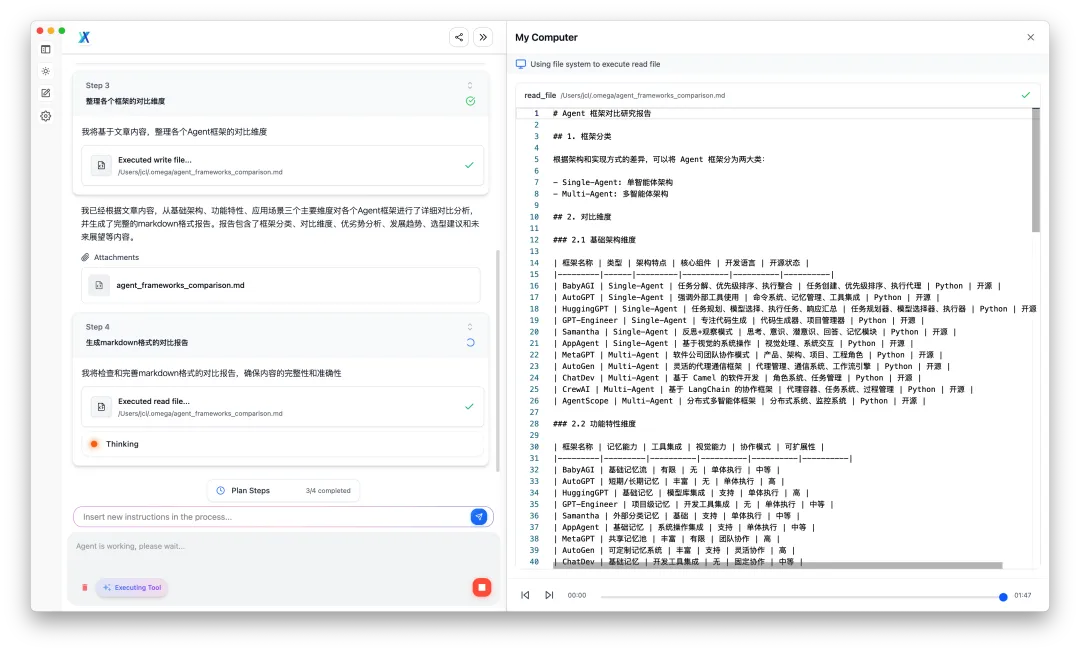

- Instruction: Based on this article, research the comparative dimensions of various Agent frameworks and generate a report in markdown


- Used MCP Servers: Link Reader

Custom MCP Servers are now supported (PR #415: https://github.com/bytedance/UI-TARS-desktop/pull/415, PR #489: https://github.com/bytedance/UI-TARS-desktop/pull/489), so users can add Stdio, Streamable HTTP, and SSE MCP Servers.

More examples: https://agent-tars.com/showcase

Introduction

What is MCP?

Model Context Protocol is a standard protocol launched by Anthropic for communication between LLM applications and external data sources (Resources) or tools (Tools), following the basic message format of JSON-RPC 2.0.

You can think of MCP as the USB-C interface for AI applications, standardizing how applications provide context for LLMs.
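For a concrete picture of the JSON-RPC 2.0 framing, below is a minimal sketch of one `tools/call` exchange. The `tools/call` method and the `params` / `result` shapes follow the MCP specification; the types are simplified stand-ins for the SDK's own, and the `makeToolCall` / `readToolResult` helpers are hypothetical.

```typescript
// Simplified JSON-RPC 2.0 shapes for an MCP tools/call exchange (not the SDK's types)
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

interface McpTextContent {
  type: "text";
  text: string;
}

interface JsonRpcResponse {
  jsonrpc: "2.0";
  id: number;
  result?: { content: McpTextContent[]; isError?: boolean };
  error?: { code: number; message: string };
}

let nextRequestId = 1;

// Each request gets a fresh id so that its response can be matched to it
function makeToolCall(name: string, args: Record<string, unknown>): JsonRpcRequest {
  return {
    jsonrpc: "2.0",
    id: nextRequestId++,
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// Check that the response answers this request, then join its text content
function readToolResult(req: JsonRpcRequest, res: JsonRpcResponse): string {
  if (res.id !== req.id) {
    throw new Error(`response id ${res.id} does not match request id ${req.id}`);
  }
  if (res.error) {
    throw new Error(`JSON-RPC error ${res.error.code}: ${res.error.message}`);
  }
  return (res.result?.content ?? []).map((c) => c.text).join("\n");
}

const navigateRequest = makeToolCall("browser_navigate", { url: "https://agent-tars.com/" });
const navigateResponse: JsonRpcResponse = {
  jsonrpc: "2.0",
  id: navigateRequest.id,
  result: { content: [{ type: "text", text: "Navigated to https://agent-tars.com/" }], isError: false },
};

console.log(JSON.stringify(navigateRequest));
console.log(readToolResult(navigateRequest, navigateResponse)); // Navigated to https://agent-tars.com/
```

Matching on `id` is what lets a single connection carry several in-flight requests; notifications, by contrast, omit the `id` entirely.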

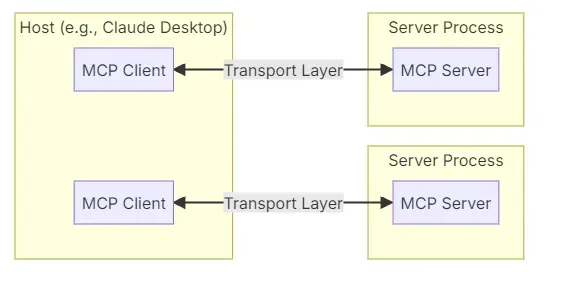

The architecture diagram is as follows:

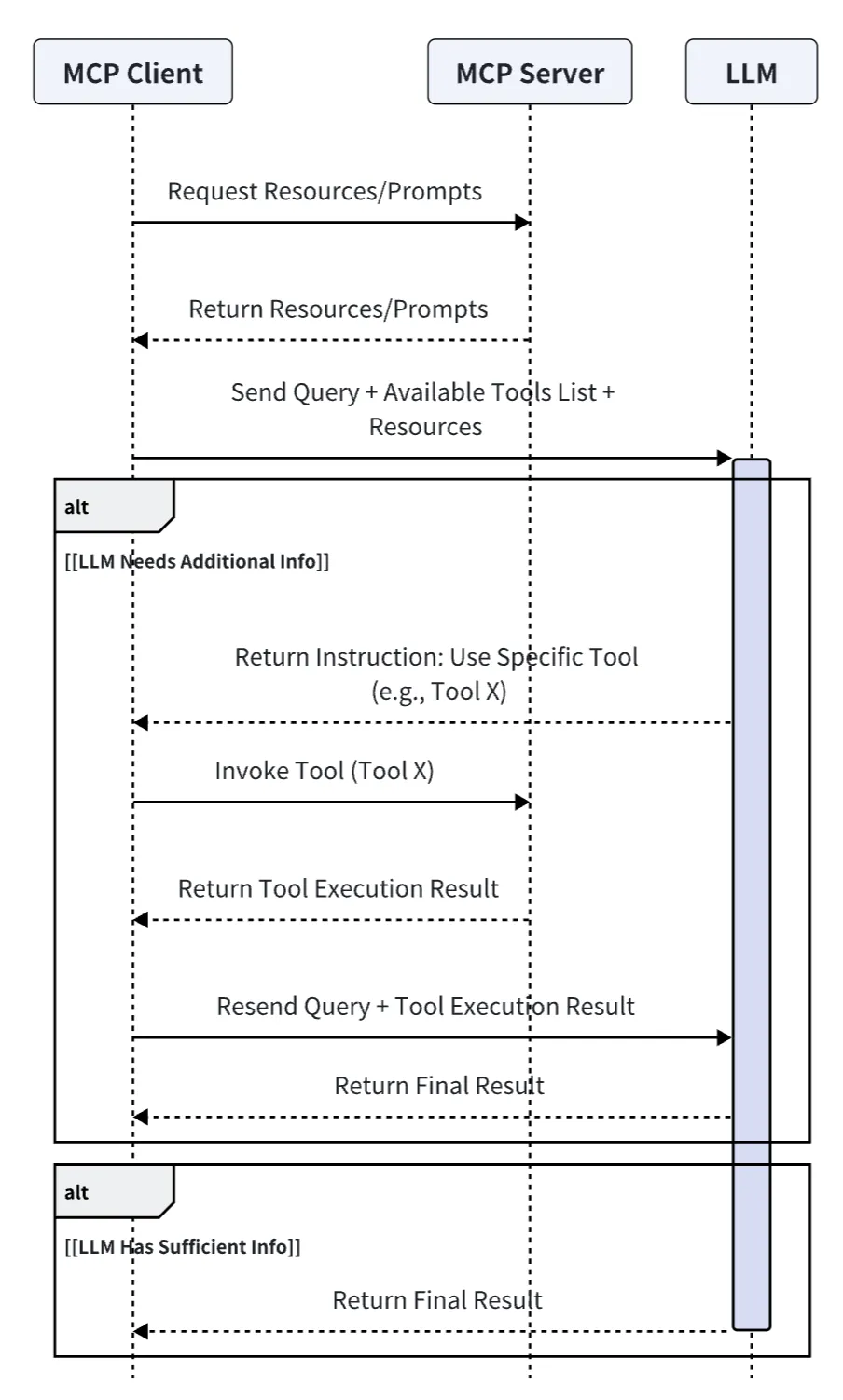

- MCP Client: Communicates with Servers via the MCP protocol, maintaining a 1:1 connection with each Server.

- MCP Servers: Context providers that expose external data sources (Resources), tools (Tools), prompts (Prompts), etc., called by the Client.

- Language support: SDKs are available for TypeScript, Python, Java, Kotlin, and C#.

Flowchart

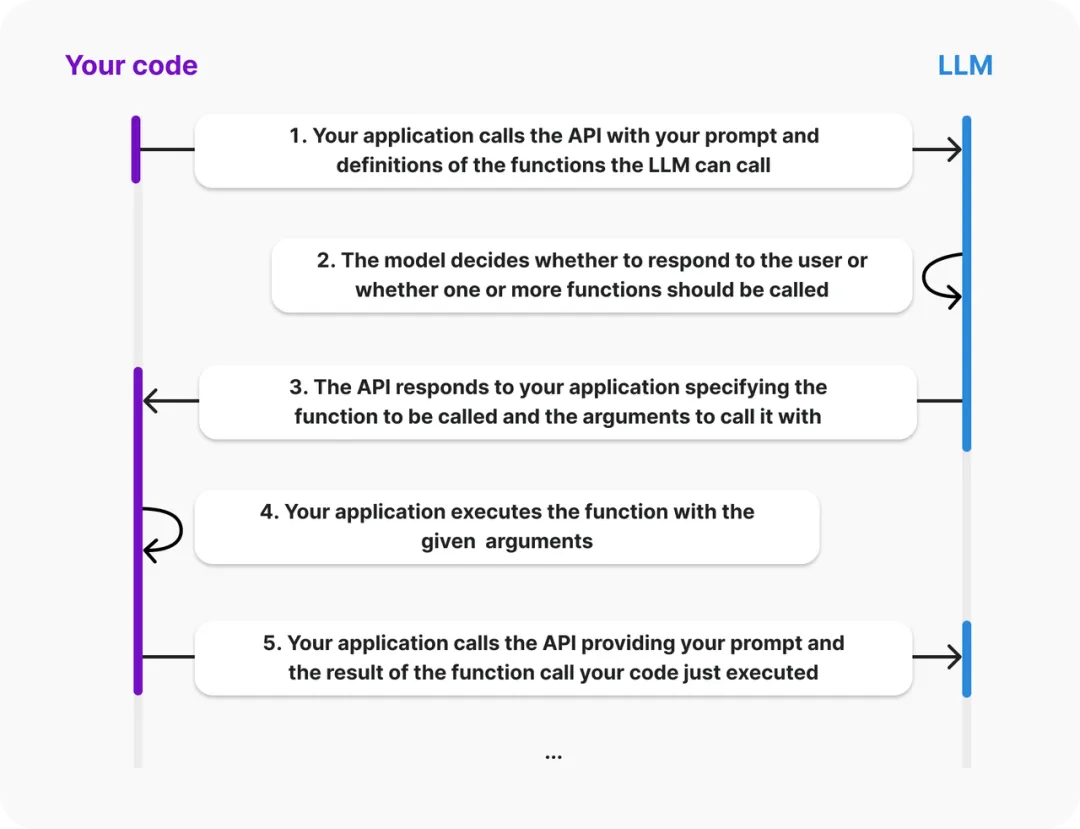

In short, MCP provides the context an LLM needs: Resources, Prompts, and Tools.
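Each kind of context is reached through its own JSON-RPC methods in the MCP specification: `resources/list` and `resources/read`, `prompts/list` and `prompts/get`, `tools/list` and `tools/call`. The sketch below models trimmed-down descriptors for the three primitives and maps a method name back to the primitive it serves; the field sets are simplified, and `primitiveOf` and the example values are hypothetical.

```typescript
// Simplified descriptors for the three MCP primitives (the spec defines more optional fields)
interface ResourceDescriptor {
  uri: string;
  name: string;
  mimeType?: string;
}

interface PromptDescriptor {
  name: string;
  description?: string;
  arguments?: { name: string; required?: boolean }[];
}

interface ToolDescriptor {
  name: string;
  description?: string;
  inputSchema: { type: "object"; properties?: Record<string, unknown> };
}

type Primitive = "resources" | "prompts" | "tools";

// JSON-RPC methods the MCP spec defines for discovering and using each primitive
const methodsByPrimitive: Record<Primitive, string[]> = {
  resources: ["resources/list", "resources/read"],
  prompts: ["prompts/list", "prompts/get"],
  tools: ["tools/list", "tools/call"],
};

// Map a request method to its primitive; undefined for lifecycle methods such as "initialize"
function primitiveOf(method: string): Primitive | undefined {
  const primitives: Primitive[] = ["resources", "prompts", "tools"];
  for (const p of primitives) {
    if (methodsByPrimitive[p].indexOf(method) !== -1) return p;
  }
  return undefined;
}

// Example descriptors (hypothetical values)
const exampleResource: ResourceDescriptor = { uri: "file:///tmp/report.md", name: "report", mimeType: "text/markdown" };
const examplePrompt: PromptDescriptor = { name: "summarize_page", arguments: [{ name: "url", required: true }] };
const exampleTool: ToolDescriptor = {
  name: "browser_navigate",
  description: "Navigate to a URL",
  inputSchema: { type: "object", properties: { url: { type: "string" } } },
};

console.log(primitiveOf("prompts/get")); // prompts
console.log(primitiveOf("initialize")); // undefined
```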

What is the difference between MCP and Function Call?

|  | MCP | Function Call |
| --- | --- | --- |
| Definition | A standard interface for integrating models with other devices, including Resources, Prompts, and Tools | Connects the model to external data and systems, listing tools in a flat manner. Unlike Function Call, MCP Tools have a defined input/output protocol specification. |
| Protocol | JSON-RPC, supporting bidirectional communication (though rarely used so far), discoverability, and update notifications | JSON Schema, static function calls |
| Invocation method | Stdio / SSE / Streamable HTTP / same-process call (see below) | Same-process call to the corresponding function in the programming language |
| Applicable scenarios | Dynamic, complex interaction scenarios | A single specific tool; static function execution |
| System integration difficulty | High | Low |
| Degree of engineering | High | Low |
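The update-notification capability is what makes an MCP tool list dynamic rather than a static Function Call list: per the MCP specification, a server can send a `notifications/tools/list_changed` notification (a JSON-RPC message without an `id`), after which the client can re-fetch `tools/list`. A minimal sketch of a client-side cache reacting to it, assuming a synchronous `fetchTools` callback in place of the real round trip (`ToolListCache` is a hypothetical name):

```typescript
interface ToolInfo {
  name: string;
  description?: string;
}

// A JSON-RPC notification: it has a method but no id, and expects no response
interface JsonRpcNotification {
  jsonrpc: "2.0";
  method: string;
  params?: Record<string, unknown>;
}

// Caches a server's tool list and invalidates it when the server reports a change
class ToolListCache {
  private tools: ToolInfo[] | null = null;
  private readonly fetchTools: () => ToolInfo[];
  fetchCount = 0;

  // fetchTools stands in for a tools/list round trip (hypothetical; synchronous for brevity)
  constructor(fetchTools: () => ToolInfo[]) {
    this.fetchTools = fetchTools;
  }

  list(): ToolInfo[] {
    if (this.tools === null) {
      this.fetchCount++;
      this.tools = this.fetchTools();
    }
    return this.tools;
  }

  handleNotification(msg: JsonRpcNotification): void {
    if (msg.method === "notifications/tools/list_changed") {
      this.tools = null; // the next list() call fetches the new list
    }
  }
}

// Simulated server whose tool set grows at runtime
const serverTools: ToolInfo[] = [{ name: "browser_navigate" }];
const toolCache = new ToolListCache(() => serverTools.slice());

console.log(toolCache.list().length); // 1
serverTools.push({ name: "browser_scroll" });
console.log(toolCache.list().length); // 1, still the cached list
toolCache.handleNotification({ jsonrpc: "2.0", method: "notifications/tools/list_changed" });
console.log(toolCache.list().length); // 2
```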

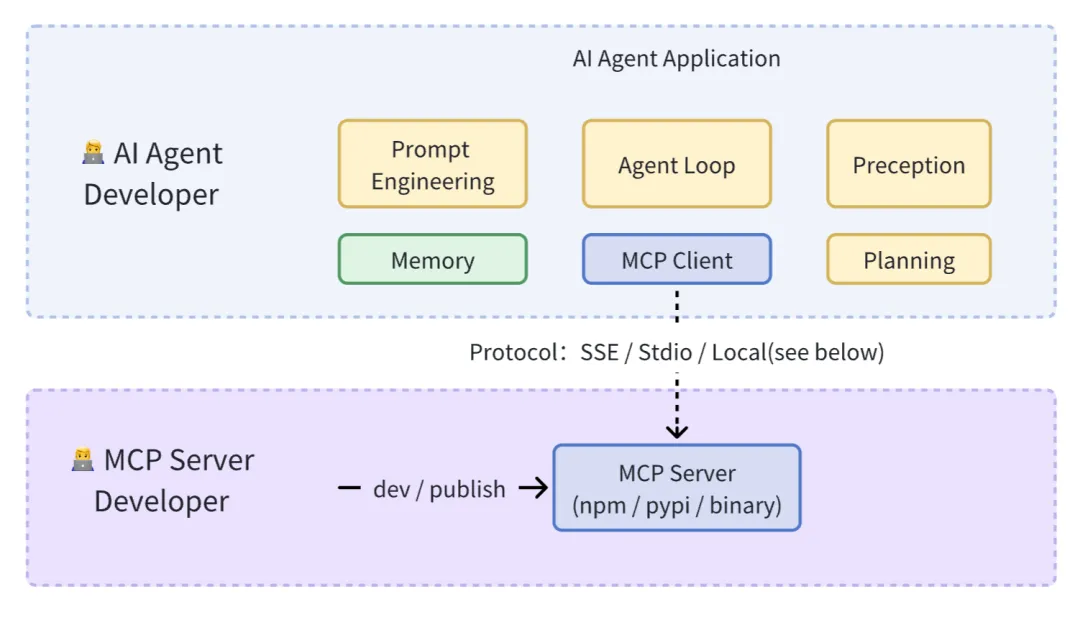

Looking at MCP from the perspective of front-end and back-end separation

In the early days of web development, when JSP and PHP were prevalent, front-end interactive pages were coupled with back-end logic, leading to high development complexity, difficult code maintenance, and inconvenient front-end and back-end collaboration, making it hard to meet the higher demands of modern web applications for user experience and performance.

Just as AJAX, Node.js, and RESTful APIs drove the separation of front end and back end, MCP is bringing “tool layering” to AI development:

- Front-end/back-end separation: the front end focuses on the user interface, the back end on the API;

- MCP layering: tool developers and Agent developers each focus on their own role, and tools can improve in quality and functionality without Agent developers needing to track the changes. This layering lets AI Agent developers combine tools like building blocks to quickly construct complex AI applications.
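To make the “building blocks” idea concrete, here is a sketch of an Agent composing several tool providers behind one interface, prefixing each tool with its server name so that two servers can expose tools with the same name. The `ToolProvider` interface and the `server__tool` naming scheme are assumptions for illustration, not part of MCP.

```typescript
interface ToolResult {
  content: { type: "text"; text: string }[];
  isError?: boolean;
}

// The only surface the Agent relies on (hypothetical, synchronous for brevity)
interface ToolProvider {
  listTools(): string[];
  callTool(name: string, args: Record<string, unknown>): ToolResult;
}

const SEP = "__";

// Combine providers into one, exposing tools as `<server>__<tool>`.
// Server names must not contain the separator; tool names may.
function composeProviders(providers: Record<string, ToolProvider>): ToolProvider {
  return {
    listTools: () => {
      const all: string[] = [];
      for (const server of Object.keys(providers)) {
        for (const tool of providers[server].listTools()) all.push(server + SEP + tool);
      }
      return all;
    },
    callTool: (qualifiedName, args) => {
      const i = qualifiedName.indexOf(SEP);
      const server = qualifiedName.slice(0, i);
      // Own-property check so names like "constructor" cannot resolve to prototype members
      if (i <= 0 || !Object.prototype.hasOwnProperty.call(providers, server)) {
        return { content: [{ type: "text", text: `Unknown tool: ${qualifiedName}` }], isError: true };
      }
      return providers[server].callTool(qualifiedName.slice(i + SEP.length), args);
    },
  };
}

// Two toy providers standing in for independently developed MCP Servers
const echoProvider: ToolProvider = {
  listTools: () => ["say"],
  callTool: (_name, args) => ({ content: [{ type: "text", text: String(args.text) }] }),
};
const mathProvider: ToolProvider = {
  listTools: () => ["add"],
  callTool: (_name, args) => ({ content: [{ type: "text", text: String(Number(args.a) + Number(args.b)) }] }),
};

const agentTools = composeProviders({ echo: echoProvider, math: mathProvider });
console.log(agentTools.listTools().join(", ")); // echo__say, math__add
console.log(agentTools.callTool("math__add", { a: 2, b: 3 }).content[0].text); // 5
```

Because the Agent sees only `listTools` / `callTool`, either provider can be replaced or upgraded without touching Agent code.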

Practice

Overall Design

Taking the development and integration of the MCP Browser tool (https://github.com/bytedance/UI-TARS-desktop/tree/main/packages/agent-infra/mcp-servers/browser) as an example, we walk through the implementation step by step.

When designing the Browser MCP Server, we did not adopt the official Stdio invocation method (i.e., cross-process calls launched via `npx`). The goal was to lower the barrier to entry: requiring users to install npm, Node.js, or uv on first use would hurt the Agent's out-of-the-box experience (related: issue #64, https://github.com/modelcontextprotocol/servers/issues/64).

Therefore, the design of Agent tools is divided into two categories:

- Built-in MCP Servers: fully comply with the MCP specification and support both Stdio and in-process function calls (in other words, Function Calls developed to the MCP standard).

- User-defined MCP Servers: for users who need to extend functionality. These users are assumed to already have an npm or uv environment, which allows more flexible extension methods.

The differences between the two:

- Purpose: built-in MCP Servers provide the tools essential to the Agent's current functionality, while user-defined ones extend it.

- Invocation method: built-in servers do not require Agent users to install Node.js or npm, which is friendlier for ordinary users.

MCP Server Development

`mcp-server-browser`, for example, is essentially an npm package with the following `package.json`:

```json
{
  "name": "mcp-server-browser",
  "version": "0.0.1",
  "type": "module",
  "bin": {
    "mcp-server-browser": "dist/index.cjs"
  },
  "main": "dist/server.cjs",
  "module": "dist/server.js",
  "types": "dist/server.d.ts",
  "files": [
    "dist"
  ],
  "scripts": {
    "build": "rm -rf dist && rslib build && shx chmod +x dist/*.{js,cjs}",
    "dev": "npx -y @modelcontextprotocol/inspector tsx src/index.ts"
  }
}
```

`bin` specifies the entry file for Stdio invocation, while `main` and `module` (the CommonJS and ES module builds) are the entry files for same-process Function Call invocation.

Development (dev)

In practice, using the Inspector (https://modelcontextprotocol.io/docs/tools/inspector) to develop and debug the MCP Server is quite effective, as it decouples the Agent from the tools, allowing for separate debugging and development of tools.

Running `npm run dev` starts a Playground in which the MCP Server's features (Prompts, Resources, Tools, etc.) can be debugged.

```shell
$ npx -y @modelcontextprotocol/inspector tsx src/index.ts
Starting MCP inspector...
New SSE connection
Spawned stdio transport
Connected MCP client to backing server transport
Created web app transport
Set up MCP proxy
🔍 MCP Inspector is up and running at http://localhost:5173 🚀
```

Note: when debugging the Server with the Inspector, `console.log` output is not displayed (with the Stdio transport, stdout is reserved for JSON-RPC messages), which makes debugging somewhat inconvenient.
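A common workaround, sketched here on the assumption that the server runs over the Stdio transport: write diagnostics to stderr (for example with `console.error`) so that stdout carries nothing but protocol messages. The `debugLog` / `formatLog` helpers and the log format are hypothetical.

```typescript
// With the Stdio transport, stdout carries the JSON-RPC message stream, so stray
// console.log output would be mixed into the protocol. Send diagnostics to stderr instead.
type LogLevel = "debug" | "info" | "error";

function formatLog(level: LogLevel, message: string, data?: unknown): string {
  const suffix = data === undefined ? "" : " " + JSON.stringify(data);
  return `[mcp-server-browser] ${level.toUpperCase()} ${message}${suffix}`;
}

// console.error writes to stderr in Node.js, leaving stdout to the transport
function debugLog(level: LogLevel, message: string, data?: unknown): void {
  console.error(formatLog(level, message, data));
}

debugLog("info", "navigating", { url: "https://agent-tars.com/" });
// stderr: [mcp-server-browser] INFO navigating {"url":"https://agent-tars.com/"}
```

Where the stderr output ends up depends on how the host process launches the server.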

Implementation

Entry Point

For the built-in MCP Server to be invoked in-process like a Function Call, the entry file `src/server.ts` exports three shared methods:

- `listTools`: lists all functions

- `callTool`: calls a specific function

- `close`: cleans up after the Server is no longer in use

```typescript
// src/server.ts
import type { Client } from '@modelcontextprotocol/sdk/client/index.js';

// callTool, listTools and close are implemented elsewhere in this file
export const client: Pick<Client, 'callTool' | 'listTools' | 'close'> = {
  callTool,
  listTools,
  close,
};
```

Stdio invocation is also supported: `src/index.ts` imports the same client and exposes it through a Stdio server.

```typescript
#!/usr/bin/env node
// src/index.ts
import { Server } from "@modelcontextprotocol/sdk/server/index.js";
import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";
import {
  CallToolRequestSchema,
  ListToolsRequestSchema,
} from "@modelcontextprotocol/sdk/types.js";
import { client as mcpBrowserClient } from "./server.js";

const server = new Server(
  {
    name: "example-servers/puppeteer",
    version: "0.1.0",
  },
  {
    capabilities: {
      tools: {},
    },
  }
);

// tools/list: delegate to the in-process client
server.setRequestHandler(ListToolsRequestSchema, async () =>
  mcpBrowserClient.listTools()
);

// tools/call: forward the request params (tool name and arguments)
server.setRequestHandler(CallToolRequestSchema, async (request) =>
  mcpBrowserClient.callTool(request.params)
);

async function runServer() {
  const transport = new StdioServerTransport();
  await server.connect(transport);
}

runServer().catch(console.error);

// Shut down when the parent process closes stdin
process.stdin.on("close", () => {
  console.error("Browser MCP Server closed");
  server.close();
});
```

Tool Definition

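The MCP protocol requires JSON Schema to describe tool parameters. In practice, we recommend defining each tool's input with zod (https://github.com/colinhacks/zod) and converting the Zod schema to JSON Schema when exposing it via MCP.

```typescript
import { z } from 'zod';
// zod-to-json-schema is one common choice for the Zod -> JSON Schema conversion
import { zodToJsonSchema } from 'zod-to-json-schema';

const toolsMap = {
  browser_navigate: {
    description: 'Navigate to a URL',
    inputSchema: z.object({
      url: z.string(),
    }),
    handle: async (args: { url: string }) => {
      // Implementation omitted: navigate, then collect the page's clickable elements
      const clickableElements = ['...'];
      return {
        content: [
          {
            type: 'text',
            text: `Navigated to ${args.url}\nclickable elements: ${clickableElements}`,
          },
        ],
        isError: false,
      };
    },
  },
  browser_scroll: {
    description: 'Scroll the page',
    inputSchema: z.object({
      amount: z
        .number()
        .describe('Pixels to scroll (positive for down, negative for up)'),
    }),
    handle: async (args: { amount: number }) => {
      // Implementation omitted: scroll, then measure the result (placeholders below)
      const actualScroll = args.amount;
      const isAtBottom = false;
      return {
        content: [
          {
            type: 'text',
            text: `Scrolled ${actualScroll} pixels. ${
              isAtBottom
                ? 'Reached the bottom of the page.'
                : 'Did not reach the bottom of the page.'
            }`,
          },
        ],
        isError: false,
      };
    },
  },
  // more tools...
};

type ToolName = keyof typeof toolsMap;

// Expose each Zod schema to MCP as JSON Schema
const listTools = async () => ({
  tools: Object.entries(toolsMap).map(([name, tool]) => ({
    name,
    description: tool.description,
    inputSchema: zodToJsonSchema(tool.inputSchema),
  })),
});

const callTool = async ({
  name,
  arguments: toolArgs,
}: {
  name: string;
  arguments?: Record<string, unknown>;
}) => {
  const tool = toolsMap[name as ToolName];
  if (!tool) {
    return {
      content: [{ type: 'text', text: `Unknown tool: ${name}` }],
      isError: true,
    };
  }
  // Validate the model's arguments against the tool's schema before running it
  return tool.handle(tool.inputSchema.parse(toolArgs) as never);
};
```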

Tips:

Unlike OpenAPI, which returns structured data, MCP return values are designed for LLMs. To connect models with tools effectively, the returned text and the tool's `description` should be semantically rich, which improves the model's understanding and raises the success rate of tool calls.

For example, `browser_scroll` should return the page's scroll status (such as the remaining pixels to the bottom and whether the bottom has been reached) after each execution. This way, the model can provide appropriate parameters for the next tool call.
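Following that advice, the hypothetical helper below turns raw scroll measurements into a sentence the model can act on. It assumes the tool handler can read the scroll offset, viewport height, and document height before and after scrolling (for example through Puppeteer's `page.evaluate`); the metric names and wording are illustrative, not the actual `mcp-server-browser` implementation.

```typescript
// Page measurements taken before and after scrolling (hypothetical field names)
interface ScrollMetrics {
  scrollTop: number; // e.g. window.scrollY
  viewportHeight: number; // e.g. window.innerHeight
  scrollHeight: number; // e.g. document.documentElement.scrollHeight
}

// Describe what happened and how much page is left, so the model can choose the next amount
function describeScroll(requested: number, before: ScrollMetrics, after: ScrollMetrics): string {
  const actualScroll = after.scrollTop - before.scrollTop;
  const remaining = Math.max(0, after.scrollHeight - (after.scrollTop + after.viewportHeight));
  const isAtBottom = remaining <= 1; // tolerate sub-pixel rounding
  const parts = [`Scrolled ${actualScroll} pixels (requested ${requested}).`];
  parts.push(isAtBottom ? "Reached the bottom of the page." : `${remaining} pixels remain below the viewport.`);
  if (after.scrollTop === 0) parts.push("At the top of the page.");
  return parts.join(" ");
}

const pageSize = { viewportHeight: 800, scrollHeight: 2000 };
console.log(describeScroll(500, { ...pageSize, scrollTop: 0 }, { ...pageSize, scrollTop: 500 }));
// Scrolled 500 pixels (requested 500). 700 pixels remain below the viewport.
console.log(describeScroll(1000, { ...pageSize, scrollTop: 500 }, { ...pageSize, scrollTop: 1200 }));
// Scrolled 700 pixels (requested 1000). Reached the bottom of the page.
```

Reporting both the requested and the actual distance tells the model when the page clamped its scroll, which is exactly the signal it needs to stop scrolling.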

Agent Integration

After developing the MCP Server, it needs to be integrated into the Agent application. In principle, the Agent does not need to be concerned with the specific details of the tools, input parameters, and output parameters provided by the MCP Servers.

MCP Servers Configuration

The MCP Servers configuration is divided into “Built-in Servers” and “User Extended Servers”. Built-in Servers are called through same-process Function Calls, ensuring that the Agent application is ready to use for novice users, while Extended Servers provide advanced users with extended capabilities for the Agent.

```typescript
// mcpFsClient, mcpCommandClient and mcpBrowserClient are the clients exported by the built-in servers
const mcpServers = {
  // Internal MCP Servers (in-process call)
  fileSystem: {
    name: 'fileSystem',
    localClient: mcpFsClient,
  },
  commands: {
    name: 'commands',
    localClient: mcpCommandClient,
  },
  browser: {
    name: 'browser',
    localClient: mcpBrowserClient,
  },
  // External MCP Servers (cross-process call via Stdio)
  fetch: {
    command: 'uvx',
    args: ['mcp-server-fetch'],
  },
  longbridge: {
    command: 'longport-mcp',
    args: [],
    env: {},
  },
};
```

MCP Client

The core task of the MCP Client is to unify the different ways of invoking an MCP Server (Stdio / SSE / Streamable HTTP / Function Call). The Stdio and SSE paths directly reuse the official example (https://modelcontextprotocol.io/quickstart/client); here we focus on how the Client supports Function Call invocation.

Function Call Invocation

```typescript
import type { Client } from '@modelcontextprotocol/sdk/client/index.js';

export type MCPServer<ServerNames extends string = string> = {
  name: ServerNames;
  status: 'activate' | 'error';
  description?: string;
  env?: Record<string, string>;
  /** Added: in-process call, same as a Function Call */
  localClient?: Pick<Client, 'callTool' | 'listTools' | 'close'>;
  /** Stdio server */
  command?: string;
  args?: string[];
};
```
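With this type, the client's routing decision is small: use `localClient` directly when it is present, otherwise spawn the configured Stdio `command`. The sketch below shows only that decision; `ServerConfig`, `Transport`, and `pickTransport` are hypothetical names, `localClient` is reduced to one synchronous method, and the actual `@agent-infra/mcp-client` may structure this differently.

```typescript
// Reduced stand-in for Pick<Client, 'callTool' | 'listTools' | 'close'>
interface LocalClient {
  listTools(): { tools: { name: string }[] };
}

// Subset of the MCPServer fields that decide how a server is invoked
interface ServerConfig {
  name: string;
  localClient?: LocalClient;
  command?: string;
  args?: string[];
  env?: Record<string, string>;
}

type Transport =
  | { kind: "in-process"; client: LocalClient }
  | { kind: "stdio"; command: string; args: string[]; env: Record<string, string> };

// Prefer the in-process client: no child process and no Node.js/npm/uv on the user's machine
function pickTransport(config: ServerConfig): Transport {
  if (config.localClient) {
    return { kind: "in-process", client: config.localClient };
  }
  if (config.command) {
    return { kind: "stdio", command: config.command, args: config.args ?? [], env: config.env ?? {} };
  }
  throw new Error(`MCP server "${config.name}" needs either localClient or command`);
}

const browserLocalClient: LocalClient = {
  listTools: () => ({ tools: [{ name: "browser_navigate" }] }),
};

console.log(pickTransport({ name: "browser", localClient: browserLocalClient }).kind); // in-process
console.log(pickTransport({ name: "fetch", command: "uvx", args: ["mcp-server-fetch"] }).kind); // stdio
```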

Using the MCP Client looks like this:

```typescript
import { MCPClient } from '@agent-infra/mcp-client';
import { client as mcpBrowserClient } from '@agent-infra/mcp-server-browser';

const client = new MCPClient([
  {
    name: 'browser',
    description: 'web browser tools',
    localClient: mcpBrowserClient,
  },
]);

const mcpTools = await client.listTools();

// `openai`, `model` and `messages` are assumed to be set up elsewhere
const response = await openai.chat.completions.create({
  model,
  messages,
  // Different model vendors need the tools converted to their own data format
  tools: convertToTools(mcpTools),
  tool_choice: 'auto',
});
```
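The snippet above calls `convertToTools` without defining it. Assuming `listTools()` yields MCP tool descriptors (`name`, `description`, `inputSchema`), a plausible version for OpenAI-compatible APIs wraps each one as a `function` tool and passes `inputSchema` through as `parameters`, since it is already JSON Schema. The Anthropic variant shows how the same descriptor maps to another vendor's format. Both functions are hypothetical sketches, not the `@agent-infra/mcp-client` code.

```typescript
// MCP tool descriptor from tools/list, trimmed to the fields used here
interface McpTool {
  name: string;
  description?: string;
  inputSchema: { type: "object"; properties?: Record<string, unknown>; required?: string[] };
}

// OpenAI Chat Completions `tools` entry
interface OpenAITool {
  type: "function";
  function: { name: string; description?: string; parameters: Record<string, unknown> };
}

// Anthropic Messages API `tools` entry
interface AnthropicTool {
  name: string;
  description?: string;
  input_schema: Record<string, unknown>;
}

// MCP inputSchema is already JSON Schema, so conversion is mostly renaming fields
function convertToTools(tools: McpTool[]): OpenAITool[] {
  return tools.map((tool): OpenAITool => ({
    type: "function",
    function: { name: tool.name, description: tool.description, parameters: tool.inputSchema },
  }));
}

function convertToAnthropicTools(tools: McpTool[]): AnthropicTool[] {
  return tools.map((tool): AnthropicTool => ({
    name: tool.name,
    description: tool.description,
    input_schema: tool.inputSchema,
  }));
}

const exampleTools: McpTool[] = [
  {
    name: "browser_navigate",
    description: "Navigate to a URL",
    inputSchema: { type: "object", properties: { url: { type: "string" } }, required: ["url"] },
  },
];

console.log(JSON.stringify(convertToTools(exampleTools)));
console.log(JSON.stringify(convertToAnthropicTools(exampleTools)));
```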

This completes the end-to-end MCP flow, from Server configuration and Client integration to the connection with the Agent. More details and code are open source on GitHub:

- Agent Integration: https://github.com/bytedance/UI-TARS-desktop/blob/fb2932afbdd54da757b9fae61e888fc8804e648f/apps/agent-tars/src/main/llmProvider/index.ts#L89-L91

- mcp-client: https://github.com/bytedance/UI-TARS-desktop/tree/main/packages/agent-infra/mcp-client

- mcp-servers: https://github.com/bytedance/UI-TARS-desktop/tree/main/packages/agent-infra/mcp-servers

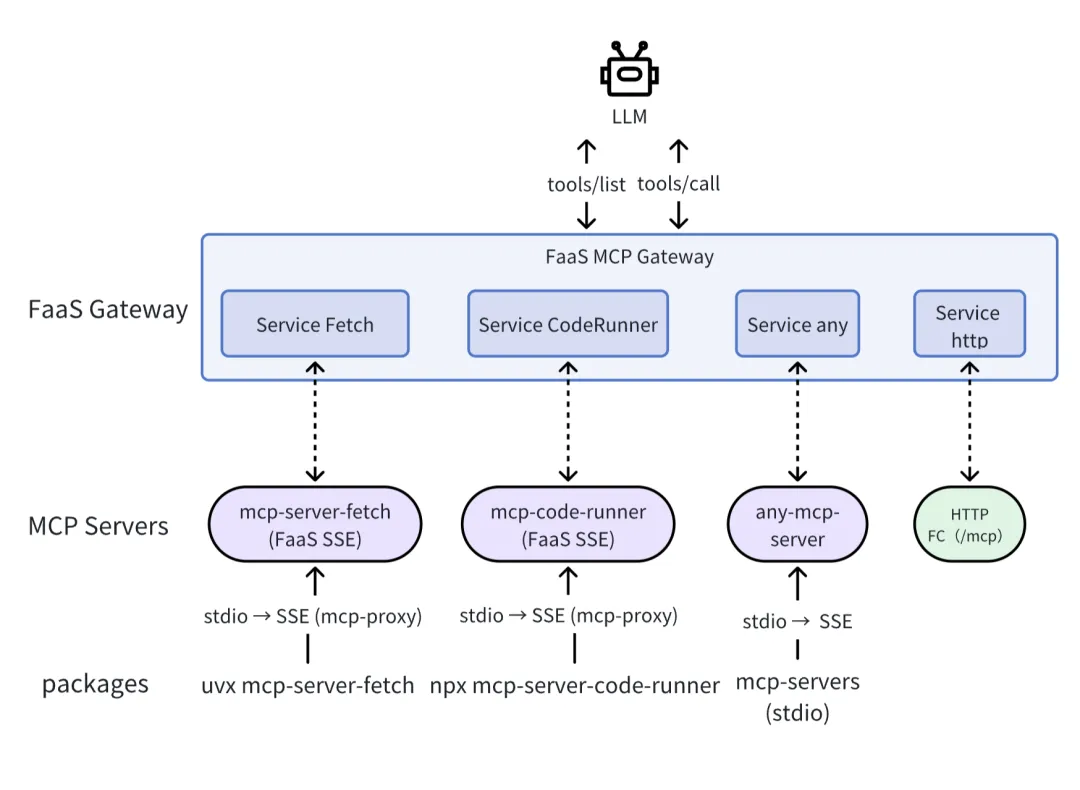

Remote Invocation

Web applications cannot launch Stdio MCP Servers. For them, FaaS can wrap a Stdio server as an SSE MCP Server, so MCP tools can still be used seamlessly through Function Call. This process can be described as “cloudifying” MCP Servers.

An example of calling such an SSE MCP Server from Python, using the OpenAI Agents SDK (`agents` package):

```python
import asyncio
import json

import openai
from agents import set_tracing_disabled
from agents.mcp import MCPServerSse, MCPUtil

set_tracing_disabled(True)

# base_url, api_version, ak, model_name and max_tokens are your own settings, defined elsewhere


async def chat():
    client = openai.AzureOpenAI(
        azure_endpoint=base_url,
        api_version=api_version,
        api_key=ak,
    )
    # Connect to the Stdio server that FaaS exposes over SSE
    async with MCPServerSse(
        name="fetch", params={"url": "https://{mcp_faas_id}.mcp.bytedance.net/sse"}
    ) as mcp_server:
        # Convert the MCP tools into function tools the model can call
        tools = await MCPUtil.get_function_tools(
            mcp_server, convert_schemas_to_strict=False
        )
        prompt = "Request the URL https://agent-tars.com/, what is its main purpose?"
        completion = client.chat.completions.create(
            model=model_name,
            messages=[{"role": "user", "content": prompt}],
            max_tokens=max_tokens,
            tools=[
                {
                    "type": "function",
                    "function": {
                        "name": tool.name,
                        "description": tool.description,
                        "parameters": tool.params_json_schema,
                    },
                }
                for tool in tools
            ],
        )
        for choice in completion.choices:
            if isinstance(choice.message.tool_calls, list):
                for tool_call in choice.message.tool_calls:
                    if tool_call.function:
                        # Forward the model's tool call to the MCP Server
                        tool_result = await mcp_server.call_tool(
                            tool_name=tool_call.function.name,
                            arguments=json.loads(
                                tool_call.function.arguments
                            ),  # or repair malformed JSON first (e.g. with a JSON-repair library)
                        )
                        print("tool_result", tool_result.content)


if __name__ == "__main__":
    asyncio.run(chat())
```
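What a Stdio-to-SSE bridge such as the FaaS setup above has to do can be reduced to reframing messages. The Stdio transport carries one JSON-RPC message per line on stdout; the HTTP+SSE transport (MCP spec revision 2024-11-05) first sends an `endpoint` event telling the client where to POST its requests, then delivers each server message as a `message` event. The sketch below covers only that reframing; process spawning, HTTP routing, and session management are omitted, and the function names and the `/messages?sessionId=abc` URL are hypothetical.

```typescript
// Stdio: one JSON-RPC message per line; a chunk may end mid-message
function splitStdioChunk(buffer: string, chunk: string): { messages: string[]; rest: string } {
  const lines = (buffer + chunk).split("\n");
  const rest = lines.pop() ?? ""; // incomplete trailing line, kept for the next chunk
  return { messages: lines.filter((line) => line.trim() !== ""), rest };
}

// SSE: the first event tells the client where to POST its JSON-RPC requests
function endpointEvent(postUrl: string): string {
  return `event: endpoint\ndata: ${postUrl}\n\n`;
}

// SSE: each server-to-client JSON-RPC message becomes a "message" event
function messageEvent(jsonLine: string): string {
  JSON.parse(jsonLine); // throw on malformed output instead of forwarding it
  return `event: message\ndata: ${jsonLine}\n\n`;
}

// Example: a Stdio server's stdout arrives in two chunks
let pendingStdout = "";
const sseFrames: string[] = [endpointEvent("/messages?sessionId=abc")];
for (const chunk of ['{"jsonrpc":"2.0","id":1,"res', 'ult":{"tools":[]}}\n']) {
  const { messages, rest } = splitStdioChunk(pendingStdout, chunk);
  pendingStdout = rest;
  for (const m of messages) sseFrames.push(messageEvent(m));
}
console.log(sseFrames.join(""));
```

The long-lived SSE stream is what ties each client to one bridge instance, which is the statefulness problem discussed below.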

Thoughts

Ecosystem

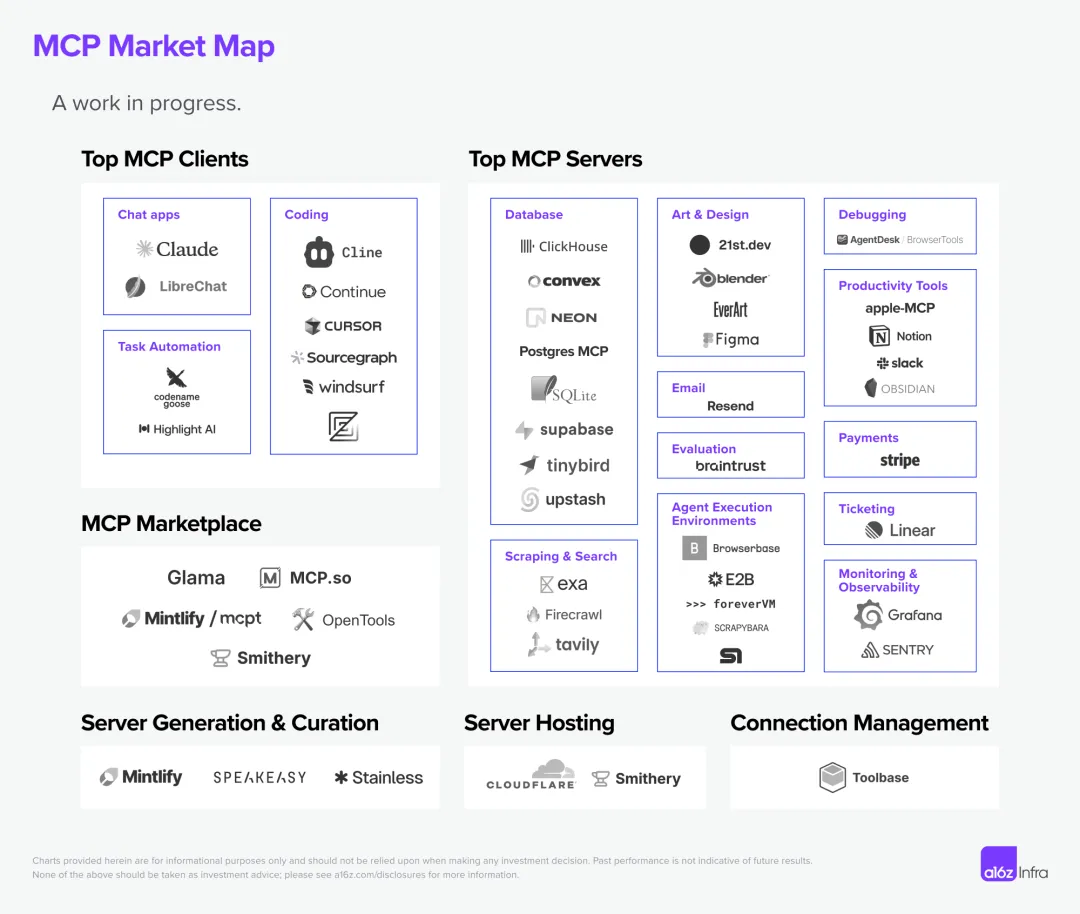

The MCP ecosystem keeps growing: more and more applications support MCP, and open platforms are providing their own MCP Servers. In addition, platforms such as Cloudflare, Composio, and Zapier host MCP over SSE (i.e., connecting to a single MCP endpoint gives access to a whole batch of MCP Servers). For Stdio, the ideal setup is for the MCP Servers and the Agent system to run in the same Docker container (similar to the Sidecar pattern).

MCP Ecosystem Diagram

For example, after integrating a map provider's MCP Server, the Agent gains local-services tools, which work far better than relying on search alone.

Future

- MCP development is still rudimentary, and there is no complete framework yet to constrain and standardize the engineering.

- According to the MCP Roadmap (https://modelcontextprotocol.io/development/roadmap), the three main priorities are:

  - Remote MCP Support: authentication, service discovery, and stateless services, clearly aiming at a K8s-style architecture for production-grade, scalable MCP services. A recent RFC replaces HTTP+SSE with a new “Streamable HTTP” transport (https://github.com/modelcontextprotocol/modelcontextprotocol/pull/206) for low-latency, bidirectional transmission.

  - Agent Support: enhancing complex Agent workflows across domains and improving human-computer interaction.

  - Developer Ecosystem: more developers and large vendors need to participate to expand the capabilities of AI Agents.

- In practice, SSE is not an ideal transport for MCP Servers because it requires maintaining connection and session state, while cloud services such as FaaS favor stateless architectures (issue #273: https://github.com/modelcontextprotocol/typescript-sdk/issues/273#issuecomment-2789489317). This is why the more cloud-friendly Streamable HTTP transport was proposed.

- MCP invocation and reinforcement learning (RL): if MCP becomes the standard, accurately invoking a wide range of MCP Servers will become a key capability that models need to learn through RL. Unlike a static Function Call setup, MCP is a dynamic tool library, so models must be able to generalize to newly added MCP Servers.

- Agent K8s: although LLMs and context now share a standardized communication protocol, there is not yet a unified standard for Agent-to-Agent interaction, and production-level issues such as Agent service discovery, recovery, and monitoring remain unsolved. Google's A2A (Agent2Agent) and the community's ANP (Agent Network Protocol) are early explorations in this area.
