Written by: Zeping Macro Team

Artificial intelligence (AI) has steadily expanded its capabilities and range of applications, from perceptual AI, which excels at image and speech recognition, to generative AI, which can now produce text, images, and other types of content. Large language models such as DeepSeek and ChatGPT have profoundly changed how people acquire information: instead of searching for answers, people increasingly ask large models for them.

However, although large language models have a smart “brain” and are adept at providing information and suggestions, they lack the ability to act and cannot put ideas into practice. Agents are designed to break this limitation. According to Anthropic, an Agent is a system in which a large language model dynamically directs its own use of tools and decides for itself how to accomplish a task. In other words, Agents let large models use tools and execute tasks autonomously, marking a transition from “Conversational AI” to “Working AI”.

Agents are still in their infancy, and the envisioned functionality remains some way off. Yet since the launch of Manus in March, attention on Agents has surged. How should we understand this new concept, and why has it become the new wave of AI? What Agent products are available today, and what can they do? What is MCP, the protocol whose popularity has risen alongside Agents? And how will Agents reshape the software ecosystem?

Main Text

1. Core Concept of Agents: Empowering Large Models with Tool Usage to Enhance Productivity

The word “Agent” literally means a “proxy” or representative, and OpenAI describes Agents as systems that independently accomplish tasks on behalf of users. If a large model on its own is a knowledge base, an Agent is more like an actor: with the user’s authorization, it orchestrates workflows and calls various tools with a high degree of independence, ultimately delivering complex tasks.

Calling external tools is the most significant difference between Agents and today’s chat-style large models such as DeepSeek and ChatGPT. In the generative AI era, large models answer user questions primarily from the knowledge they absorbed during training.

With the advent of the Agent era, large models will no longer be limited to their internal data but will gain the ability to call external tools, becoming more practical.

Agents do not exist independently of large models. Rather, they are a step forward for large models, fundamentally enabling them to use tools and become more productive. This is clearly reflected in the definition from Anthropic (the developer of Claude): Agents are “systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks.” Former OpenAI executive Lilian Weng further described the technical framework of Agents: an Agent is an autonomous system driven by a large model, with the model serving as its “brain” and supported by three key components: planning, memory, and tool usage.

Planning: Breaking the overall task into sub-tasks, for example through chain-of-thought reasoning;

Memory: Short-term and long-term memory that let the Agent reflect on past steps and correct its workflow;

Tool Usage: The large model calls external tools to complete tasks, such as web search, calculators, code interpreters, and weather, map, or ticket-booking services.
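To make the three components concrete, here is a minimal Python sketch of an agent loop. It assumes a hypothetical rule-based `plan` function standing in for a real large model and two made-up tools (`calculator`, `weather`); none of these names come from Weng’s framework or any Agent product. Planning turns a task into (tool, argument) steps, tool usage dispatches each step through a registry, and memory keeps a per-task log plus a long-term record of outcomes.

```python
"""Toy agent loop illustrating planning, memory, and tool usage.

The planner is a hypothetical rule-based stand-in for a large language
model; a real Agent would ask an LLM to decompose the task and pick tools.
"""
from dataclasses import dataclass, field
from typing import Callable

# Tool usage: a registry mapping tool names to plain functions.
# Both tools are hypothetical placeholders for real services.
TOOLS: dict[str, Callable[[str], str]] = {
    "calculator": lambda expr: str(sum(int(x) for x in expr.split("+"))),
    "weather": lambda city: f"Sunny in {city}",  # fake data for illustration
}


@dataclass
class Memory:
    short_term: list[str] = field(default_factory=list)      # log of the current task
    long_term: dict[str, str] = field(default_factory=dict)  # outcomes kept across tasks


def plan(task: str) -> list[tuple[str, str]]:
    """Planning: split a task into (tool, argument) sub-tasks.

    Hypothetical convention: sub-tasks are separated by ';' and written as
    'tool: argument'. An LLM would perform this decomposition in practice.
    """
    steps = []
    for part in task.split(";"):
        tool, _, arg = part.strip().partition(":")
        steps.append((tool.strip(), arg.strip()))
    return steps


def run_agent(task: str, memory: Memory) -> list[str]:
    """Execute each planned step, logging every step to short-term memory."""
    results = []
    for tool, arg in plan(task):
        if tool not in TOOLS:
            # Record the failure so a later planning pass could take it into account.
            memory.short_term.append(f"unknown tool {tool!r}, skipped")
            continue
        output = TOOLS[tool](arg)
        memory.short_term.append(f"{tool}({arg}) -> {output}")
        results.append(output)
    memory.long_term[task] = "; ".join(results)  # remember the overall outcome
    return results


if __name__ == "__main__":
    mem = Memory()
    print(run_agent("weather: Beijing; calculator: 2+3; stocks: AAPL", mem))
    # prints ['Sunny in Beijing', '5']
    print(mem.short_term[-1])
    # prints unknown tool 'stocks', skipped
```

In a real Agent the model would replan after each tool result, reading its short-term memory to choose the next step; the fixed plan here simply keeps the sketch deterministic.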

Agents signify the evolution of AI large models from mere “Conversational AI” to “Working AI”.

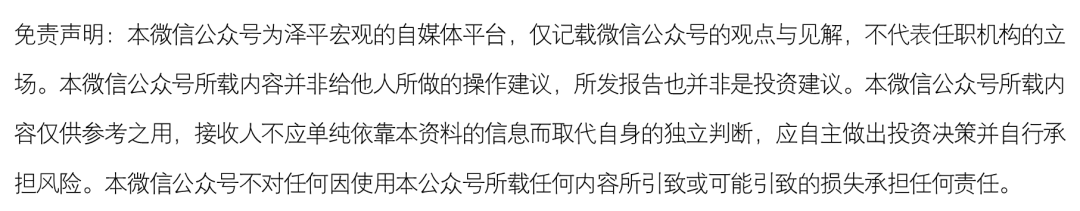

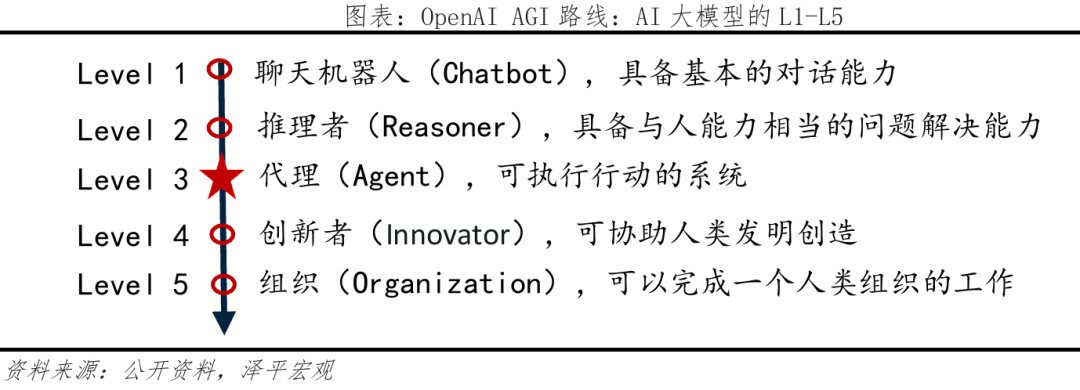

In the five-level AGI roadmap that OpenAI outlined internally in 2024 (chatbots, reasoners, agents, innovators, organizations), Agents sit at Level 3.

2. Agents: The New Wave of AI Development and, Alongside Embodied AI, a Major Trend for the Future

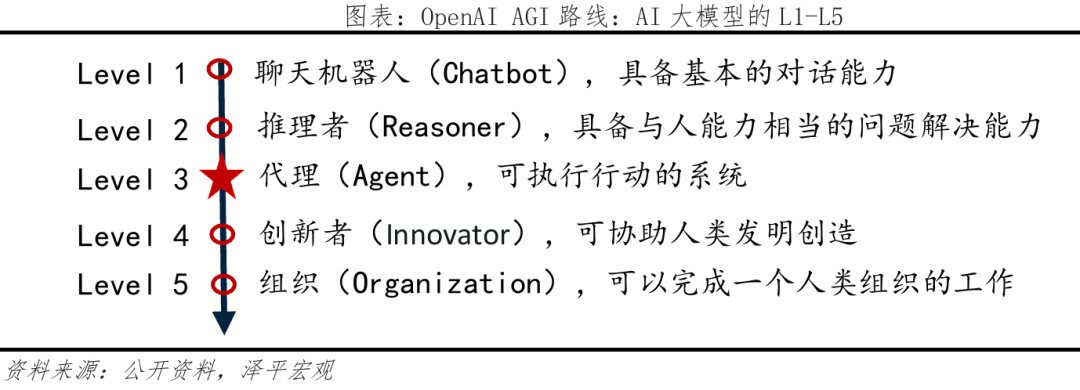

At GTC 2025, Jensen Huang laid out four stages that AI development has been moving through since the deep learning revolution began in 2012: Perceptual AI, Generative AI, Agent-based AI (i.e., Agents), and Embodied AI.

First comes Perceptual AI, the earliest stage, which enables machines to “see” and “hear” through computer vision and speech recognition, powering applications such as facial recognition and voice assistants.

Next is Generative AI, which has developed rapidly over the past three years and focuses on content generation: text, images, video, and more. Text generation is the most popular and widely used form, represented by large models such as DeepSeek and ChatGPT, which have significantly disrupted traditional search engines as people grow used to acquiring knowledge by conversing with large models.

Agent-based AI (i.e., Agents) and Embodied AI are seen as the next directions of development. Artificial intelligence must move from “knowing” to “doing”, that is, it must gain the ability to act, and this is what Agents and Embodied AI have in common. Embodied AI focuses on the physical world: it embeds AI in physical entities such as new energy vehicles and humanoid robots, allowing AI to perceive, understand, and act in the physical world. Agents, by contrast, focus on the digital world: they give AI the ability to call software tools so that it can execute tasks on computers.

3. Current State of Agents: Manus Popularizes “General Agents” as Major Companies Race to Stake Their Claims

The shift from “Conversational AI” to “Working AI” is an inevitable trend. It first took hold in specialized fields in the form of specialized Agents, with programming Agents such as Devin, Cursor, and Windsurf as the most typical examples. The launch of Manus in March then made general Agents popular.

On March 6, 2025, Manus, an Agent product developed by the Chinese team behind the AI assistant Monica, was officially released and billed as “the world’s first general AI agent”. Manus is positioned as a general Agent: unlike specialized Agents, it can decompose and execute many kinds of complex tasks without being limited to specific fields or task types. The official website showcased dozens of use cases, including travel planning, stock analysis, and PPT creation.

Manus is currently expensive, and its overseas rollout is moving faster than its rollout in China. The basic plan costs $55/month and the upgraded plan $279/month, more than the $200/month ChatGPT Pro plan through which OpenAI offers its Operator agent. On March 28, the Manus AI mobile app launched on the US Apple App Store. No product has launched in China yet, but in March Manus announced a strategic partnership with Alibaba’s Tongyi Qianwen (Qwen) team to jointly develop a Chinese version.

The Manus team has said, “The product is very simple, with no secrets,” which is part of why Manus proved controversial. Although the media widely hailed it as another “DeepSeek moment”, many argue that the two cannot be equated. DeepSeek represents genuine innovation and the rise of domestic large models, whereas Manus is seen as merely a “wrapper” without original technical breakthroughs: for example, rather than developing its own foundation model, it integrates Anthropic’s Claude 3.5 model. After Manus appeared, many teams quickly replicated similar products, such as OpenManus. Still, Manus undeniably brought unprecedented attention to general Agents and has become a significant force pushing the entire AI industry toward “Working AI”.

Riding this momentum, major Chinese tech companies such as ByteDance and Baidu are accelerating their push into general Agents to secure early positions.

On April 18, ByteDance’s web-based Agent product “Kouzi Space” (Coze Space) entered internal testing, positioned as “the best place for users to collaborate with AI Agents”. It is built on ByteDance’s self-developed Doubao large model and integrates a range of callable tools, including Amap and Feishu Docs, to strengthen its ability to actually deliver results. The official website showcases many user-shared task replays, including webpage creation, travel planning annotated on Amap, song writing, and research report writing.

On April 25, Baidu officially released its mobile general Agent product, the “Xinxiang App”, now fully available on Android. It adopts an “Agent Use” approach: it can automatically dispatch both Baidu’s own and third-party sub-agents, as well as various internal and external AI tools, applications, and service interfaces, improving task completion rates and how well each task is matched to the right agent. It currently covers ten major task scenarios: routine tasks, city tourism, AI matchmaking, AI picture books, casual games, in-depth research, legal consultation, health consultation, smart charts, and exam question explanations. The Xinxiang App is easy to use, and any Android user can download it from a mobile app store to try it.

4. Building the Agent Ecosystem: MCP and A2A Protocols Will Give Future Large Models Strong Tool-Calling Capabilities

4.1 MCP Protocol (Model Context Protocol): The “Type-C Interface” Between Large Models and External Tools

The core of an Agent is enabling a large model to call tools. Agent performance will therefore depend on two factors: first, progress in the reasoning and decision-making capabilities of large models; second, how easily large models can access and call tools.

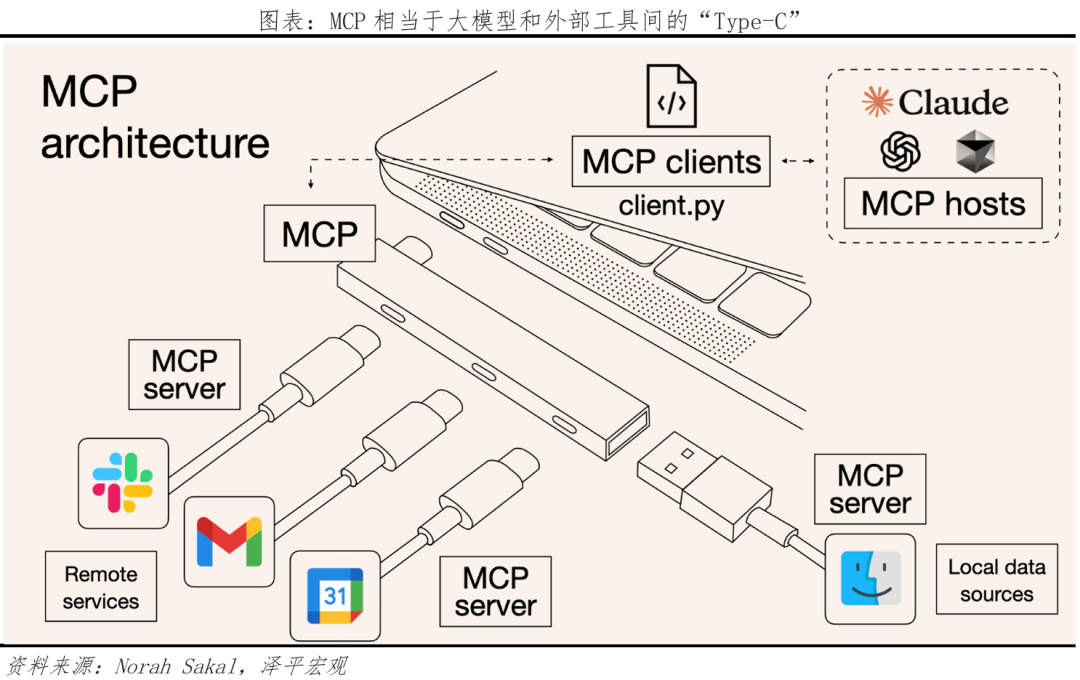

On the second point, Anthropic (the developer of Claude) proposed the MCP protocol in November 2024 to establish a unified connection standard between large models and external tools. MCP greatly lowers the difficulty of connecting large models to external tools: developers no longer need to write a custom interface for each tool, and “large model + external tools” begins to enter a “plug-and-play” era.
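Under the hood, MCP messages are JSON-RPC 2.0 requests and responses, and a server exposes its tools through methods such as `tools/list` (discover tools and their input schemas) and `tools/call` (invoke one tool). The following is a simplified, in-process Python illustration of that exchange, assuming a hypothetical `get_weather` tool with fake data. It omits the transport, the `initialize` handshake, and everything else an official MCP SDK would handle, so treat it as a picture of the message shapes rather than a conforming server.

```python
"""Simplified in-process sketch of an MCP-style tool server.

Handles only the "tools/list" and "tools/call" JSON-RPC 2.0 methods.
Transport, the initialize handshake, and SDK features are omitted.
The get_weather tool and its output are hypothetical.
"""
import json

TOOLS = {
    "get_weather": {
        "description": "Return a (fake) forecast for a city.",
        # JSON Schema describing the tool's arguments.
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
        "handler": lambda args: f"Sunny in {args['city']}",
    }
}


def _error(req_id, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Take one JSON-RPC request string and return the response string."""
    req = json.loads(raw)
    req_id, method = req.get("id"), req.get("method")
    params = req.get("params", {})
    if method == "tools/list":
        # Advertise each tool's name, description, and input schema.
        result = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(req_id, -32602, "Unknown tool")  # invalid params
        text = tool["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return _error(req_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})
```

Because every tool is described by the same name, description, and input-schema triple, a model-side client can list and call any compliant server without tool-specific code, which is what the “plug-and-play” claim rests on.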

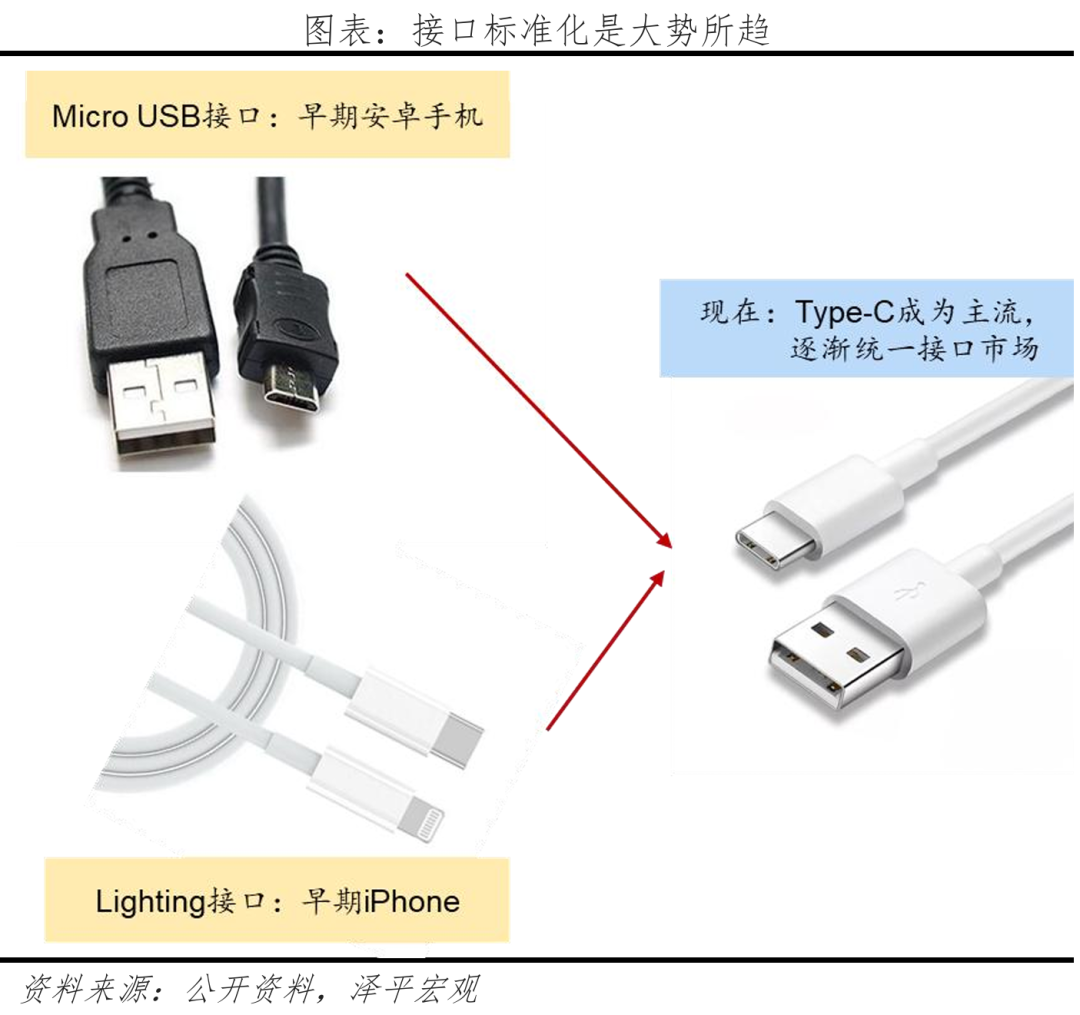

The significance of MCP is that it serves as a Type-C interface between large models and external tools; Anthropic itself likens MCP to a USB-C port for AI applications.

Before Type-C, electronic devices used a wide variety of interfaces, so users had to carry multiple data cables, which was very inconvenient. Type-C gradually unified the interface standards of many devices, allowing smartphones, tablets, laptops, and some household devices to share the same cable, significantly reducing the number and variety of cables needed and making connections between devices simple and efficient.

Similarly, the MCP protocol also simplifies the connection between large models and various external tools.

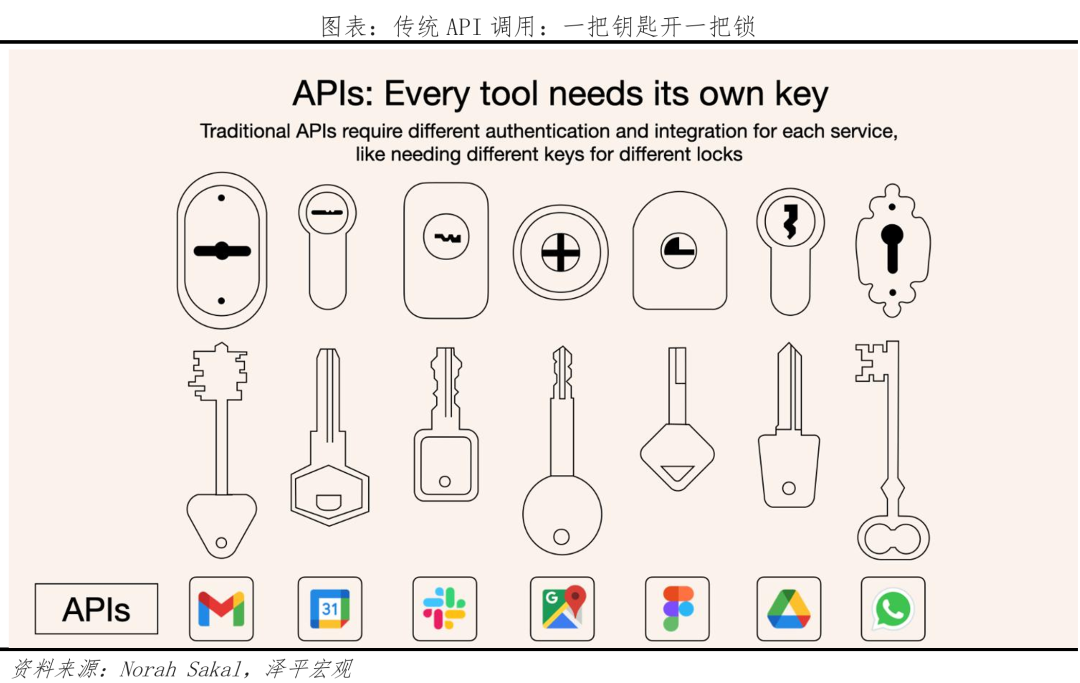

Traditionally, large models were connected to external tools through each tool’s own API, so a separate integration had to be developed and maintained for every tool: one key for each lock.

With MCP, as long as each external tool provides an MCP server that follows the protocol, large models can connect to it in a “plug-and-play” manner, sparing developers from reinventing the wheel.
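The saving can be put in rough numbers. Under the simplifying assumption that every Agent application needs every tool, point-to-point APIs require one custom integration per (application, tool) pair, while a shared protocol requires one client per application and one server per tool. A minimal sketch (the function name is illustrative):

```python
def integrations_needed(apps: int, tools: int) -> tuple[int, int]:
    """Rough count of adapters to build, assuming every app must reach every tool.

    Point-to-point: one bespoke integration per (app, tool) pair -> apps * tools.
    Shared protocol: one client per app plus one server per tool -> apps + tools.
    """
    point_to_point = apps * tools
    shared_protocol = apps + tools
    return point_to_point, shared_protocol


if __name__ == "__main__":
    print(integrations_needed(5, 20))  # prints (100, 25)
```

For instance, 5 applications and 20 tools would need 100 bespoke integrations but only 25 protocol implementations, and the gap widens as either side grows.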

In this sense, MCP acts as a bridge between large models and external tools. Take a travel-planning Agent: with the API approach, developers must write separate code for the calendar, map, flight-booking, and other services, each with its own rules for authentication, data exchange, and error handling. With MCP, as long as the calendar, map, and flight-booking tools support the protocol, developers can integrate them easily and the large model can call them seamlessly.
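The travel example can be sketched in Python, assuming three hypothetical in-process stand-ins for calendar, map, and flight-booking MCP servers (`FakeServer`, `TravelAgent`, and all tool names are illustrative, not a real SDK). The Agent discovers tools from every server once, then calls any of them through the same code path; adding a fourth server would require no new integration code.

```python
"""Sketch: a travel-planning Agent using several MCP-style servers
through one uniform interface. Servers, tools, and data are hypothetical;
real MCP servers would run out of process behind a shared client.
"""
from typing import Any, Callable


class FakeServer:
    """Stand-in for an MCP server: advertises tools and executes calls."""

    def __init__(self, tools: dict[str, Callable[..., str]]):
        self._tools = tools

    def list_tools(self) -> list[str]:
        return list(self._tools)

    def call_tool(self, name: str, arguments: dict[str, Any]) -> dict[str, Any]:
        text = self._tools[name](**arguments)
        # Mirror the shape of an MCP tools/call result.
        return {"content": [{"type": "text", "text": text}], "isError": False}


class TravelAgent:
    def __init__(self, servers: list[FakeServer]):
        # Discovery: one routing table from tool name to the server that owns it.
        self.routes = {tool: s for s in servers for tool in s.list_tools()}

    def call(self, tool: str, **arguments: Any) -> str:
        # One generic code path serves every tool, whichever server hosts it.
        result = self.routes[tool].call_tool(tool, arguments)
        return result["content"][0]["text"]


calendar = FakeServer({"free_days": lambda month: f"{month} 10-12 free"})
maps = FakeServer({"route": lambda origin, dest: f"{origin} -> {dest}: 2h drive"})
flights = FakeServer({"book_flight": lambda dest, day: f"Booked flight to {dest} on {day}"})

agent = TravelAgent([calendar, maps, flights])

if __name__ == "__main__":
    print(agent.call("free_days", month="May"))                # May 10-12 free
    print(agent.call("book_flight", dest="Chengdu", day="May 10"))  # Booked flight to Chengdu on May 10
```

The design point is that the per-tool differences live entirely inside each server; the Agent only ever sees tool names, arguments, and uniformly shaped results.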

The introduction of MCP is likely to be remembered as a key milestone in the transition from the era of large models to the era of Agents. MCP is fast becoming an industry standard and is being adopted rapidly:

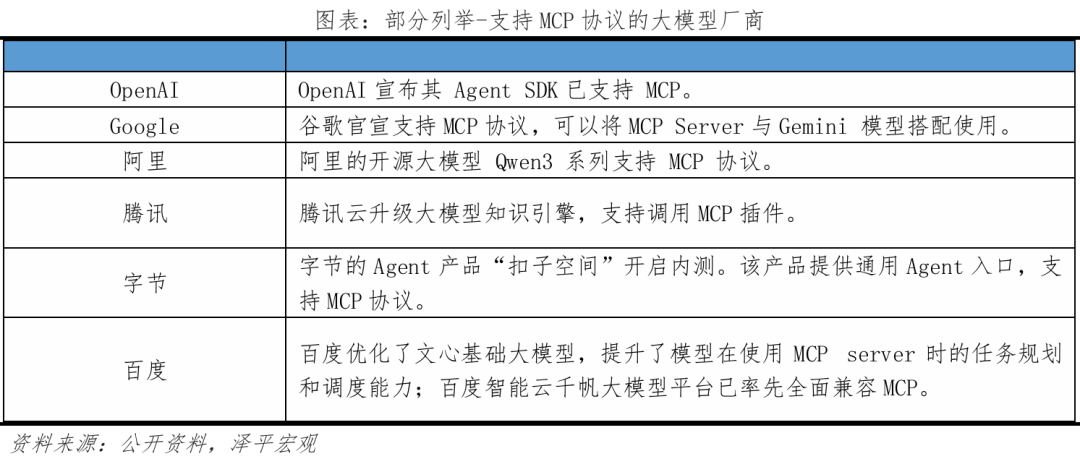

More and more large model vendors are announcing support for the MCP protocol, including overseas companies like OpenAI and Google, as well as domestic companies like Alibaba, Tencent, ByteDance, and Baidu.

At the same time, many applications are also entering the MCP ecosystem.

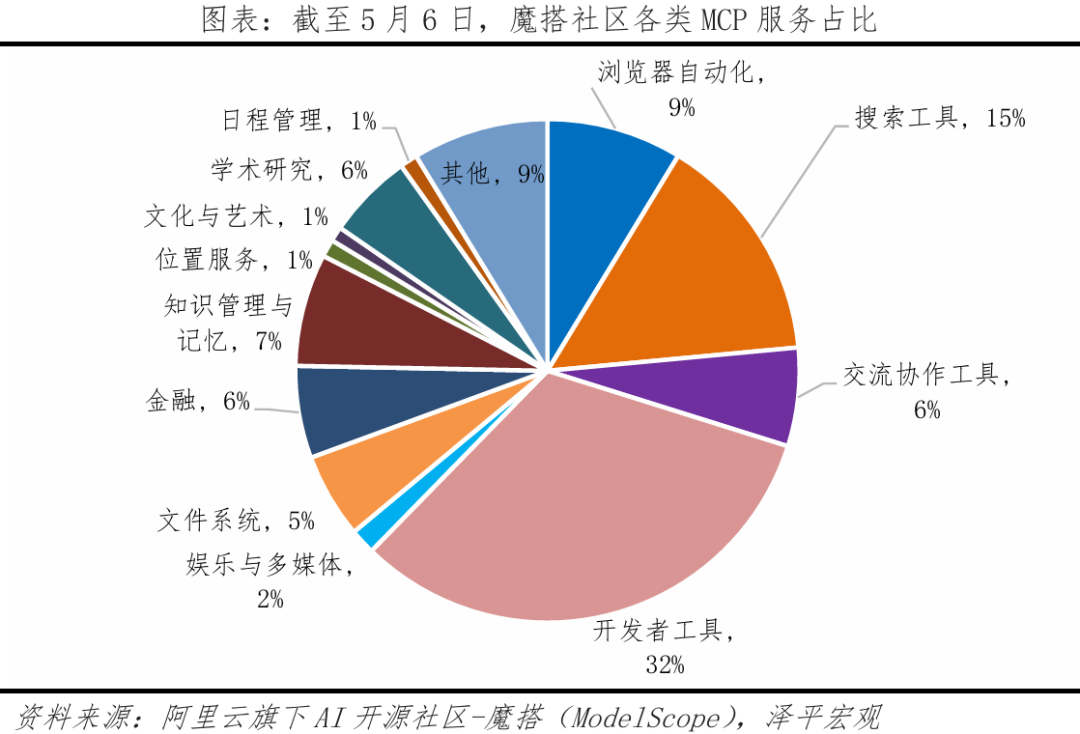

For example, as of this writing, the ModelScope (Modao) community hosts more than 2,700 MCP servers, giving developers a convenient starting point.

Widely used apps such as Alipay and Amap have launched official MCP servers.

In April of this year, Alipay became the first payment institution in China to support the MCP protocol, allowing Agent developers to easily access payment services through Alipay’s “Payment MCP Server”.

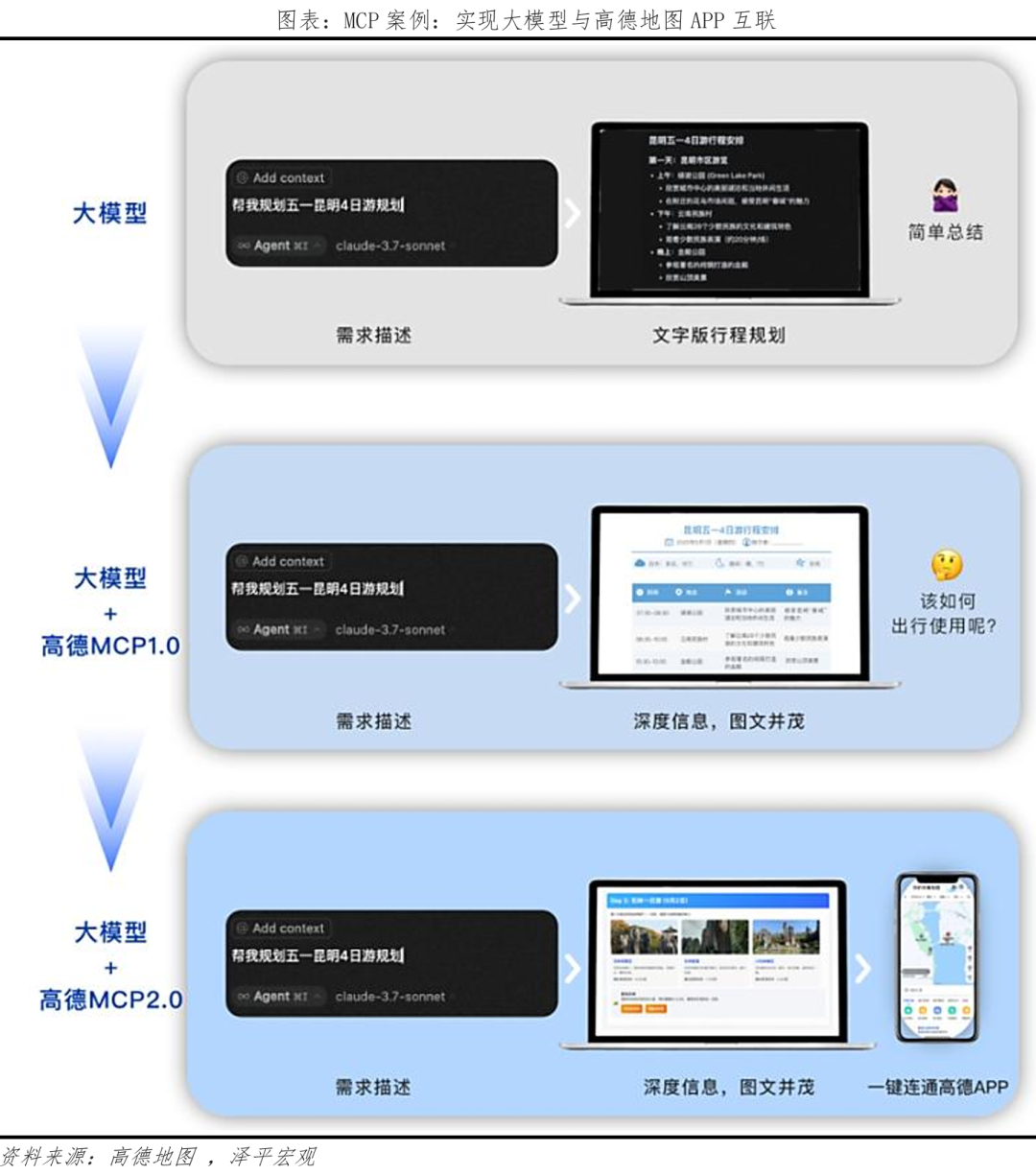

Amap released MCP 1.0 in March this year, integrating 12 core interfaces covering location services, place search, route planning, weather queries, and more, so users can easily obtain real-time information when planning trips or looking up locations. In April it was fully upgraded to MCP 2.0, which can turn AI-generated travel content into a personalized map with one click and supports navigation, ride-hailing, and ticket booking directly from the travel guide.

Baidu is also strongly backing MCP. In April, Li Yanhong (Robin Li) said that MCP “provides developers with solutions in the era of AI explosion, allowing AI to call tools more freely, which is a significant step in AI development,” and announced comprehensive support for developers adopting MCP. Baidu now offers product search, product transactions, product details, parameter comparison, and product rankings externally through its e-commerce MCP server, the first MCP service in China to support e-commerce transactions. Other Baidu applications, including Baidu Wenku, Baidu Netdisk (Wangpan), and Baidu Maps, also offer MCP servers.

4.2 A2A (Agent-to-Agent Protocol): Breaking Silos, Enabling Interconnectivity Between Agents

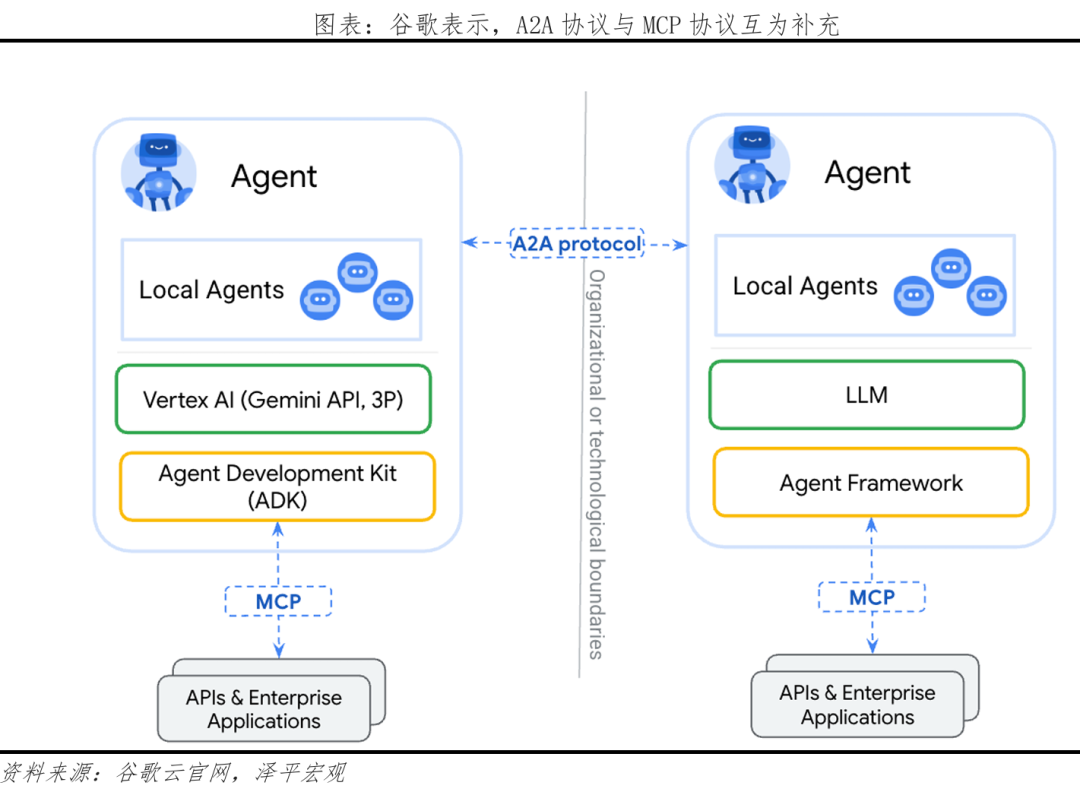

In April 2025, Google proposed the A2A protocol, a standardized communication protocol between Agents. Google positions the A2A protocol as a complement to the MCP protocol: the MCP protocol facilitates large models’ calls to external tools, while the A2A protocol facilitates interconnectivity between Agents.

With MCP plus A2A, Agents’ capabilities can be maximized: each Agent can not only access and use a wide range of external tools but also draw on the capabilities of other Agents.
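A2A-style delegation can be sketched in the same spirit. In A2A, each Agent publishes an “Agent Card” describing its skills, and other Agents send it tasks as JSON-RPC requests. The Python sketch below assumes the field names (`name`, `url`, `skills`) and the `tasks/send` method from the early public A2A draft, which may have changed in later versions; the agents, URLs, and skills are hypothetical, and no network call is made.

```python
"""Sketch: one Agent finding and delegating to another via A2A-style messages.

Assumptions: Agent Card fields and the "tasks/send" method follow the early
public A2A draft and may differ in later versions. The agents, URLs, and
skills below are hypothetical; nothing is sent over the network.
"""
import uuid
from typing import Optional

# Agent Cards: each remote Agent advertises what it can do
# (A2A serves these at a well-known URL on the agent's host).
AGENT_CARDS = [
    {"name": "HotelAgent", "url": "https://hotel.example.com/a2a",
     "skills": [{"id": "book_hotel", "name": "Book a hotel room"}]},
    {"name": "VisaAgent", "url": "https://visa.example.com/a2a",
     "skills": [{"id": "check_visa", "name": "Check visa requirements"}]},
]


def find_agent(skill_id: str) -> Optional[dict]:
    """Discovery: return the first Agent Card advertising the skill, else None."""
    for card in AGENT_CARDS:
        if any(skill["id"] == skill_id for skill in card["skills"]):
            return card
    return None


def build_task_request(text: str, request_id: int = 1) -> dict:
    """Build a JSON-RPC 2.0 request asking a remote Agent to start a task."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tasks/send",
        "params": {
            "id": str(uuid.uuid4()),  # task id chosen by the calling Agent
            "message": {"role": "user", "parts": [{"type": "text", "text": text}]},
        },
    }


if __name__ == "__main__":
    card = find_agent("book_hotel")
    req = build_task_request("Book a room in Chengdu for May 10-12")
    # A real client would POST req to card["url"]; here we only inspect it.
    print(card["name"], req["method"])  # HotelAgent tasks/send
```

The split mirrors the text above: MCP-style calls reach tools, while A2A-style requests hand a whole sub-task to another Agent, which may in turn use its own tools.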

A2A has not gained as much traction as MCP, but according to Google Cloud’s official website it already has a sizable group of supporters, currently around 60.

5. Outlook: General Agents Represent a Paradigm Revolution, Potentially Becoming the Largest Traffic Distribution Center, Restructuring the Current Software Ecosystem

General Agents represent the third round of transformation in interaction paradigms: from desktop operating systems in the PC era to super applications in the mobile internet era, and now to general Agents in the era of AI large models. The current software ecosystem may be disrupted, and general Agents may reshape the power dynamics of the entire digital world.

From a technical perspective, the traditional software ecosystem is function-oriented, requiring users to actively adapt to the fixed patterns of software to achieve specific goals, while general Agents are characterized by autonomous decision-making, understanding user intentions and autonomously calling various tools (including major software) to deliver tasks.

As general Agents are deployed, the power to distribute traffic will gradually concentrate in general Agent products. The competitiveness of major software will then depend increasingly on whether Agents can accurately recognize and recommend their services, rather than on traditional user stickiness.

The general Agent ecosystem is not yet mature. As Perplexity CEO Aravind Srinivas put it, “Anyone claiming that Agents in 2025 can be fully operational should be viewed with skepticism.” Part of the reason is technical: the reasoning and decision-making capabilities of large models still need to improve, and hallucinations need to be reduced. But the ecosystem also has a long way to go. As Srinivas has also noted, AI Agents currently have no way to control multiple applications at once, and on iOS they cannot even access other applications because of Apple’s ecosystem restrictions. How Agents will ultimately be deployed, including how they distribute traffic and how they charge, remains to be seen.