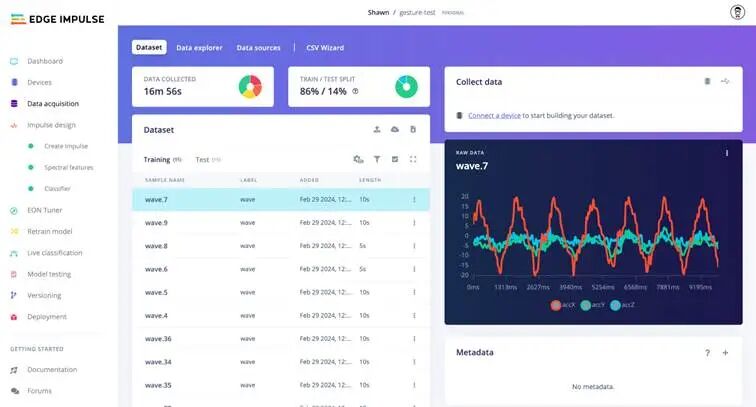

Artificial intelligence has fundamentally transformed numerous industries, thanks to its ability to analyze vast amounts of data, extract complex patterns, and make autonomous decisions. Despite these advantages, most AI applications to date have run on high-performance computing platforms, such as cloud servers or computers equipped with powerful GPUs. With growing demand for decentralized and cost-effective solutions, efforts are underway to bring these models to small, low-power devices, such as low-cost hardware platforms based on microcontrollers (MCUs). Running AI models directly on MCUs opens up a wide range of applications in the Internet of Things, automotive, industrial, consumer, robotics, and wearables, and in any application that must minimize energy consumption and cost. Thanks to continuous technological advances, AI algorithms can now be implemented even on hardware without powerful computing capabilities.

MCU Power Consumption and Performance

MCUs are small, low-power computing devices integrated into hardware that requires only basic computation. They enable smart functionality in billions of devices we use daily (such as home appliances and connected mobile devices) without relying on expensive hardware or a reliable internet connection, and without the bandwidth and power constraints that a constant connection imposes. MCUs also help protect privacy, as no data leaves the device. Imagine smart appliances that adapt to our daily activities, or smart industrial sensors that can distinguish between faults and normal operation.

Shifting computation from powerful centralized servers to MCUs is a revolutionary approach. MCUs are designed to be integrated into low-power, resource-constrained, low-cost devices, with computing capabilities far below those of PCs or servers.
From a hardware perspective, MCUs typically have tens or hundreds of KB of RAM, clock frequencies ranging from a few MHz to a few hundred MHz, and 32-bit (sometimes even 8-bit) architectures, so their performance falls well short of the platforms traditionally used to run AI models.

Another aspect to consider is power consumption. MCUs are often used in battery-powered systems, such as wireless sensor networks for remote control or portable wearables, where every milliamp of current consumed can shorten battery life and reduce performance. AI technology must therefore be optimized not only for computing power but also for energy efficiency. The power consumption of an MCU varies by model and application. Generally, low-power MCUs (such as those based on Arm Cortex-M0+ cores) draw only a few microamps in sleep mode and only a few milliamps during operation. More powerful and complex MCUs (such as those based on Arm Cortex-M4 or M7 cores) can draw tens of milliamps when fully operational. Power management should always be part of the design, especially for battery-dependent applications.

Typically, the first phase of implementing AI applications on edge devices involves training models on datasets and then deploying the trained models directly on the devices. Users can download public datasets or create their own custom datasets.

Implementing AI on MCUs

On-chip AI integration brings intelligent local processing directly to the edge (i.e., close to the data collection point), eliminating the need to send information to a central server for processing. One advantage of implementing AI on MCUs is reduced latency: since data is processed locally, response times are very short. Data communication is in fact a bottleneck, as uploading data to cloud servers for real-time processing introduces latency and privacy issues.
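
To put the sleep and active currents quoted earlier into perspective, the following sketch estimates battery life for a device that wakes up periodically, runs inference, and goes back to sleep. All numbers and function names are hypothetical and chosen only to match the orders of magnitude mentioned in the text; the model is idealized and ignores self-discharge, regulator losses, and radio activity.

```python
def average_current_ma(active_ma, sleep_ua, duty_cycle):
    """Time-weighted average current (mA) of a duty-cycled device."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    sleep_ma = sleep_ua / 1000.0  # convert microamps to milliamps
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def battery_life_hours(capacity_mah, avg_current_ma):
    """Ideal battery life: capacity divided by average current."""
    return capacity_mah / avg_current_ma


# Hypothetical figures (assumptions, not measurements of any specific MCU)
ACTIVE_MA = 5.0       # "a few milliamps" while running inference
SLEEP_UA = 2.0        # "a few microamps" in sleep mode
CAPACITY_MAH = 220.0  # roughly the capacity of a small coin cell

for duty in (1.0, 0.1, 0.01):
    avg = average_current_ma(ACTIVE_MA, SLEEP_UA, duty)
    days = battery_life_hours(CAPACITY_MAH, avg) / 24.0
    print(f"duty cycle {duty:>5.0%}: {avg:.3f} mA average, about {days:.0f} days")
```

Under these assumptions, the time spent awake dominates the energy budget, which is why short inference times matter as much as low active current.
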
MCUs with AI capabilities can process complex data from sensors in real time.

Edge AI can also improve energy efficiency. MCUs are designed to operate with minimal power consumption, which is crucial for portable or battery-powered applications. Avoiding intensive network usage saves the energy that would otherwise be spent transmitting large amounts of data to remote servers. Since processing occurs locally, sensitive data does not need to be transmitted over potentially insecure networks, which enhances data privacy and security.

AI-enabled MCUs can be deployed at relatively low cost on a large scale, enabling intelligent systems in a wide variety of devices and applications. Integrating AI on MCUs also allows for a high level of personalization, as devices can learn from data and user interactions to provide highly personalized experiences.

The tech industry has developed new solutions to optimize AI models for running on MCUs. In recent years, Edge Impulse and Google’s TensorFlow Lite for MCUs (officially TensorFlow Lite for Microcontrollers) have stood out for their ability to address the limitations of MCUs and provide ongoing support for developers. These platforms are designed to run machine learning (ML) models on devices with only a few kilobytes of memory.

TensorFlow Lite (now LiteRT) is a cross-platform, fully open-source deep learning framework that converts pre-trained TensorFlow models into a format optimized for speed and memory. The converted model can be deployed on mobile and embedded devices running Android, iOS, or Linux (for example, a Raspberry Pi) and on MCUs for local inference. A typical workflow for running TensorFlow models on MCUs consists of training the model, converting it, and then running inference on the target device using a dedicated library.

Edge Impulse and TensorFlow Lite for MCUs have paved the way for implementing AI models on embedded devices. By combining advanced model compression techniques, hardware-specific optimizations, and intuitive toolchains, neural networks can now run on devices with very limited resources.
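
A rough back-of-the-envelope check shows why model compression matters on such devices. The sketch below estimates the storage needed for a model's weights at two numeric precisions and compares it with a flash budget. The parameter count, the flash size, and the helper names are hypothetical and only illustrate the arithmetic; a real deployment also needs RAM for intermediate activations and flash for the inference runtime itself.

```python
# Bytes needed to store one weight at each precision
BYTES_PER_WEIGHT = {"float32": 4, "int8": 1}


def weight_bytes(num_params, dtype):
    """Storage required for the weights alone, in bytes."""
    return num_params * BYTES_PER_WEIGHT[dtype]


def fits(num_params, dtype, flash_budget_bytes):
    """True if the weights fit within the given flash budget."""
    return weight_bytes(num_params, dtype) <= flash_budget_bytes


# Hypothetical model and device (assumptions for illustration only)
NUM_PARAMS = 50_000
FLASH_BUDGET = 128 * 1024  # 128 KB reserved for the model

for dtype in BYTES_PER_WEIGHT:
    size_kb = weight_bytes(NUM_PARAMS, dtype) / 1024
    verdict = "fits" if fits(NUM_PARAMS, dtype, FLASH_BUDGET) else "does not fit"
    print(f"{dtype}: {size_kb:.1f} KB of weights, {verdict} in {FLASH_BUDGET // 1024} KB")
```
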
The continuous miniaturization of hardware and the development of efficient algorithms have reduced the demand for computing power and memory, leading to significant progress. The impact of these technologies is evident in areas such as smart homes, where devices with embedded MCUs can use AI to recognize gestures or voice commands, analyze video streams without remote servers, or detect people using systems that acquire image data through camera or light sensors. The applications are diverse. In the automotive industry, too, AI-enabled MCUs can be used for real-time analysis of data from vehicle sensors, enhancing driving safety and optimizing the performance of autonomous driving systems.

Edge Impulse Promotes AI Adoption on MCUs

Edge Impulse is a platform dedicated to promoting AI, focusing on data collection, model creation, training, and deployment on edge computing devices (especially MCUs). Founded in 2019, it has quickly become a leader in machine learning for MCUs with a comprehensive toolset for designing, training, and deploying AI models optimized for low-power hardware. One of its notable features is an “end-to-end” approach that lets developers build ML pipelines all the way from data collection to final deployment. Its intuitive web interface streamlines the process of obtaining data from sensors connected to MCUs, processing it, training AI models, and deploying those models to real devices, all without requiring advanced programming or embedded software development knowledge. Edge Impulse supports every stage of the edge AI lifecycle, from data collection, feature extraction, ML model design, and model training and testing to deploying the models to end devices (Figure 1).

Figure 1: The edge AI lifecycle using Edge Impulse. (Source: Edge Impulse)

Since the processing capabilities, operating systems, and supported languages of edge devices vary, implementation can be a complex process.
Therefore, Edge Impulse offers various deployment options on which users can build applications. Typically, these deployments are open-source libraries that make it easy to interact with the model. Edge Impulse can also connect to other ML frameworks, allowing models to be extended and customized as needed. For example, Edge Impulse can be used to develop voice recognition models for battery-powered wearables that recognize voice commands locally without sending data to remote servers. By optimizing models for the hardware, power consumption can be kept extremely low, extending the battery life of the device.

Another notable use case is predictive diagnostics in industrial plants, where low-cost sensors integrated with MCUs run optimized models on limited computational resources to monitor machine behavior and detect anomalies in real time. This enables companies to reduce downtime and improve preventive maintenance. Additionally, the platform supports a wide range of MCUs, from the simplest Arm Cortex-M devices to the most advanced solutions, and is compatible with existing AI model libraries. This flexibility allows developers to integrate AI across various hardware, making it easier to create smart IoT solutions.

Edge Impulse Studio is a web-based tool with a graphical interface that supports data collection and model deployment to end devices (Figure 2). Its data collection tool can store, sort, and label data.

Figure 2: Data collection. (Source: Edge Impulse)

Edge Impulse supports the use and modification of standard feature extraction methods to fit specific projects and allows new methods to be created through custom processing blocks. Learning blocks can then be used to train ML models. The platform provides predefined learning blocks and supports creating custom learning blocks or modifying the ML code in expert mode.
Once training is complete, the model can be tested against a holdout set or with real-time data from connected devices (Figure 3).
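
The sequence described above (feature extraction, training, testing on a holdout set) can be illustrated with a deliberately tiny sketch in plain Python. It does not use the Edge Impulse API: the function names are hypothetical, the "sensor" data is synthetic, and a single RMS feature with a threshold classifier stands in for real processing and learning blocks.

```python
import math
import random


def rms(window):
    """Processing step: reduce a window of raw samples to one RMS feature."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def make_window(amplitude, rng, n=64):
    """Synthetic stand-in for sensor data: a sine wave plus Gaussian noise."""
    return [amplitude * math.sin(2 * math.pi * i / 16) + rng.gauss(0, 0.05)
            for i in range(n)]


def train_threshold(features, labels):
    """Learning step: place a threshold halfway between the two class means."""
    idle = [f for f, y in zip(features, labels) if y == 0]
    active = [f for f, y in zip(features, labels) if y == 1]
    return (sum(idle) / len(idle) + sum(active) / len(active)) / 2


def predict(feature, threshold):
    """Classify a feature as active (1) or idle (0)."""
    return 1 if feature > threshold else 0


rng = random.Random(0)  # fixed seed so the run is repeatable
# Label 0 = "idle" (small vibration), label 1 = "active" (large vibration)
dataset = ([(make_window(0.2, rng), 0) for _ in range(50)]
           + [(make_window(1.0, rng), 1) for _ in range(50)])
rng.shuffle(dataset)

# Keep 20% of the samples aside; they are never seen during training
split = int(0.8 * len(dataset))
train, holdout = dataset[:split], dataset[split:]

threshold = train_threshold([rms(w) for w, _ in train], [y for _, y in train])
correct = sum(predict(rms(w), threshold) == y for w, y in holdout)
accuracy = correct / len(holdout)
print(f"threshold={threshold:.3f}, holdout accuracy={accuracy:.2f}")
```

The point of the holdout set is that accuracy is measured only on samples the model did not see during training, which gives a more honest estimate of how it will behave on a real device.
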

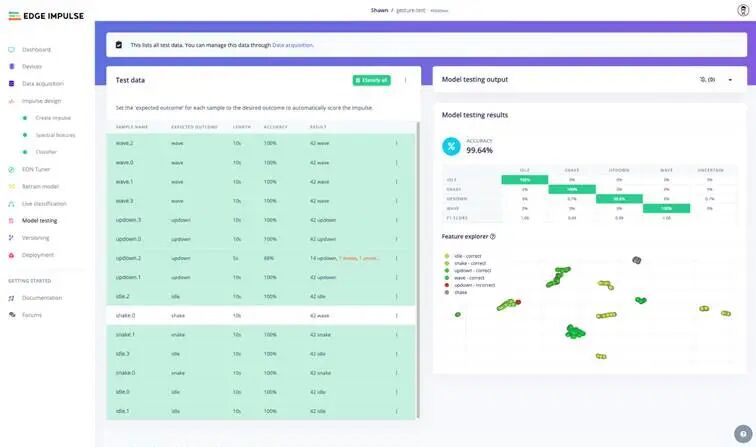

Figure 3: Testing models on Edge Impulse. (Source: Edge Impulse)

After this process is completed, the finished model can be deployed in various formats, including C++ libraries and pre-built firmware for supported hardware, among others. The platform also offers a range of enterprise features for building complete edge ML pipelines and scaling deployments, along with faster performance and more training time for building larger and more complex models.

Google’s TensorFlow Lite for MCUs

In the field of AI for MCUs, Google’s TensorFlow Lite for MCUs is another important reference platform. TensorFlow Lite is a lightweight version of the well-known TensorFlow deep learning library, designed to optimize AI models for mobile and embedded devices. TensorFlow Lite for MCUs goes a step further, specifically targeting neural networks on MCUs with extremely limited computational resources and memory capacity.

Much of the platform’s success lies in its use of quantization to reduce the size of AI models with little loss of accuracy. Quantization converts model values from 32-bit floating-point representations to 8-bit integers, making models roughly four times smaller and less computationally expensive. Thanks to this technique, the platform can run neural network models on MCUs with only a few kilobytes of RAM and flash memory. As a result, TensorFlow Lite for MCUs can be integrated into smart agricultural sensors.
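
The conversion from 32-bit to 8-bit values mentioned above can be sketched with a generic affine quantization scheme, in which a real value is approximated as (q - zero_point) * scale. This is a minimal illustration in plain Python, not the TensorFlow Lite converter itself: the function names and the sample weights are hypothetical, and production toolchains choose ranges per tensor or per channel using calibration data.

```python
def quant_params(values, num_bits=8):
    """Derive scale and zero point for asymmetric (affine) quantization."""
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    # The represented range must include 0 so that 0.0 maps to an exact integer
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point, qmin, qmax


def quantize(values, scale, zero_point, qmin, qmax):
    """Map floats to integers, clamping to the representable range."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    """Recover approximate floats from the quantized integers."""
    return [(q - zero_point) * scale for q in q_values]


weights = [-0.82, -0.31, 0.0, 0.07, 0.45, 1.20]  # hypothetical float32 weights
scale, zp, qmin, qmax = quant_params(weights)
q = quantize(weights, scale, zp, qmin, qmax)
restored = dequantize(q, scale, zp)
max_err = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", q)
print(f"scale={scale:.5f}, zero_point={zp}, max round-trip error={max_err:.5f}")
```

Each weight now occupies one byte instead of four, at the cost of a rounding error of at most half a quantization step for values that are not clamped.
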
Consider, for example, a pilot project in which an MCU programmed with TensorFlow Lite analyzes real-time data from environmental sensors (such as soil temperature and moisture) to predict the optimal irrigation time, thereby reducing water waste and improving crop yields. In this case, running AI directly on the device reduces the need for frequent communication with the cloud, lowering connectivity costs and latency and improving overall energy efficiency.

The platform has also been used successfully in health monitoring devices, such as wearables that monitor heart rate and vital signs. The ability to process data locally and provide real-time feedback without relying on centralized cloud processing greatly enhances the responsiveness of these devices, enabling timely alerts when health issues are detected.

Another notable feature of TensorFlow Lite for MCUs is its large developer community and extensive support for various hardware architectures, including Arm MCUs, ESP32, and other third-party MCUs and development boards. The platform offers a wealth of examples and documentation, making it easy to adopt even for developers with little AI experience.

AI models optimized for MCUs are revolutionizing the way we interact with AI and edge computing. Today, the synergy between AI platforms and MCUs offers a proven way to overcome the technical limitations of hardware with limited computational resources. Platforms like Edge Impulse and TensorFlow Lite for MCUs demonstrate that the future of AI is not limited to the cloud but can be realized anywhere there are MCUs and concrete project ideas. Ongoing innovation in this field is expected to bring AI to every device, making it ubiquitous, even in applications where cost, energy consumption, and performance are most critical.

Author: Giordana Francesca Brescia (Editor: Franklin)

