Machine Heart Column

Machine Heart Editorial Team

Now, robots have learned to perform precision control tasks in factories.

In recent years, robotic reinforcement learning has made significant progress in areas such as quadrupedal walking, grasping, and dexterous manipulation. Most of these advances, however, remain limited to laboratory demonstrations. Applying robotic reinforcement learning in real production environments means overcoming several practical problems, including reward design, environment resets, sample efficiency, and action safety. Industry experts emphasize that solving these implementation challenges is as important as continued innovation in the algorithms themselves.

In response, researchers from the University of California, Berkeley, Stanford University, the University of Washington, and Google have jointly developed an open-source software framework, the Sample-Efficient Robotic Reinforcement Learning suite (SERL), aimed at promoting the use of reinforcement learning in practical robotic applications.

- Project Homepage: https://serl-robot.github.io/

- Open Source Code: https://github.com/rail-berkeley/serl

- Paper Title: SERL: A Software Suite for Sample-Efficient Robotic Reinforcement Learning

The SERL framework mainly includes the following components:

1. Efficient Reinforcement Learning

In reinforcement learning, an agent (such as a robot) learns to perform a task by interacting with its environment. It tries actions, receives reward signals based on their outcomes, and learns a policy that maximizes cumulative reward. SERL employs the RLPD algorithm, which lets robots learn from both real-time interaction and previously collected offline data, significantly shortening the training time needed to master new skills.

2. Diverse Reward Specification Methods

SERL provides several ways to specify rewards, allowing developers to tailor the reward structure to the needs of each task. For example, fixed-position assembly tasks can define rewards based on the position of the robotic arm, while more complex tasks can use classifiers or VICE to learn an accurate reward function. This flexibility helps guide robots toward the most effective policy for a specific task.

3. Reset-Free Training

Traditional robotic learning algorithms require the environment to be reset periodically before the next round of interaction, which cannot be automated in many tasks. SERL’s reset-free reinforcement learning trains a forward policy and a backward policy at the same time, so that each provides the environment reset for the other.

4. Robot Control Interface

SERL offers a set of Gym environment interfaces for Franka robotic arm tasks as standard examples, making it easy for users to extend SERL to other robotic arms.

5. Impedance Controller

To ensure that robots can explore and operate safely and accurately in complex physical environments, SERL provides a dedicated impedance controller for the Franka robotic arm that maintains accuracy without generating excessive torque on contact with external objects.

Through the combination of these technologies and methods, SERL significantly reduces training time while maintaining a high success rate and robustness, enabling robots to learn complex tasks in a short time and apply them effectively in the real world.
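The idea of learning from both offline data and live interaction can be illustrated with a small sampling routine. The sketch below is not SERL's code: it assumes an RLPD-style scheme in which each training batch is drawn half from a buffer of prior data (for example, demonstrations) and half from the online replay buffer. The function name `sample_mixed_batch`, the buffer layout, and the toy transitions are all hypothetical.

```python
import random


def sample_mixed_batch(offline, online, batch_size, rng):
    """Build one training batch, half from offline data and half from online data.

    `offline` and `online` are lists of transitions (any Python objects).
    Sampling is with replacement, so a small demo set can still fill its half.
    """
    n_offline = batch_size // 2
    n_online = batch_size - n_offline
    batch = [rng.choice(offline) for _ in range(n_offline)]
    batch += [rng.choice(online) for _ in range(n_online)]
    rng.shuffle(batch)  # mix the two sources before the gradient update
    return batch


# Hypothetical toy data: each transition is tagged with where it came from.
rng = random.Random(0)
demo_buffer = [{"source": "offline", "reward": 1.0} for _ in range(20)]
online_buffer = [{"source": "online", "reward": 0.0} for _ in range(500)]

batch = sample_mixed_batch(demo_buffer, online_buffer, batch_size=256, rng=rng)
n_from_demos = sum(t["source"] == "offline" for t in batch)
print(len(batch), n_from_demos)  # prints: 256 128
```

Because the offline half is sampled with replacement, even a handful of demonstrations keeps appearing in every update, which is one plausible reason such mixing helps early in training when the online buffer contains few successes.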

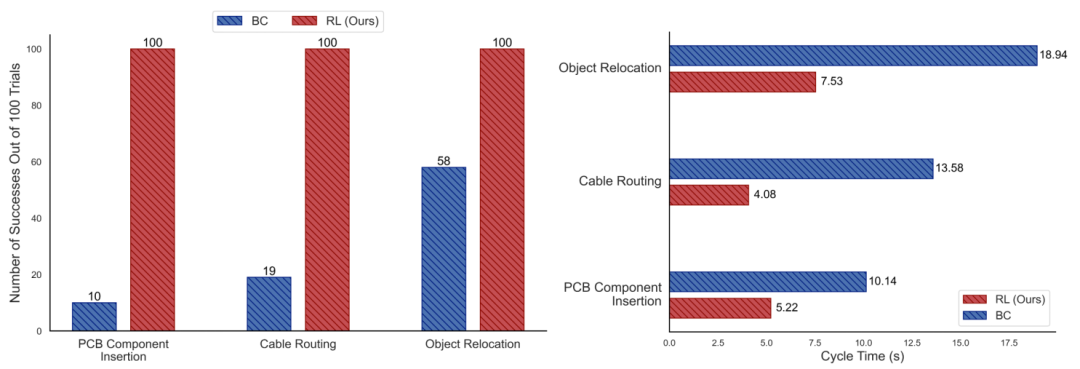

Figures 1 and 2: Comparison of success rate and cycle time between SERL and behavior cloning. With a similar amount of data, SERL’s success rate is several times higher than that of behavior cloning (up to 10 times), and its cycle time is at least twice as fast.


Application Cases

1. PCB Component Assembly

Assembling through-hole components on a PCB is a common yet challenging robotic task. The pins of electronic components bend easily, and the tolerances between holes and pins are very small, so the robot must be both precise and gentle during assembly. With just 21 minutes of autonomous learning, SERL enabled the robot to achieve a 100% task completion rate. Even when faced with unseen disturbances, such as the circuit board shifting position or the camera’s view being partially occluded, the robot was able to complete the assembly reliably.
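The "precise and gentle" requirement is the kind of behavior an impedance controller targets. The following is a minimal sketch of one common way to keep contact forces bounded, assuming a per-axis spring-damper law whose position error is clipped before it is multiplied by the stiffness. It is not SERL's actual Franka controller; the function name, gains, and clipping threshold are hypothetical values chosen for illustration.

```python
def clipped_impedance_force(target, position, velocity, kp, kd, max_error):
    """Per-axis spring-damper force with a clipped position error.

    Clipping the error to [-max_error, max_error] bounds the spring term
    by kp * max_error, however far away the commanded target is.
    The damping term (-kd * velocity) is not clipped here.
    """
    force = []
    for t, p, v in zip(target, position, velocity):
        error = max(-max_error, min(max_error, t - p))
        force.append(kp * error - kd * v)
    return force


# Hypothetical numbers: the target is 0.5 m away on two axes, but the
# error is clipped to 1 cm, so the spring force stays near 10 N per axis.
force = clipped_impedance_force(
    target=[0.50, 0.0, -0.50],
    position=[0.0, 0.0, 0.0],
    velocity=[0.0, 0.0, 0.0],
    kp=1000.0,
    kd=50.0,
    max_error=0.01,
)
print(force)
```

With these values the spring force cannot exceed `kp * max_error` in magnitude on any axis, so a policy that commands a distant target during exploration still pushes on a pin or board with limited force.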

Figures 3, 4, and 5: While installing the circuit board component, the robot handled various disturbances it had not encountered during training and still completed the task.

2. Cable Routing

Assembling many mechanical and electronic devices requires routing cables accurately along specific paths, which places high demands on precision and adaptability. Flexible cables deform during routing, and disturbances such as accidental cable movement or changes in the gripper’s position can occur, so traditional non-learning methods struggle to cope. SERL achieved a 100% success rate after just 30 minutes of training. Even when the gripper’s position differed from that seen during training, the robot generalized its learned skill and routed the cable correctly.

Figures 6, 7, and 8: Without additional training, the robot can route the cable through clips placed in positions different from those seen during training.

3. Object Grasping and Placing

In warehouse management and retail, robots often need to move items from one place to another, which requires them to recognize and handle specific items. During reinforcement learning training, under-actuated objects are difficult to reset automatically. Using SERL’s reset-free reinforcement learning, the robot learned two policies with a 100/100 success rate in just 1 hour and 45 minutes. The forward policy moves an object from box A to box B, while the backward policy returns the object from box B to box A.
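The forward/backward scheme described above can be sketched as a toy loop in which whichever behavior runs next depends on where the object currently sits, so each successful episode leaves the scene ready for the other. This is an illustrative simulation only: there is no learning in it, and the function `run_reset_free`, the fixed `success_prob`, and the box labels are hypothetical stand-ins rather than anything from SERL.

```python
import random


def run_reset_free(num_episodes, success_prob, rng):
    """Alternate forward (A -> B) and backward (B -> A) episodes without resets.

    Returns the final object location and a log of (policy, success) pairs.
    A failed episode leaves the object where it was, so the same policy
    simply tries again on the next episode.
    """
    location = "A"
    log = []
    for _ in range(num_episodes):
        policy = "forward" if location == "A" else "backward"
        goal = "B" if policy == "forward" else "A"
        success = rng.random() < success_prob
        if success:
            location = goal  # the other policy now has a valid start state
        log.append((policy, success))
    return location, log


# With a success probability of 1.0 the two policies strictly alternate.
final_location, log = run_reset_free(4, success_prob=1.0, rng=random.Random(0))
print(final_location, [policy for policy, _ in log])
```

In a real system both behaviors would be learned policies that improve over time, and each one's success is what makes the next episode of the other possible without a human moving the object back.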

Figures 9, 10, and 11: SERL trained two policies, one for moving an object from the right to the left and another for returning it from the left to the right. The robot achieved a 100% success rate on the trained objects and was also able to handle unseen objects.

Main Authors

1. Jianlan Luo

Jianlan Luo is currently a postdoctoral scholar in the Department of Electrical Engineering and Computer Sciences at the University of California, Berkeley, collaborating with Professor Sergey Levine at the Berkeley Artificial Intelligence Research (BAIR) lab. His main research interests include machine learning, robotics, and optimal control. Before returning to academia, he was a full-time researcher at Google X, collaborating with Professor Stefan Schaal. Previously, he obtained a Master’s degree in Computer Science and a Ph.D. in Mechanical Engineering from the University of California, Berkeley, where he worked with Professors Alice Agogino and Pieter Abbeel. He has also served as a visiting research scholar at DeepMind’s London headquarters.

2. Zheyuan Hu

He graduated with a Bachelor’s degree in Computer Science and Applied Mathematics from the University of California, Berkeley. Currently, he is conducting research in the RAIL lab led by Professor Sergey Levine. He has a strong interest in robotic learning, focusing on developing methods that enable robots to rapidly and broadly master dexterous manipulation skills in the real world.

3. Charles Xu

He is a fourth-year undergraduate student in Electrical Engineering and Computer Sciences at the University of California, Berkeley. Currently, he is conducting research in the RAIL lab led by Professor Sergey Levine. His research interests lie at the intersection of robotics and machine learning, aiming to build robust and generalizable autonomous control systems.

4. You Liang Tan

He is a research engineer at the Berkeley RAIL lab, advised by Professor Sergey Levine.
He previously obtained his Bachelor’s degree from Nanyang Technological University in Singapore and completed his Master’s degree at the Georgia Institute of Technology in the United States. Prior to this, he was part of the Open Robotics Foundation. His work focuses on the application of machine learning and robotic software technologies in the real world.

5. Stefan Schaal

He obtained his Ph.D. in Mechanical Engineering and Artificial Intelligence from the Technical University of Munich in Germany in 1991. He was a postdoctoral researcher in the Department of Brain and Cognitive Sciences and the Artificial Intelligence Laboratory at MIT, a visiting researcher at the ATR Human Information Processing Research Laboratory in Japan, and an assistant professor at the Department of Kinesiology at Georgia Tech and Penn State University. During the ERATO project in Japan, he also served as the leader of the Computational Learning Group, which contributed to the Kawato Dynamic Brain Project (ERATO/JST). In 1997, he joined the faculty of Computer Science, Neuroscience, and Biomedical Engineering at the University of Southern California, where he was later promoted to full professor. His research interests include statistics and machine learning, neural networks and artificial intelligence, computational neuroscience, functional brain imaging, nonlinear dynamics, nonlinear control theory, robotics, and biorobotics.

He is one of the founding directors of the Max Planck Institute for Intelligent Systems in Germany, where he led the Autonomous Motion Department for many years. He is currently the Chief Scientist at Intrinsic, a new robotics subsidiary of Alphabet (Google). Stefan Schaal is an IEEE Fellow.

6. Chelsea Finn

She is an assistant professor of Computer Science and Electrical Engineering at Stanford University. Her lab, IRIS, explores intelligence through large-scale robotic interactions and is affiliated with SAIL and the ML Group. She is also a member of the Google Brain team.
She is interested in the ability of robots and other agents to develop a wide range of intelligent behaviors through learning and interaction. Previously, she completed her Ph.D. in Computer Science at the University of California, Berkeley, and obtained her Bachelor’s degree in Electrical Engineering and Computer Science from MIT.

7. Abhishek Gupta

He is an assistant professor at the Paul G. Allen School of Computer Science and Engineering at the University of Washington, leading the WEIRD lab. Previously, he was a postdoctoral scholar at MIT, collaborating with Russ Tedrake and Pulkit Agrawal. He completed his Ph.D. in machine learning and robotics at UC Berkeley, where he was advised by Professors Sergey Levine and Pieter Abbeel. He also completed his Bachelor’s degree at UC Berkeley. His main research goal is to develop algorithms that enable robotic systems to learn to perform complex tasks in various unstructured environments, such as offices and homes.

8. Sergey Levine

He is an associate professor in the Department of Electrical Engineering and Computer Sciences at the University of California, Berkeley. His research focuses on algorithms that enable autonomous agents to acquire complex behaviors through learning, particularly general-purpose methods that could allow any autonomous system to learn to solve any task. Applications of these methods include robotics and a range of other fields requiring autonomous decision-making.
