Key Knowledge Points in Chapter 1:

Understand the characteristics, classifications, development, and applications of embedded systems, and be familiar with the logical composition of embedded systems.

Understand the main types of embedded processing chips, be familiar with the SoC development process, and comprehend the significance of IP cores.

Be familiar with the encoding of Chinese and Western characters, as well as the types and processing of digital text, and master the parameters, file formats, and main applications of digital images.

Understand the classification and composition of computer networks, be familiar with the main content of the IP protocol, and master the composition of the internet and commonly used access technologies.

The digital images used in embedded systems are also known as sampled images, raster images, or bitmap images.

1. Acquisition and Main Parameters of Digital Images

The devices used for image acquisition are mainly of two types: digital cameras and scanners. Their function is to input a 2D image of a real scene into a computer and represent it in the form of a digital image.

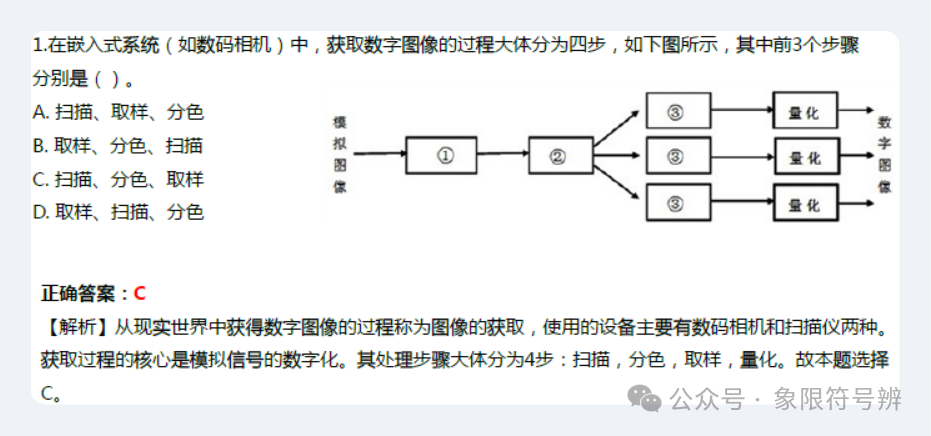

The core of the image acquisition process is the digitization of the analog signal, which generally consists of four steps:

• Scanning: Divides the image into an M×N grid; each grid cell is called a sampling point.

• Color Separation: Decomposes the color of each sampling point into the three primary colors Red, Green, and Blue (R, G, B). Grayscale and black-and-white images do not need color separation.

• Sampling: Measures the brightness value of each component (primary color) at each sampling point.

• Quantization: Performs A/D conversion on the brightness value of each component at each sampling point, i.e., represents the analog quantity with a digital quantity (usually an 8- to 12-bit integer).

The main parameters of digital images and their meanings are as follows:

• Image Size: Also called image resolution, expressed as horizontal resolution × vertical resolution, e.g., 400×300, 800×600, or 1024×768. The resolution commonly referred to as high definition is 1920×1080.

• Number of Bit Planes: The number of color components per pixel. Black-and-white and grayscale images have only one bit plane, while color images have three or more.

• Pixel Depth: The number of binary bits used to represent each pixel, i.e., the sum of the bits of all its color components. It determines the maximum number of distinct colors (or brightness levels) the image can contain. For example, a monochrome image with a pixel depth of 8 bits can show at most 2^8 = 256 brightness levels.

• Color Model: The method used to describe the colors of a color image. Monitors typically use the RGB (Red, Green, Blue) model, while color printers use the CMYK (Cyan, Magenta, Yellow, Black) model.

2. Common File Formats for Digital Images and Their Applications

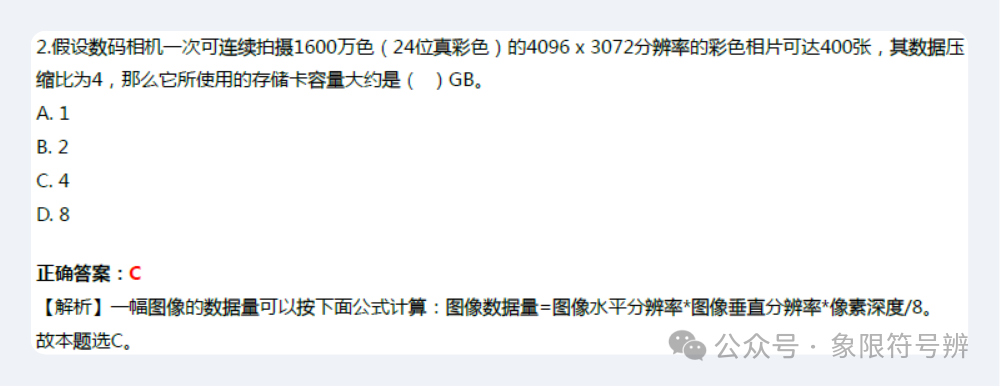

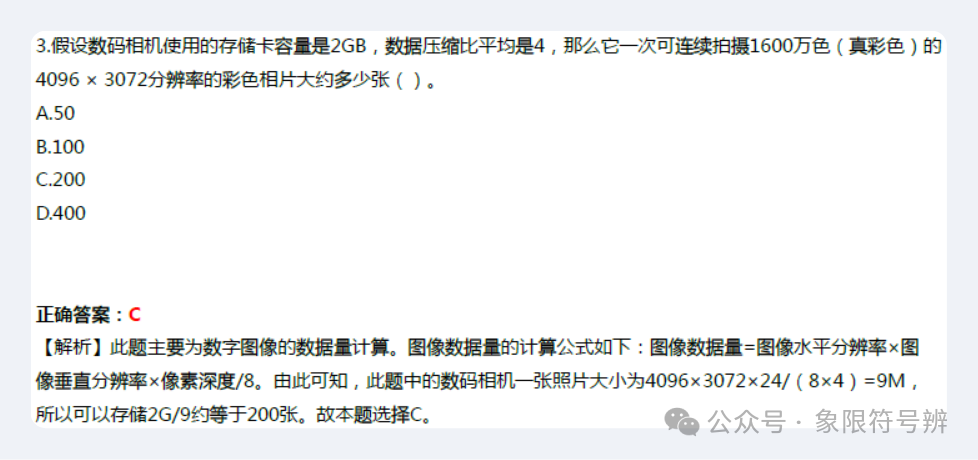

The data volume of an uncompressed image can be calculated using the following formula (in bytes):

Image data volume = horizontal resolution × vertical resolution × pixel depth / 8

Data compression falls into two types: lossless compression and lossy compression.

• Lossless Compression: The image restored from the compressed data (i.e., after decompression) is identical to the original image, with no error. Examples include Run-Length Encoding (RLE) and Huffman coding.

• Lossy Compression: The image restored from the compressed data may differ somewhat from the original, but the differences do not affect correct understanding and use of the image's content. To achieve higher compression ratios, digital image compression generally uses lossy methods such as transform coding and vector coding.

Several commonly used image file formats in embedded systems are shown in the table below:


Sample Questions

Alright, that’s all for this content!

Thank you for reading, and feel free to like, follow, and share!

See you next time!