✅ Author Bio: A Matlab simulation developer passionate about research, skilled in data processing, modeling simulation, program design, complete code acquisition, paper reproduction, and scientific simulation.

🍎 For previous posts, see the author’s homepage: Matlab Research Studio

🍊 Personal motto: Investigate things to gain knowledge. Complete Matlab code and simulation consultation are available via private message.

🔥 Content Introduction

In today’s highly complex industrial systems, financial markets, and biomedical fields, accurate classification of data and fault identification are crucial. Traditional methods often struggle to capture the nonlinear relationships and multimodal information within complex data, especially when dealing with time-series data. In recent years, the rapid development of deep learning technologies has provided new paradigms for addressing these challenges. Among them, Convolutional Neural Networks (CNNs) have demonstrated their powerful local feature extraction capabilities through significant success in image processing, while Recurrent Neural Networks (RNNs) like Long Short-Term Memory (LSTM) networks excel at handling sequential data. However, effectively integrating different features and focusing on key information in complex data that contains both temporal dependencies and spatial correlations remains a topic worthy of in-depth research.

The Markov Transition Field (MTF) is a novel method that transforms one-dimensional time series data into two-dimensional images, preserving the temporal and state transition information within the time series. This opens new pathways for utilizing CNNs to process time-series data. Meanwhile, attention mechanisms, particularly Multihead Attention, have shown strong capabilities in capturing global dependencies and weighting key information in fields such as natural language processing. Combining MTF, CNN, and Multihead Attention is expected to construct more powerful models to tackle classification prediction and fault identification tasks for complex multi-feature data.

This article explores the MTF-CNN-Multihead-Attention model framework in depth and details how to implement it in Matlab for multi-feature classification prediction and fault identification. We start from the theoretical foundation, build the model step by step, and outline how its effectiveness can be validated experimentally.

Theoretical Foundation

- Markov Transition Field (MTF)

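The core idea of MTF is to transform time series data into a two-dimensional image that encodes the transition probabilities between the states visited at every pair of time points. A series of length N is first discretized into Q quantile bins, so that each point i is assigned a state q_i in {1, ..., Q}. A Q × Q first-order Markov transition matrix W is then estimated by counting how often a point in bin a is immediately followed by a point in bin b and normalizing each row to sum to 1. Finally, the N × N MTF matrix M is obtained by setting M(i, j) = W(q_i, q_j), which spreads the transition probabilities along the time axis and thereby preserves temporal information.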
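As a rough illustration, the MTF of a single series can be computed in three steps: quantile binning, estimation of a first-order Markov transition matrix between bins, and expansion of that matrix over all pairs of time points. The sketch below is a minimal version under stated assumptions: the toy signal, the bin count `Q = 8`, and the rank-based binning are illustrative choices, not values taken from this article.

```matlab
% Toy input series (assumed for illustration), N = 64 samples
t = linspace(0, 4*pi, 64)';
x = sin(t) + 0.1*cos(10*t);
N = numel(x);
Q = 8;                                   % number of quantile bins (assumed)

% 1) Quantile binning: rank each sample, then split the ranks into Q equal groups
[~, order] = sort(x);
ranks = zeros(N, 1);
ranks(order) = (1:N)';
bins = ceil(ranks * Q / N);              % state index q_i in 1..Q

% 2) First-order Markov transition matrix between bins
W = zeros(Q, Q);
for k = 1:N-1
    W(bins(k), bins(k+1)) = W(bins(k), bins(k+1)) + 1;
end
rowSums = sum(W, 2);
rowSums(rowSums == 0) = 1;               % guard against empty rows
W = W ./ rowSums;                        % each non-empty row sums to 1

% 3) Markov Transition Field: M(i, j) = W(q_i, q_j)
M = W(bins, bins);                       % N-by-N image
```

For multi-feature data, the same steps would be repeated per feature and the resulting matrices stacked along the third dimension, for example with `cat(3, M1, M2, ...)`.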

- Convolutional Neural Network (CNN)

CNN is a deep learning model specifically designed for processing data with a grid-like topology, such as images. Its core components include convolutional layers, pooling layers, and fully connected layers. The convolutional layer extracts local features by sliding learnable filters over the input data. The pooling layer reduces the dimensionality of the feature maps, decreasing computational load and enhancing model robustness. The fully connected layer maps the extracted features to the final output classes. The strength of CNN lies in its ability to automatically learn feature representations at different levels of abstraction, from low-level edges and textures to high-level semantic features.
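To make the roles of the convolutional and pooling layers concrete, the following toolbox-free sketch applies one 3 × 3 filter, a ReLU, and 2 × 2 max pooling to a small matrix. The input `magic(6)` and the edge filter are arbitrary illustrative choices; in a real CNN the filter weights are learned. Note that `conv2` performs true convolution (it flips the kernel), so the kernel is rotated by 180 degrees to obtain the cross-correlation that deep learning layers compute.

```matlab
I = magic(6);                            % 6-by-6 toy "image" (assumed input)
K = [1 0 -1; 1 0 -1; 1 0 -1];            % 3-by-3 vertical-edge filter (assumed)

% Convolutional layer: sliding-window cross-correlation, no padding -> 4-by-4
F = conv2(I, rot90(K, 2), 'valid');

% Activation: ReLU keeps positive responses only
R = max(F, 0);

% Pooling layer: 2-by-2 max pooling with stride 2 -> 2-by-2
[h, w] = size(R);
pooled = zeros(h/2, w/2);
for r = 1:h/2
    for c = 1:w/2
        block = R(2*r-1:2*r, 2*c-1:2*c);
        pooled(r, c) = max(block(:));
    end
end
```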

- Attention Mechanism and Multihead Attention

The attention mechanism simulates human visual attention, allowing the model to dynamically focus on more important parts of the input sequence while processing data. In sequence processing tasks, the attention mechanism helps the model assign different weights to different parts of the input sequence when generating output, thereby better capturing the relationship between input and output.

Multihead attention is an extension of the attention mechanism that enhances the model’s expressive power by performing multiple attention calculations in parallel, then concatenating and linearly transforming the results. Each “head” learns different attention weights, allowing the model to jointly attend to different aspects of the input sequence from different representation subspaces. In classification prediction tasks, this lets the model capture complex dependencies in the input data and weight more heavily the features that are critical for the final classification outcome.
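The computation can be sketched numerically without any toolbox. The example below runs scaled dot-product self-attention with two heads over a short sequence of feature tokens. All sizes and the deterministic "weights" are placeholders chosen for illustration (in a trained model they are learned), and treating rows of `X` as tokens is an assumption about how the features are arranged.

```matlab
T  = 4;                                  % number of tokens (assumed)
d  = 8;                                  % model dimension (assumed)
h  = 2;                                  % number of attention heads (assumed)
dk = d / h;                              % per-head dimension

% Deterministic stand-ins for the input and the learned projections
X  = reshape(sin(1:T*d), T, d);
Wq = reshape(cos(1:d*d), d, d) / sqrt(d);
Wk = reshape(sin(2*(1:d*d)), d, d) / sqrt(d);
Wv = reshape(cos(3*(1:d*d)), d, d) / sqrt(d);
Wo = eye(d);                             % output projection (identity for simplicity)

Qm = X * Wq;  Km = X * Wk;  Vm = X * Wv; % Query, Key, Value matrices

heads = zeros(T, d);
attnWeights = zeros(T, T, h);
for i = 1:h
    cols   = (i-1)*dk + (1:dk);                         % columns owned by head i
    scores = Qm(:, cols) * Km(:, cols)' / sqrt(dk);     % T-by-T similarity scores
    scores = scores - max(scores, [], 2);               % stabilize the softmax
    A = exp(scores);
    A = A ./ sum(A, 2);                                 % row-wise softmax
    attnWeights(:, :, i) = A;
    heads(:, cols) = A * Vm(:, cols);                   % weighted sum of values
end
Y = heads * Wo;                          % concatenated heads, then linear transform
```

Each row of `attnWeights(:, :, i)` is a probability distribution over tokens, so different heads can emphasize different tokens for the same query.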

Model Framework: MTF-CNN-Multihead-Attention

The proposed MTF-CNN-Multihead-Attention model framework consists of six stages: data input, MTF transformation, CNN feature extraction, the multihead attention mechanism, a fully connected layer, and an output layer.

The main process of this framework is as follows:

- Data Preprocessing and MTF Transformation: For time-series data containing multiple features, MTF transformation is first performed independently for each feature dimension. Assuming the original data has P features, each a time series of length N, every feature is converted into an N × N MTF matrix, and the P matrices are stacked into an N × N × P three-dimensional tensor.

- CNN Feature Extraction: The generated MTF three-dimensional tensor is used as input for the CNN. The CNN extracts local and global features from the MTF matrices through multiple layers of convolution and pooling operations. The convolutional layers capture the spatial correlations within the MTF matrices, i.e., the transition patterns between different time points. The pooling layers reduce the feature dimensions, enhancing the model’s robustness.

- Flattening and Feature Fusion: The feature maps extracted by the CNN are typically multidimensional. To input them into the multihead attention mechanism and fully connected layers, the feature maps need to be flattened into one-dimensional vectors. The flattened vectors contain information about the dynamics of the time series and the interactions of multiple features extracted from the MTF matrices.

- Multihead Attention Mechanism: The flattened feature vectors are input into the multihead attention mechanism. The multihead attention mechanism learns the dependencies between different elements in the feature vectors and assigns different weights to different feature elements. Through parallel computations of multiple attention heads, the model can focus on key features from different perspectives, thereby enhancing the model’s feature representation capability. The output of the attention mechanism is a weighted aggregated feature vector.

- Fully Connected Layer and Classification Output: The feature vector processed by the multihead attention mechanism is input into the fully connected layer. The fully connected layer maps the attention-weighted features to the final class labels. The output layer typically uses the Softmax function to convert the output into a probability distribution for each class, thus achieving classification prediction or fault identification.
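The flattening and classification-output steps in the list above reduce to a few lines of linear algebra. The sketch below is a minimal illustration that skips the attention stage: the 4 × 4 × 8 feature-map size, the three classes, and the deterministic weights are all hypothetical stand-ins for what a trained network would produce.

```matlab
% Stand-in for CNN output: 4-by-4 feature maps with 8 channels (assumed sizes)
featureMaps = reshape(sin(1:4*4*8), 4, 4, 8);
numClasses  = 3;                         % assumed number of fault classes

% Flattening: multidimensional feature maps -> one-dimensional vector
v = featureMaps(:);                      % 128-by-1

% Fully connected layer: hypothetical weights and zero bias
Wfc = 0.1 * reshape(cos(1:numClasses*numel(v)), numClasses, numel(v));
bfc = zeros(numClasses, 1);
z   = Wfc * v + bfc;                     % class scores (logits)

% Softmax: convert scores into a probability distribution over classes
z = z - max(z);                          % subtract the maximum for numerical stability
p = exp(z) / sum(exp(z));

[~, predictedClass] = max(p);            % index of the most probable class
```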

Matlab Implementation Details

To implement the MTF-CNN-Multihead-Attention model in Matlab, the Deep Learning Toolbox is used. Key implementation details are as follows:

- Implementation of MTF Transformation Function: Write a Matlab function that accepts a one-dimensional time series as input and returns the corresponding MTF matrix. It can be implemented by assigning each point to a quantile bin, estimating the first-order Markov transition matrix between bins, and mapping the transition probabilities onto every pair of time points. For multi-feature data, each feature is processed in a loop to generate its own MTF matrix.

- Construction of CNN Model: Use Matlab’s Deep Learning Toolbox to construct the CNN model. Functions such as `convolution2dLayer`, `reluLayer`, and `maxPooling2dLayer` can be used to define convolutional layers, activation functions, and pooling layers. Depending on the actual task and data characteristics, different numbers and configurations of convolutional and pooling layers can be designed.

- Implementation of Multihead Attention Mechanism: Multihead attention can be built either from the attention functions that the Deep Learning Toolbox provides or as a custom layer. In both cases, the implementation constructs the Query, Key, and Value matrices, calculates the attention weights, performs the weighted summation, and concatenates and linearly transforms the outputs of the attention heads. Writing a custom layer requires some familiarity with the internal mechanisms of the toolbox.

- Model Connection and Training: Feed the MTF-transformed data into the CNN, flatten the CNN output, pass it through the multihead attention mechanism, and finally pass the attention output through the fully connected layer and output layer. The `layerGraph` function can conveniently connect different layers to construct the entire model. Then use `trainingOptions` to set training parameters (such as the optimizer, learning rate, and maximum number of epochs) and the `trainNetwork` function to train the model.

- Data Preparation and Division: Prepare a multi-feature time series dataset for training and testing. Divide the dataset into training, validation, and test sets. Before performing MTF transformation, data normalization and other preprocessing operations may be necessary.

-

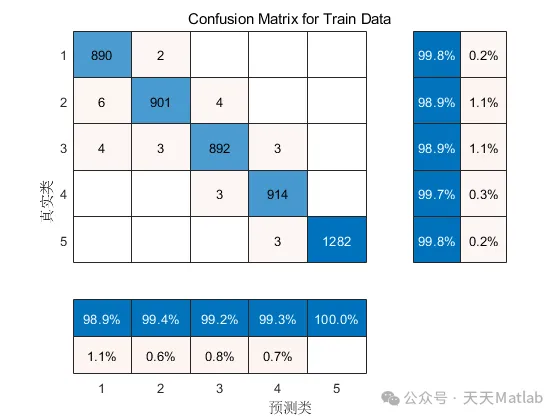

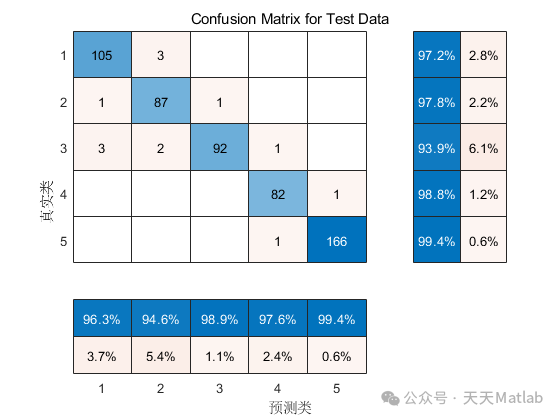

Performance Evaluation: Evaluate the model’s performance on the test set. Common evaluation metrics include accuracy, precision, recall, F1-score, and confusion matrix.
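The CNN construction and training setup from the list above can be sketched as a layer array plus a `trainingOptions` call. This is only a configuration fragment under assumptions: the input size, filter counts, class count, and hyperparameter values are illustrative, and the multihead attention stage is deliberately left out because how it is added depends on the toolbox release or on a custom layer. It would sit between the last pooling layer and the fully connected layer.

```matlab
% Assumed sizes (hypothetical): N-by-N MTF images with P feature channels
N = 64;  P = 3;  numClasses = 4;

layers = [
    imageInputLayer([N N P], 'Normalization', 'none')

    convolution2dLayer(3, 16, 'Padding', 'same')   % 16 filters of size 3-by-3
    batchNormalizationLayer
    reluLayer
    maxPooling2dLayer(2, 'Stride', 2)

    convolution2dLayer(3, 32, 'Padding', 'same')   % 32 filters of size 3-by-3
    batchNormalizationLayer
    reluLayer
    maxPooling2dLayer(2, 'Stride', 2)

    % (attention stage would be inserted here)

    fullyConnectedLayer(numClasses)
    softmaxLayer
    classificationLayer];

options = trainingOptions('adam', ...
    'InitialLearnRate', 1e-3, ...
    'MaxEpochs', 30, ...
    'MiniBatchSize', 32, ...
    'Shuffle', 'every-epoch', ...
    'Verbose', false);
```

With `XTrain` stored as an N × N × P × numSamples array and `YTrain` as a categorical label vector, training would then be started with `net = trainNetwork(XTrain, YTrain, layers, options)`.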

Experiments and Result Analysis

To verify the effectiveness of the MTF-CNN-Multihead-Attention model in multi-feature classification prediction/fault identification tasks, experiments can be conducted for specific application scenarios. For example, industrial equipment operational data (containing time series signals collected from multiple sensors) can be used for fault identification, or multi-dimensional time series data from financial markets can be used for stock trend prediction.

The experimental steps are roughly as follows:

- Dataset Preparation: Obtain and organize a multi-feature time series dataset, and perform preprocessing and division.

- MTF Transformation: Perform MTF transformation on the dataset to generate MTF matrices for CNN input.

- Model Construction and Training: Construct the MTF-CNN-Multihead-Attention model and train it using the training set. During training, the validation set can be used to monitor the model’s performance and adjust hyperparameters.

- Model Evaluation: Evaluate the performance of the trained model on the test set and calculate the corresponding evaluation metrics.

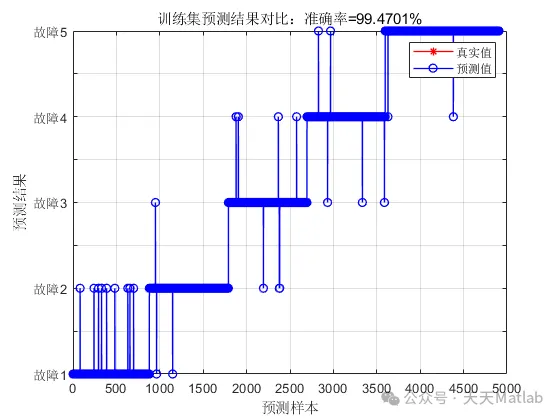

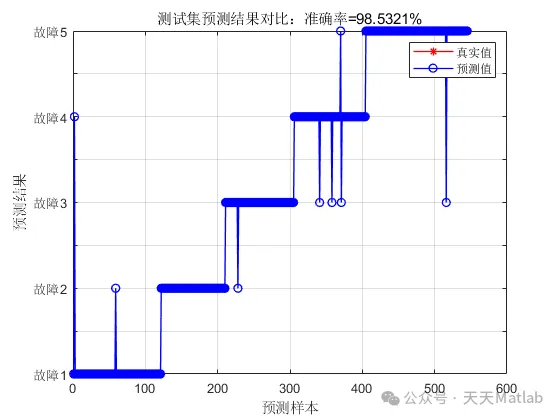

- Result Analysis: Analyze the classification results of the model, such as the confusion matrix, to understand the model’s performance across different categories. Compare with traditional machine learning methods or standalone CNN models to analyze the performance improvement brought by MTF transformation and multihead attention mechanism.
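The evaluation step can be illustrated with a small toolbox-free computation of the confusion matrix and the per-class metrics. The label vectors below are made-up values for a three-class problem, used only to show the arithmetic.

```matlab
% Hypothetical true and predicted class indices for 10 test samples
yTrue = [1 1 1 2 2 2 3 3 3 3];
yPred = [1 1 2 2 2 3 3 3 3 1];
numClasses = 3;

% Confusion matrix: rows = true class, columns = predicted class
C = zeros(numClasses);
for k = 1:numel(yTrue)
    C(yTrue(k), yPred(k)) = C(yTrue(k), yPred(k)) + 1;
end

accuracy  = sum(diag(C)) / sum(C(:));
precision = diag(C)' ./ max(sum(C, 1), 1);      % correct / predicted, per class
recall    = diag(C)' ./ max(sum(C, 2)', 1);     % correct / actual, per class
f1        = 2 * precision .* recall ./ max(precision + recall, eps);
```

For these labels the confusion matrix is `[2 1 0; 0 2 1; 1 0 3]` and the overall accuracy is 0.7. Reading along a row shows which classes a given fault type is most often mistaken for.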

Potential Application Scenarios

The MTF-CNN-Multihead-Attention model has broad application prospects in many fields, such as:

- Industrial Fault Diagnosis: Classifying and predicting fault types using operational data collected from multiple sensors.

- Financial Market Prediction: Using time series data from various financial indicators such as stocks and futures for price trend or risk prediction.

- Medical Diagnosis: Classifying and diagnosing diseases using time series data from multiple physiological signals (e.g., ECG, EEG).

- Environmental Monitoring: Predicting pollutant concentrations or identifying abnormal events using time series data from multiple environmental parameters.

