The startup speed of an embedded system depends on the device’s performance and the quality of its code. From the consumer’s perspective, the faster the system starts, the better, so optimizing performance to shorten startup time is a crucial task in the later stages of a project. Note that optimizing an embedded Linux device is not a one-time effort but a continuous process of improvement.

Standard for Startup Speed

Currently, there is no unified standard for device startup speed. In practice, the target is set by the customer’s requirements for the project.

Performance Evaluation

For developers, evaluating device performance is generally done by adding logs in the code. This method has the following advantages:

1. High Accuracy

It is usually accurate to the millisecond. Where needed, it can be precise to the microsecond, for example by using the gettimeofday function.

2. High Flexibility

It can measure the time taken by any part of the code.
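
As an illustration of log-based timing, the following is a minimal sketch built on gettimeofday. The helper names `elapsed_us` and `log_duration` and the `[perf]` log prefix are assumptions made for this example, not part of any existing code base.

```c
#include <stdio.h>
#include <sys/time.h>

/* Elapsed time between two timestamps, in microseconds. */
long long elapsed_us(const struct timeval *start, const struct timeval *end)
{
    return (long long)(end->tv_sec - start->tv_sec) * 1000000LL
         + (end->tv_usec - start->tv_usec);
}

/* Hypothetical helper: time one call of fn and log the result. */
long long log_duration(const char *label, void (*fn)(void))
{
    struct timeval start, end;

    gettimeofday(&start, NULL);
    fn();
    gettimeofday(&end, NULL);

    long long us = elapsed_us(&start, &end);
    fprintf(stderr, "[perf] %s took %lld us\n", label, us);
    return us;
}
```

A call such as `log_duration("load_config", load_config)` can wrap any function that takes no arguments. Because gettimeofday reports wall-clock time, the result is distorted if the system clock is adjusted during the measurement; `clock_gettime(CLOCK_MONOTONIC, ...)` is a common alternative in that case.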

Reasons for Poor Performance

In embedded devices, the reasons for long startup times and poor performance generally include the following aspects:

1. Hardware Reasons

Hardware reasons generally refer to the performance of the device’s CPU and Flash. If the code has a heavy computational load, the limits of the CPU and Flash keep the CPU overly busy. To cut costs, some devices also ship with very little Flash, so many items must be stored compressed, and decompressing them at startup takes additional time.

2. Program Reasons

The code may perform a large number of IO operations, such as reading and writing files, and memory accesses, which often leave the CPU waiting. In addition, the way some code is written makes processes wait on each other, which lowers CPU utilization and limits device performance.

Principles of Optimization

Optimization should not be done blindly; pursuing performance without consideration can lead to issues. Generally, the following principles should be followed:

1. Principle of Equivalence

The functionality of the code before and after optimization must be completely consistent.

2. Principle of Effectiveness

The optimized code must run faster than the original, occupy less storage space, or both; otherwise, the optimization is meaningless.

3. Principle of Economy

Many poorly performing parts of the code are also constrained by the hardware, for example files that are compressed to save storage cost. Optimization should work within the existing conditions and should not simply trade storage space for decompression time. It should also come at minimal cost; complaining about limited device performance while demanding improvements is counterproductive.

Methods of Optimization

The optimization methods proposed here mainly focus on code-level considerations, excluding hardware upgrades.

Shell script optimization:

The vast majority of embedded devices use BusyBox to implement the Linux commands, because BusyBox provides a relatively complete environment that suits small embedded systems.

BusyBox is a single program that integrates more than one hundred of the most commonly used Linux commands and tools. It includes simple tools such as ls, cat, and echo, as well as larger and more complex ones such as grep, find, mount, and telnet. Some people call BusyBox the Swiss Army Knife of Linux tools. In simple terms, BusyBox is a large toolbox that bundles many Linux tools and commands, including its own built-in shell.

BusyBox includes three types of commands:

- APPLET: the ordinary applets. BusyBox calls fork to create a subprocess, which then calls exec to run the corresponding function; control returns to the parent process on completion.

- APPLET_NOEXEC: BusyBox calls fork to create a subprocess, which then runs the corresponding function inside BusyBox directly, without calling exec; control returns to the parent process on completion.

- APPLET_NOFORK: equivalent to built-in commands. BusyBox’s internal function is executed without creating a subprocess, so this type is the most efficient.

As is well known, calling fork and exec in Linux is time-consuming, so we should use APPLET_NOFORK commands as much as possible, followed by APPLET_NOEXEC, and finally APPLET.

In BusyBox 1.9, the commands belonging to APPLET_NOFORK include:

basename, cat, dirname, echo, false, hostid, length, logname, mkdir, pwd, rm, rmdir, seq, sleep, sync, touch, true, usleep, whoami, yes

Commands belonging to APPLET_NOEXEC include:

awk, chgrp, chmod, chown, cp, cut, dd, find, hexdump, ln, sort, test, xargs...

Thus, the strategies for optimizing shell scripts generally include:

1. Remove unnecessary scripts

2. Use BusyBox internal commands as much as possible

3. Avoid using pipes

4. Reduce the number of commands in pipes

5. Avoid using backticks (`), since command substitution starts an additional subprocess

Optimizing Process Startup Speed

The process startup sequence is as follows:

1. Search for its dependent dynamic libraries

2. Load dynamic libraries

3. Initialize dynamic libraries

4. Initialize the process

5. Transfer control of the program to the main function

To speed up the startup of the process, the following aspects can be considered:

1. Reduce the number of dynamic libraries loaded

a) Use dlopen to defer loading unnecessary dynamic libraries

b) Convert some dynamic libraries to static libraries

Advantages: Fewer dynamic libraries are loaded. Once the library is merged into another dynamic library, its functions no longer need dynamic linking or symbol lookup, which improves speed.

Disadvantages: If several other dynamic libraries or processes depend on this library, it is copied into each of them, which increases the overall file size and occupies more Flash memory.

The code segment of the original dynamic library is also no longer shared in memory, which may increase memory usage.
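
Deferred loading with dlopen, as in option a), might look like the sketch below. The choice of `libm.so.6` and its `cos` function is only an assumption for illustration, and `lazy_cos` is a hypothetical wrapper name; in a real project the deferred library would be one that is not needed on the startup path.

```c
#include <dlfcn.h>
#include <stdio.h>

/* Signature of cos() from libm, resolved at run time. */
typedef double (*cos_fn)(double);

static void *math_handle;   /* NULL until the library is first needed */

/* Load the library on first use and call cos() through it.
 * Returns 0 on success and stores the result in *out, -1 on failure. */
int lazy_cos(double x, double *out)
{
    if (math_handle == NULL) {
        /* RTLD_LAZY: resolve function symbols only when first called. */
        math_handle = dlopen("libm.so.6", RTLD_LAZY);
        if (math_handle == NULL) {
            fprintf(stderr, "dlopen failed: %s\n", dlerror());
            return -1;
        }
    }

    dlerror();  /* clear any stale error before dlsym */
    cos_fn fn = (cos_fn)dlsym(math_handle, "cos");
    if (fn == NULL) {
        fprintf(stderr, "dlsym failed: %s\n", dlerror());
        return -1;
    }

    *out = fn(x);
    return 0;
}
```

With this pattern the library no longer appears in the executable's dependency list, so the loader skips it at startup and the cost is paid on the first call instead. On older glibc versions the program must be linked with `-ldl`.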

2. Optimize the search path when loading dynamic libraries

a) Set LD_HWCAP_MASK to disable some unused hardware features.

b) Place all dynamic libraries in one directory and put that directory at the beginning of LD_LIBRARY_PATH.

c) If they are not placed in one directory, pass the -rpath option to the linker when building the process to specify the search path.

If these measures still do not meet the startup-speed requirement, the process scheduling itself can be reworked: change the process into a thread by splitting the original process into two parts.

The resident part is a daemon process primarily responsible for loading the dynamic libraries the process needs, listening for user signals, and creating and destroying user logic threads. The user logic part is created by the daemon on demand. This skips loading the dynamic libraries and initializing the libraries and global variables, which shortens the time the process needs to respond to the user.

This can be extended further: the resident parts of several original daemon processes can be merged, creating different processes according to the user logic required.

Advantages: Creating a thread does not reload the dynamic libraries, which shortens the response time of the process. When several business logics share dynamic libraries, the system does not have to create data segments for each business logic, which saves a significant amount of memory.

Disadvantages: Changing existing processes into threads involves significant work, and the code modifications carry some risk. When several business logic threads share dynamic libraries, global variables may conflict.

Because the daemon part stays resident, its stack memory is never released, and memory leaks from multiple business logic threads become entangled, which complicates debugging.

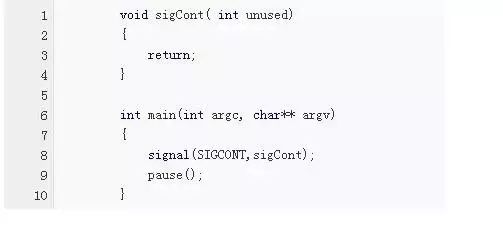

3. Preload Processes

Insert a line of code in the main function of the process:

pause();

This way, once the process has started and loaded its dynamic libraries, it pauses here without executing user logic. When a response to the user is needed, a signal for which the process has installed a handler is sent to it, so that it continues and handles the user logic. The time for loading dynamic libraries is thereby taken out of the response.

When user logic execution is complete, the process exits, and then this process is restarted, which will pause after loading the dynamic libraries.

Preloading and delayed exit.

When a process takes a long time to start, many programmers consider only preloading (starting it at boot time) without considering the exit conditions, which leaves an additional resident daemon process in the system. Preloading and delayed exit therefore require more precise control over the process lifecycle.

Adjusting CPU Frequency:

- In embedded devices, the CPU generally has several operating frequencies

- The higher the CPU frequency, the faster the code runs, but the higher the power consumption

- The CPU frequency can be raised before startup and lowered again once startup is complete

- This method increases power consumption and may not be suitable in some situations
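
On kernels with the cpufreq subsystem enabled, one way to raise and lower the frequency is to switch the scaling governor through sysfs. The sketch below assumes the standard sysfs node for CPU 0 and that the `performance` and `powersave` governors are built into the kernel; both depend on the platform, and the function names are hypothetical.

```c
#include <stdio.h>

/* Write a short string to a sysfs-style file. Returns 0 on success. */
int write_setting(const char *path, const char *value)
{
    FILE *f = fopen(path, "w");
    if (f == NULL)
        return -1;

    int ok = (fputs(value, f) >= 0);
    if (fclose(f) != 0)
        ok = 0;
    return ok ? 0 : -1;
}

/* Assumed path: the usual cpufreq node for CPU 0; verify on the target. */
#define CPU0_GOVERNOR "/sys/devices/system/cpu/cpu0/cpufreq/scaling_governor"

/* Run the CPU at its highest frequency while the system starts. */
int boost_for_startup(void)
{
    return write_setting(CPU0_GOVERNOR, "performance");
}

/* Fall back to a power-saving policy once startup is complete. */
int relax_after_startup(void)
{
    return write_setting(CPU0_GOVERNOR, "powersave");
}
```

Writing to this node normally requires root privileges, so both functions return -1 when run as an ordinary user.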

4. Code Optimization

The if expression

An if condition is evaluated from left to right; once the result is determined, the remaining sub-expressions are not evaluated. This is commonly called the “short-circuit” mechanism. For if statements, the following optimizations can therefore be made:

- Remove redundant conditions

- Remove conditions that are certainly false

- Use the short-circuit mechanism by placing the fastest-evaluating expression on the left

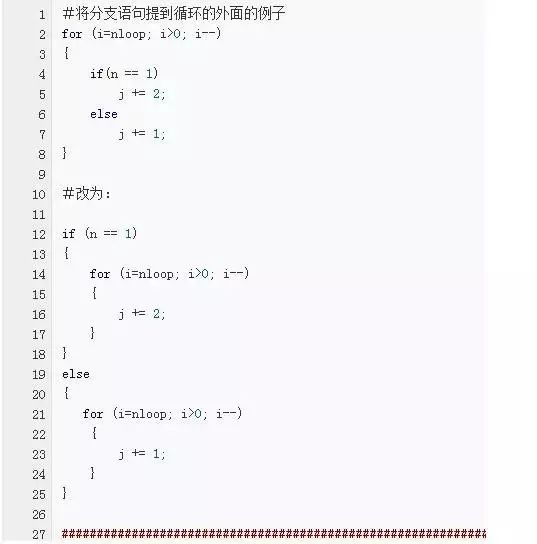

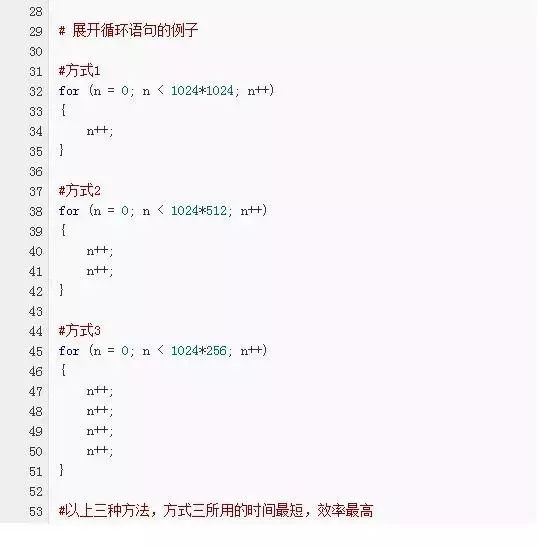
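
The effect of ordering conditions for short-circuit evaluation can be seen in a small sketch. The function names and the call counter are made up for this example; `contains_token` stands in for any check that is expensive to evaluate.

```c
#include <stdbool.h>
#include <string.h>

static int expensive_calls;   /* counts how often the slow check runs */

/* Stand-in for a slow test, e.g. one that scans a whole buffer. */
static bool contains_token(const char *text)
{
    expensive_calls++;
    return strstr(text, "boot") != NULL;
}

/* Cheap flag test on the left: when enabled is false, && short-circuits
 * and contains_token() is never called. */
bool should_handle(bool enabled, const char *text)
{
    return enabled && contains_token(text);
}
```

Swapping the operands would give the same result but would run the string scan on every call, including the calls where `enabled` is false.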

Optimization of loop statements:

- Move invariant code outside the loop

- Move branch statements outside the loop

- Unroll the loop to reduce the number of iterations, which reduces the impact of the loop’s branch statements

- Use decrement instructions instead of increment instructions, that is, count down instead of up
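
The sketch below applies three of these loop techniques by hand to a made-up summation: the invariant `a + b` is hoisted, the `negate` branch is moved out of the loop, and the loop counts down to zero. Function names and parameters are hypothetical.

```c
#include <stddef.h>

/* Straightforward version: the scale factor and the branch are
 * re-evaluated on every iteration. */
long sum_scaled_naive(const int *v, size_t n, int a, int b, int negate)
{
    long sum = 0;

    for (size_t i = 0; i < n; i++) {
        if (negate)
            sum -= (long)v[i] * (a + b);
        else
            sum += (long)v[i] * (a + b);
    }
    return sum;
}

/* Hand-optimized: invariant hoisted, branch moved out, loop counts down. */
long sum_scaled_opt(const int *v, size_t n, int a, int b, int negate)
{
    const long scale = (long)a + b;     /* loop-invariant, computed once */
    long sum = 0;

    for (size_t i = n; i != 0; i--)     /* count down to zero */
        sum += v[i - 1] * scale;

    return negate ? -sum : sum;         /* branch applied once, after the loop */
}
```

An optimizing compiler often performs some of these transformations itself, so it is worth measuring before and after rather than assuming a gain.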

Register usage follows the ATPCS (ARM-Thumb Procedure Call Standard). The ATPCS standard is a guideline that should be followed in embedded development.

- Subroutine parameters are passed through registers R0-R3. The called subroutine does not need to restore the contents of registers R0-R3 before returning.

- In the subroutine, registers R4-R11 are used to save local variables.

- If certain registers R4-R11 are used in the subroutine, their values must be saved upon entry and restored before returning; for registers not used in the subroutine, these operations are unnecessary.

- R12 is used as a scratch register between subroutines, referred to as ip. This rule is often used in the linkage code between subroutines.

- R13 is used as the data stack pointer, referred to as sp. Register R13 cannot be used for other purposes between subroutines.

- R14 is used as the link register, referred to as lr. It saves the return address of the subroutine.

- R15 is the program counter, referred to as pc.

When the subroutine returns a result as a 32-bit integer, it can be returned through register R0; for a 64-bit integer, it can be returned through registers R0 and R1, and so on.

5. Function Parameter Optimization

It is best for a function to have no more than 4 parameters: up to 4 parameters can be passed in registers, while any beyond the fourth must be passed on the stack. Moreover, if there are fewer than 4 parameters, the unused registers among R0-R3 can hold local variables of the function.

6. Reduce the Number of Local Variables

Try to keep the number of local variables used in the inner loops of a function to no more than 12, so that the compiler can allocate all of them to registers.

If no local variables are saved to the stack, the function does not need to set up and restore the stack pointer.

When a function has more than 12 local variables, this does not mean that the first 12 are allocated registers and all later ones are handled through stack memory.

Instead, when all registers are allocated and a new temporary variable appears, the compiler first checks whether any variable that already holds a register is no longer used in later code; if so, the new variable takes over that register. If every allocated variable is still needed later, one of them must be saved to the stack, and its register is then assigned to the new variable.

7. File Operation Optimization

- Use a read/write buffer of 2048 or 4096 bytes for the best speed

- Use mmap for file reading and writing

The basic process of copying a file with mmap is:

– Create a target file of the same size as the source file

– Use mmap to map both the source and target files into memory

– Use memcpy to turn the file read and write operations into a memory copy
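
The three steps can be sketched as follows. The function name `copy_file_mmap` is an assumption for this example, and error handling is kept to the minimum needed to release resources.

```c
#include <fcntl.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

/* Copy src to dst through two memory mappings. Returns 0 on success. */
int copy_file_mmap(const char *src, const char *dst)
{
    struct stat st;
    void *from = MAP_FAILED, *to = MAP_FAILED;
    size_t len = 0;
    int rc = -1;

    int in = open(src, O_RDONLY);
    if (in < 0)
        return -1;
    int out = open(dst, O_RDWR | O_CREAT | O_TRUNC, 0644);
    if (out < 0 || fstat(in, &st) != 0)
        goto done;

    /* Step 1: make the target file the same size as the source. */
    len = (size_t)st.st_size;
    if (ftruncate(out, st.st_size) != 0)
        goto done;
    if (len == 0) {             /* nothing to copy; mmap rejects length 0 */
        rc = 0;
        goto done;
    }

    /* Step 2: map both files into memory. */
    from = mmap(NULL, len, PROT_READ, MAP_PRIVATE, in, 0);
    to = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, out, 0);
    if (from == MAP_FAILED || to == MAP_FAILED)
        goto done;

    /* Step 3: the file copy is now a plain memory copy. */
    memcpy(to, from, len);
    rc = 0;

done:
    if (from != MAP_FAILED)
        munmap(from, len);
    if (to != MAP_FAILED)
        munmap(to, len);
    if (out >= 0)
        close(out);
    close(in);
    return rc;
}
```

The target must be mapped with MAP_SHARED so that the copied bytes are written back to the file; a MAP_PRIVATE mapping would keep the changes in memory only.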

8. Thread Optimization

- Creating a thread has a cost; if threads do very little work and are created and destroyed frequently, the cost is not worth it.

- Use asynchronous IO instead of multi-threading with synchronous IO

- Use a thread pool instead of repeatedly creating and destroying threads
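
A thread pool can be sketched with a fixed set of worker threads and a small job queue, as below. This is a simplified illustration, not a production design: the pool size, the queue capacity, and all names (`pool_start`, `pool_submit`, `pool_stop`, `add_to_total`) are assumptions for this example.

```c
#include <pthread.h>

#define POOL_THREADS 2
#define QUEUE_CAP    16

typedef void (*job_fn)(void *);

struct pool {
    pthread_t workers[POOL_THREADS];
    int nworkers;                       /* threads actually started */
    struct { job_fn fn; void *arg; } queue[QUEUE_CAP];  /* ring buffer */
    int head, count, stopping;
    pthread_mutex_t lock;
    pthread_cond_t not_empty;
};

static void *worker_main(void *p)
{
    struct pool *pool = p;

    for (;;) {
        pthread_mutex_lock(&pool->lock);
        while (pool->count == 0 && !pool->stopping)
            pthread_cond_wait(&pool->not_empty, &pool->lock);
        if (pool->count == 0) {         /* stopping and queue drained */
            pthread_mutex_unlock(&pool->lock);
            return NULL;
        }
        job_fn fn = pool->queue[pool->head].fn;
        void *arg = pool->queue[pool->head].arg;
        pool->head = (pool->head + 1) % QUEUE_CAP;
        pool->count--;
        pthread_mutex_unlock(&pool->lock);

        fn(arg);                        /* run the job outside the lock */
    }
}

/* Let the workers drain the queue, then join them. */
void pool_stop(struct pool *pool)
{
    pthread_mutex_lock(&pool->lock);
    pool->stopping = 1;
    pthread_cond_broadcast(&pool->not_empty);
    pthread_mutex_unlock(&pool->lock);

    for (int i = 0; i < pool->nworkers; i++)
        pthread_join(pool->workers[i], NULL);
    pthread_mutex_destroy(&pool->lock);
    pthread_cond_destroy(&pool->not_empty);
}

/* Create the worker threads once, up front. Returns 0 on success. */
int pool_start(struct pool *pool)
{
    pool->nworkers = pool->head = pool->count = pool->stopping = 0;
    pthread_mutex_init(&pool->lock, NULL);
    pthread_cond_init(&pool->not_empty, NULL);

    for (int i = 0; i < POOL_THREADS; i++) {
        if (pthread_create(&pool->workers[i], NULL, worker_main, pool) != 0) {
            pool_stop(pool);            /* clean up threads started so far */
            return -1;
        }
        pool->nworkers++;
    }
    return 0;
}

/* Queue a job for any idle worker. Returns -1 if the queue is full. */
int pool_submit(struct pool *pool, job_fn fn, void *arg)
{
    int rc = -1;

    pthread_mutex_lock(&pool->lock);
    if (pool->count < QUEUE_CAP && !pool->stopping) {
        int tail = (pool->head + pool->count) % QUEUE_CAP;
        pool->queue[tail].fn = fn;
        pool->queue[tail].arg = arg;
        pool->count++;
        rc = 0;
        pthread_cond_signal(&pool->not_empty);
    }
    pthread_mutex_unlock(&pool->lock);
    return rc;
}

/* Example job for demonstration: add *arg to a running total. */
static pthread_mutex_t total_lock = PTHREAD_MUTEX_INITIALIZER;
static int total;

void add_to_total(void *arg)
{
    pthread_mutex_lock(&total_lock);
    total += *(int *)arg;
    pthread_mutex_unlock(&total_lock);
}
```

The threads are created once in `pool_start`, so each later `pool_submit` costs only a mutex lock and a condition-variable signal rather than a thread creation. On older glibc versions the program must be built with `-pthread`.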

9. Memory Operation Optimization

Memory access process:

- The CPU attempts to access a block of memory

- The CPU first checks whether that memory is already in the cache

- If it is in the cache, the CPU reads it directly from the cache

- If it is not in the cache, the CPU sends the high 27 bits of the address to memory over the address bus that connects the CPU directly to memory

- On receiving the high 27 bits of the address, memory uses the SDRAM burst transfer mode to send 32 consecutive bytes to the CPU’s cache, filling one cache line

- The CPU then uses the high 27 bits of the address to locate the cache line and the low 5 bits to locate the specific byte within it

Based on this process:

- Try to use algorithms that occupy less memory

- Exploit the fact that pipelined memory access and computation run in parallel by interleaving memory access with computation
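
The 27-bit and 5-bit address split described in this section corresponds to a 32-bit address and a 32-byte cache line. A small sketch of the arithmetic, with hypothetical function names:

```c
#include <stdint.h>

#define LINE_BITS 5u                      /* 2^5 = 32-byte cache line */
#define LINE_SIZE (1u << LINE_BITS)

/* High 27 bits: which 32-byte line of memory the address falls in. */
uint32_t line_number(uint32_t addr)
{
    return addr >> LINE_BITS;
}

/* Low 5 bits: byte position inside that line. */
uint32_t line_offset(uint32_t addr)
{
    return addr & (LINE_SIZE - 1u);
}

/* Two addresses share a cache line when their high 27 bits match. */
int same_line(uint32_t a, uint32_t b)
{
    return line_number(a) == line_number(b);
}
```

For the address 0x1234, `line_number` gives 0x91 and `line_offset` gives 0x14. Data that is used together benefits from sitting in the same line, because one burst transfer then serves all of the accesses.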

10. Adjusting Process Priority

Linux supports two types of processes: real-time processes and normal processes.

The priority of real-time processes is set statically and is always higher than that of normal processes. Real-time processes use an absolute priority; on Linux the real-time values range from 1 to 99 (0 is reserved for normal processes), and the higher the number, the higher the priority.

The absolute priority value for normal processes is 0. Among normal processes, there are static and dynamic priorities. Static priority can be modified through programs. The system continuously calculates the dynamic priority of each process based on the static priority during operation, with the process having the highest dynamic priority being selected by the scheduler. Generally, the higher the static priority, the longer the time slice allocated to the process.

Avoid placing certain processes in startup scripts; try to start daemon processes upon first use.
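
Both kinds of priority described in this section can be changed from a program. The sketch below uses the POSIX calls sched_setscheduler and setpriority; the wrapper names `make_realtime` and `lower_priority` are assumptions for this example.

```c
#include <errno.h>
#include <sched.h>
#include <sys/resource.h>

/* Try to make the calling process a real-time SCHED_FIFO process.
 * Usually requires root or CAP_SYS_NICE; returns -1 on failure. */
int make_realtime(int priority)
{
    struct sched_param sp = { .sched_priority = priority };

    if (priority < sched_get_priority_min(SCHED_FIFO) ||
        priority > sched_get_priority_max(SCHED_FIFO)) {
        errno = EINVAL;
        return -1;
    }
    return sched_setscheduler(0, SCHED_FIFO, &sp);
}

/* Lower the static priority of a normal process by raising its nice
 * value by delta (nice 19 is the lowest priority). Returns 0 on success. */
int lower_priority(int delta)
{
    errno = 0;
    int current = getpriority(PRIO_PROCESS, 0);
    if (current == -1 && errno != 0)
        return -1;

    int target = current + delta;
    if (target > 19)
        target = 19;
    return setpriority(PRIO_PROCESS, 0, target);
}
```

A process that is not needed during startup could call `lower_priority` on itself so that startup-critical processes get more CPU time, while `make_realtime` is reserved for the few processes that must never be delayed. Raising priority (a negative `delta`) normally requires privileges as well.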
