More and more enterprises are adopting virtualization to use IT resources and applications more efficiently while reducing operational costs. With traditional physical servers, a server failure affects only the applications on that server, and the impact can be contained through measures such as cluster high availability. In a virtualized environment, however, if a physical host fails, every virtual machine running on it fails as well.

Although virtualization centralizes resource management, the impact of failures is broader, making the cluster high availability feature particularly important in virtualization. Protecting physical servers is not enough; it is also essential to protect virtual servers that contain critical business data and information. Virtual servers provide flexibility, but at the same time, if a physical server hosting multiple virtual servers fails, it can lead to significant data loss.

This article mainly discusses how to optimize the existing VMware virtualization environment by restructuring it into a high availability architecture. High availability is achieved through dual-link redundancy at the network resource layer, compute resource layer, and storage resource layer. Before making any architectural changes, it is crucial to have a thorough understanding of the existing environment and to grasp the impact of changes on business operations.

- Understand the shortcomings of the existing architecture before adjusting it.
- Resolve any existing issues in the environment before making architectural changes.
- Confirm how well the existing architecture fits current business needs.
- Assess in advance the workload and dependencies involved in transforming the existing architecture.

The following sections will detail how to adjust the existing VMware architecture to a high availability dual-link architecture.

Background of High Availability Solutions

VMware vSphere is a server virtualization solution launched by VMware, consisting of a software suite that mainly includes ESXi for host virtualization, vCenter for virtualization management, Update Manager for upgrades, and Auto Deploy for automated deployment.

The previous virtualization environment had been running for two years and worked well overall. However, any fault, whether in the network devices, the SAN switches, or the compute nodes, affected business operations. Virtual machine management and migration ran over gigabit network switches, HA was not enabled on the clusters, and SAN zoning was not clearly structured and had no standardized naming, which delayed virtual machine migration after failures. Business traffic was high while network bandwidth was limited, and a damaged hardware link could bring down the whole virtualization environment and disrupt normal business operations. Because vMotion and Storage vMotion performed poorly in this architecture, migrating workloads during hardware or system changes was difficult, putting significant pressure on operations and maintenance.

Principle of VMware vMotion Migration

vMotion is one of VMware's most powerful features and helps operations staff avoid business disruption when a single host needs maintenance. Understanding how vMotion migration works lays a solid foundation for better architectural design.

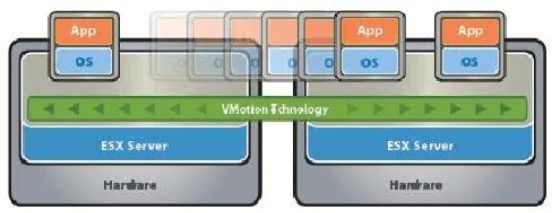

VMware vMotion live-migrates a running virtual machine from one server to another with zero downtime, significantly improving server availability while preserving the integrity of transaction data. Administrators can migrate virtual machines manually from one server to another, allowing the original server to be upgraded or maintained without interrupting service. Virtual machines can also be migrated automatically across multiple servers to balance load and improve resource utilization. Combined with features such as vSphere HA, this helps keep services highly available. The working principle of vMotion is illustrated in Figure 1, and that of Storage vMotion in Figure 2:

Figure 1. vMotion Migration Principle Diagram

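
vMotion's memory transfer is generally described as iterative pre-copy: memory is copied while the VM keeps running, pages dirtied during each pass are sent again, and the VM is paused only briefly to copy the small remainder. The sketch below is a simplified model of that idea, not VMware's implementation; the function name, memory size, dirty rate, link speed, and stop threshold are all hypothetical.

```python
def precopy_rounds(mem_gb: float, dirty_gb_per_s: float, link_gbit_per_s: float,
                   stop_gb: float = 0.5, max_rounds: int = 30) -> tuple[int, float]:
    """Simplified iterative pre-copy model (hypothetical, not VMware's algorithm).

    Returns (pre-copy rounds performed, GB still to copy during the final pause).
    """
    link_gb_per_s = link_gbit_per_s / 8   # gigabits/s -> gigabytes/s
    remaining = mem_gb                    # the first pass copies all memory
    rounds = 0
    while remaining > stop_gb and rounds < max_rounds:
        copy_seconds = remaining / link_gb_per_s
        # Pages dirtied while this pass was running must be sent again;
        # the amount can never exceed the VM's total memory.
        remaining = min(mem_gb, dirty_gb_per_s * copy_seconds)
        rounds += 1
    return rounds, remaining


# Hypothetical 16 GB VM dirtying 0.2 GB of memory per second.
print(precopy_rounds(16, 0.2, 10))  # converges after 2 passes
print(precopy_rounds(16, 0.2, 1))   # dirty rate exceeds link rate: never converges
```

With the hypothetical 1 Gbit/s link, the dirty rate (1.6 Gbit/s) exceeds the link rate, so the remaining data never shrinks. This illustrates why a gigabit vMotion network can struggle with busy VMs.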

Terminology Explanation:

-

vMotion: A unique technology developed by VMware that fully virtualizes servers, storage, and network devices, allowing an entire running virtual machine to be instantly moved from one server to another. The entire state of the virtual machine is encapsulated by a set of files stored in shared storage, and VMware’s VMFS clustered file system allows both the source and target VMware ESX to access these virtual machine files simultaneously.

-

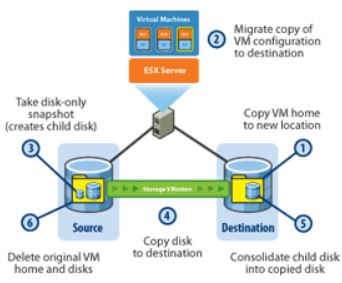

Storage vMotion: A feature introduced by VMware for storage migration, allowing the storage location of a virtual machine to be changed while it is powered on. Like vMotion, the entire migration process is transparent to the user, and applications will not be interrupted.

Storage vMotion, another powerful VMware feature, makes storage management much easier for administrators: a VM's storage can be migrated online whenever needed without affecting normal business operations. vMotion and Storage vMotion can also be combined to change both a VM's host and its datastore in a single migration.

Figure 2. Storage vMotion Principle Diagram


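Explanation of the principle diagram (these steps follow Storage vMotion's original snapshot-based design; from vSphere 5.0 onward, the child-disk step is replaced by I/O mirroring, while the overall flow is similar):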

- Before moving any disk files, Storage vMotion creates a new home directory for the virtual machine in the target datastore.
- Next, a new virtual machine instance is created, using the configuration stored in the new datastore.
- Storage vMotion then creates a child disk for each virtual disk being moved; the child disk captures all write activity while the parent disk is held read-only.
- The original parent disk is copied to the new storage location.
- The child disk is re-parented to the newly copied parent disk in the new location, and its writes are then consolidated into that parent.
- Once the transfer to the new virtual machine instance is complete, the original instance is shut down and the original home directory at the source location is deleted from VMFS.
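
To make the child-disk steps above concrete, here is a toy model written for illustration only: the parent disk is frozen, new writes land in a child disk, the parent is copied, and the child is re-parented onto the copy and merged into it. Python dicts stand in for VMDK files; `Disk` and `storage_vmotion` are hypothetical names, and the model ignores concurrency, the home-directory steps, and the final switchover.

```python
class Disk:
    """Toy virtual disk: block number -> data, optionally backed by a parent disk."""

    def __init__(self, blocks=None, parent=None):
        self.blocks = dict(blocks or {})
        self.parent = parent
        self.read_only = False

    def read(self, n):
        # A child disk returns its own block if present, otherwise the parent's.
        if n in self.blocks:
            return self.blocks[n]
        return self.parent.read(n) if self.parent else None

    def write(self, n, data):
        if self.read_only:
            raise PermissionError("disk is read-only")
        self.blocks[n] = data


def storage_vmotion(src: Disk, guest_writes) -> Disk:
    """Hypothetical snapshot-style migration of one disk; returns the new parent."""
    src.read_only = True                  # freeze the source parent disk
    child = Disk(parent=src)              # child disk captures new writes
    # Guest writes made while the copy runs; applied up front here, which is
    # equivalent because the frozen parent cannot change during the copy.
    for n, data in guest_writes:
        child.write(n, data)
    new_parent = Disk(src.blocks)         # copy the frozen parent to the target
    child.parent = new_parent             # re-parent the child onto the copy
    for n, data in child.blocks.items():  # consolidate the child's writes
        new_parent.write(n, data)
    return new_parent


src = Disk({0: "a", 1: "b", 2: "c"})
dst = storage_vmotion(src, [(1, "B"), (3, "d")])
print(dst.blocks)
```

The source disk is left untouched, which is what allows the original instance to be discarded only after the switchover succeeds.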

The whole process takes roughly as long as a cold migration and depends on the size of the virtual machine's disks. The final switchover from the original virtual machine to the new instance completes within two seconds and is transparent to application users. Because Storage vMotion's copy engine is independent of the underlying storage type, it can be used with whatever storage you already have.

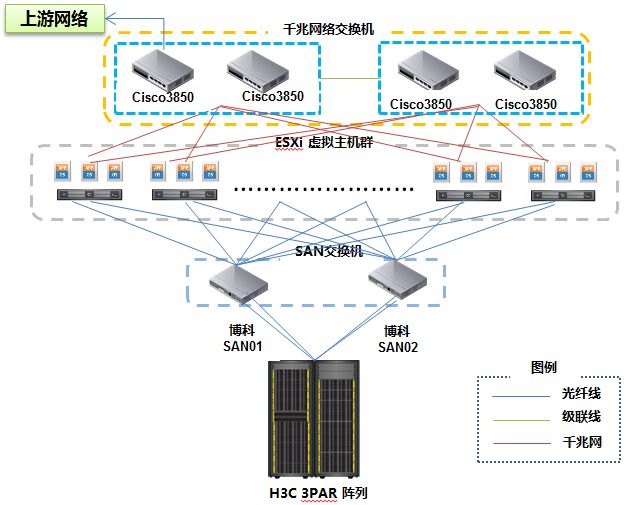

Existing Architecture Design

To make the problems in the existing architecture easier to see, the deployment architecture diagram below was drawn from actual project experience and is explained in the text that follows, as shown in Figure 3:

Figure 3. VMware Deployment Architecture Diagram


Explanation:

1. This is a typical centralized storage architecture, using HP’s 3PAR as the storage for virtual machines.

2. Two Brocade SAN switches are cross-linked.

3. Four Cisco gigabit switches are stacked in pairs, but the gateway and the uplink exit are both on the first switch.

4. All ESXi hosts are connected to both the SAN network and the Ethernet network.

High Availability Architecture

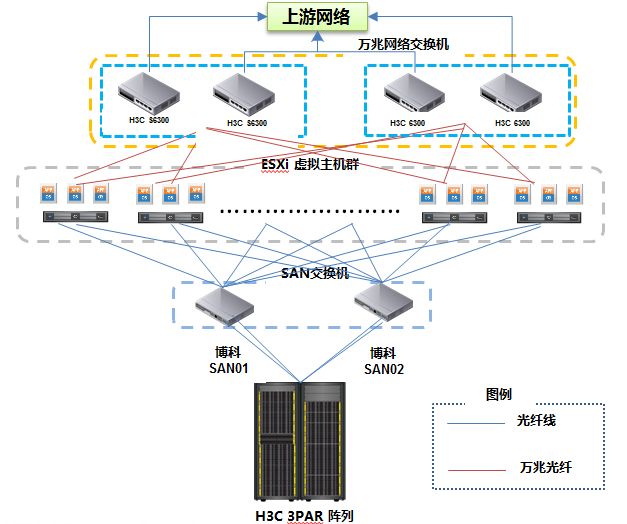

At first glance, the previous architecture seems fine, but on closer inspection the network lacks redundancy: if the first switch unexpectedly goes down, the entire virtualization environment loses network connectivity, directly affecting normal business operations. In addition, all four switches are gigabit switches, which cannot meet current business needs or future expansion. To address these issues, we revised the architecture so that every link is redundant and no single link failure affects normal business operations. The new architecture is shown in Figure 4:

Figure 4. Virtualization Environment Architecture Diagram

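
The redundancy argument can be checked mechanically: treat devices as graph nodes, fail them one at a time, and test whether every host can still reach the upstream network. The sketch below does this for two small hypothetical topologies loosely modeled on the old and new designs; device names such as `sw1`, `acc1`, `agg1`, and `core`, and the exact cabling, are assumptions rather than details taken from the article's diagrams.

```python
from collections import deque


def undirected(edges):
    """Build an adjacency map from (a, b) cable pairs."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def reachable(graph, start, removed, endpoints):
    """Nodes reachable from start, skipping `removed` and never forwarding via endpoints."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node in endpoints:  # hosts terminate links; they do not forward traffic
            continue
        for nxt in graph.get(node, ()):
            if nxt != removed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def single_points_of_failure(graph, hosts, core):
    """Devices whose failure cuts at least one host off from the core."""
    spofs = []
    for node in graph:
        if node in hosts or node == core:
            continue
        alive = reachable(graph, core, node, hosts)
        if not hosts <= alive:
            spofs.append(node)
    return sorted(spofs)


hosts = {"esx1", "esx2"}
# Old design (hypothetical cabling): stacks sw1+sw2 and sw3+sw4; only sw1 uplinks.
old = undirected([("core", "sw1"), ("sw1", "sw2"), ("sw3", "sw4"), ("sw1", "sw3"),
                  ("sw2", "sw4"), ("esx1", "sw1"), ("esx1", "sw2"),
                  ("esx2", "sw3"), ("esx2", "sw4")])
# New design (hypothetical cabling): every host and access switch is dual-homed.
new = undirected([("core", "agg1"), ("core", "agg2"),
                  ("agg1", "acc1"), ("agg1", "acc2"), ("agg2", "acc1"), ("agg2", "acc2"),
                  ("esx1", "acc1"), ("esx1", "acc2"), ("esx2", "acc1"), ("esx2", "acc2")])
print(single_points_of_failure(old, hosts, "core"))  # ['sw1']
print(single_points_of_failure(new, hosts, "core"))  # []
```

The `core` node itself is excluded from the check, so redundancy upstream of the aggregation layer is out of scope for this sketch.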

High Availability Architecture Explanation

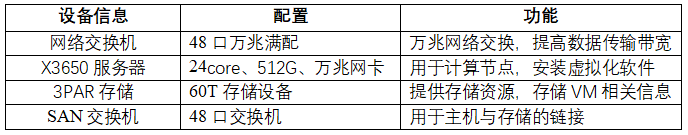

Because the existing hardware could not support the new design, 10-gigabit switches had to be purchased first, and each server had to be fitted with 10-gigabit network cards, as detailed in Table 1.

Table 1. Environment Equipment Description

Design of Compute Nodes

Each X3650 server is fitted with an additional two dual-port 10-gigabit network cards, along with four gigabit network ports and two HBA cards. To improve the performance and stability of the virtualized environment, traffic is separated into management traffic, VMkernel traffic (such as vMotion and FT), and VM traffic, with each type handled by different network cards so that the types do not interfere with one another. The 24 servers are grouped into four clusters used for production and testing. The traffic separation is configured as described in the network configuration section below.
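
A back-of-the-envelope calculation shows why the move to 10-gigabit links matters for vMotion: putting a host into maintenance mode means copying the memory of every running VM on it to other hosts. The figures below (256 GB of VM memory on one host, 80% of line rate actually achieved) are hypothetical, and the estimate ignores re-sent dirty pages, so real migrations take longer.

```python
def evacuation_seconds(vm_memory_gb: float, link_gbit_per_s: float,
                       efficiency: float = 0.8) -> float:
    """Lower-bound time to copy a host's VM memory over a single vMotion link.

    `efficiency` is an assumed fraction of line rate actually achieved.
    """
    bits = vm_memory_gb * 8e9                     # decimal gigabytes -> bits
    return bits / (link_gbit_per_s * 1e9 * efficiency)


for speed in (1, 10):
    minutes = evacuation_seconds(256, speed) / 60
    print(f"{speed:>2} Gbit/s: about {minutes:.1f} minutes")
```

Under these assumptions the copy takes about 43 minutes at 1 Gbit/s versus about 4 minutes at 10 Gbit/s; the tenfold ratio, not the absolute numbers, is the point.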

ESXi Installation

In the actual project, the virtualization environment was built on VMware vSphere 6.0, which offers better stability and functionality than earlier versions.

Administrators installed ESXi remotely on the 24 servers through the IMM (Integrated Management Module) and configured IP addresses, host names, DNS, and other settings so that all hosts could communicate with one another. They also enabled the firewall to block remote logins and strengthen security.
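
Generating the addressing plan before installation makes it easy to check for duplicate IPs and to pre-create matching DNS records. The subnet, starting offset, domain, and `esxNN` naming pattern below are hypothetical; the article does not give the real values.

```python
import ipaddress


def host_plan(count: int, subnet: str, first_offset: int, domain: str):
    """Return (fqdn, ip) pairs for `count` hosts, numbered from first_offset in subnet."""
    net = ipaddress.ip_network(subnet)
    plan = []
    for i in range(count):
        ip = net.network_address + first_offset + i
        if ip not in net or ip in (net.network_address, net.broadcast_address):
            raise ValueError(f"{ip} is not a usable host address in {net}")
        plan.append((f"esx{i + 1:02d}.{domain}", str(ip)))
    return plan


# Hypothetical management subnet and domain.
plan = host_plan(24, "10.10.10.0/24", 11, "example.com")
assert len({ip for _, ip in plan}) == len(plan)  # no duplicate addresses
for fqdn, ip in plan[:3]:
    print(fqdn, ip)
```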

Adding Clusters in vCenter

As planned, four clusters named Prd01, Prd02, Dev01, and Dev02 were created in vCenter, and six hosts were added to each cluster, with host names following the naming plan. The high availability configuration of the hosts is covered in later sections.
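
The cluster plan can be written down as data and validated before anything is created in vCenter. The sketch below assigns consecutive blocks of six hosts to the four clusters named above; the host names and the consecutive-block ordering are assumptions, since the article only states that each cluster holds six hosts.

```python
CLUSTERS = ["Prd01", "Prd02", "Dev01", "Dev02"]
HOSTS_PER_CLUSTER = 6


def cluster_layout(hosts, clusters=CLUSTERS, per_cluster=HOSTS_PER_CLUSTER):
    """Assign consecutive blocks of hosts to clusters, refusing a mismatched plan."""
    if len(hosts) != len(clusters) * per_cluster:
        raise ValueError(f"expected {len(clusters) * per_cluster} hosts, got {len(hosts)}")
    if len(set(hosts)) != len(hosts):
        raise ValueError("duplicate host names")
    return {name: hosts[i * per_cluster:(i + 1) * per_cluster]
            for i, name in enumerate(clusters)}


hosts = [f"esx{n:02d}" for n in range(1, 25)]  # hypothetical host names
layout = cluster_layout(hosts)
for name, members in layout.items():
    print(name, members[0], "...", members[-1])
```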

Network Design

The four network switches were taken out of their previous stacked configuration and now serve as access-layer switches uplinked to the aggregation layer. Two VLANs are defined: prod for production and Dev for development and testing. Because each access switch operates independently, unrelated network configuration is kept to a minimum.

SAN Design

The two Brocade SAN switches connect all 24 hosts and are configured as primary and backup. Each switch is divided into 24 zones, one per host, which isolates each host's I/O path so that one host's traffic or faults do not interfere with other hosts or the storage.
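
With two independent SAN switches, every host should have an HBA port on each switch, so that losing either switch still leaves a path to the 3PAR array. A sketch of that check follows; the inventory format (each host mapped to a list of (HBA, fabric) pairs) and the fabric names are hypothetical.

```python
def hosts_without_dual_fabric(inventory, fabrics=("fabricA", "fabricB")):
    """Return hosts that do not have at least one HBA port on every fabric."""
    required = set(fabrics)
    return sorted(host for host, ports in inventory.items()
                  if not required <= {fabric for _hba, fabric in ports})


inventory = {
    "esx01": [("vmhba1", "fabricA"), ("vmhba2", "fabricB")],
    "esx02": [("vmhba1", "fabricA"), ("vmhba2", "fabricA")],  # both HBAs on one switch
}
print(hosts_without_dual_fabric(inventory))  # ['esx02']
```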

Storage Design

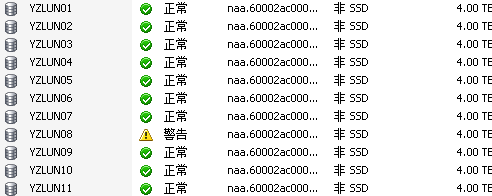

HP's high-end 3PAR array currently stores the VMs and related files. It provides 15 LUNs of 4 TB each (60 TB in total), all formatted as VMFS5 and mounted on every host. Because 3PAR's underlying RAID already provides data redundancy, the LUNs can be presented to the hosts directly.
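
The datastore layout can be sanity-checked with simple arithmetic: 15 LUNs of 4 TB give 60 TB, and each LUN is well under the 64 TB VMFS5 volume limit. The 20% free-space headroom used below is a hypothetical operating policy, not a figure from the article.

```python
VMFS5_MAX_VOLUME_TB = 64  # VMFS5 maximum datastore (volume) size


def datastore_capacity(lun_sizes_tb, headroom=0.2):
    """Return (total TB, TB usable for VMs while keeping `headroom` free)."""
    too_big = [size for size in lun_sizes_tb if size > VMFS5_MAX_VOLUME_TB]
    if too_big:
        raise ValueError(f"LUN sizes exceed the VMFS5 limit: {too_big}")
    total = sum(lun_sizes_tb)
    return total, total * (1 - headroom)


total, usable = datastore_capacity([4] * 15)
print(f"{total} TB provisioned, about {usable:.0f} TB usable with 20% kept free")
```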

Environment Configuration

The sections above described the infrastructure of the virtualization environment from the bottom up, from the storage and SAN switches to the hosts and upper-layer network devices, with all cabling verified and cross-connected so that no single link failure can take the environment down. The following sections describe the specific configurations needed to meet the high availability requirements.

VMware Host High Availability Configuration

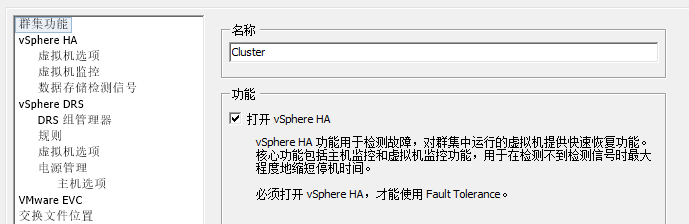

Installing ESXi only completes the basic virtualization setup; its high availability features require additional configuration, and different parameter choices produce different behavior. Many of VMware's advanced features are configured at the cluster level. A cluster acts as an availability zone within which resources are allocated and hosts are managed as a unit, and virtual machines migrate and load-balance automatically within the cluster's boundary. To meet the high availability requirements, vSphere HA must be enabled on each cluster so that if a single host fails, its virtual machines are automatically restarted on other hosts in the cluster, preventing prolonged business interruptions. As shown in Figure 5:

Figure 5. VMware HA Configuration

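
Restarting a failed host's VMs elsewhere only works if the surviving hosts have spare capacity. With six hosts per cluster, tolerating one host failure means reserving roughly one host's share (1/6, about 17%), which is what the percentage-based HA admission control policy expresses. The sketch below is a simplified check with hypothetical host sizes and demand; real admission control also accounts for reservations and memory overhead.

```python
def reserved_percentage(host_count: int, failures: int = 1) -> float:
    """Share of capacity to reserve so `failures` equal-sized hosts can fail."""
    return 100 * failures / host_count


def survives_host_failures(host_capacity, demand, failures=1):
    """True if `demand` still fits after the `failures` largest hosts are lost."""
    if failures >= len(host_capacity):
        return False
    survivors = sorted(host_capacity)[: len(host_capacity) - failures]
    return demand <= sum(survivors)


cluster = [50.0] * 6                         # six hosts, hypothetical 50 GHz each
print(round(reserved_percentage(6), 1))      # 16.7
print(survives_host_failures(cluster, 240))  # True: five hosts give 250 GHz
print(survives_host_failures(cluster, 260))  # False
```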

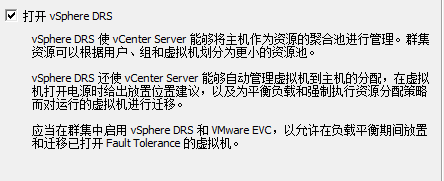

When enabling DRS, fully automated mode is recommended, although some scenarios may call for a different automation level. With DRS enabled, newly powered-on VMs are automatically placed on hosts with sufficient resources, preventing uneven resource distribution. If resource demand changes later, DRS automatically migrates VMs with vMotion according to its policy, without any manual intervention. As shown in Figure 6:

Figure 6. VMware DRS Configuration

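
DRS's actual algorithm weighs many factors (resource entitlements, migration cost, affinity rules) and is not reproduced here. The greedy sketch below only illustrates the basic idea of moving VMs from the busiest host to the least busy one until the load spread falls under a threshold; the function, VM loads, and threshold are hypothetical.

```python
def rebalance(hosts: dict[str, list[float]], threshold: float = 10.0,
              max_moves: int = 20) -> list[tuple[float, str, str]]:
    """Greedy load-balancing sketch (not DRS itself).

    `hosts` maps host name -> list of VM loads (for example, GHz in use).
    VMs are moved in place; the moves are returned as (load, source, target).
    """
    moves = []
    for _ in range(max_moves):
        load = {h: sum(vms) for h, vms in hosts.items()}
        src = max(load, key=load.get)
        dst = min(load, key=load.get)
        gap = load[src] - load[dst]
        if gap <= threshold:
            break
        # Any VM smaller than the gap narrows it; pick the one closest to half the gap.
        candidates = [vm for vm in hosts[src] if vm < gap]
        if not candidates:
            break
        vm = min(candidates, key=lambda v: abs(gap / 2 - v))
        hosts[src].remove(vm)
        hosts[dst].append(vm)
        moves.append((vm, src, dst))
    return moves


cluster = {"esx01": [10, 8, 6, 4], "esx02": [2], "esx03": [3]}
print(rebalance(cluster))
print({h: sum(vms) for h, vms in cluster.items()})
```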

Several of VMware's more advanced features build on DRS or HA (for example, Fault Tolerance requires HA, and Distributed Power Management is part of DRS), so both should be enabled before those features are used.

SAN Configuration

After verifying the cabling, log in to both SAN switches and configure 24 zones, each containing only one host and the storage ports. Then add all zones to a zone configuration named vcf. Only one zone configuration can be active on a fabric at a time, so avoid creating several configurations unless your specific scenario requires them. Once zoning is in place, the hosts in each cluster can see the shared storage LUNs. As shown in Figure 7:

Figure 7. VMware LUN Configuration

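
Generating the zoning commands from an inventory keeps the 24 zones consistently named. The sketch below emits Brocade FOS `zonecreate`, `cfgcreate`, `cfgsave`, and `cfgenable` command lines for one fabric and would be run once per fabric with that fabric's WWPNs. The WWPNs and the `z_<host>_3par` naming pattern are hypothetical, and the generated commands should be reviewed against your FOS version before use.

```python
def zoning_commands(host_wwpns: dict[str, str], storage_wwpns: list[str],
                    cfg: str = "vcf") -> list[str]:
    """Build Brocade FOS command lines for single-initiator zones on one fabric.

    Each zone holds exactly one host HBA WWPN plus the storage target ports.
    """
    commands, zones = [], []
    for host, wwpn in sorted(host_wwpns.items()):
        zone = f"z_{host}_3par"  # zone name uses only letters, digits, and underscores
        members = "; ".join([wwpn, *storage_wwpns])
        commands.append(f'zonecreate "{zone}", "{members}"')
        zones.append(zone)
    zone_list = "; ".join(zones)
    commands.append(f'cfgcreate "{cfg}", "{zone_list}"')
    commands.append("cfgsave")
    commands.append(f'cfgenable "{cfg}"')
    return commands


# Hypothetical WWPNs for this fabric's host HBAs and 3PAR target ports.
hosts = {f"esx{n:02d}": f"10:00:00:00:00:00:00:{n:02x}" for n in range(1, 25)}
targets = ["20:00:00:00:00:00:00:a1", "20:00:00:00:00:00:00:a2"]
commands = zoning_commands(hosts, targets)
print("\n".join(commands[:2] + commands[-3:]))
```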

Network Configuration

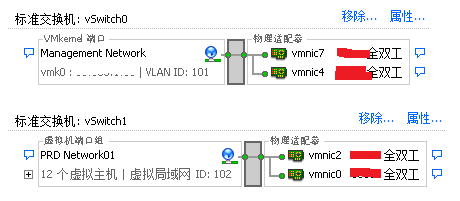

To isolate network traffic by function and provide high availability, each host's four 10-gigabit ports are cross-connected to different virtual switches, so that each virtual switch has uplinks on both network cards. vSwitch0 carries vMotion, FT, and management traffic, while vSwitch1 carries virtual machine traffic. As shown in Figure 8:

Figure 8. VMware Network Configuration

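
The cross-connection rule can be written as a check: each vSwitch should have uplinks on two different network cards and two different physical switches, so that losing a card or a switch never takes a traffic type offline. The vmnic numbers, card labels, and switch names below are hypothetical; in practice this data would come from the host's network inventory.

```python
# Hypothetical uplink inventory: which NIC card and physical switch each port uses.
UPLINKS = {
    "vmnic4": {"card": "nic1", "switch": "acc1"},
    "vmnic5": {"card": "nic1", "switch": "acc2"},
    "vmnic6": {"card": "nic2", "switch": "acc1"},
    "vmnic7": {"card": "nic2", "switch": "acc2"},
}
# Cross-connected layout: each vSwitch takes one port from each card,
# and its two ports land on different physical switches.
VSWITCHES = {
    "vSwitch0": ["vmnic4", "vmnic7"],  # management, vMotion, FT
    "vSwitch1": ["vmnic5", "vmnic6"],  # virtual machine traffic
}


def uplink_problems(vswitches, uplinks):
    """List vSwitches that a single NIC-card or switch failure would disconnect."""
    problems = []
    for name, nics in vswitches.items():
        if len({uplinks[n]["card"] for n in nics}) < 2:
            problems.append(f"{name}: all uplinks on one NIC card")
        if len({uplinks[n]["switch"] for n in nics}) < 2:
            problems.append(f"{name}: all uplinks on one physical switch")
    return problems


print(uplink_problems(VSWITCHES, UPLINKS))  # []
```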

Storage High Availability Design

The revised architecture makes essentially every link highly available; the one remaining weakness is that storage is still a single centralized array. If that array fails, all data becomes inaccessible and may be lost. Budget constraints prevented the project from purchasing additional storage this quarter, so as an interim measure the virtual machines are protected by backups and can be restored from them after a storage failure. If conditions permit, it is still recommended to purchase an additional 3PAR array or an IBM SVC for storage-level redundancy, which can significantly reduce the losses caused by a storage failure and allow business operations to be restored quickly.

With the design above, the single points of failure in the previous architecture's network and hosts have largely been eliminated, improving the availability and reliability of the infrastructure.

Conclusion

This article presents one of VMware's classic architectures, built on centralized storage. In newer versions of VMware vSphere, the limitations of centralized storage can also be removed with vSAN, which uses distributed object storage to spread virtual machine data across different x86 hosts, so that the failure of a single host (under the default storage policy) does not interrupt data access, while also reducing overall infrastructure costs. Interested readers can consult the TWT community or the VMware documentation listed below.

Reference Resources

- Refer to the official VMware documentation for more information on VMware vSphere features.
- Refer to the TWT Community for more VMware usage scenarios.

Author: Zhang Zhiqiang, a senior technical expert at a manufacturing enterprise, with years of experience in cloud computing, virtualization architecture design, enterprise information construction, and automated operations and maintenance.
