Typical Embedded Edge AI Application Scenarios

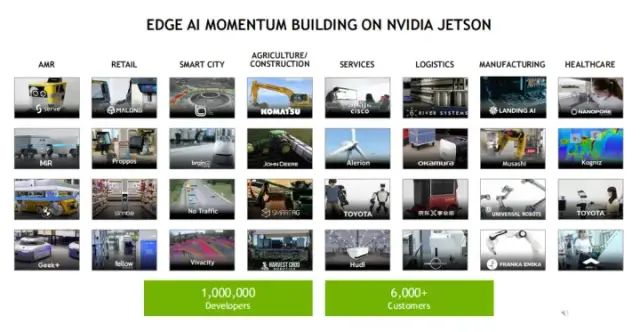

Industrial manufacturing and logistics applications are on the rise. We are seeing a shift driven by labor shortages and growing demands for industrial safety and operational efficiency. Better planning and scheduling in supply chain management are also becoming increasingly important. Together, these trends drive automation in warehouses, mobile robotics, industrial pick-and-place, and optical inspection and defect-detection robots.

Other use cases we are observing include smart retail, smart cities, and intelligent spaces. As life returns to normal after the COVID-19 pandemic, businesses want to deliver better customer experiences while reducing operational costs and redundancies. The market for smart retail and smart cities is growing significantly. We see applications such as digital signage, traffic management at modern intersections, license plate recognition, and people analytics. Examples include people detection, public safety camera systems, store and inventory management, and delivery robots.

At the same time, we are witnessing tremendous growth in healthcare, both in medical devices and healthcare robotics.

Lastly, developments in agriculture are also notable. We are observing growth in applications like automated tractors and smart harvesting robots, driven by labor shortages, initiatives around carbon neutrality and sustainability, and the importance of reducing emissions and waste amidst a growing population.

All of these applications, from AMRs (autonomous mobile robots) to smart cities, retail, agriculture, and healthcare, are being deployed on Jetson today. Over a million developers use Jetson, we have more than 6,000 customers, and that number continues to grow.

NVIDIA Jetson Addresses Edge AI Challenges

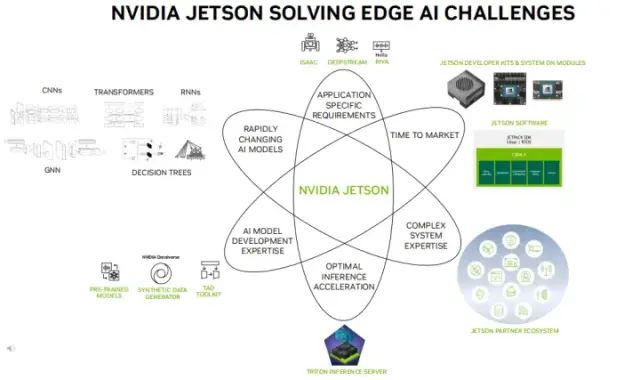

Let's look at the challenges these applications face and why Jetson is an ideal platform for addressing them. We typically see six challenges. The first is that AI is evolving rapidly, and so are the models. The computing platform needs to support a wide variety of models, from CNNs and GNNs to the latest generative AI models, such as Transformer-based chat models like GPT, and DALL-E.

Jetson supports all of these rapidly changing AI models. In addition, many of the applications we just discussed have their own specific requirements, which is why we offer application-specific frameworks: NVIDIA Isaac for robotics, DeepStream for computer vision, and Riva for speech and natural language processing.

Time to market is another critical challenge for many embedded applications, and we address it with our Jetson modules, Jetson developer kits, and Jetson software. Our modules are not just GPUs: each one also includes CPUs and various other processors, along with the complete power subsystem and memory, all on a single module that plugs into a carrier board through a 699-pin connector or an SO-DIMM connector, depending on the module. We also provide developer kits and easy-to-use tools that accelerate the development process.

Our software is built around a software-defined platform approach, which makes it easier for you to get to market. At the same time, no single company can meet all of your needs, and many of our customers build complex systems that require specialized expertise. We support these requirements through our ecosystem of more than 150 Jetson partners. From hardware partners to camera, sensor, and connector partners, to software partners and distributors, we make sure you get the support you need in your region.

Another key challenge is that some customers lack the expertise to optimize inference acceleration. Here you can leverage our Jetson software and NVIDIA Triton Inference Server.

Finally, many developers do not have data science or deep learning expertise, which makes AI model development challenging. To help, we provide pre-trained models and the TAO Toolkit. We offer hundreds of models covering computer vision and natural language processing that you can train, fine-tune, and optimize with the TAO Toolkit on GPU servers, and then deploy at the edge with our Jetson software stack.

If you lack sufficient data, you can use NVIDIA Omniverse and our synthetic data generation tools to create custom data for adapting and optimizing these models to your use case. In this way, NVIDIA Jetson addresses all the major challenges that edge embedded applications face today.

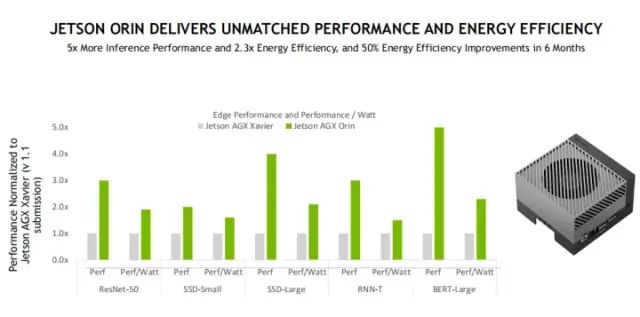

Jetson Orin offers outstanding performance and energy efficiency, making it an ideal solution for your edge and embedded applications. Here we present a set of MLPerf benchmarks comparing Jetson AGX Xavier with our latest Jetson AGX Orin. If you are not familiar with MLPerf, it is a benchmark suite developed by a consortium of AI leaders from academia, research labs, and industry, with the goal of providing fair, useful, and impartial evaluations of training and inference performance for hardware, software, and services. Jetson AGX Xavier has been a leader in the edge inference category, and in last year's submission, Jetson AGX Orin improved on it by 5 times in performance and 2.3 times in energy efficiency. Another key point is that we continuously optimize our software: within just six months, we improved the energy efficiency of Jetson AGX Orin by 50%.
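Energy-efficiency figures like these are ratios of throughput to power (for example, inferences per second per watt), so the two headline numbers together say something about power draw. The sketch below is a back-of-the-envelope calculation, not anything taken from the MLPerf submissions: it assumes efficiency is simply performance divided by average power, and the function names are hypothetical. It derives the power ratio implied by the 5x and 2.3x figures, and what a 50% efficiency gain means at constant throughput.

```python
def implied_power_ratio(perf_ratio: float, efficiency_ratio: float) -> float:
    """Power ratio implied by performance and perf-per-watt ratios.

    Assumes efficiency = performance / power, so power = performance / efficiency.
    """
    return perf_ratio / efficiency_ratio


def power_at_same_throughput(efficiency_gain: float) -> float:
    """Fraction of the original power needed for the same throughput
    after perf-per-watt improves by `efficiency_gain` (0.5 means +50%)."""
    return 1.0 / (1.0 + efficiency_gain)


if __name__ == "__main__":
    # Ratios quoted in the talk: Jetson AGX Orin vs Jetson AGX Xavier.
    ratio = implied_power_ratio(perf_ratio=5.0, efficiency_ratio=2.3)
    print(f"Implied power ratio (Orin / Xavier): {ratio:.2f}x")

    # A 50% efficiency improvement from software updates.
    frac = power_at_same_throughput(0.5)
    print(f"Power needed for the same throughput: {frac:.0%} of before")
```

Under this simplified model, Orin would draw roughly 2.17 times the power of Xavier while delivering 5 times the throughput, and the 50% software gain would let the same workload run at about two-thirds of the previous power. Because the performance and power results may come from different benchmark runs and scenarios, treat these numbers as indicative only.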

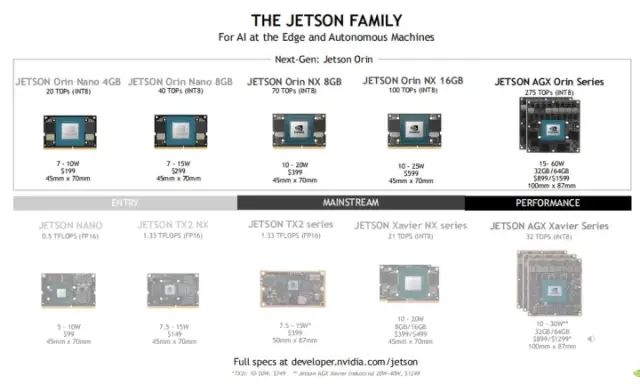

Across the entire series, we have six modules based on the Orin architecture. For the first time in Jetson's history, our whole product line, from entry-level to high-performance, is built on the same architecture: our latest NVIDIA Ampere architecture.

(Editor’s note: The specifications for each module are not detailed here; you can refer directly to the official documentation.)

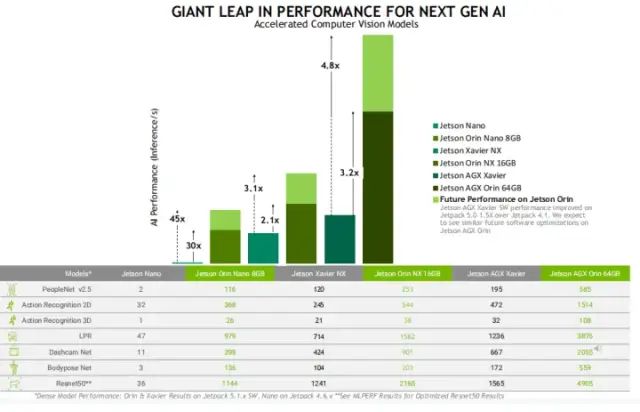

Jetson Orin delivers a significant leap in performance for the next generation of AI, thanks to its NVIDIA Ampere architecture GPU and new-generation Deep Learning Accelerators. The Ampere GPU brings third-generation Tensor Cores to our products. Here you can see some benchmark results.

Another point to note is that we keep optimizing our software. Comparing AGX Xavier at its initial launch with the same module running the latest JetPack 5.x software, we saw roughly a 1.5 times performance improvement. We expect similar gains across the entire Jetson family, so in this chart, light green indicates the expected performance of each module.

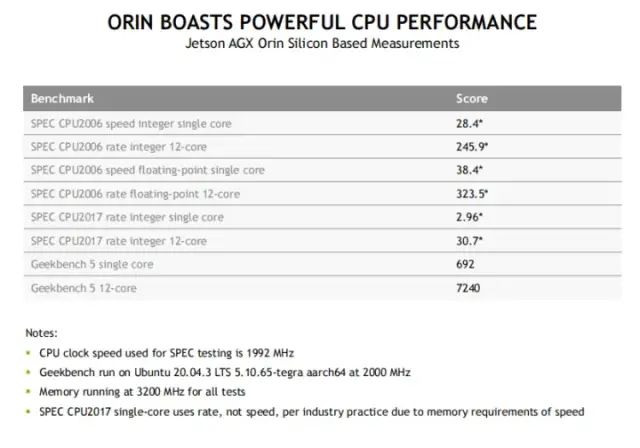

In addition to a high-performance GPU, the Orin series includes a powerful CPU: an Arm Cortex-A78AE. The CPU benchmark results shown here, including Geekbench, demonstrate the high performance of these CPU cores.

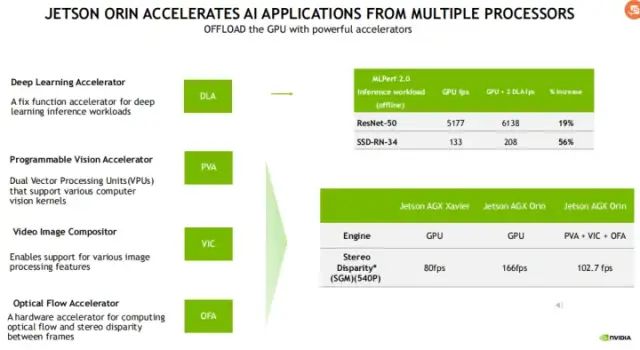

Jetson Orin includes not only a GPU and a CPU but also several other processors. These processors can offload portions of an application, freeing the GPU and CPU for compute-intensive work. The first is the Deep Learning Accelerator (DLA), a fixed-function accelerator for deep learning inference. The new DLA generation in Orin delivers 9 times the performance of the previous generation in Xavier. In some of our MLPerf benchmarks, using the DLA together with the GPU yields 20% to 56% higher performance than using the GPU alone.
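One way to see why adding the DLA raises overall throughput is a simple additive model: if the GPU and each DLA core process their own stream of inference requests concurrently, total throughput is roughly the sum of the individual rates. The sketch below assumes ideal, contention-free concurrency and two DLA instances; the `Engine` type, the helper functions, and all throughput numbers are hypothetical placeholders, not measurements. Real gains depend on which layers the DLA supports and on shared memory bandwidth, which is one reason measured gains vary across a range.

```python
from dataclasses import dataclass


@dataclass
class Engine:
    """A hypothetical inference engine pinned to one accelerator."""
    name: str
    throughput: float  # inferences per second when running alone


def combined_throughput(engines: list[Engine]) -> float:
    """Aggregate throughput, assuming ideal concurrency with no contention."""
    return sum(e.throughput for e in engines)


def gain_over_gpu_only(gpu: Engine, dlas: list[Engine]) -> float:
    """Relative gain of GPU + DLAs over the GPU alone (0.3 means +30%)."""
    return combined_throughput([gpu, *dlas]) / gpu.throughput - 1.0


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    gpu = Engine("GPU", throughput=1000.0)
    dlas = [Engine("DLA0", throughput=150.0), Engine("DLA1", throughput=150.0)]
    print(f"GPU only:  {gpu.throughput:.0f} inf/s")
    print(f"GPU + DLA: {combined_throughput([gpu, *dlas]):.0f} inf/s")
    print(f"Gain:      {gain_over_gpu_only(gpu, dlas):.0%}")
```

In this idealized model, each DLA contributes its own throughput on top of the GPU's, so the gain is the DLAs' combined rate divided by the GPU's rate; contention for shared resources would pull real results below this upper bound.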

We also have a Programmable Vision Accelerator (PVA) for offloading computer vision algorithms, a Video Image Compositor (VIC) for various image processing functions, and an Optical Flow Accelerator (OFA) for computing optical flow and stereo disparity. PVA and OFA can be programmed through our Vision Programming Interface (VPI).

Regarding the product release timeline: we have already covered the AGX Orin Developer Kit and JetPack 5.0, which we released last March. The remaining Jetson Orin commercial modules will be available later this month along with JetPack 5.1.1, followed in May 2023 by the industrial-grade Jetson Orin modules with JetPack 5.1.2 as their supporting software.