Personal Introduction: I am a graduate student majoring in Communication and Information Systems. My research covers intelligent optimization algorithms, drone trajectory planning, fine-tuning large language models, and model deployment in specific scenarios. If you have programming questions or custom requirements, feel free to contact me through my public account.

1. Introduction

Target detection is an important task in computer vision and, with the continued development of artificial intelligence, has been widely researched and applied. Recently, I received an Orange Pi AIpro, a development board that has attracted attention from industry professionals. The board offers a high cost-performance ratio and supports operating systems such as Ubuntu and openEuler. In this article, I deploy the YOLOv8s algorithm on an Orange Pi AIpro in the 8 GB + 32 GB configuration and use an external USB fisheye camera for target detection and recognition. In my testing, the board was able to serve as an edge computing platform for target detection tasks in common scenarios.

2. Introduction to Orange Pi AIpro

2.1 Overview of Orange Pi

The Orange Pi AIpro is described as the first AI development board developed in depth on the Ascend platform, and it performs well in appearance, performance, and technical support. It follows the Ascend AI technology route, integrates a graphics processing unit, comes with 8GB or 16GB of LPDDR4X memory, accepts eMMC modules of 32GB/64GB/128GB/256GB, supports dual 4K HD output, and provides 8 or 20 TOPS of AI computing power. It is supported by the MindStudio full-process development toolchain, along with one-click image burning tools and model adaptation tools, so developers can get started quickly. It supports rapid adaptation of algorithm models and can be used in AI teaching and training, AI algorithm verification, intelligent cars, robotic arms, edge computing, drones, cloud computing, AR/VR, smart security, smart home, smart transportation, and other fields. The Orange Pi AIpro supports the Ubuntu and openEuler operating systems, which covers most AI algorithm prototype verification and inference application development.

2.2 Introduction to Orange Pi Hardware and Interfaces

The Orange Pi AIpro offers a rich set of interfaces, which makes it highly expandable. It includes two HDMI outputs, GPIO interfaces, a Type-C power input, an M.2 slot supporting SATA/NVMe 2280 SSDs, a TF card slot, a Gigabit Ethernet port, two USB 3.0 ports, one USB Type-C 3.0 port, one Micro USB port, two MIPI camera interfaces, and one MIPI display interface, plus a reserved battery interface. With Ubuntu and openEuler support, the board can be applied to AI edge computing, deep visual learning, video stream AI analysis, video image analysis, natural language processing, intelligent cars, robotic arms, drones, cloud computing, AR/VR, smart security, smart home, and other areas across the AIoT industry.


3. Practical Application Experience

3.1 Hardware and Technology Used

3.1.1 Introduction to YOLOv8

YOLOv8 is a recent version of the YOLO series of algorithms. It maintains the efficiency and accuracy of the series while further optimizing the network structure and improving running speed. Its revised network structure and loss function make training more stable and improve generalization. YOLOv8 is trained end to end and treats target detection as a regression problem, directly predicting the position and category of objects. In terms of model structure, YOLOv8 uses a CSPDarknet-style backbone with additional residual connections and feature fusion to strengthen feature extraction. In terms of loss functions, the bounding-box regression loss includes a CIoU term, which accounts for the overlap area between the predicted box and the ground-truth box, the distance between their center points, and the difference in aspect ratio, so training focuses on improving the accuracy of the predicted boxes.
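To make the three CIoU ingredients concrete, here is a minimal sketch of the general CIoU formula in plain Python. It is not the Ultralytics implementation; the function names `ciou` and `ciou_loss`, the `(x1, y1, x2, y2)` box format, and the `eps` stabilizer are assumptions made for illustration.

```python
import math


def ciou(box_a, box_b, eps=1e-9):
    """Complete IoU of two boxes given as (x1, y1, x2, y2) (assumed format)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # 1) Overlap area: plain intersection over union.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    wa, ha = ax2 - ax1, ay2 - ay1
    wb, hb = bx2 - bx1, by2 - by1
    iou = inter / (wa * ha + wb * hb - inter + eps)

    # 2) Center distance, normalized by the squared diagonal of the
    #    smallest box that encloses both boxes.
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)
    c2 = cw ** 2 + ch ** 2 + eps
    rho2 = ((ax1 + ax2 - bx1 - bx2) ** 2 + (ay1 + ay2 - by1 - by2) ** 2) / 4.0

    # 3) Aspect-ratio consistency term and its weight.
    v = (4.0 / math.pi ** 2) * (
        math.atan(wb / (hb + eps)) - math.atan(wa / (ha + eps))
    ) ** 2
    alpha = v / (1.0 - iou + v + eps)

    return iou - rho2 / c2 - alpha * v


def ciou_loss(pred_box, true_box):
    """Loss is small when the boxes agree and grows as they diverge."""
    return 1.0 - ciou(pred_box, true_box)


if __name__ == "__main__":
    print(ciou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes
    print(ciou_loss((0, 0, 1, 1), (2, 2, 3, 3)))  # disjoint boxes
```

Unlike plain IoU, the center-distance term still gives a useful signal when the boxes do not overlap at all, which is one reason this family of losses is popular for box regression.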

3.1.2 Introduction to Fisheye Lens

A fisheye lens is a lens with a focal length[1] of 16mm or shorter and a field of view[2] close to or equal to 180°. It is an extreme wide-angle lens[3]. To achieve the maximum photographic field of view[4], the front element of the lens is small in diameter and bulges outward in a parabolic shape, resembling a fish's eye, hence the name "fisheye lens". Fisheye lenses are a special type of ultra-wide-angle lens[5] whose field of view aims to match or exceed the range visible to the human eye[6]. As a result, the images they produce differ noticeably from how we see the real world: scenes in real life have fixed, regular shapes, while a fisheye image bends and distorts them. Because of their extremely large field of view, fisheye lenses are widely used in scene monitoring, satellite positioning[7], robotic navigation, small intelligent systems, and engineering measurement. Since they can provide full-area coverage and real-time information acquisition, which matches the information-gathering needs of modern warfare, they also have important applications in national defense and military fields.
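The following sketch illustrates why a fisheye lens can cover close to 180° while a conventional lens cannot. It compares the ideal rectilinear projection, r = f·tan(θ), with the equidistant fisheye model, r = f·θ. The equidistant model is an assumption chosen for illustration; a real fisheye lens may follow a different mapping, and the 8 mm focal length and function names are hypothetical.

```python
import math


def rectilinear_radius(focal_mm, theta_rad):
    """Image radius for an ideal rectilinear (pinhole) lens: r = f * tan(theta)."""
    return focal_mm * math.tan(theta_rad)


def equidistant_radius(focal_mm, theta_rad):
    """Image radius under the equidistant fisheye model: r = f * theta."""
    return focal_mm * theta_rad


if __name__ == "__main__":
    focal_mm = 8.0  # hypothetical focal length, for illustration only
    for degrees in (10, 45, 80, 89):
        theta = math.radians(degrees)
        print(
            f"{degrees:>2} deg off-axis: "
            f"rectilinear {rectilinear_radius(focal_mm, theta):8.2f} mm, "
            f"equidistant {equidistant_radius(focal_mm, theta):6.2f} mm"
        )
```

As the off-axis angle approaches 90°, the rectilinear radius grows without bound, so no finite sensor can capture it, while the fisheye radius stays finite. The price is that straight lines away from the image center appear curved.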

3.2 Image Acquisition from Fisheye Lens

3.2.1 Process Introduction

Connect the fisheye camera to the Orange Pi AIpro over USB, capture frames from it, and save them as YUV images.

3.2.2 Related Driver Installation

First, download the sample source code from Ascend/EdgeAndRobotics – Gitee.com[8]. After downloading, unzip it and enter the USBCamera sample directory.

3.2.3 Install FFmpeg

sudo apt-get install ffmpeg libavcodec-dev libswscale-dev libavdevice-dev


3.2.4 Install OpenCV

sudo apt-get install libopencv-dev


3.2.5 Compile Sample Source Code

# Execute the compilation command

g++ main.cpp -o main -lavutil -lavformat -lavcodec -lavdevice

After the compilation command succeeds, an executable file named main is generated in the USBCamera sample directory.

3.2.6 Run the Sample to Acquire Images from the Camera

Run the executable file. Here /dev/video0 is the camera device node; replace it with the node that matches your setup:

./main /dev/video0

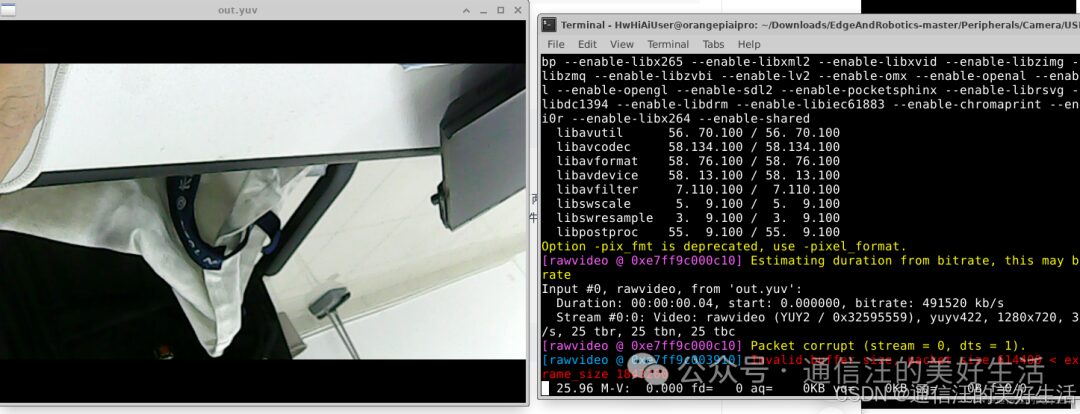

After successful execution, an out.yuv file in yuyv422 format at 1280×720 resolution is generated in the USBCamera sample directory.

Note: When a camera is plugged into the development board, running ls /dev/vi* lists the camera's video nodes. Two device nodes appear here, /dev/video0 and /dev/video1: one is for image/video acquisition and the other is for metadata acquisition. In this example, pass the image/video acquisition node, /dev/video0, to the executable.
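The same node listing can be done from Python, which is handy when a script needs to pick a camera automatically. This is a small sketch using only the standard library; the helper names `sort_video_nodes` and `list_video_nodes` are hypothetical. It only lists the nodes and does not tell you which one carries image data and which carries metadata.

```python
import glob
import re


def sort_video_nodes(paths):
    """Sort /dev/videoN paths by their numeric suffix (video2 before video10)."""
    def node_index(path):
        match = re.search(r"(\d+)$", path)
        return int(match.group(1)) if match else -1

    return sorted(paths, key=node_index)


def list_video_nodes(pattern="/dev/video*"):
    """Return the video device nodes currently present, in numeric order."""
    return sort_video_nodes(glob.glob(pattern))


if __name__ == "__main__":
    nodes = list_video_nodes()
    if not nodes:
        print("No video nodes found; check that the camera is plugged in.")
    for node in nodes:
        print(node)
```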

3.2.7 Check the Images Acquired from the Camera

Run the following command to view the captured images with ffplay, the player that ships with FFmpeg:

ffplay -pix_fmt yuyv422 -video_size 1280x720 out.yuv
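ffplay needs the pixel format and frame size because a raw .yuv file has no header. The sketch below shows the arithmetic behind that: YUYV 4:2:2 packs two pixels into four bytes (Y0 U Y1 V), so each pixel costs 2 bytes on average. The function names are hypothetical, and the usage lines assume the out.yuv file produced above.

```python
import os


def yuyv422_frame_bytes(width, height):
    """Bytes in one YUYV 4:2:2 frame: 2 bytes per pixel on average."""
    return width * height * 2


def count_frames(file_size_bytes, width, height):
    """Number of whole frames in a raw file of the given size."""
    return file_size_bytes // yuyv422_frame_bytes(width, height)


def split_macropixel(four_bytes):
    """Split one 4-byte macropixel (Y0 U Y1 V) into two (Y, U, V) pixels.

    Both pixels share the same U and V chroma samples.
    """
    y0, u, y1, v = four_bytes
    return (y0, u, v), (y1, u, v)


if __name__ == "__main__":
    path = "out.yuv"  # file written by the sample program
    print("Bytes per 1280x720 frame:", yuyv422_frame_bytes(1280, 720))
    if os.path.exists(path):
        print("Frames in file:", count_frames(os.path.getsize(path), 1280, 720))
```

If the reported frame count is not a whole number of frames for your file, the resolution passed to ffplay probably does not match what the camera delivered.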


3.3 YOLOv8s Algorithm Deployment

Before using YOLOv8, install its dependencies, including Python and PyTorch[9]. Optionally, you can also download auxiliary resources such as the COCO dataset and pre-trained models.

3.3.1 Environment Preparation

I chose to install torch 2.2 and torchvision 0.17 as follows:

pip install opencv-python -i https://pypi.tuna.tsinghua.edu.cn/simple

pip install torch==2.2.0 -i https://pypi.tuna.tsinghua.edu.cn/simple

pip install torchvision==0.17.0 -i https://pypi.tuna.tsinghua.edu.cn/simple

3.3.2 Install YOLOv8

pip install ultralytics -i https://pypi.tuna.tsinghua.edu.cn/simple
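After the installs finish, it can help to confirm which versions actually landed in the environment. The sketch below uses only the standard library's importlib.metadata, so it does not need to import the heavy packages themselves. The helper name `installed_versions` is hypothetical, and the package list simply mirrors the pip commands above.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    wanted = ["opencv-python", "torch", "torchvision", "ultralytics"]
    for name, version in installed_versions(wanted).items():
        print(f"{name}: {version or 'not installed'}")
```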

3.3.3 Write Real-Time Target Detection Program

import cv2
from cv2 import getTickCount, getTickFrequency
from ultralytics import YOLO

# Load the YOLOv8s model weights
model = YOLO("weights/yolov8s.pt")

# Open the camera. The argument is the device index (0 is usually the default
# camera); 1 is used here, so adjust it to match your camera's video node.
cap = cv2.VideoCapture(1)

while cap.isOpened():
    loop_start = getTickCount()
    success, frame = cap.read()  # Read one frame from the camera
    if not success:
        break  # Stop if no frame could be read

    # Run target detection on the current frame and draw the results
    results = model.predict(source=frame)
    annotated_frame = results[0].plot()

    # Insert your own display program here
    loop_time = getTickCount() - loop_start
    total_time = loop_time / getTickFrequency()  # Seconds spent on this frame

    # Optional: draw the FPS in the upper left corner of the image
    # fps = 1 / total_time
    # fps_text = f"FPS: {fps:.2f}"
    # font = cv2.FONT_HERSHEY_SIMPLEX
    # font_scale = 1
    # font_thickness = 2
    # text_color = (0, 0, 255)  # Red (BGR order)
    # text_position = (10, 30)  # Upper left position
    # cv2.putText(annotated_frame, fps_text, text_position, font, font_scale, text_color, font_thickness)

    cv2.imshow('img', annotated_frame)

    # Exit the loop by pressing 'q'
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()  # Release camera resources
cv2.destroyAllWindows()  # Close OpenCV windows
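The FPS value computed from a single frame, as in the commented-out overlay in the program above, tends to jump around from frame to frame. One common remedy is to smooth it with an exponential moving average. The sketch below is a standalone illustration, not part of the original program; the class name `FpsMeter` and the `alpha` smoothing factor are assumptions, and `time.sleep` stands in for the capture and inference work.

```python
import time


class FpsMeter:
    """Smooth per-frame FPS readings with an exponential moving average."""

    def __init__(self, alpha=0.1):
        self.alpha = alpha  # weight of the newest reading, between 0 and 1
        self.fps = None     # no reading yet

    def update(self, elapsed_seconds):
        """Feed the time one frame took and return the smoothed FPS."""
        instant = 1.0 / max(elapsed_seconds, 1e-9)  # guard against zero
        if self.fps is None:
            self.fps = instant
        else:
            self.fps = self.alpha * instant + (1.0 - self.alpha) * self.fps
        return self.fps


if __name__ == "__main__":
    meter = FpsMeter()
    for _ in range(5):
        start = time.perf_counter()
        time.sleep(0.01)  # stand-in for cap.read() and model.predict()
        fps = meter.update(time.perf_counter() - start)
        print(f"FPS: {fps:.2f}")
```

In the detection loop, the elapsed time passed to `update` would be the `total_time` value already computed for each frame, and the returned value could be drawn with `cv2.putText`.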

3.3.4 Final Recognition Results

4. Conclusion

In this article, I deployed the YOLOv8s target detection and recognition algorithm on the Orange Pi AIpro, using a fisheye camera of the kind suited to autonomous robots to capture live images and pass them to YOLOv8 for detection and recognition. In my testing, the Orange Pi AIpro ran YOLOv8 target detection on the live camera feed with good recognition accuracy, and its computing power was sufficient for it to serve as an edge computing platform for target detection tasks in common scenarios. I believe this board offers a cost-effective and efficient way to quickly verify and deploy artificial intelligence algorithms. In the future, I also plan to train a model on the Orange Pi AIpro using a dataset that I create and label myself.

[1]Focal Length: https://baike.baidu.com/item/%E7%84%A6%E8%B7%9D/1880759?fromModule=lemma_inlink

[2]Field of View: https://baike.baidu.com/item/%E8%A7%86%E8%A7%92/1192660?fromModule=lemma_inlink

[3]Lens: https://baike.baidu.com/item/%E9%95%9C%E5%A4%B4/2956437?fromModule=lemma_inlink

[4]Wide-Angle Lens: https://baike.baidu.com/item/%E5%B9%BF%E8%A7%92%E9%95%9C%E5%A4%B4/278835?fromModule=lemma_inlink

[5]Field of View: https://baike.baidu.com/item/%E8%A7%86%E8%A7%92/1192660?fromModule=lemma_inlink

[6]Ultra-Wide-Angle Lens: https://baike.baidu.com/item/%E8%B6%85%E5%B9%BF%E8%A7%92%E9%95%9C%E5%A4%B4/514152?fromModule=lemma_inlink

[7]Satellite Positioning: https://baike.baidu.com/item/%E5%8D%AB%E6%98%9F%E5%AE%9A%E4%BD%8D/0?fromModule=lemma_inlink

[8]Ascend/EdgeAndRobotics – Gitee.com: https://gitee.com/ascend/EdgeAndRobotics/tree/master/Peripherals/Camera/USBCamera#camera%E5%9B%BE%E5%83%8F%E8%8E%B7%E5%8F%96usb%E6%8E%A5%E5%8F%A3

[9]PyTorch: https://cloud.baidu.com/product/wenxinworkshop