On July 28, WasmEdge 0.10.1 was officially released. Today, we take a detailed look at the wasi-nn proposal as supported in version 0.10.1. This article is the first in a wasi-nn series; the next article will introduce the optimizations WasmEdge has made for the wasi-nn proposal.

Nowadays, AI inference is a familiar term. From a technical perspective, AI inference follows a simple path: take input data, run it through a model, and return the result. Because its input and output are simple and it is stateless, AI inference looks like a perfect fit for a serverless function[1]. AI inference is also well known to be computationally intensive. Combining WebAssembly with Rust makes it possible to build high-performance AI inference functions, while Wasm keeps those functions safe and portable across platforms.

WasmEdge Runtime[2] 0.10.1 adds support for the WASI-NN[3] interface proposal; at present, Intel OpenVINO is the only supported backend inference engine.

Besides the WASI-NN route to OpenVINO, WasmEdge Runtime also supports the TensorFlow[4] inference engine, but the two engines are supported through different mechanisms, and this article focuses on wasi-nn.

According to the development plan announced at the WasmEdge community meeting in July, WasmEdge will gradually add support for TensorRT, PyTorch[5], ONNX Runtime, and other backend inference engines. So how can this new interface specification be used to build WebAssembly-based AI inference tasks? What does the development process look like, and how complex is it?

In this article, we attempt to answer these questions through a simple road segmentation ADAS example. The example code and related files involved can be found in the WasmEdge-WASINN-examples[6] code repository, and you are also welcome to add more wasi-nn examples.

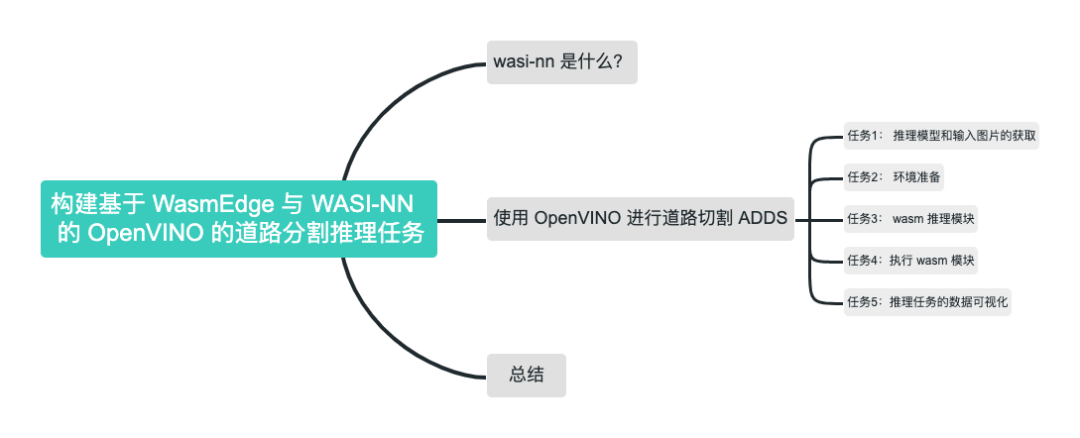

(Outline of this article)

What is WASI-NN?

Before the example, let’s briefly introduce the WASI-NN interface proposal.

In fact, the name of the WASI-NN proposal consists of two parts: WASI is the abbreviation for WebAssembly System Interface, which defines a set of interface specifications that allow WebAssembly to run safely in non-browser environments with finer-grained permission control; NN stands for Neural Network. It is evident that WASI-NN is a component of the WASI interface specification, mainly targeting the application scenario of machine learning.

Theoretically, this interface specification can be used for both model training and model inference, but our example focuses only on the model inference part. For more details about the WASI and WASI-NN interface specifications, you can go to wasi.dev[7].

Currently, stable support for WASI-NN has been provided on the main branch of the WasmEdge Runtime project, covering the five main interfaces defined in WASI-NN Proposal Phase 2:

// Load the byte sequence of the model

load: function(builder: graph-builder-array, encoding: graph-encoding, target: execution-target) -> expected<graph, error>

// Create an execution instance of the computation graph

init-execution-context: function(graph: graph) -> expected<graph-execution-context, error>

// Load input

set-input: function(ctx: graph-execution-context, index: u32, tensor: tensor) -> expected<unit, error>

// Execute inference

compute: function(ctx: graph-execution-context) -> expected<unit, error>

// Extract results

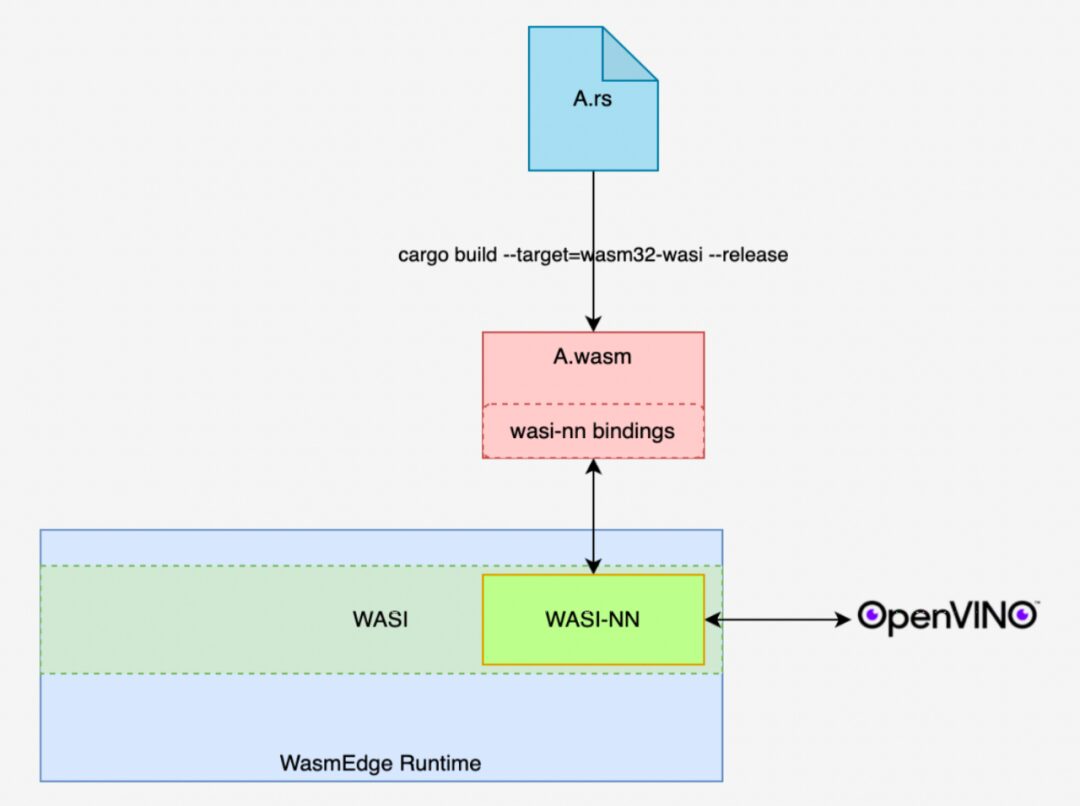

get-output: function(ctx: graph-execution-context, index: u32) -> expected<tensor, error>The main purpose of these interfaces is to provide a pipeline for the communication between the Wasm module and local system resources. In the inference task, the data between the frontend (Wasm module) and the backend (inference engine) is accomplished through this pipeline. The following diagram shows the application schematic of the WasmEdge Runtime WASI-NN interface. In the diagram, the green rectangle represents the WASI-NN interface that binds the frontend Wasm module to the backend OpenVINO inference engine. The example below, during the inference phase, actually completes data interaction, function scheduling, and a series of tasks through the built-in WasmEdge Runtime WASI-NN interface.

Next, let's walk through a concrete example to see in practice how to build a "simple yet not so simple" machine learning inference task on WasmEdge Runtime.

Using OpenVINO for Road Segmentation ADAS

Before getting started, let’s define the general process for building machine learning inference tasks using the WASI-NN interface, so that we have an overall grasp of the tasks at each stage.

- Task 1: Define the inference task; obtain the inference model and input.
- Task 2: Prepare the environment.
- Task 3: Build the Wasm inference module.
- Task 4: Execute the Wasm module. Run the module built in Task 3 with the command-line execution mode provided by WasmEdge Runtime, i.e., standalone mode, to complete the inference task.
- Task 5: Visualize the result data of the inference task.

Now, we will describe in detail how to accomplish the objectives of the above tasks.

Task 1: Obtaining the Inference Model and Input Image

For convenience, we chose the road-segmentation-adas-0001 model from Intel's official openvino-model-zoo[8] open-source repository. This model is mainly used for real-time road segmentation in autonomous driving scenarios. To keep the example small, we use only images as input for the inference task.

Task 2: Environment Preparation

We use Ubuntu 20.04 as the system environment. The WasmEdge project also provides its own Ubuntu 20.04 development environment, so readers who want to simplify environment preparation can pull the image from Docker Hub[9]. In addition to the system environment, the following packages need to be installed:

- Install OpenVINO 2021.4
- Install WasmEdge Runtime v0.10.0
- Install OpenCV 4.2
- Optional: install Jupyter Notebook
- Install the Rust toolchain with rustup

Jupyter Notebook is worth installing for two reasons: on the one hand, it is used to visualize data, such as the sample image and the inference results, with Python, NumPy, and OpenCV; on the other hand, with the Evcxr[10] plugin it provides a lightweight interactive Rust development environment, which is well suited to developing example code.

After the environment preparation is complete, you can download the code and related files for the example project. The complete code and related files for this example project are stored in WasmEdge-WASINN-examples/openvino-road-segmentation-adas[11], and you can use the following command to download:

```bash
# Download the example project
git clone git@github.com:second-state/WasmEdge-WASINN-examples.git

# Enter the root directory of this example
cd WasmEdge-WASINN-examples/openvino-road-segmentation-adas/rust

# View the directory structure of the example project
tree .
```

The directory structure of the example project should look like this:

```
.
├── README.md
├── image
│   └── empty_road_mapillary.jpg ---------------- (Image used as input for the inference task in the example)
├── image-preprocessor ------------------------ (Rust project used to convert the input image to an OpenVINO tensor)
│   ├── Cargo.lock
│   ├── Cargo.toml
│   └── src
│       └── main.rs
├── model --------------------------------------- (OpenVINO model files: the xml file describes the model architecture, the bin file stores the model weights)
│   ├── road-segmentation-adas-0001.bin
│   └── road-segmentation-adas-0001.xml
├── openvino-road-segmentation-adas-0001 -------- (Rust project defining the wasi-nn calling logic; compiled into a Wasm module and executed via the WasmEdge CLI)
│   ├── Cargo.lock
│   ├── Cargo.toml
│   └── src
│       └── main.rs
├── tensor -------------------------------------- (Binary tensor files used as input to and output from the Wasm inference module)
│   ├── wasinn-openvino-inference-input-512x896x3xf32-bgr.tensor --- (Derived from the input image; serves as input for the Wasm inference module)
│   └── wasinn-openvino-inference-output-1x4x512x896xf32.tensor --- (Stores the result data produced by the Wasm inference module)
└── visualize_inference_result.ipynb ------------ (Used for data visualization)
```

The comments in the directory structure above give a general picture of each part of the example project. A few points deserve further clarification:

- Converting the sample image to an OpenVINO tensor. For developers using OpenVINO as the WASI-NN backend for the first time, this step can be a pitfall.
  - First, the examples in Intel's official openvino-rs open-source project use `*.bgr` files as inference input. Such a file is actually a binary file, and `bgr` indicates that its data corresponds to an image in BGR format. The openvino-rs project also includes a tool named `openvino-tensor-converter`, which generates the `*.bgr` files used in its examples. The `image-preprocessor` in our example project is derived from this tool.
  - Second, a common mistake concerns the arrangement of the input tensor dimensions. Intel's official openvino-model-zoo and openvino-notebooks open-source projects use `NCHW` as the input tensor layout, and the same layout is followed when verifying with the Python API and the Rust API. However, when using the `wasi-nn crate`[12], the input tensor layout is `HWC`. The reason for this difference is currently unclear.
- Both `image-preprocessor` and `openvino-road-segmentation-adas-0001` are Rust projects. They are not merged into one project because the former depends on `opencv-rs`, which prevents it from being compiled into a Wasm module. A possible solution worth trying is to replace `opencv-rs` with the `image` crate; interested readers are welcome to give it a try.
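To make the layout difference concrete, here is a minimal, std-only Rust sketch of converting an interleaved HWC buffer (channels innermost, as in a 512x896x3 image) into a planar NCHW buffer with batch size 1. The function name `hwc_to_nchw` is hypothetical and not part of the example project; it only illustrates the index arithmetic behind the two layouts.

```rust
/// Hypothetical helper: converts an interleaved HWC buffer (channel values of one
/// pixel stored next to each other) into a planar NCHW buffer with N = 1
/// (each channel stored as one contiguous H x W plane).
pub fn hwc_to_nchw(hwc: &[f32], height: usize, width: usize, channels: usize) -> Vec<f32> {
    assert_eq!(hwc.len(), height * width * channels, "buffer size does not match dims");
    let mut nchw = vec![0f32; hwc.len()];
    for h in 0..height {
        for w in 0..width {
            for c in 0..channels {
                // HWC index: the channel varies fastest
                let src = (h * width + w) * channels + c;
                // NCHW index with N = 1: the column varies fastest inside each channel plane
                let dst = (c * height + h) * width + w;
                nchw[dst] = hwc[src];
            }
        }
    }
    nchw
}
```

For example, `hwc_to_nchw(&pixels, 512, 896, 3)` would rearrange one 512x896 three-channel image into three contiguous 512x896 planes. Whether such a conversion is needed at all depends on the dimensions declared to the backend, which, as noted above, is not fully understood yet.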

Task 3: Wasm Inference Module

Since the focus of the example is the WASI-NN interface, we will not go into detail about the image-preprocessor part; interested readers can look at its code, which should be quick to understand. Now let's look at the WASI-NN interface itself. The WebAssembly/wasi-nn repository provides two important documents: wasi-nn.wit.md and wasi-nn.abi.md. The former describes the interfaces and data structures of the WASI-NN specification in the `wit` syntax format[13], while the latter gives more explicit definitions of the data types used in the former. Below are the five interface functions defined in wasi-nn.wit.md:

```
// Step 1: Load the model file and configuration needed for this inference task
// builder: The model file to be loaded
// encoding: The type of backend inference engine, such as openvino, tensorflow, etc.
// target: The type of hardware accelerator used, such as cpu, gpu, etc.
load: function(builder: graph-builder-array, encoding: graph-encoding, target: execution-target) -> expected<graph, error>

// Step 2: Initialize the execution environment for this inference task using the graph created in step 1.
// graph-execution-context is actually a session created by the backend inference engine for this inference task; it binds the graph created in step 1 to the tensor provided in step 3 so that the inference task can be executed in step 4.
init-execution-context: function(graph: graph) -> expected<graph-execution-context, error>

// Step 3: Set the input for this inference task.
set-input: function(ctx: graph-execution-context, index: u32, tensor: tensor) -> expected<unit, error>

// Step 4: Execute this inference task
compute: function(ctx: graph-execution-context) -> expected<unit, error>

// Step 5: After the inference task successfully ends, extract the inference result data.
get-output: function(ctx: graph-execution-context, index: u32) -> expected<tensor, error>
```

From the comments in the code above, we can see that these five interface functions form a template for completing an inference task through the WASI-NN interface. Since the two wit-format files mentioned above only provide a "formal" definition of the WASI-NN interface, each programming language can implement concrete bindings for these interfaces. In the Rust community, two Intel engineers, Andrew Brown[14] and Brian Jones[15], jointly created the Rust binding for WASI-NN: the wasi-nn crate. Our example uses the interfaces provided by this crate to build the inference module.
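The ordering constraints of this five-step template can be illustrated without a real backend. The following is a minimal sketch under stated assumptions: `MockBackend`, `NnError`, and the doubling "model" are hypothetical stand-ins written for illustration, the method names only mirror the five wit functions, and none of this is the wasi-nn crate's API, whose real calls are unsafe functions backed by an inference engine such as OpenVINO.

```rust
use std::collections::HashMap;

/// Hypothetical error type, loosely echoing the `error` results in wasi-nn.wit.md.
#[derive(Debug, PartialEq)]
pub enum NnError {
    InvalidArgument,
    MissingInput,
    NotComputed,
}

/// Handles are plain integers here, mirroring the opaque `graph` and
/// `graph-execution-context` values returned by the wit functions.
pub type Graph = u32;
pub type Context = u32;

#[derive(Default)]
struct ContextState {
    inputs: HashMap<u32, Vec<f32>>,
    output: Option<Vec<f32>>,
}

/// Hypothetical in-memory backend whose "model" simply doubles every input value.
#[derive(Default)]
pub struct MockBackend {
    graphs: Vec<Vec<u8>>,
    contexts: Vec<ContextState>,
}

impl MockBackend {
    /// Step 1: keep the model bytes (e.g. xml + bin) and hand back a graph handle.
    pub fn load(&mut self, builders: &[&[u8]]) -> Result<Graph, NnError> {
        if builders.is_empty() {
            return Err(NnError::InvalidArgument);
        }
        self.graphs.push(builders.concat());
        Ok((self.graphs.len() - 1) as Graph)
    }

    /// Step 2: create an execution context (session) for a loaded graph.
    pub fn init_execution_context(&mut self, graph: Graph) -> Result<Context, NnError> {
        if graph as usize >= self.graphs.len() {
            return Err(NnError::InvalidArgument);
        }
        self.contexts.push(ContextState::default());
        Ok((self.contexts.len() - 1) as Context)
    }

    /// Step 3: attach an input tensor to the context at the given index.
    pub fn set_input(&mut self, ctx: Context, index: u32, tensor: Vec<f32>) -> Result<(), NnError> {
        let state = self.contexts.get_mut(ctx as usize).ok_or(NnError::InvalidArgument)?;
        state.inputs.insert(index, tensor);
        Ok(())
    }

    /// Step 4: run the "model" on input 0; fails if no input has been set.
    pub fn compute(&mut self, ctx: Context) -> Result<(), NnError> {
        let state = self.contexts.get_mut(ctx as usize).ok_or(NnError::InvalidArgument)?;
        let input = state.inputs.get(&0).ok_or(NnError::MissingInput)?;
        let result: Vec<f32> = input.iter().map(|x| x * 2.0).collect();
        state.output = Some(result);
        Ok(())
    }

    /// Step 5: read back output 0; fails until `compute` has succeeded.
    pub fn get_output(&self, ctx: Context, index: u32) -> Result<Vec<f32>, NnError> {
        let state = self.contexts.get(ctx as usize).ok_or(NnError::InvalidArgument)?;
        if index != 0 {
            return Err(NnError::InvalidArgument);
        }
        state.output.clone().ok_or(NnError::NotComputed)
    }
}
```

The point of the sketch is the lifecycle: a graph handle comes from `load`, a context handle from `init_execution_context`, `compute` fails until an input is set, and `get_output` fails until `compute` has run.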

Next, let’s look at the openvino-road-segmentation-adas-0001 subproject used to build the inference Wasm module in this example. The following code snippet is the main part of this project: the inference function.

```rust
// openvino-road-segmentation-adas-0001/src/main.rs

use std::convert::TryInto; // needed for `try_into()` on the 2018 edition
use wasi_nn as nn; // assumed alias: the signature below refers to the tensor type as `nn::Tensor`

/// Do inference
fn infer(
    xml_bytes: impl AsRef<[u8]>,
    weights: impl AsRef<[u8]>,
    in_tensor: nn::Tensor,
) -> Result<Vec<f32>, Box<dyn std::error::Error>> {
    // Step 1: Load the model file and configuration needed for this inference task
    let graph = unsafe {
        wasi_nn::load(
            &[xml_bytes.as_ref(), weights.as_ref()],
            wasi_nn::GRAPH_ENCODING_OPENVINO,
            wasi_nn::EXECUTION_TARGET_CPU,
        )
        .unwrap()
    };

    // Step 2: Initialize the execution environment for this inference task using the graph created in step 1
    let context = unsafe { wasi_nn::init_execution_context(graph).unwrap() };

    // Step 3: Set the input for this inference task
    unsafe {
        wasi_nn::set_input(context, 0, in_tensor).unwrap();
    }

    // Step 4: Execute this inference task
    unsafe {
        wasi_nn::compute(context).unwrap();
    }

    // Step 5: After the inference task successfully ends, extract the inference result data
    // The output tensor holds 1x4x512x896 f32 values (NCHW)
    let mut output_buffer = vec![0f32; 1 * 4 * 512 * 896];
    let bytes_written = unsafe {
        wasi_nn::get_output(
            context,
            0,
            &mut output_buffer[..] as *mut [f32] as *mut u8,
            // Buffer capacity in bytes: 4 bytes per f32
            (output_buffer.len() * 4).try_into().unwrap(),
        )
        .unwrap()
    };

    println!("bytes_written: {:?}", bytes_written);

    Ok(output_buffer)
}
```

From the logic of the `infer` function body, we can see that:

- The interface calls exactly follow the WASI-NN calling template described earlier.
- The interfaces currently provided by the wasi-nn crate are still unsafe. For the WasmEdge Runtime community, providing a safe wrapper crate on top of the existing wasi-nn crate would make things friendlier for community developers.
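As an illustration of what such a safe wrapper could look like, the sketch below wraps a raw, pointer-based output call behind a function that takes a typed `&mut [f32]`. Everything here is hypothetical: `raw_get_output` is a local stand-in that only mimics the shape of the `get_output` call used in `infer` (context, index, byte pointer, byte capacity) and returns a fixed result, and `get_output_f32` and `OutputError` are names invented for this example, not part of the wasi-nn crate.

```rust
/// Hypothetical stand-in for a raw, pointer-based binding with the same shape as the
/// `get_output` call in `infer`: (context, output index, byte pointer, byte capacity).
/// It ignores the handles and copies a fixed 3-element result into the caller's buffer.
unsafe fn raw_get_output(_ctx: u32, _index: u32, out: *mut u8, max_len: u32) -> Result<u32, i32> {
    let result: [f32; 3] = [0.25, 0.5, 0.75];
    let len = std::mem::size_of_val(&result);
    if (max_len as usize) < len {
        return Err(1); // hypothetical "buffer too small" error code
    }
    // SAFETY: the caller guarantees `out` is valid for writes of `max_len` bytes.
    unsafe { std::ptr::copy_nonoverlapping(result.as_ptr() as *const u8, out, len) };
    Ok(len as u32)
}

/// Errors reported by the safe wrapper.
#[derive(Debug, PartialEq)]
pub enum OutputError {
    BufferTooLarge,
    Backend(i32),
    PartialElement(u32),
}

/// Safe wrapper: takes a typed output slice and returns how many f32 values were written.
pub fn get_output_f32(ctx: u32, index: u32, out: &mut [f32]) -> Result<usize, OutputError> {
    let byte_len = out.len() * std::mem::size_of::<f32>();
    if byte_len > u32::MAX as usize {
        return Err(OutputError::BufferTooLarge);
    }
    // SAFETY: `out` is an exclusively borrowed, valid buffer of exactly `byte_len` bytes.
    let written = unsafe { raw_get_output(ctx, index, out.as_mut_ptr() as *mut u8, byte_len as u32) }
        .map_err(OutputError::Backend)?;
    if written as usize % std::mem::size_of::<f32>() != 0 {
        return Err(OutputError::PartialElement(written));
    }
    Ok(written as usize / std::mem::size_of::<f32>())
}
```

The design choice is that the caller never handles raw pointers or byte counts: the pointer and length are derived from the same borrowed slice, and the wrapper converts the returned byte count into a number of `f32` elements.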

Because our example is executed through the command-line interface provided by WasmEdge Runtime, we compile the project containing the `infer` function into a Wasm module. Before compiling, check whether the wasm32-wasi target is installed in your rustup toolchain with the following command:

```bash
rustup target list
```

If you do not see wasm32-wasi (installed) in the returned result, you can install it using the following command:

```bash
rustup target add wasm32-wasi
```

Now you can execute the following command to compile and obtain the inference Wasm module:

```bash
# Make sure the current directory is the root of the openvino-road-segmentation-adas-0001 subproject
cargo build --target=wasm32-wasi --release
```

If the compilation is successful, you can find the module named rust-road-segmentation-adas.wasm under the ./target/wasm32-wasi/release path, which is responsible for calling the WASI-NN interface.

Task 4: Execute the Wasm Module

According to the entry function of the rust-road-segmentation-adas.wasm module, when invoking this module via the WasmEdge Runtime command-line interface, three inputs need to be provided (see the comments in the code snippet below):

```rust
// openvino-road-segmentation-adas-0001/src/main.rs
use std::env;

fn main() -> Result<(), Box<dyn std::error::Error>> {
    let args: Vec<String> = env::args().collect();
    // OpenVINO model architecture file
    let model_xml_name: &str = &args[1];
    // OpenVINO model weight file
    let model_bin_name: &str = &args[2];
    // OpenVINO tensor file derived from the image
    let tensor_name: &str = &args[3];
    ...
}
```
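Indexing `args[1]` to `args[3]` directly panics with an index-out-of-bounds error when an argument is missing. A minimal sketch of a more defensive alternative, using a slice pattern to return a usage message instead, is shown below; `InferArgs` and `parse_args` are hypothetical names, and unlike the original snippet this version also rejects extra arguments.

```rust
/// The three positional inputs expected by the inference module.
#[derive(Debug, PartialEq)]
pub struct InferArgs {
    pub model_xml: String,
    pub model_bin: String,
    pub tensor: String,
}

/// Parses `[program, xml, bin, tensor]`, returning a usage message instead of
/// panicking on a missing argument the way direct `args[n]` indexing would.
pub fn parse_args(args: &[String]) -> Result<InferArgs, String> {
    match args {
        [_, xml, bin, tensor] => Ok(InferArgs {
            model_xml: xml.clone(),
            model_bin: bin.clone(),
            tensor: tensor.clone(),
        }),
        _ => Err(format!(
            "usage: {} <model.xml> <model.bin> <input.tensor>",
            // Fall back to the module name if even the program name is absent
            args.first().map(String::as_str).unwrap_or("rust-road-segmentation-adas.wasm")
        )),
    }
}
```

Inside `main`, it could be used as `let parsed = parse_args(&args)?;`, since a `String` error converts into `Box<dyn std::error::Error>` through `?`.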

For reproducibility, the files required by the example project are located at:

- road-segmentation-adas-0001 model architecture file: model/road-segmentation-adas-0001.xml
- road-segmentation-adas-0001 model weight file: model/road-segmentation-adas-0001.bin
- Input used for inference: tensor/wasinn-openvino-inference-input-512x896x3xf32-bgr.tensor

Since we borrowed the model from Intel’s official openvino model zoo, information about the model’s input, output, and other details can be found on the road-segmentation-adas-0001 model page[16]. In addition, image files cannot be used directly as input; they need to undergo some preprocessing, such as resizing and converting RGB to BGR, before being converted into byte sequences, so that they can be passed to the backend inference engine via the interfaces provided by wasi-nn crate. The *.tensor file mentioned above is a binary file exported after preprocessing the image file image/empty_road_mapillary.jpg using the image-preprocessor tool. If you want to try using your own images during the inference process, you can obtain the corresponding *.tensor files through the following two methods:

```bash
# Option 1: in the root directory of the image-preprocessor subproject, run:
cargo run -- --image ../image/empty_road_mapillary.jpg --dims 512x896x3xfp32 --tensor wasinn-openvino-inference-input-512x896x3xf32-bgr.tensor

# Option 2: build the image-preprocessor subproject to obtain the im2tensor executable, then run the conversion
# (still from the image-preprocessor root, so the relative image path resolves correctly)
cargo build --release
./target/release/im2tensor --image ../image/empty_road_mapillary.jpg --dims 512x896x3xfp32 --tensor wasinn-openvino-inference-input-512x896x3xf32-bgr.tensor
```
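To make the preprocessing steps more concrete, here is a hypothetical sketch of the last two: swapping RGB to BGR and serializing every channel value as a 32-bit float. It assumes the pixels are already resized to 512x896 and interleaved (HWC), that values stay in the 0 to 255 range without normalization, and that the `.tensor` file is a raw little-endian f32 byte sequence; these assumptions have not been checked against the `image-preprocessor` source, and resizing is omitted.

```rust
/// Hypothetical sketch: swap RGB -> BGR and serialize each channel value as a
/// little-endian f32. Assumes resized, interleaved, unnormalized 8-bit pixels.
pub fn rgb_u8_to_bgr_f32_bytes(rgb: &[u8]) -> Vec<u8> {
    assert_eq!(rgb.len() % 3, 0, "expected interleaved RGB triples");
    let mut out = Vec::with_capacity(rgb.len() * 4);
    for px in rgb.chunks_exact(3) {
        // Reverse the channel order: (R, G, B) becomes (B, G, R)
        for &v in [px[2], px[1], px[0]].iter() {
            out.extend_from_slice(&(v as f32).to_le_bytes());
        }
    }
    out
}

/// Reads such a byte sequence back into f32 values (e.g. to sanity-check a tensor file).
pub fn f32_from_le_bytes(bytes: &[u8]) -> Vec<f32> {
    bytes
        .chunks_exact(4)
        .map(|b| f32::from_le_bytes([b[0], b[1], b[2], b[3]]))
        .collect()
}
```

Under these assumptions, a 512x896 RGB image produces 512 × 896 × 3 × 4 = 5505024 bytes, which is consistent with the `512x896x3xf32` part of the input file name.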

After preparing the input files, we can execute the inference task using the command-line tools provided by WasmEdge Runtime.

- First, confirm that the WasmEdge Runtime command-line tool has been deployed on the local system:

  ```bash
  wasmedge --version
  # Or: /your/local/path/to/wasmedge-release/bin/wasmedge --version
  ```

  If you do not see `wasmedge version 0.10.0-71-ge920d6e6` or similar version information, follow the steps in the WasmEdge Runtime official installation guide[17] to complete the installation.

- If the WasmEdge Runtime command-line tool works correctly, run the following command to execute the inference task:

  ```bash
  # Run in the root directory of this example project
  wasmedge --dir .:. /path/to/rust-road-segmentation-adas.wasm ./model/road-segmentation-adas-0001.xml ./model/road-segmentation-adas-0001.bin ./tensor/wasinn-openvino-inference-input-512x896x3xf32-bgr.tensor
  ```

After the inference task starts executing, the following information should be printed in the terminal:

```
Load graph XML, size in bytes: 401509
Load graph weights, size in bytes: 737192
Load input tensor, size in bytes: 5505024
Loaded graph into wasi-nn with ID: 0
Created wasi-nn execution context with ID: 0
Executed graph inference
bytes_written: 7340032
dump tensor to "wasinn-openvino-inference-output-1x4x512x896xf32.tensor"
The size of bytes: 7340032
```

The `dump tensor to` line names the binary file in which the result data is saved once inference completes, and the last line reports the size of the result data in bytes.
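The byte counts in this log follow directly from the tensor dimensions, since each `f32` element occupies 4 bytes. The small sketch below (the helper names are hypothetical) reproduces the two numbers: 512 × 896 × 3 × 4 = 5505024 bytes for the input and 1 × 4 × 512 × 896 × 4 = 7340032 bytes for the output, the latter matching `bytes_written`.

```rust
use std::mem::size_of;

/// Size in bytes of a dense tensor: the product of its dimensions times the element size.
pub fn tensor_bytes(dims: &[usize], elem_size: usize) -> usize {
    dims.iter().product::<usize>() * elem_size
}

/// Input tensor: 512x896x3 f32 values (HWC), as encoded in the input file name.
pub fn input_bytes() -> usize {
    tensor_bytes(&[512, 896, 3], size_of::<f32>())
}

/// Output tensor: 1x4x512x896 f32 values (NCHW), matching `output_buffer` in `infer`.
pub fn output_bytes() -> usize {
    tensor_bytes(&[1, 4, 512, 896], size_of::<f32>())
}
```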

Note that, to make the output file easier to interpret, we hardcoded the export file name according to a naming rule: `1x4x512x896xf32` indicates that the output data's original dimension layout is `NCHW` and its data type is `float32`. This makes data conversion easier when post-processing or visualizing the result data. Next, we use Python, NumPy, and OpenCV in Jupyter Notebook to visualize the input image, the inference result data, and the final result.
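Because the file name encodes the shape and data type, post-processing code can recover them instead of hardcoding them. Below is a minimal Rust sketch, assuming the shape is one `-`-separated segment of the form `<dim>x<dim>x...x<dtype>` (e.g. `1x4x512x896xf32`); `TensorSpec`, `parse_tensor_name`, and `parse_shape_segment` are hypothetical helpers, not part of the example project.

```rust
/// Shape and element type recovered from a tensor file name.
#[derive(Debug, PartialEq)]
pub struct TensorSpec {
    pub dims: Vec<usize>,
    pub dtype: String,
}

/// Returns the first '-'-separated segment of a "*.tensor" name that looks like
/// "<dim>x<dim>x...x<dtype>", or None if there is no such segment.
pub fn parse_tensor_name(name: &str) -> Option<TensorSpec> {
    let stem = name.strip_suffix(".tensor")?;
    stem.split('-').find_map(parse_shape_segment)
}

fn parse_shape_segment(seg: &str) -> Option<TensorSpec> {
    let mut parts: Vec<&str> = seg.split('x').collect();
    // The last 'x'-separated piece is the data type, e.g. "f32"
    let dtype = parts.pop()?;
    if parts.is_empty() || dtype.is_empty() {
        return None;
    }
    // Every remaining piece must be a number, otherwise this is not a shape segment
    let dims = parts
        .iter()
        .map(|p| p.parse::<usize>().ok())
        .collect::<Option<Vec<usize>>>()?;
    Some(TensorSpec { dims, dtype: dtype.to_string() })
}
```

Both example file names parse: the output name yields dimensions `[1, 4, 512, 896]` with type `f32`, and the input name yields `[512, 896, 3]` with type `f32`, the trailing `-bgr` segment being skipped because it does not match the pattern.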

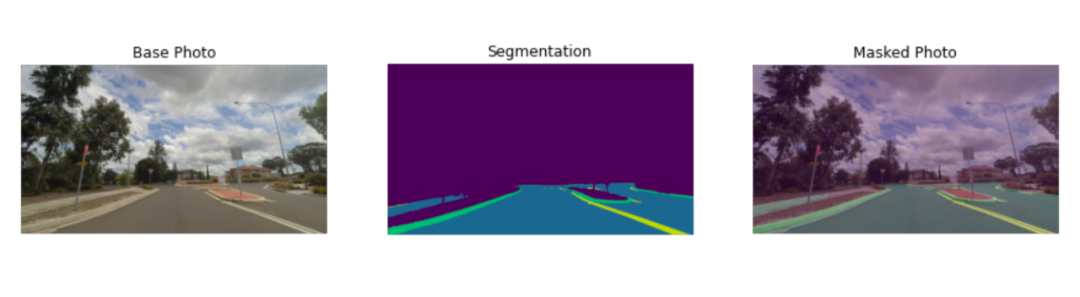

Task 5: Data Visualization of the Inference Task

To facilitate a more intuitive observation of the data before and after the inference process, we use Jupyter Notebook to build a simple data visualization tool. The three images below are the visualization results for three parts of data: the middle Segmentation image is derived from the inference Wasm module, while the left and right images are the original image and the final result image, respectively. The code related to data visualization is defined in visualize_inference_result.ipynb, and interested students can refer to it to rewrite it as needed; this part will not be further elaborated. In terms of data visualization, the functionality and convenience provided by the Python ecosystem are far superior to those provided by the Rust ecosystem.
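The middle Segmentation image is built from the 1x4x512x896 output, which holds one value per class channel for every pixel. A common way to turn such an output into a per-pixel class map is an argmax over the channel axis. The notebook does its post-processing in Python and its exact steps may differ; the Rust sketch below is only an illustrative equivalent of that argmax step, and the function name is hypothetical.

```rust
/// Per-pixel argmax over the channel axis of an NCHW tensor with N = 1.
/// Returns one class index per pixel in row-major (H, W) order; ties keep the lower index.
pub fn argmax_channels(data: &[f32], channels: usize, height: usize, width: usize) -> Vec<u8> {
    assert_eq!(data.len(), channels * height * width, "buffer size does not match dims");
    assert!(channels > 0 && channels <= 256, "class index must fit in u8");
    let plane = height * width;
    (0..plane)
        .map(|p| {
            let mut best = 0;
            for c in 1..channels {
                // Channel c's value for pixel p lives in plane c at offset p
                if data[c * plane + p] > data[best * plane + p] {
                    best = c;
                }
            }
            best as u8
        })
        .collect()
}
```

For the example's output, `argmax_channels(&output_buffer, 4, 512, 896)` would yield 512 × 896 class indices that can then be mapped to colors.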

Conclusion

This article used a simple road segmentation example to demonstrate how to build a machine learning application with the WASI-NN interface provided by WasmEdge Runtime.

From this example, we can see that, compared with conventional approaches to deploying machine learning, building machine learning applications on WebAssembly adds only a small amount of code and limited maintenance cost. In return, this small extra "cost" can buy better service performance. For example, in cloud service environments, WebAssembly can offer cold starts up to 100 times faster than Docker, execution times 10% to 50% shorter, and a very small storage footprint.

The WASI-NN proposal provides a unified, standardized interface specification that lets WebAssembly runtimes integrate with various machine learning inference engine backends through a single interface, significantly reducing system integration complexity and the cost of later maintenance and upgrades. At the same time, the specification provides an abstraction that isolates frontend and backend details from each other, making it easier to build machine learning applications quickly. As the WASI-NN interface specification continues to mature and its surrounding ecosystem grows, we believe WebAssembly will fundamentally change how machine learning solutions are deployed and applied.

Feel free to visit the WasmEdge-WASINN-examples[18] code repository to see more examples, and you are also welcome to add more wasi-nn examples.


References

[1] Perfect serverless function: https://www.secondstate.io/articles/ai-as-a-servide-on-webaasembly/

[2] WasmEdge Runtime: https://github.com/WasmEdge/WasmEdge

[3] WASI-NN: https://github.com/WebAssembly/wasi-nn

[4] TensorFlow: https://wasmedge.org/book/en/dev/rust/tensorflow.html

[5] PyTorch: https://github.com/WasmEdge/WasmEdge/pull/1654

[6] WasmEdge-WASINN-examples: https://github.com/second-state/WasmEdge-WASINN-examples/blob/master/openvino-road-segmentation-adas/rust/README.md

[7] wasi.dev: https://wasi.dev

[8] openvino-model-zoo: https://github.com/openvinotoolkit/open_model_zoo

[9] Docker Hub: https://hub.docker.com/r/wasmedge/wasmedge/tags

[10] Evcxr: https://github.com/google/evcxr

[11] WasmEdge-WASINN-examples/openvino-road-segmentation-adas: https://github.com/second-state/WasmEdge-WASINN-examples/tree/master/openvino-road-segmentation-adas/rust

[12] wasi-nn crate: https://crates.io/crates/wasi-nn

[13] wit syntax format: https://github.com/bytecodealliance/wit-bindgen/blob/main/WIT.md

[14] Andrew Brown: https://github.com/abrown

[15] Brian Jones: https://github.com/brianjjones

[16] road-segmentation-adas-0001 model page: https://github.com/openvinotoolkit/open_model_zoo/blob/master/models/intel/road-segmentation-adas-0001/README.md

[17] WasmEdge Runtime official installation guide: https://wasmedge.org/book/en/start/install.html

[18] WasmEdge-WASINN-examples: https://github.com/second-state/WasmEdge-WASINN-examples/blob/master/openvino-road-segmentation-adas/rust/README.md

About WasmEdge

WasmEdge is a lightweight, secure, high-performance, real-time software container and runtime environment. It is currently a CNCF sandbox project. WasmEdge is used in SaaS, cloud-native, service mesh, edge computing, automotive, and other fields.

Documentation: https://wasmedge.org/book/en


This article is reprinted from Second State.


The CNCF (Cloud Native Computing Foundation) was established in December 2015 and is part of the Linux Foundation, a non-profit organization.

CNCF (Cloud Native Computing Foundation) is dedicated to nurturing and maintaining a vendor-neutral open-source ecosystem to promote cloud-native technologies. We democratize cutting-edge patterns to make these innovations accessible to the public.