Introduction: The Wonderful Collision of C++ and Neural Networks

Today, the wave of AI is sweeping across the globe. From facial recognition payments to intelligent voice assistants, from medical imaging diagnosis to self-driving cars, neural networks are everywhere, and they have become the core engine driving these applications. In neural network development, the C++ language, with its outstanding performance and precise control over low-level resources, has long held a key position.

For developers pursuing extreme efficiency and powerful functionality, C++ is like a Swiss army knife: whether building complex deep learning models or deploying them to various hardware platforms, it can handle it all. In this article, we will delve into the world of C++ neural networks and explore two important optimization tools: batch normalization and training acceleration techniques. Let’s see how they improve the performance of neural networks and help developers break through bottlenecks!

1. Understanding Batch Normalization in Neural Networks

(1) What Exactly is Batch Normalization?

Batch Normalization, abbreviated as BN, is like a “data magician” that standardizes the outputs of the intermediate layers in a neural network. In simple terms, for each batch of data it shifts and rescales every feature so that the feature has a mean of 0 and a variance of 1 across the batch. Note that this fixes only the mean and the variance; it does not force the data into the shape of a normal distribution.

We can imagine a neural network as a large factory assembly line where data enters from the input end, flows and processes through each layer of the network, and finally produces an output result. Batch normalization acts like a “calibrator” at each key node on the assembly line; whenever a new batch of data flows through the intermediate layer, it quickly adjusts the distribution of this data to the optimal state. This way, the subsequent neurons can work in a relatively stable and unified environment without being “flustered” by large fluctuations in the input data.

This operation brings several benefits. It makes training more stable, like fitting a super shock absorber to a racing car speeding along a bumpy mountain road, which reduces the risk of losing control. It usually speeds up convergence as well, in part because the network tolerates larger learning rates, like showing a lost traveler a shortcut to their destination. Batch normalization can also improve generalization: because each sample is normalized with the statistics of its own mini-batch, a little noise is injected during training, which acts as a mild regularizer and helps the trained model cope with unfamiliar new data.

(2) The “Magic” Principle of Batch Normalization

Next, let’s delve into the mathematics behind batch normalization. Suppose X holds the values of one feature at the output of an intermediate layer, collected over a batch of N samples, where N is the batch size, that is, the number of data samples fed into the neural network at once. Each feature is normalized independently using the four steps below.

First, we calculate the mean using the formula μ = (1/N) · ΣX, where the sum runs over the N values in the batch. This step is like gathering a group of students’ exam scores to find an average score, giving us a preliminary understanding of the overall level of this batch of data. By calculating the mean, we can find out where the data is concentrated.

Next, we calculate the variance using the formula σ² = (1/N) · Σ(X − μ)². The variance reflects the degree of dispersion of the data, akin to observing the distribution range of student scores, whether they are concentrated around the average or widely spread out. It tells us the magnitude of fluctuations in this batch of data; the larger the variance, the more dispersed the data.
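The first two steps can be sketched in C++ as follows. This is a minimal illustration for a single feature, not a production implementation; the names BatchStats and computeBatchStats are made up for this example and do not come from any library.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper type: the batch statistics of one feature.
struct BatchStats {
    double mean;
    double variance;  // population variance (divides by N, not N - 1)
};

// Computes mu = (1/N) * sum(x) and sigma^2 = (1/N) * sum((x - mu)^2).
BatchStats computeBatchStats(const std::vector<double>& x) {
    assert(!x.empty());  // assumption: the batch holds at least one value
    const double n = static_cast<double>(x.size());

    double mean = 0.0;
    for (double v : x) {
        mean += v;
    }
    mean /= n;

    double variance = 0.0;
    for (double v : x) {
        const double diff = v - mean;
        variance += diff * diff;
    }
    variance /= n;

    return {mean, variance};
}
```

For the batch {1, 2, 3, 4, 5}, this returns a mean of 3 and a variance of 2. Note that the variance divides by N, matching the formula above, rather than by N − 1 as the sample variance would.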

After completing the first two steps, we enter the normalization phase using the formula X’ = (X − μ) / √(σ² + ε), where ε is a small positive constant (1e-8 in the code below; deep learning frameworks often default to larger values such as 1e-5 or 1e-3). It keeps the denominator away from zero when the variance of a batch is zero or very small, avoiding a mathematical “embarrassment”. This step standardizes the students’ scores based on the average score and the range of fluctuations, putting them on the same starting line; no matter how large the range of the original data is, after normalization it is adjusted to a unified scale.
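To make the first three steps concrete, here is a small worked example on a hypothetical batch of five values, X = (1, 2, 3, 4, 5). The numbers are chosen only for illustration, and the results are rounded to three decimals.

```latex
\begin{aligned}
\mu &= \tfrac{1}{5}(1 + 2 + 3 + 4 + 5) = 3 \\
\sigma^2 &= \tfrac{1}{5}\left[(-2)^2 + (-1)^2 + 0^2 + 1^2 + 2^2\right] = \tfrac{10}{5} = 2 \\
X' &= \frac{X - 3}{\sqrt{2 + \varepsilon}} \approx (-1.414,\ -0.707,\ 0,\ 0.707,\ 1.414)
\end{aligned}
```

With ε = 1e-8 the correction is far below the rounding shown here; ε only matters when the variance itself is close to zero.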

Finally, we perform scaling and shifting using the formula Y = γX’ + β, where γ and β are two learnable parameters (one pair per feature) that are trained together with the rest of the network. They act like “makeup artists” tailored for the data: strict standardization would limit what a layer can represent, so γ and β restore the expressive ability of the data by letting the model adjust the scale and offset of each feature as needed. In particular, with γ = √(σ² + ε) and β = μ, the layer can recover the original values whenever that is the best choice.

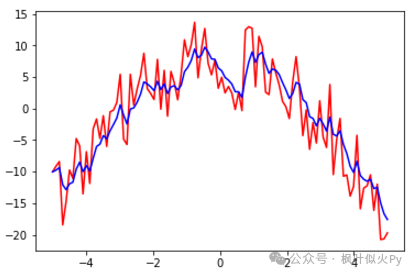
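The four steps above can be combined into one forward pass over a whole batch. The sketch below assumes the batch is stored as an N × D matrix (N samples, D features) and covers training-mode statistics only. The names Matrix and batchNormForward are illustrative, not part of any library.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Illustrative alias: batch[i][j] is feature j of sample i.
using Matrix = std::vector<std::vector<double>>;

// Normalizes each feature column over the batch, then applies
// Y = gamma * X' + beta with one (gamma, beta) pair per feature.
Matrix batchNormForward(const Matrix& batch,
                        const std::vector<double>& gamma,
                        const std::vector<double>& beta,
                        double epsilon = 1e-8) {
    assert(!batch.empty());
    const std::size_t n = batch.size();
    const std::size_t d = batch[0].size();
    assert(gamma.size() == d && beta.size() == d);

    Matrix out(n, std::vector<double>(d, 0.0));
    for (std::size_t j = 0; j < d; ++j) {
        // Step 1: mean of feature j over the batch.
        double mean = 0.0;
        for (std::size_t i = 0; i < n; ++i) {
            assert(batch[i].size() == d);
            mean += batch[i][j];
        }
        mean /= static_cast<double>(n);

        // Step 2: population variance of feature j.
        double variance = 0.0;
        for (std::size_t i = 0; i < n; ++i) {
            const double diff = batch[i][j] - mean;
            variance += diff * diff;
        }
        variance /= static_cast<double>(n);

        // Steps 3 and 4: normalize, then scale and shift.
        const double invStd = 1.0 / std::sqrt(variance + epsilon);
        for (std::size_t i = 0; i < n; ++i) {
            const double normalized = (batch[i][j] - mean) * invStd;
            out[i][j] = gamma[j] * normalized + beta[j];
        }
    }
    return out;
}
```

With γ = 1 and β = 0 for every feature, the output is simply the standardized batch; other values let the layer choose a different scale and offset per feature. In a real network, γ and β would be updated by the optimizer, which this sketch does not show.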

With this series of operations, batch normalization completes its mission. Without it, the inputs to each layer keep shifting during training as the parameters of the earlier layers are updated, and the deeper the network, the more “unruly” these distributions become, sometimes concentrated in one area and sometimes scattered elsewhere. This can contribute to problems like vanishing or exploding gradients and make training difficult, like a small boat losing direction in turbulent seas, swaying and struggling to move forward. With batch normalization, the scale of each layer’s inputs is kept under control and gradients tend to be better behaved, allowing the model to sail more smoothly towards a good solution.

(3) Code Example: C++ Implementation of Batch Normalization

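Having understood the principles, let’s look at how to implement batch normalization using C++ code. Here is a simple example that covers the mean, variance, and normalization steps for a single feature (the learnable parameters γ and β are omitted for brevity):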

```cpp
#include <cmath>
#include <iostream>
#include <vector>

// Batch normalization of a single feature across a batch.
// Returns (x - mean) / sqrt(variance + epsilon) for every element;
// the learnable scale and shift (gamma, beta) are omitted here.
std::vector<double> batchNormalization(const std::vector<double>& input) {
    std::vector<double> output;
    if (input.empty()) {
        return output;  // avoid dividing by zero on an empty batch
    }
    output.reserve(input.size());

    const double epsilon = 1e-8;  // keeps the denominator away from zero
    const double n = static_cast<double>(input.size());

    // Calculate mean
    double mean = 0.0;
    for (double value : input) {
        mean += value;
    }
    mean /= n;

    // Calculate (population) variance
    double variance = 0.0;
    for (double value : input) {
        const double diff = value - mean;
        variance += diff * diff;
    }
    variance /= n;

    // Normalize
    const double stdDev = std::sqrt(variance + epsilon);
    for (double value : input) {
        output.push_back((value - mean) / stdDev);
    }
    return output;
}

int main() {
    std::vector<double> input = {1.0, 2.0, 3.0, 4.0, 5.0};
    std::vector<double> normalizedOutput = batchNormalization(input);

    // Output normalized results
    for (double value : normalizedOutput) {
        std::cout << value << " ";
    }
    std::cout << std::endl;
    return 0;
}
```

In this code, we define a function named batchNormalization that takes an input vector of type std::vector<double>