PISA stands for the Programme for International Student Assessment, initiated and organized by the Organisation for Economic Co-operation and Development (OECD). The assessment targets 15-year-old students who are nearing the completion of basic education (typically 9th or 10th grade), focusing on reading literacy, scientific literacy, and mathematical literacy, as well as interdisciplinary skills such as self-regulation and problem-solving abilities. Since 2000, PISA assessments have been conducted every three years, with a total of five assessments completed to date.

TIMSS stands for the Trends in International Mathematics and Science Study, organized by the International Association for the Evaluation of Educational Achievement (IEA). TIMSS focuses on understanding students’ performance in mathematics and science education and the factors influencing these performances, covering the curriculum from the middle of primary school, through lower secondary school, to the final year of secondary school. Since its first assessment in 1995, TIMSS has conducted five assessments.

This section introduces the youth scientific literacy surveys and assessments based on the PISA and TIMSS models from 2000 to 2014.

(1) Scientific Literacy Assessment Based on the PISA Model

The scientific literacy assessment framework of PISA 2006 includes three aspects: context, knowledge, and competence. The “context” involves specific situations related to science and technology, including areas such as life and health, earth and environment, and technology, with each area further divided into three levels: individual, family and community, and global, forming a contextual system. “Knowledge” refers to scientific knowledge and knowledge about science, where the former includes knowledge of material systems, life systems, earth and space systems, and technological systems; the latter includes aspects of scientific inquiry and scientific explanation. “Competence” includes the ability to identify scientific problems, explain scientific phenomena, and use scientific evidence, mainly involving cognitive processes such as inductive/deductive reasoning, critical thinking, and integrative thinking, as well as mathematical modeling. Additionally, PISA also focuses on students’ attitudes towards science, which mainly include interest in science, support for scientific research, and a sense of responsibility towards resources and the environment.① Clearly, PISA includes interest in science as part of scientific attitudes.

PISA uses a paper-and-pencil testing format, with question types including multiple-choice questions, multiple-answer questions, fixed-answer questions, and open-ended questions, with open-ended questions accounting for about one-third of the total. The questions are grouped, with each group providing a realistic life context through a narrative and designing 2-5 questions based on that context.

In 2006, to learn from PISA’s advanced assessment concepts, theories, and techniques, the Examination Center of the Ministry of Education launched the PISA 2006 China pilot study, aiming to establish evaluation standards, methods, and techniques suited to China’s national conditions. In 2009 and 2012, the Examination Center organized samples from 10 provinces (cities) in eastern, central, and western China to participate in the PISA 2009 and PISA 2012 pilot studies. In 2009, Shanghai officially participated in the PISA project and achieved the highest score globally.

In 2007, Qin Haozheng and Qian Yuanwei constructed a structural model of five competencies in scientific literacy based on the PISA scientific literacy assessment framework and conducted a survey of scientific literacy among middle school students in Shanghai using a self-developed test. The five competencies are reading ability (R), mathematical ability (M), scientific explanation ability (Pe), scientific inquiry ability (S), and problem-solving ability (P).② Clearly, this model incorporates both reading literacy and mathematical literacy from the PISA assessment into scientific literacy.

Qin Haozheng and Qian Yuanwei selected a sample of 1180 middle school students who had completed 7, 8, and 9 years of compulsory education, using a self-developed assessment questionnaire for the survey. The assessment questionnaire included four situational questions covering energy in life activities, batteries, holiday travel, and online shopping, with each situational question designed to test five competencies. The five competencies are divided into two levels: the first level includes reading ability, mathematical ability, and problem-solving ability, with about 30 test points for each; the second level includes scientific explanation and prediction, and scientific inquiry, with about 10 test points for each. In total, there are 110 test points. The test results indicate: (1) The average score of students is 55.5 points (with a score rate of 0.5), with a standard deviation of 19.5 points, and as the grade increases, the average score of students significantly improves, while the standard deviation gradually decreases; (2) In terms of gender differences, in the first year of junior high school, girls’ average scores are higher than boys’ (by about 5.69 points), but by the third year, boys’ scores are slightly higher than girls’ (by about 1.21 points); (3) In terms of the five competencies, the score rate for reading ability (R) is 0.6, for mathematical ability (M) is 0.36, for problem-solving ability (P) is 0.75, for scientific explanation ability (Pe) is 0.36, and for scientific inquiry ability (S) is 0.51.

Specifically, regarding the content of each competency, in reading comprehension ability, middle school students have strong skills in acquiring explicit information and expressing reading results in words but have weak skills in acquiring implicit information; in mathematical tool ability, middle school students are not strong in applying mathematical tools in context, and their ability to organize and analyze data is relatively weak; in scientific explanation and prediction abilities and scientific inquiry, middle school students are generally insufficient.
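The test-point total and the overall score rate reported for the Qin and Qian survey can be checked with a few lines of arithmetic. The sketch below is illustrative only: the variable names are hypothetical, and the per-competency counts are the approximate figures ("about 30" and "about 10") given in the survey description, not exact values.

```python
# Approximate test points per competency, as described for the survey.
# The names are illustrative; the counts are the "about 30" / "about 10" figures.
test_points = {
    "reading (R)": 30,
    "mathematical (M)": 30,
    "problem-solving (P)": 30,
    "scientific explanation (Pe)": 10,
    "scientific inquiry (S)": 10,
}

# Maximum attainable score is the sum of all test points.
total_points = sum(test_points.values())

# Score rate = average score / maximum attainable score.
average_score = 55.5
score_rate = average_score / total_points

print(total_points)
print(round(score_rate, 2))
```

Under these assumed counts, the total matches the 110 test points stated in the report, and the average of 55.5 points corresponds to a score rate of roughly one half.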

In 2012, Hu Yongmei, in a project commissioned by the China Association for Science and Technology Youth Science Center, constructed a youth scientific literacy assessment framework based on PISA 2006’s model, focusing on four aspects: context, knowledge, competence, and attitude.③ In the “context” aspect, it includes personal contexts, social contexts, and global contexts closely related to science and technology; in the “knowledge” aspect, it mainly includes content such as mathematics, material science, life science, earth and space science, science and technology, and information; in the “competence” aspect, it mainly includes three levels: identifying scientific events, scientifically explaining various phenomena, and using scientific evidence to solve practical problems; in the “attitude” aspect, it mainly includes three aspects: interest in science, views and attitudes towards scientific practice, and scientific values, as well as understanding of science-technology-society (STS).

Unlike the previously mentioned Miller model, three-dimensional target model, and four-element model, the four aspects proposed by the PISA 2006 model are not parallel or equivalent. First, context is not the subject of scientific literacy assessment but the background on which scientific literacy assessment items are based, which permeates the examination of scientific attitudes and scientific knowledge and requires certain competencies to solve practical problems. Second, scientific knowledge and attitudes influence each other; on one hand, understanding and mastering scientific knowledge helps enhance interest in science, establish correct scientific values, and form good scientific attitudes; on the other hand, good scientific attitudes can promote the learning of scientific knowledge. Finally, competence is the main line and core of the youth scientific literacy assessment framework, and having good scientific attitudes and rich scientific knowledge can enhance scientific competence.

In contrast to the PISA 2006 assessment framework, Hu Yongmei’s framework includes mathematics as part of scientific knowledge in the scientific literacy assessment and views the three competencies as three levels. Additionally, the three aspects included in scientific attitudes are also determined based on China’s science curriculum standards. Based on this framework, Hu Yongmei and others developed the “China Youth Scientific Literacy Assessment Questionnaire” and analyzed the overall quality of the scientific literacy assessment questionnaire using data from high school students in six provinces and cities: Sichuan, Hubei, Liaoning, Gansu, Fujian, and Beijing. The analysis results indicate that the overall difficulty of the questionnaire is 0.412, reliability is 0.817, discrimination is 0.301, and validity is 0.508, which basically meets the requirements for large-scale assessments.
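The report does not say how the difficulty, discrimination, and reliability figures were computed. As a rough orientation only, the sketch below shows one common classical-test-theory way to obtain such indices: difficulty as the mean score rate, discrimination as the gap between high-scoring and low-scoring groups, and reliability as Cronbach's alpha. The function names, the 27% grouping fraction, and the toy data are all assumptions made for illustration and are not taken from Hu Yongmei's study.

```python
from statistics import mean, pvariance


def item_difficulty(item_scores, max_score=1):
    """Difficulty as the mean score rate on one item (higher means easier)."""
    return mean(item_scores) / max_score


def item_discrimination(item_scores, total_scores, fraction=0.27):
    """Item score gap between the top and bottom groups ranked by total score."""
    n = max(1, int(len(total_scores) * fraction))
    order = sorted(range(len(total_scores)), key=lambda i: total_scores[i])
    low, high = order[:n], order[-n:]
    return mean([item_scores[i] for i in high]) - mean([item_scores[i] for i in low])


def cronbach_alpha(score_matrix):
    """Cronbach's alpha; score_matrix[s][i] is student s's score on item i."""
    k = len(score_matrix[0])
    item_vars = [pvariance([row[i] for row in score_matrix]) for i in range(k)]
    total_var = pvariance([sum(row) for row in score_matrix])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Toy data (hypothetical): 6 students, 4 items scored 0 or 1.
toy_scores = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
    [1, 1, 1, 0],
]
toy_totals = [sum(row) for row in toy_scores]
first_item = [row[0] for row in toy_scores]

print(item_difficulty(first_item))
print(item_discrimination(first_item, toy_totals))
print(cronbach_alpha(toy_scores))
```

A questionnaire-level difficulty or discrimination figure such as those quoted above would then typically be an average over all items, though the report does not confirm that this is how its values were aggregated.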

Jin Zhifeng and Xue Haiping analyzed scientific literacy survey data from 2497 students in five provinces: Sichuan, Hubei, Liaoning, Gansu, and Fujian, with the following results: (1) The average score of students’ scientific literacy is 60.94 points, with average scores for identifying problems, explaining phenomena, and using evidence being 62.73, 65.68, and 54.58 respectively; (2) Students participating in science and subject competitions have an average score of 63.72, higher than the 57.96 of non-participating students, with participating students significantly outperforming non-participants in all three competency dimensions; (3) The average score of girls in scientific literacy is 54.95 points, which is 6.89 points lower than boys’ average score, and the gap is especially pronounced in “explaining phenomena” and “using evidence”; (4) By region, students’ scientific literacy is highest in the east, followed by the central region, and lowest in the west; (5) By city type, it is highest in provincial capitals, followed by general cities, and lowest in county towns.④

In 2012, the Zhejiang Provincial Examination Institute, following PISA 2012 requirements, sampled students from 57 schools across the province, including third-year junior high school students and first-year high school students, with an effective sample size of 2382. The test results indicate that the average score of scientific literacy among students in Zhejiang Province is 582 points, second only to Shanghai’s 596 points.⑤

(2) Scientific Literacy Assessment Based on the TIMSS Model

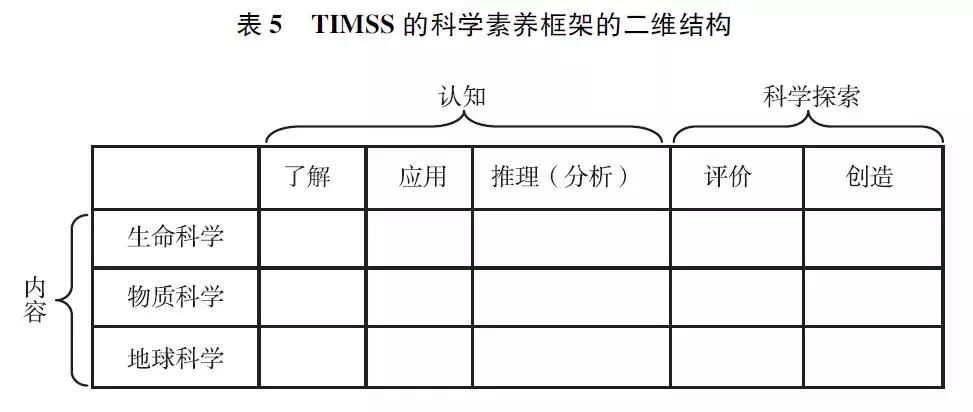

Since 1995, TIMSS has conducted five tests. Based on previous tests, TIMSS 2011 improved the scientific assessment framework. In the previous assessment framework, “scientific inquiry” was only a component of the “cognitive” dimension (referred to as “reasoning and application”), while TIMSS 2011 categorized “scientific inquiry” as an independent dimension alongside the “content” and “cognitive” dimensions, forming three assessment dimensions.⑥ Among these, the “content” dimension details the content areas and topics involved in scientific assessment, including life science, material science, and earth science; the “cognitive” dimension describes the levels of thinking students should achieve in assessments, mainly including understanding, application, and reasoning; the “scientific inquiry” dimension includes five abilities: posing questions and hypotheses, designing investigation plans, presenting data, analyzing and interpreting data, and drawing conclusions and explanations.

From the above structure, it can be seen that the TIMSS 2011 scientific literacy assessment framework is built on the structure of the revised Bloom’s taxonomy of educational objectives. In 1995, at the suggestion of measurement and evaluation expert David R. Krathwohl and curriculum theorist Lorin W. Anderson, a Bloom’s taxonomy revision group was formed. After six years of writing and revision, the revised version of Bloom’s taxonomy was established.⑦ This taxonomy system constructs educational objectives based on “knowledge categories” and “cognitive processes”. The “knowledge categories” include factual knowledge, conceptual knowledge, procedural knowledge, and metacognitive knowledge (also known as reflective cognitive knowledge), while the “cognitive processes” include six levels: remembering, understanding, applying, analyzing, evaluating, and creating. The two dimensions work together to form the following two-dimensional model (see Table 4).
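The two-dimensional model can be pictured as a grid in which every cell pairs one knowledge category with one cognitive process. The sketch below builds such a grid using only the category and process names listed in the text; the dictionary layout and the example objective are hypothetical illustrations and do not reproduce the contents of the original table.

```python
from itertools import product

# Dimension labels as named in the revised taxonomy.
knowledge_categories = ["factual", "conceptual", "procedural", "metacognitive"]
cognitive_processes = [
    "remembering", "understanding", "applying",
    "analyzing", "evaluating", "creating",
]

# Each cell pairs one knowledge category with one cognitive process
# and holds the educational objectives classified there.
taxonomy_grid = {
    (knowledge, process): []
    for knowledge, process in product(knowledge_categories, cognitive_processes)
}

# Hypothetical objective, included only to show how a cell is used.
taxonomy_grid[("conceptual", "applying")].append(
    "Use the concept of density to predict whether an object floats"
)

print(len(taxonomy_grid))
```

With four knowledge categories and six cognitive processes, the grid has 24 cells, and any single objective is located by naming both of its coordinates.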

Viewed against the two-dimensional structure of the revised Bloom’s taxonomy, the content dimension of TIMSS corresponds to “knowledge categories”, but is decomposed by knowledge field rather than by knowledge category; the cognitive dimension corresponds to “cognitive processes”, but only includes understanding (comprehension), application, and analysis (reasoning), while evaluation and creation correspond to the “scientific inquiry” dimension in TIMSS. Based on this analysis, TIMSS’s scientific literacy framework can be described in a two-dimensional structure as shown in Table 5.
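The correspondence described in this paragraph can be written down as a simple lookup. The sketch below encodes only the pairings stated in the text; the variable names are hypothetical, and the treatment of "remembering" as unmapped reflects the fact that the text names no TIMSS counterpart for it, not a claim about TIMSS itself.

```python
# Cognitive processes of the revised Bloom's taxonomy, in order.
bloom_processes = [
    "remembering", "understanding", "applying",
    "analyzing", "evaluating", "creating",
]

# Pairings stated in the text: Bloom process -> (TIMSS dimension, TIMSS level).
bloom_to_timss = {
    "understanding": ("cognitive", "understanding"),
    "applying": ("cognitive", "application"),
    "analyzing": ("cognitive", "reasoning"),
    "evaluating": ("scientific inquiry", None),
    "creating": ("scientific inquiry", None),
}

# The content dimension is split by knowledge field, not by knowledge category.
timss_content_fields = ["life science", "material science", "earth science"]

# Bloom processes for which the text gives no TIMSS counterpart.
unmapped = [p for p in bloom_processes if p not in bloom_to_timss]

for process in bloom_processes:
    print(process, "->", bloom_to_timss.get(process, "not mapped in the text"))
```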

In 2012, Gan Lu and He Shusheng drew on TIMSS’s assessment framework and used exploratory factor analysis to compile a questionnaire for assessing scientific literacy among middle school students, testing a sample of first-year high school students in Guangzhou and comparing the results with the international average for the same grade.⑧ Gan Lu and He Shusheng constructed the scientific literacy assessment framework from the following five aspects: understanding simple information; understanding complex information; using tools, standard procedures, and scientific processes; theorizing, analyzing, and solving problems; and exploring the natural world. These five dimensions are derived from the “cognitive” and “scientific inquiry” dimensions, where “understanding simple information”, “understanding complex information”, and “using tools, standard procedures, and scientific processes” correspond to the “cognitive” dimension, while “theorizing, analyzing, and solving problems” and “exploring the natural world” correspond to the “scientific inquiry” dimension. The survey results indicate that high school students in Guangzhou generally perform better than the international average, particularly excelling in “understanding complex information”, “using tools, standard procedures, and scientific processes”, and “exploring the natural world”, but students’ scientific literacy levels develop unevenly across different subject areas.

In 2013, Guo Qixiang compared the scientific literacy levels of students at various levels in mainland China and Taiwan using the “Scientific Literacy in Media” (SLM) assessment tool designed by the research team from National Taiwan Normal University. This assessment tool examines students’ scientific literacy levels from two aspects: “whether they understand frequently occurring scientific concepts in the media” and “whether they can apply scientific knowledge to solve everyday problems”. The test includes 21 conceptual questions and 29 situational questions, each worth 1 point, for a total of 50 points. Among them, the conceptual questions mainly assess students’ understanding of frequently occurring scientific concepts in the media; situational questions mainly assess students’ ability to use scientific concepts and knowledge to solve everyday problems. The test content covers four fields: biology, earth science, physics, and chemistry. Clearly, this assessment tool’s framework can also be analyzed according to the two-dimensional model composed of “knowledge fields” and “cognition”. Compared to TIMSS’s assessment framework, it simply does not include requirements for “scientific inquiry”.
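The scoring scheme of the SLM test is simple enough to sketch directly: one point per question, with separate score rates for the two question types. The function below is a minimal illustration based only on the question counts given above; the function name, the return format, and the example inputs are assumptions, not part of the SLM tool.

```python
N_CONCEPTUAL = 21   # conceptual questions, 1 point each
N_SITUATIONAL = 29  # situational questions, 1 point each


def slm_score(conceptual_correct, situational_correct):
    """Return the total SLM score and the score rate for each question type."""
    if not 0 <= conceptual_correct <= N_CONCEPTUAL:
        raise ValueError("conceptual_correct out of range")
    if not 0 <= situational_correct <= N_SITUATIONAL:
        raise ValueError("situational_correct out of range")
    return {
        "total": conceptual_correct + situational_correct,
        "conceptual_rate": conceptual_correct / N_CONCEPTUAL,
        "situational_rate": situational_correct / N_SITUATIONAL,
    }


# Hypothetical student: 14 conceptual and 20 situational questions correct.
example = slm_score(14, 20)
print(example["total"])
print(round(example["conceptual_rate"], 2), round(example["situational_rate"], 2))
```

Reporting a score rate per question type, rather than only the total, is what allows conceptual and situational performance to be compared even though the two parts contain different numbers of questions.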

The survey sampled 206 first-year junior high school students, 240 high school students, and 313 university students in mainland China, and 803 first-year junior high school students, 301 high school students, and 239 university students in Taiwan. The survey results indicate: (1) The total SLM score of first-year junior high school and first-year high school students in mainland China (30.23) is slightly lower than that of corresponding grade students in Taiwan (32.68), but there is no significant difference in the total SLM scores of university students between mainland China and Taiwan; (2) Regarding question types, mainland students score lower than Taiwan students on both conceptual questions (score rate 0.49 versus 0.65) and situational questions (score rate 0.64 versus 0.79); (3) In terms of scores across subjects, overall, mainland students’ scores in biology, earth science, and chemistry are significantly lower than those of Taiwan students. Additionally, this survey also found that students’ interest in natural sciences, mathematics, and social sciences has a significant positive correlation with their scientific literacy levels; the amount of news media used by students is also significantly correlated with their scientific literacy levels.

① OECD: PISA 2006 Assessment Framework: Key Competencies in Reading, Mathematics and Science, Paris: OECD, 2006.

② Qin Haozheng, Qian Yuanwei: “Shanghai Youth Scientific Literacy Survey Report”, Research on Educational Development, 2008, Issue 24.

③ Hu Yongmei: “Development and Quality Analysis of Youth Scientific Literacy Assessment Tools”, Educational Academic Monthly, 2012, Issue 3.

④ Jin Zhifeng, Xue Haiping: “Investigation and Analysis of Scientific Quality of High School Students in China”, Chinese Youth Research, 2014, Issue 10.

⑤ Feng Chenghuo: “Zhejiang Basic Education from the Perspective of PISA: Performance and Insights of Zhejiang Students in the PISA 2012 Pilot Test”, Zhejiang Education Science, 2014, Issue 5.

⑥ Yue Zonghui, Zhang Junpeng: “Overview, Changes, and Implications of the TIMSS 2011 Scientific Assessment Framework”, Curriculum and Teaching, 2012, Issue 12.

⑦ L. W. Anderson, D. R. Krathwohl: A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives, New York, NY: Longman, 2001.

⑧ Gan Lu, He Shusheng: “Survey and Analysis of High School Students’ Scientific Literacy: A Case Study of Guangzhou City”, Educational Guide (First Half Month), 2012, Issue 7.

Authors: Li Yifei, Zhou Lijun

Source: Excerpt from the Blue Book of Science Popularization Education: China Science Education Development Report (2015) (Chief Editors: Luo Hui, Wang Kangyou; Social Sciences Academic Press), Investigation Section “Youth Scientific Literacy Survey (2000-2014)”