Author | Bruno Menard

Through AI and embedded vision, next-generation digital image processing technologies can enhance the efficiency of machine vision systems, enabling devices to adapt to uncontrolled real-world conditions and bring continuous learning to machines in the field.

Digital image processing has transformed how we observe the world around us in two dimensions. Early digital image processing needed significant computational power just to handle low-resolution images, yet developments in the 1960s gave us the first digitally processed images of the Moon, changing our perception of Earth’s natural satellite.

Since then, advances in traditional digital image processing have produced essential technologies, from medical visualization techniques to machine vision systems on the factory floor. Despite these innovations, traditional techniques now have limited room for improvement. In contrast, artificial intelligence (AI) and more sophisticated embedded vision technologies are pushing digital image processing systems to a whole new level.

What advantages does AI-based image processing have over traditional image processing, and how can you utilize it if you are not an AI expert? What are the current and future applications of AI and embedded vision? This article will delve deeper into these questions.

01

Comparison of AI and Traditional Image Processing

If all image processing were conducted in controlled environments, such as indoor spaces with uniform lighting, shapes, and colors, we would hardly need AI. However, this is rarely the case, as most image processing occurs in the real world—in uncontrolled environments where varying shapes and colors of objects are the norm rather than the exception.

AI can tolerate significant variations in environmental lighting, angles, rain, dust, occlusion, and other environmental factors. For example, if you need to capture images of cars driving on the street over a 24-hour period, the lighting and angles of image capture will constantly change.

As another example, suppose you are a large tomato grower who needs to package tomatoes in three-packs for distribution to grocery store warehouses. The tomatoes in each pack must be uniform in shape and color to meet the quality control standards of warehouse customers. Only an AI-based imaging system can cope with the high natural variability in shape and color and pick out just those tomatoes that meet the ripeness requirements. This sounds great, but how do you achieve it if you are neither an AI expert nor an image processing expert?

02

AI-Based Image Processing Technologies

GUI-based AI tools for training neural networks on 2D images have been available for a few years. Before they arrived, training neural networks for machine vision inspection or intelligent transportation platforms required extensive expertise in machine learning and data science, which was an expensive and time-consuming investment for any company. Fortunately, AI tools have evolved with the times. So, what features should an AI GUI tool have?

· Flexibility: Find a GUI tool that allows you to import your own image samples and train neural networks to perform classification, object detection, segmentation, and denoising, thus benefiting from greater flexibility and customization.

· Local training: Use a tool that lets you train models on your own PC without connecting to the cloud, providing the higher level of data privacy that many industries now require.

· Export for Inference: Choose a tool that allows you to export model files to inference tools so you can perform operations on live video streams.

· Intuitive: Look for a tool that visualizes model performance with numerical metrics and heat maps.

· Utilize Pre-trained Models: Use pre-trained models included with the software package to reduce training workload.

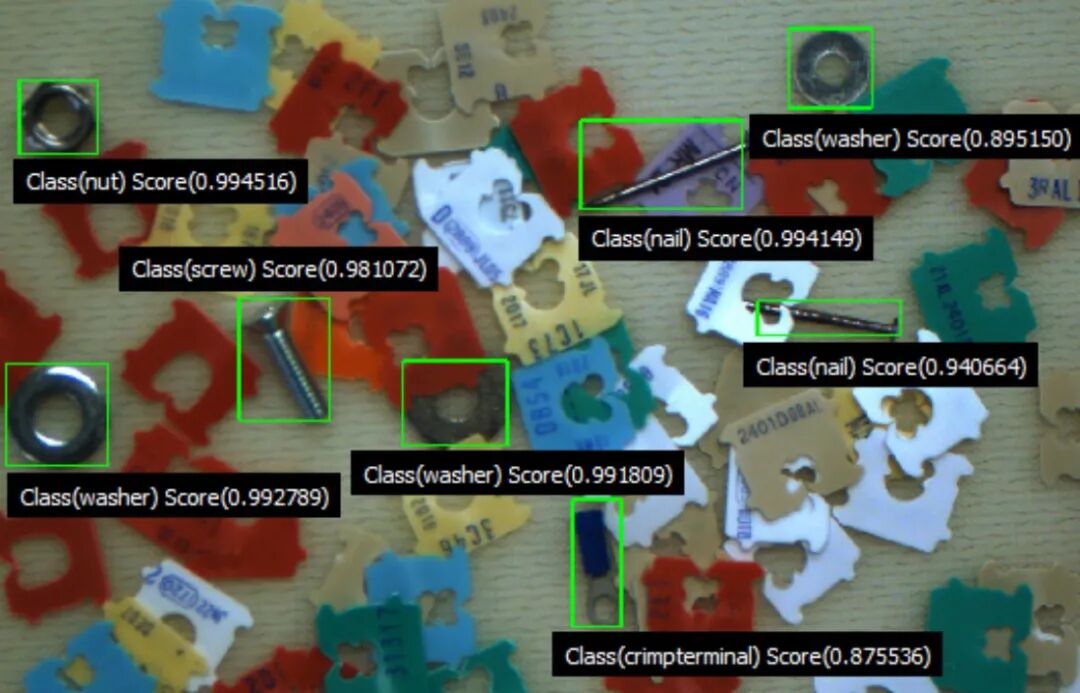

Let’s look at an example. You need to find and identify specific hardware parts (nuts, screws, nails, and washers), but these parts are crammed onto a reflective surface with many colorful labels (Figure 1). Achieving the required robustness with traditional image processing would be very time-consuming, whereas AI tools can provide an object detection algorithm that is easily trained with just a few dozen samples. This type of software tool lets you build robust and accurate localization and detection systems more quickly and easily, reducing manual development time and the associated costs.

▲Figure 1: Achieving the required high robustness with traditional image processing would be very time-consuming, but AI tools can provide an object detection algorithm that improves efficiency and reduces associated costs.
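One common way to quantify how accurately a detector localizes a part is intersection over union (IoU), the overlap between a predicted box and a hand-annotated box divided by their combined area. The sketch below is illustrative only: the `(x1, y1, x2, y2)` box format and the sample coordinates are assumptions, not taken from any particular tool.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping rectangle (zero if boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# Hypothetical annotated box for a washer and a predicted box from the model.
annotated = (0, 0, 10, 10)
predicted = (5, 5, 15, 15)
overlap = iou(annotated, predicted)   # 25 / 175
far_away = iou(annotated, (20, 20, 30, 30))
```

An IoU of 1.0 means the boxes coincide and 0.0 means they do not overlap at all; the threshold at which a detection counts as correct is a choice made per application.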

Intelligent Transportation Systems (ITS) also align perfectly with AI image processing systems. From toll management and traffic safety monitoring to speeding and red-light enforcement, AI software can be used to accurately locate, segment, and identify vehicles and other moving and stationary objects.

03

Development Process of AI Software Tools

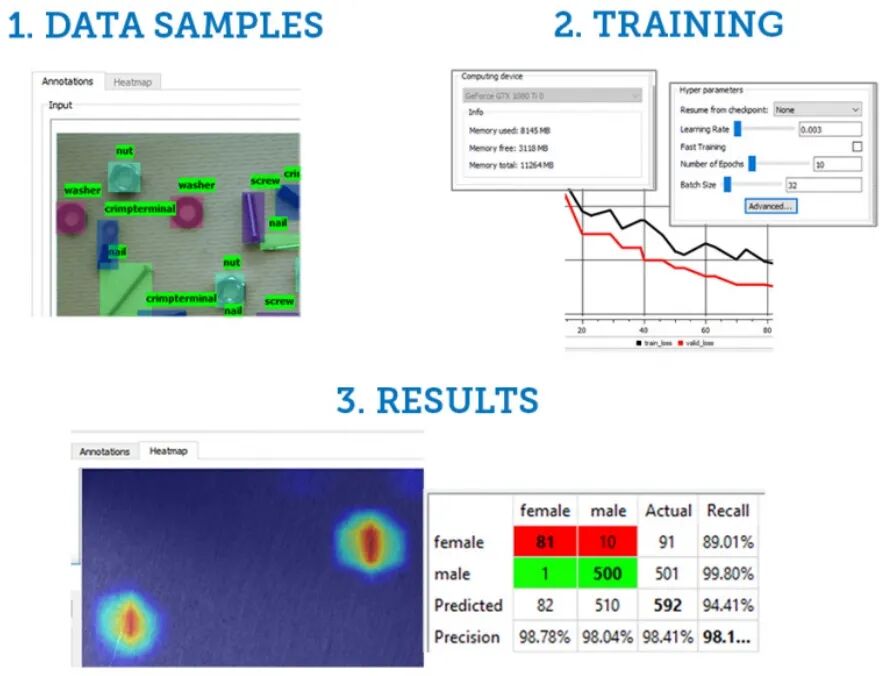

Once you have AI software tools in hand, the development process typically involves several main steps.

First, you create the dataset and then edit and refine it. This means obtaining training images and providing the annotations that correspond to them. Generally, you will import the images from a folder on a remote server, the local network, or your PC. Keep in mind that the quality of the model depends on the quality of the dataset used to train it. You will also need to choose a graphics processing unit (GPU) with sufficient power to handle the image processing.

Next, you pass the data through the training engine to create the model, and then test the model and review the results. This includes using a confusion matrix to show false positives and false negatives, as well as visualizing heat maps that display the activation of the neural network. Once the model is trained and tested, it can be exported as a model file for use in image processing applications.
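To make the confusion matrix step concrete, here is a minimal sketch that counts true and false positives and negatives for one class and derives precision and recall from them. The labels are made-up test results for the tomato example, and the function names are hypothetical rather than part of any specific tool.

```python
from collections import Counter


def confusion_counts(y_true, y_pred, positive):
    """Count TP, FP, FN, and TN, treating one class as 'positive'."""
    counts = Counter()
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            counts["tp"] += 1
        elif pred == positive:
            counts["fp"] += 1   # predicted positive, actually negative
        elif truth == positive:
            counts["fn"] += 1   # missed a real positive
        else:
            counts["tn"] += 1
    return counts


def precision_recall(counts):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    tp, fp, fn = counts["tp"], counts["fp"], counts["fn"]
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical ground truth and model output for eight test images.
y_true = ["ripe", "ripe", "unripe", "ripe", "unripe", "unripe", "ripe", "unripe"]
y_pred = ["ripe", "unripe", "unripe", "ripe", "ripe", "unripe", "ripe", "unripe"]

counts = confusion_counts(y_true, y_pred, positive="ripe")
precision, recall = precision_recall(counts)
```

In this made-up run there is one false positive (an unripe tomato accepted) and one false negative (a ripe tomato rejected), which is exactly the kind of breakdown a confusion matrix is meant to surface.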

04

Plug-and-Play Embedded Vision Features

Embedded vision is a broad term that is interpreted in many different ways. There are various options available, including embedded vision platforms with built-in AI, and each user should weigh these options (including their costs) before making a decision.

Embedded vision may include cameras with embedded processors or field-programmable gate arrays (FPGAs), programmable vision sensors, smart cameras, or general-purpose machines with flexible embedded applications. However they are deployed, embedded vision applications are known for their small size, light weight, and low power consumption.

▲Figure 2: Main steps in the AI tool development process.

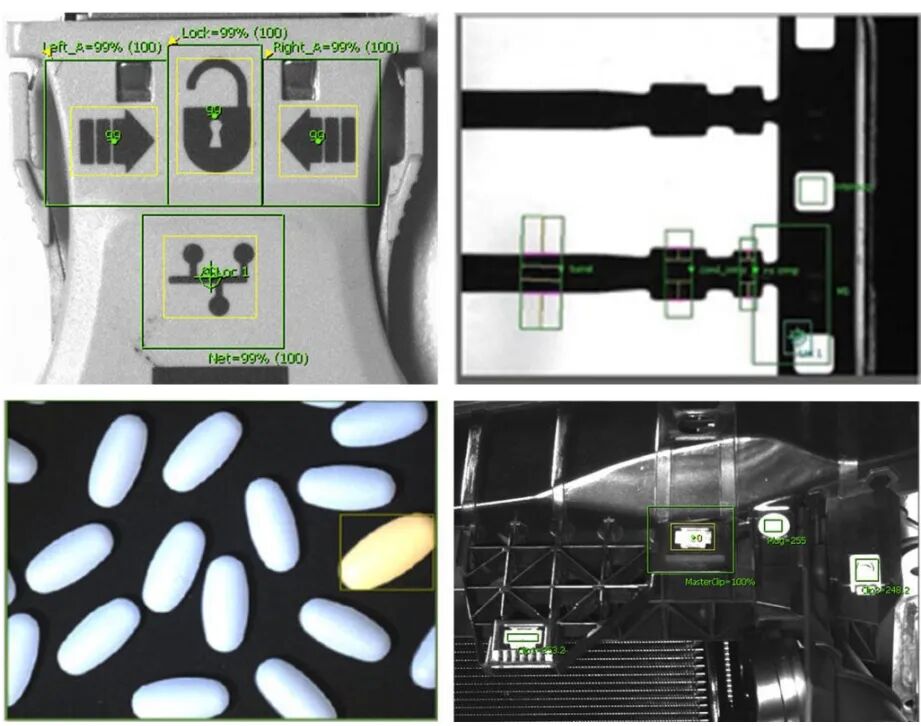

Embedded vision applications can also reduce the amount of data transmitted from the camera to the host PC, and with it the data volume moving through the rest of the pipeline. They offer other advantages as well, including reduced costs (embedded vision applications do not require expensive GPU cards in PCs), predictable performance, offline operation (no network connection required), and ease of setup. If you are looking for an integrated product that is easy to set up and deploy in the field, embedded vision can provide tangible benefits. It is particularly suitable for industrial applications, such as error-proofing and identification processes.

Applications in Error-Proofing Processes

· Pattern matching, checking presence and position;

· Presence/absence/counting of features or parts;

· Measurement of features or parts;

· Verification of parts or assemblies by color.
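As a rough illustration of a presence/absence/counting check, the sketch below counts connected blobs in a binary mask and compares the result with an expected part count. It assumes the image has already been thresholded into 0s and 1s; the tiny mask, the expected count, and the function name are all hypothetical.

```python
from collections import deque


def count_parts(mask):
    """Count 4-connected regions of 1s in a non-empty binary mask (list of rows)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                # Found an unvisited foreground pixel: flood-fill its region.
                count += 1
                seen[r][c] = True
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count


# Hypothetical thresholded image: 1 = part pixel, 0 = background.
mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 1, 0],
    [0, 0, 0, 1, 0],
    [0, 1, 0, 0, 0],
]
EXPECTED_PARTS = 3  # assumed count for a complete assembly

found = count_parts(mask)
status = "PASS" if found == EXPECTED_PARTS else "FAIL"
```

A production system would typically add a minimum-area filter so that noise pixels are not counted as parts.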

Applications in Identification Processes

· Product verification: reading product codes to avoid label confusion;

· Mark verification: validating product type, batch, date code;

· Quality verification: checking labels, label positions, presence/absence of features;

· Assembly verification: tracking assembly history at each stage of manufacturing;

· Logistics: ensuring normal processes for receiving, picking, sorting, and shipping.

05

Integrating AI and Embedded Vision

Whether used separately or together, AI and embedded vision represent a leap forward in digital image processing. For example, a traffic application that enforces red lights and speed limits uses an embedded vision system to capture images in the most efficient way. It then uses AI to help the device operate reliably under different weather and lighting conditions. Is it raining or snowing? Is the light bright or dim? The implementation of AI enables devices to adapt to uncontrolled real-world conditions.

With AI and embedded vision, next-generation digital image processing technologies can determine whether a car has enough passengers to enter a carpool lane, whether the driver is using a phone, or whether the driver and passengers are wearing seatbelts. This level of intelligence can lead to more attentive driving and safer operation of vehicles.

▲Figure 3: Embedded vision is particularly suitable for industrial applications, such as in error-proofing and identification processes.

While advancements in sensing, processing, software technologies, and smart cameras are the main reasons for our progress in AI image processing and embedded vision systems, we must not overlook the rise of edge AI. Edge AI reduces costs and bandwidth as large amounts of data are not continuously sent to the cloud for processing, while also lowering latency, enhancing privacy, and improving application performance.
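The bandwidth argument can be checked with simple arithmetic. The sketch below compares streaming raw frames with sending only detection results from the edge device; every number in it (resolution, frame rate, detections per frame, bytes per detection) is an assumed example value, not a measurement.

```python
def raw_stream_bytes_per_s(width, height, bytes_per_pixel, fps):
    """Data rate when every uncompressed frame leaves the camera."""
    return width * height * bytes_per_pixel * fps


def result_stream_bytes_per_s(detections_per_frame, bytes_per_detection, fps):
    """Data rate when only inference results leave the device."""
    return detections_per_frame * bytes_per_detection * fps


# Assumed example: 1920x1080, 8-bit monochrome, 30 frames per second.
raw_rate = raw_stream_bytes_per_s(1920, 1080, 1, 30)

# Assumed example: 10 detections per frame, 32 bytes each (class, box, score).
result_rate = result_stream_bytes_per_s(10, 32, 30)

reduction_factor = raw_rate / result_rate

print(f"raw frames : {raw_rate / 1e6:.1f} MB/s")
print(f"results    : {result_rate / 1e3:.1f} kB/s")
print(f"reduction  : {reduction_factor:.0f}x")
```

Under these assumptions the raw stream is about 62 MB/s while the results need under 10 kB/s. Real systems often compress video before sending it, which narrows the gap but does not close it.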

And this is just the beginning of what can be achieved with these newer image processing technologies. Once we can bring “continuous learning” to machines in the field, we will have devices that learn automatically in real time. By allowing existing models to adapt to changes in context, continuous learning saves development teams countless hours of manual retraining in the lab.

A great example of this approach is using drones to monitor traffic at multiple altitudes. We can use images collected at a single altitude (e.g., 10 meters) to train the initial model. Once deployed in the field, the continuous learning algorithm kicks in, automatically “adjusting” the model as the drone flies at different altitudes.

Without a continuous learning algorithm, we would have to retrain the model every time the drone reaches a new altitude to maintain accurate performance. With continuous learning, the model can respond to changes in the apparent size of objects, their distance, and the drone’s viewing perspective. This on-the-fly learning approach greatly improves application performance.
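The following toy simulation illustrates the idea, not any specific continuous learning algorithm. It assumes a nearest-centroid classifier on a single feature (apparent vehicle length in pixels), a pinhole-style model in which apparent size is inversely proportional to altitude, and a simple self-training update that nudges the matched centroid toward each new observation. The vehicle lengths, camera constant, and update rate are all invented for the example.

```python
REAL_LENGTH_M = {"car": 4.5, "truck": 12.0}  # assumed vehicle lengths
PIXELS_PER_M_AT_1M = 100.0                   # assumed camera constant
TRAINING_ALTITUDE_M = 10                     # initial model trained here


def apparent_length(label, altitude_m):
    """Apparent length in pixels; shrinks in proportion to altitude."""
    return PIXELS_PER_M_AT_1M * REAL_LENGTH_M[label] / altitude_m


def classify(centroids, x):
    """Return the label whose centroid is closest to the observed length."""
    return min(centroids, key=lambda label: abs(centroids[label] - x))


def run(adapt, rate=0.5):
    """Fly from 10 m to 20 m in 1 m steps; return (correct, total)."""
    centroids = {label: apparent_length(label, TRAINING_ALTITUDE_M)
                 for label in REAL_LENGTH_M}
    correct = total = 0
    for altitude in range(10, 21):
        for truth in ("car", "truck"):
            x = apparent_length(truth, altitude)
            predicted = classify(centroids, x)
            correct += predicted == truth
            total += 1
            if adapt:
                # Self-training step: move the matched centroid toward x.
                centroids[predicted] += rate * (x - centroids[predicted])
    return correct, total


static_result = run(adapt=False)
adaptive_result = run(adapt=True)
```

In this noise-free setup the static model starts mistaking trucks for cars once the drone climbs past about 14 m, while the adapting model keeps both classes separated because its centroids follow the gradual change. Real deployments face noisy detections and the risk of reinforcing wrong predictions, which this sketch ignores.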
