This issue recommends Professor Lu Siliang’s Comprehensive Review of IoT Edge Computing for Machine Signal Processing and Fault Diagnosis (Part 1). With the continuous development of sensor technology, more and more sensors are being installed in machines and devices. These sensors generate massive amounts of “big data” during operation. However, some raw sensor data are corrupted by noise and carry limited useful information, and uploading large volumes of data consumes significant network bandwidth and continuously depletes cloud server storage and computing resources. Data uploading, signal processing, feature extraction, and fusion inevitably introduce delays that reduce the timeliness of fault detection and identification. The paper reviews edge computing methods for signal processing-based machine fault diagnosis from the perspectives of concepts, cutting-edge methods, case studies, and research outlook.

Basic Information of the Paper

Paper Title:

Edge Computing on IoT for Machine Signal Processing and Fault Diagnosis: A Review

Journal: IEEE Internet of Things Journal

Publication Date: January 2023

Paper Link:

https://doi.org/10.1109/JIOT.2023.3239944

Authors: Siliang Lu (a), Jingfeng Lu (a), Kang An (a), Xiaoxian Wang (b, c), Qingbo He (d)

Institutions:

a: College of Electrical Engineering and Automation, Anhui University, Hefei 230601, China;

b: College of Electronics and Information Engineering, Anhui University, Hefei 230601, China;

c: Department of Precision Machinery and Precision Instrumentation, University of Science and Technology of China, Hefei 230027, China;

d: State Key Laboratory of Mechanical System and Vibration, Shanghai Jiao Tong University, Shanghai 200240, China

Corresponding Author Email: [email protected]

Author Introduction:

Lu Siliang, born in 1987, PhD, Professor, PhD/Master Supervisor, Anhui Province Excellent Youth, Anhui Province Young Talent, Top 2% Global Scientist, IEEE Senior Member. He received his bachelor’s and doctoral degrees from the University of Science and Technology of China in 2010 and 2015, respectively.

His main research areas include dynamic testing and intelligent operation and maintenance of electromechanical complex systems, edge computing and embedded systems, information processing and artificial intelligence, robotics and industrial factory automation. He teaches courses such as “Principles and Applications of Sensors”, “Testing Technology and Data Processing”, “Robot Technology”, and “Scientific Paper Writing”.

He has led three National Natural Science Foundation projects (two general projects and one youth project), one Excellent Youth project and one youth project of the Anhui Province Natural Science Foundation, two open projects of the National Key Laboratory, and many school-enterprise cooperative development projects with State Grid, Chery Automobile, and China Machinery Industry Corporation.

He has published more than 100 academic papers, which have been cited over 3000 times, and five of them have been selected as ESI highly cited papers (top 1% globally). He has filed, been granted, or transferred more than 30 national invention patents. He serves as an associate editor of “IEEE Trans. Instrum. Meas.”, an authoritative journal in the field of instrumentation and measurement, as an editorial board member of “J. Dyn. Monit. Diagnost.”, and as a youth editorial board member of “Journal of Southwest Jiaotong University”. He has reviewed for more than 50 domestic and international journals in the fields of electromechanical signal processing and intelligent diagnosis, and is a peer review expert for the National Natural Science Foundation of China.

He has won the second prize of Shanghai Science and Technology Award, the second prize of Anhui Province Natural Science Award, the first prize of China Electrotechnical Society Science and Technology Award, and the excellent completion project of the National Natural Science Foundation Committee in the mechanical discipline.

He is a member of both the Fault Diagnosis Professional Committee and the Youth Committee of the China Vibration Engineering Society, a director of the Rotor Dynamics Professional Committee of the China Vibration Engineering Society, and a committee member of the Equipment Intelligent Operation and Maintenance Branch of the China Mechanical Engineering Society.

Table of Contents

1 Abstract

2 Introduction

3 Basic Principles of Edge Computing

3.1 Edge Computing Paradigm

3.2 Edge Computing Software and Hardware Platforms

3.3 Comments

4 Implementation of IoT Edge Computing in Machine Signal Processing and Fault Diagnosis

4.1 Signal Processing-Based Machine Fault Diagnosis Process

4.2 Application of Edge Computing in Machine Signal Acquisition and Wireless Transmission

4.2.1 Sensor Signal Acquisition

4.2.2 Wireless Signal Transmission

4.2.3 Comments

4.3 Application of Edge Computing in Machine Signal Preprocessing

4.3.1 Signal Filtering and Enhancement

4.3.2 Signal Compression

4.3.3 Comments

4.4 Application of Edge Computing in Feature Extraction

4.4.1 Simple Statistical Features and Fault Indicators

4.4.2 Complex Feature Extraction

4.4.3 Comments

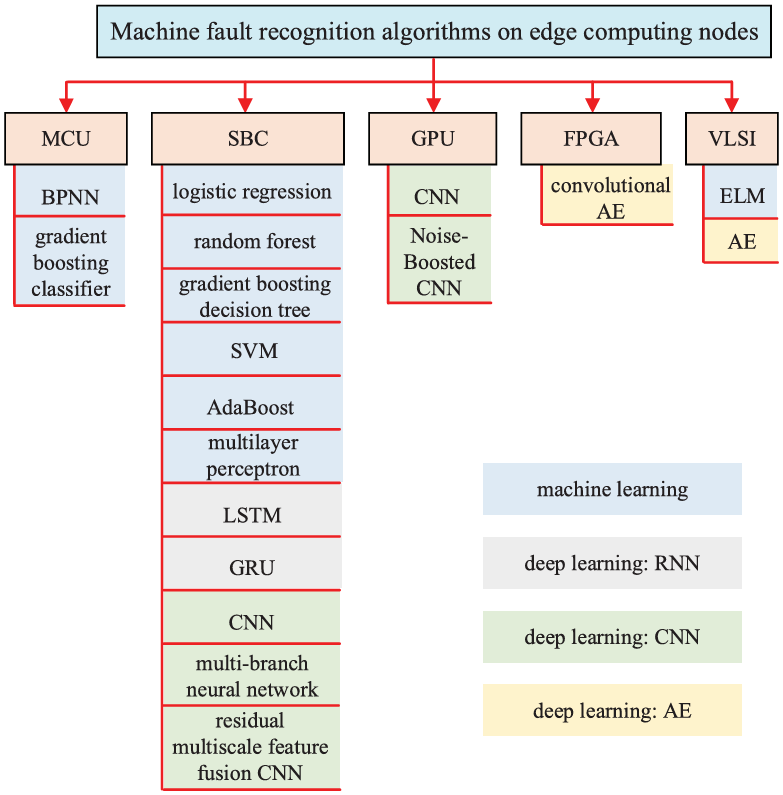

4.5 Application of Edge Computing in Mechanical Fault Identification

4.5.1 Edge Computing Based on Classical Machine Learning Methods

4.5.2 Edge Computing Based on Deep Learning Methods

4.5.3 Comments

(The above marked chapters are the content of this article)

5 Case Studies and Tutorials

6 Discussion and Research Outlook

7 Conclusion

1 Abstract

Edge computing is an emerging paradigm. It offloads computational and analytical workloads to Internet of Things (IoT) edge devices to improve computational efficiency, reduce channel occupancy for signal transmission, and lower storage and computational workloads on cloud servers. These unique advantages make it a promising tool for IoT-based machine signal processing and fault diagnosis. This paper reviews edge computing methods in machine fault diagnosis based on signal processing from the perspectives of concepts, cutting-edge methods, case studies, and research outlook. In particular, it provides a detailed review and discussion of lightweight algorithm design and application-specific hardware platforms for edge computing in the typical fault diagnosis process, including signal acquisition, signal preprocessing, feature extraction, and pattern recognition. This review offers an in-depth understanding of edge computing frameworks, methods, and applications to meet the needs of real-time signal processing, low-latency fault diagnosis, and efficient predictive maintenance in IoT-based machines.

Keywords: Edge computing, Internet of Things, low-latency fault diagnosis, machines, real-time signal processing

2 Introduction

Condition monitoring and fault diagnosis are essential for ensuring the normal operation of machines and preventing risks and disasters. The operating status of the machine is reflected in the signals collected by sensors installed on the machine. Therefore, signal processing methods have proven to be one of the most effective means for machine fault diagnosis [1]. Typical signals used for machine condition monitoring include two categories, namely low-frequency condition signals and high-frequency waveform signals. The former includes signals such as the temperature and composition of lubricating oil, which generally reflect the overall health status of the machine, with refresh cycles ranging from several seconds to several hours. Waveform signals include vibration signals, sound signals, acoustic emission signals, motor voltage, current, and magnetic signals [2]. These signals can reflect the direct and instantaneous state of machine components, with a sampling frequency range from kHz to MHz.

With the continuous development of sensor technology, the cost of individual sensors continues to decrease, and more sensors are being installed in machines or devices. For example, thousands of sensors are installed on a high-speed train with eight carriages to monitor the train’s operating status in real time [3]. A wind farm has thousands of wind turbines, each equipped with multiple accelerometers, wind speed and direction sensors, and voltage and current sensors [4]. Industrial motors and drives in modern steel mills are also equipped with thousands of sensors to ensure the safety of production lines. These sensors generate massive amounts of “big data” during operation.

In this context, cloud computing is an appropriate paradigm for processing massive amounts of big data. Since Google CEO Eric Schmidt first proposed the concept of cloud computing in 2006, cloud computing has rapidly developed over the past decade [5]. Cloud computing provides fast and secure storage and computing services over the network by establishing large clusters of high-performance computers, allowing every network terminal to use the powerful computing resources and data centers on cloud servers [6]. Cloud computing has also been widely studied and applied in machine condition monitoring and fault diagnosis. Machine status data is directly uploaded to cloud servers via wired or wireless networks, where signal processing, feature extraction, fault identification, and prediction are performed on servers with sufficient storage and computing resources. In addition, fault diagnosis models and algorithms can be iteratively updated using newly collected data to further improve diagnostic accuracy.

Cloud computing has obvious advantages in machine condition monitoring and intelligent diagnosis. However, uploading large volumes of data consumes significant network bandwidth and continuously depletes cloud server storage and computing resources. Moreover, some raw sensor data are corrupted by noise and carry limited useful information. In addition, data uploading, signal processing, feature extraction, and fusion inevitably introduce delays that reduce the timeliness of fault detection and identification. As a result, cloud computing cannot meet the extremely fast response requirements of machines that need real-time diagnosis and dynamic control, and effectively processing massive sensor data to achieve real-time intelligent diagnosis remains a challenge. Edge computing is an emerging computing paradigm that has developed rapidly in recent years. By processing data directly on distributed systems close to the sensors, it can effectively address the shortcomings of cloud computing in real-time processing of massive sensor data. Edge computing has inherent advantages in machine fault diagnosis due to its low latency, large bandwidth, and massive connectivity. Recently, edge computing has also been applied to machine signal processing, feature extraction, and fusion, significantly improving the performance of real-time fault diagnosis.

Research on edge computing in machine signal processing and fault diagnosis has achieved encouraging results. Although machine fault diagnosis algorithms have been widely studied, only a small portion of this work addresses hardware implementation. One of the main reasons is that edge computing relies heavily on both hardware and algorithms, and implementing fault diagnosis methods on specific processors remains difficult [7]. Therefore, the purpose of this paper is to review the latest progress of edge computing methods in the field of machine signal processing and fault diagnosis, which will help readers better understand its research history, progress, and trends. Specifically, this paper focuses on explaining and discussing how to choose appropriate hardware platforms for implementing specific algorithms.

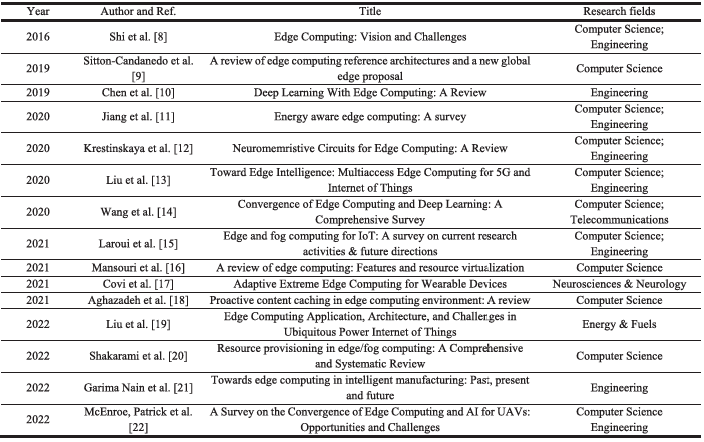

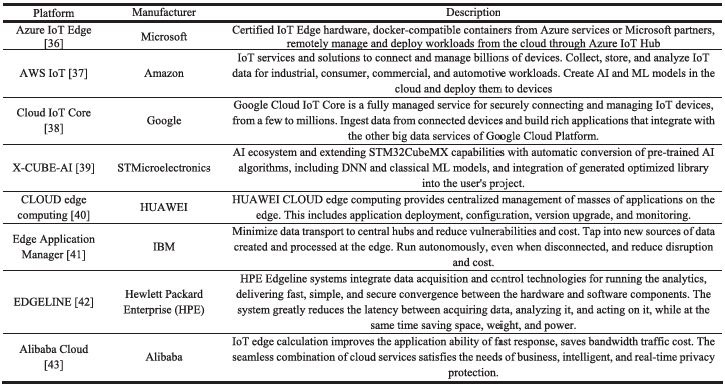

Edge computing has attracted wide attention from researchers, and many comprehensive and in-depth review articles have been published in recent years, as shown in Table 1. It can be seen that the themes of these reviews mainly focus on fields such as computer science, engineering, telecommunications, neuroscience, and energy and fuels. Compared to other related fields, the research focus on machine fault diagnosis is somewhat different. For example, many types of signals have been used for machine fault diagnosis, so the selection of edge computing nodes should consider the compatibility of sensor peripheral interfaces. In addition, machine signals are often subject to noise interference, so the design of edge computing systems should consider how to implement various one-dimensional signal denoising algorithms. Furthermore, IoT nodes used for machine condition monitoring may be installed in harsh environments without power supply, so consideration should be given to how to reduce the power consumption of signal transmission and computation.

Table 1 Recent Reviews Related to Edge Computing

Therefore, a comprehensive review of edge computing in the field of machine signal processing and fault diagnosis is both meaningful and urgent. So far, no such review has been reported. This review focuses on the key issues that significantly affect the accuracy and efficiency of edge computing systems, and aims to help researchers and engineers design algorithms and hardware for real-time, low-latency machine signal processing and fault diagnosis systems. In particular, the case studies and source code provide intuitive tutorials on how to implement algorithms on hardware platforms.

3 Basic Principles of Edge Computing

This section introduces the concept, history, and paradigm of edge computing, and summarizes the hardware and software platforms of edge computing to facilitate a comprehensive understanding of this topic.

3.1 Edge Computing Paradigm

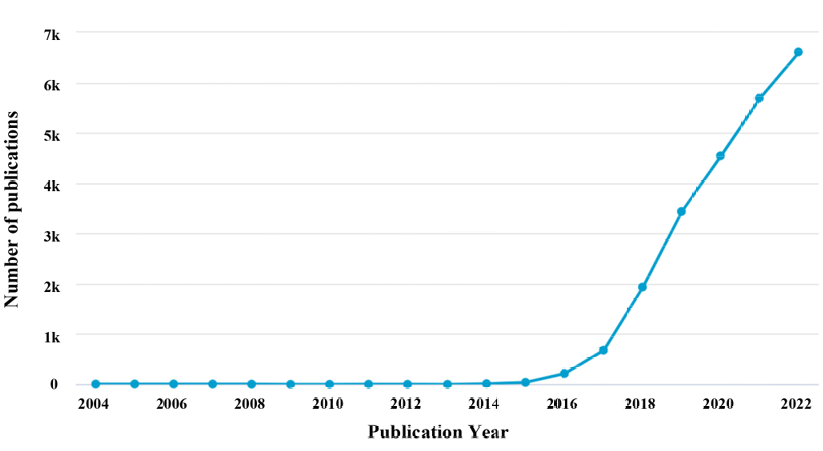

The concept of edge computing was first proposed by Pang and Tan [23]. However, due to the limited computing power of embedded systems at that time, this technology did not receive much attention. The number of publications related to edge computing in the Scopus database is shown in Figure 2. The search strategy was TITLE-ABS-KEY (“edge computing”), with a publication year range from 2004 to 2022. It can be seen that the number of edge computing publications has increased rapidly since 2015. The rise of edge computing is attributed to two factors: the rapid increase in the storage space and computing power of edge computing nodes, and the decrease in processor price per TOPS/TFLOPS/MIPS.

Figure 2 Number of Publications Related to “Edge Computing” in the Scopus Database (2004-2022)

In 2016, the Edge Computing Consortium (ECC) was jointly established by Huawei, Intel, ARM, iSoftStone, the China Academy of Information and Communications Technology, and the Chinese Academy of Sciences. By the end of 2021, the number of consortium members had reached 320 [24]. In 2016, Shi published a review article titled “Edge Computing: Vision and Challenges” [8], which attracted wide attention and has been cited over 5600 times in the Google Scholar database (as of January 2023).

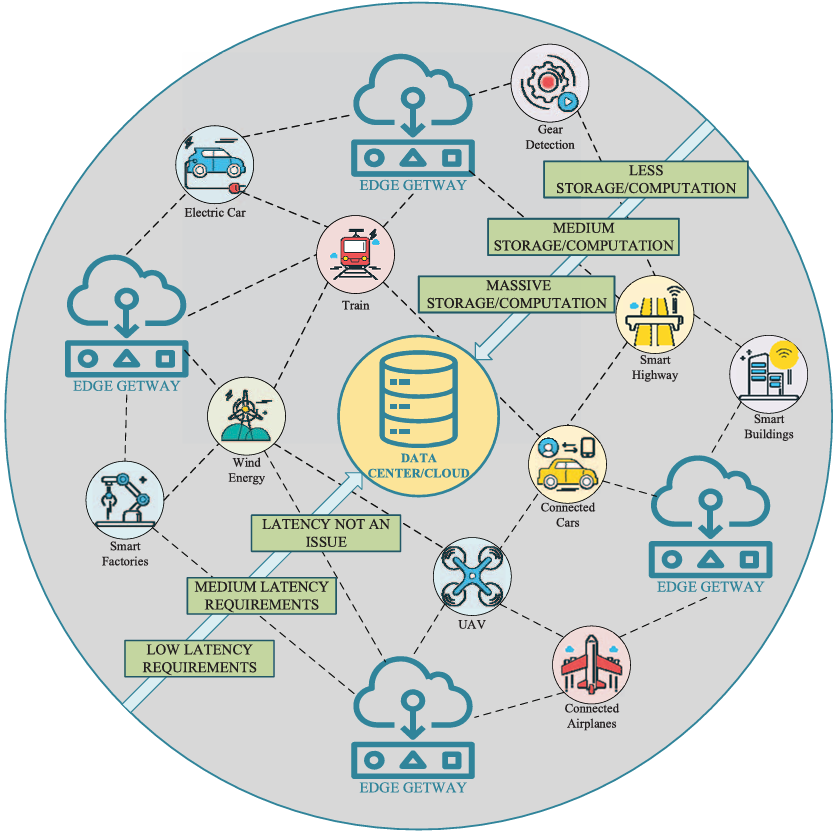

A schematic diagram of the edge computing framework is shown in Figure 3. Centralized data centers are surrounded by distributed edge computing nodes. One of the most notable features of edge computing is that sensor data is processed directly on various edge nodes, such as handheld instruments, IoT nodes, smartphones, smartwatches, and electric vehicle processors [25]. Most raw data are processed at the edge nodes, and only filtered data and extracted features are transmitted to cloud servers for storage, further analysis, and mining. This process reduces the consumption of transmission bandwidth and computing resources, thereby reducing computing latency and improving the response speed of fault diagnosis [26]. Storage space, computing power, and latency increase toward the center of the diagram, that is, as the diameter of the circle shrinks from the edge nodes to the centralized data center, as indicated by the two arrows and six green text boxes in Figure 3.

Figure 3 Edge Computing Framework

Cloud computing and edge computing are essentially complementary and can work together to balance computing and storage resources. Generally, raw data can be cleaned, filtered, compressed, and formatted at edge computing nodes close to the sensor. Only filtered, denoised, and processed data and features are transmitted to cloud servers for data mining, feature fusion, model training, storage, and backup [27]. On the other hand, parameters, models, and control instructions are regularly updated and deployed to distributed edge nodes via the network. This collaborative approach facilitates information sharing through connections between sensors, gateways, and servers, thereby improving signal processing efficiency, increasing fault diagnosis accuracy, and reducing the overall power consumption of the cloud-edge collaborative system [28].
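To make the bandwidth argument concrete, the following minimal sketch compares the upload rate of a raw waveform stream with that of a small feature vector computed at the edge. All numbers (sampling rate, resolution, channel count, feature count) and both function names are illustrative assumptions, not values or code from the reviewed paper.

```python
def raw_upload_rate(fs_hz: float, bits_per_sample: int, channels: int) -> float:
    """Bytes per second needed to stream raw samples to the cloud."""
    return fs_hz * bits_per_sample / 8 * channels


def feature_upload_rate(n_features: int, bytes_per_feature: int, frames_per_s: float) -> float:
    """Bytes per second needed when only extracted features are uploaded."""
    return n_features * bytes_per_feature * frames_per_s


# Hypothetical node: 3-axis accelerometer, 25.6 kHz, 16-bit samples.
raw = raw_upload_rate(fs_hz=25_600, bits_per_sample=16, channels=3)
# Hypothetical edge output: 10 float32 features per frame, one frame per second.
feat = feature_upload_rate(n_features=10, bytes_per_feature=4, frames_per_s=1)

reduction = raw / feat
print(f"raw: {raw:.0f} B/s, features: {feat:.0f} B/s, reduction: {reduction:.0f}x")
```

Under these assumed settings the raw stream needs 153600 B/s while the feature stream needs 40 B/s. The actual saving depends on which features are kept and how often they are reported.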

The advantages of edge computing for machine signal processing and fault diagnosis are summarized as follows.

Latency: The development of machine faults can be sudden and rapid. Edge computing reduces the time delay from the occurrence of a fault to fault diagnosis by processing sensor data in real time at edge nodes.

Resource Consumption: More and more sensors are installed on monitored machines. If all the raw signals are transmitted to the cloud, it will deplete the storage and computing resources of the cloud server, further affecting the efficiency of fault diagnosis. Edge computing technology avoids the data explosion problem through distributed processing.

Privacy: Sensor data transmitted to the cloud may raise privacy concerns. Edge computing addresses this concern by processing sensor data at the edge nodes, so that raw signals do not need to be transmitted.

Diagnosis and Control: By utilizing edge computing technology, fault diagnosis and machine control algorithms can be implemented on the same processor, which is beneficial for anomaly detection and real-time control systems.

Scalability: Since each edge computing node has computing and communication capabilities, networking can be flexibly configured. The scale of the sensor network can be adjusted based on the number of machines that need to be monitored.

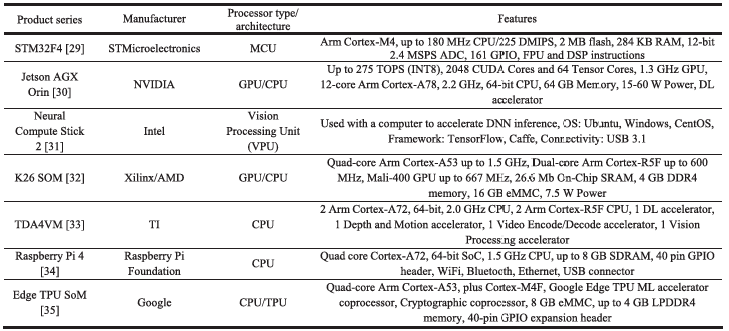

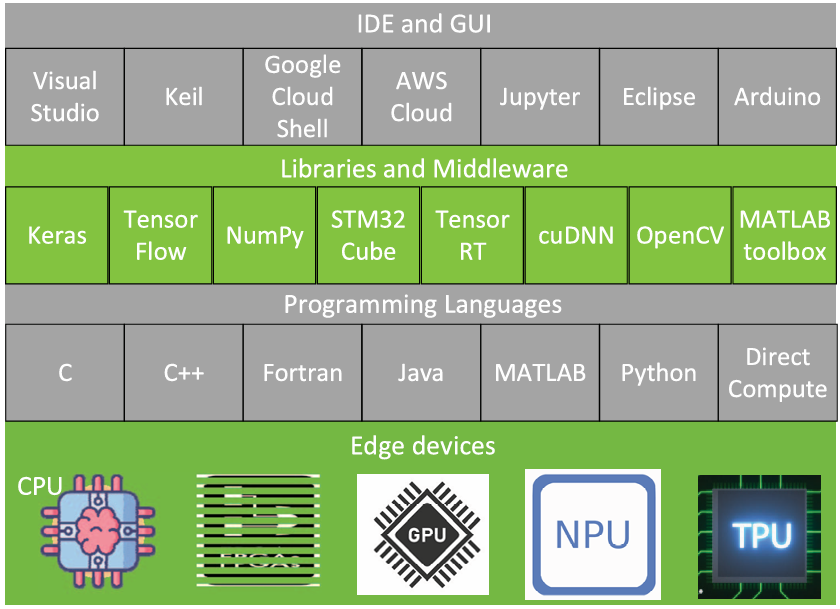

3.2 Edge Computing Software and Hardware Platforms

Machine signal processing and fault diagnosis algorithms can generally be programmed using various programming languages (such as C/C++, Python, MATLAB, and LabVIEW) and then executed on x86 or ARM architecture computers/servers with powerful processors and sufficient memory [44]. However, the design of edge computing nodes is constrained by power consumption and size. Therefore, before deploying existing algorithms to edge nodes, modifications and lightweight designs are required [45]. The following introduces the hardware and software platforms for algorithm implementation and deployment. Table 2 lists some processors dedicated to edge computing. Only some typical product series are listed here; in fact, manufacturers can provide a richer product portfolio for selection. For example, in the second row of Table 2, in addition to the F4 series, STM32 also has F0, F1, F3, G0, G4, WB, WL, F7, and H7 series, suitable for different scenarios such as ultra-low power consumption, high-performance computing, wireless communication, and automotive applications.

From Table 2, it can be seen that manufacturers provide a wide range of processors to implement edge computing algorithms with different computing requirements. For example, low-cost, low-power STM32 microcontroller units (MCUs) with digital signal processing (DSP) instructions can execute typical algorithms such as convolution operations and fast Fourier transforms (FFT), making them suitable for implementing some fault diagnosis methods based on classical vibration signal processing. Jetson GPUs with Tensor cores and deep learning (DL) accelerators have powerful parallel computing capabilities and are suitable for implementing deep neural network (DNN) models in fault diagnosis applications with stringent timing requirements. Raspberry Pi offers a convenient and cost-effective way to migrate algorithms from servers to edge nodes.
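As an illustration of the kind of FFT-based analysis mentioned above, the following minimal sketch locates the dominant spectral line of a signal with NumPy, which would run unchanged on a Python-capable single-board computer. The test signal, sampling rate, and function name are assumptions chosen for illustration; an MCU implementation would normally rely on a fixed-size FFT routine from a DSP library instead.

```python
import numpy as np


def dominant_frequency(signal: np.ndarray, fs_hz: float) -> float:
    """Return the frequency (Hz) of the largest non-DC spectral peak."""
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs_hz)
    spectrum[0] = 0.0  # ignore the DC component
    return float(freqs[np.argmax(spectrum)])


# Synthetic example: a 50 Hz component plus a weaker 120 Hz component,
# sampled at 1 kHz for 1 s (frequency resolution of 1 Hz).
fs = 1000.0
t = np.arange(1000) / fs
x = np.sin(2 * np.pi * 50.0 * t) + 0.2 * np.sin(2 * np.pi * 120.0 * t)
peak_hz = dominant_frequency(x, fs)
print(peak_hz)
```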

Table 2 Hardware for Edge Computing

Table 3 Development Platforms for Edge Computing

Figure 4 Architecture for Development and Deployment of Edge Computing Algorithms

3.3 Comments

It can be seen that edge computing has rapidly developed with the increasing investment from both industry and academia in recent years. One of the main difficulties of machine fault diagnosis based on edge computing is the implementation of algorithms on hardware devices. About twenty years ago, coding for embedded systems was complex and required extensive experience, and engineers needed to be familiar with the operations of MCU registers. About ten years ago, embedded system manufacturers began to provide functional libraries such as standard peripheral drivers, significantly improving development efficiency. In recent years, manufacturers have provided more user-friendly IDE platforms where engineers can configure embedded system peripherals using GUIs. With the continuous improvement of the software and hardware of embedded systems, the implementation of machine signal processing and fault diagnosis algorithms on edge nodes will become more convenient and efficient.

4 Implementation of IoT Edge Computing in Machine Signal Processing and Fault Diagnosis

This section reviews edge computing methods according to the four consecutive steps of typical signal processing-based fault diagnosis, namely signal acquisition, signal preprocessing, feature extraction, and pattern recognition.

4.1 Signal Processing-Based Machine Fault Diagnosis Process

4.2 Application of Edge Computing in Machine Signal Acquisition and Wireless Transmission

This section reviews edge computing methods in machine signal acquisition and wireless transmission. With the rapid development of low-power chips and high-energy-density batteries, more and more machines use IoT nodes for condition monitoring [58], [59]. IoT nodes can be installed in a distributed manner and are easy to replace or reposition because they need no complex power or signal cabling.

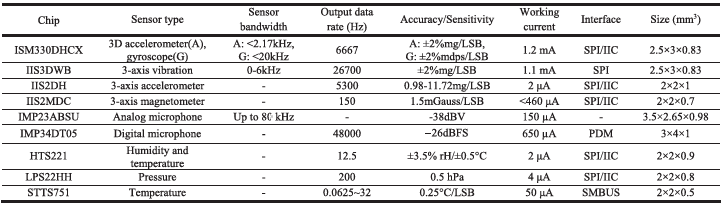

4.2.1 Sensor Signal Acquisition

Signal acquisition is the first step in machine signal processing and fault diagnosis. In traditional centralized monitoring systems, data acquisition systems are connected to sensors via cables, and the size and power consumption of sensors do not significantly affect system performance. However, for IoT nodes with edge computing capabilities, sensor power consumption is a key factor affecting battery life. Fortunately, many low-power microelectromechanical system (MEMS) sensors have been developed and released in recent years. Sensors based on system-on-chip (SoC) technology integrate analog conditioning circuits and analog-to-digital converters (ADCs), and can communicate directly with MCUs via digital interfaces such as universal synchronous-asynchronous receiver-transmitter (USART), inter-integrated circuit (IIC), and serial peripheral interface (SPI). This configuration significantly reduces overall power consumption, improves the signal-to-noise ratio, and enables the integration of multiple sensors into a small printed circuit board (PCB).

Table 4 introduces a wireless IoT node (STEVAL-STWINKT1B) for machine condition monitoring and predictive maintenance applications, equipped with various sensors. It can be seen that MEMS sensors can measure commonly monitored signals, including humidity, pressure, temperature, vibration, magnetic field, and sound signals. The size and operating current of the sensors can be reduced to the millimeter scale and the μA/mA range, respectively. These sensors make it possible to integrate efficient edge computing and machine fault diagnosis into low-power IoT nodes.

Table 4 Sensors in the STEVAL-STWINKT1B IoT Node

Sampling frequency and signal length are also important factors affecting the battery life of edge nodes. Longer signals increase sampling time, storage space, computational cost, and power consumption [64]. According to the Nyquist sampling theorem, if the bandwidth of the sensor signal extends from DC to a maximum frequency, the signal should be sampled at a rate of at least twice that maximum frequency. In some specific scenarios, undersampling methods can be used to reduce the sampling frequency and signal length. For example, reference [65] proposed an undersampling vibration signal analysis method for the condition monitoring and fault diagnosis of motor bearings. In this reference, the resonance band of the vibration signal is first determined by spectral kurtosis, and a programmable filter is then configured in band-pass mode according to the resonance band. The minimum sampling frequency is calculated and used for sampling the vibration signal. The results show that this method can reduce the sampling frequency and signal length to about 1/8 of those required by traditional methods. Reference [66] studied the optimal sampling rate of sensors in IoT applications and discussed the trade-off between sampling rate and sensing performance. Reference [67] studied the impact of sampling frequency on the performance of recurrent neural networks (RNNs) in real-time fall detection devices in IoT. Therefore, to reduce resource consumption in edge computing, sensor signal acquisition parameters, including sensor type, sampling frequency, and signal length, should be considered and appropriately selected.
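The idea of undersampling a band-limited resonance band can be sketched with the classical bandpass sampling condition. The code below is a minimal illustration under that textbook condition and is not the specific procedure of reference [65]; the function name and the example band edges are hypothetical, and a practical design would add a guard band around the filter passband.

```python
import math


def min_bandpass_fs(f_low_hz: float, f_high_hz: float) -> float:
    """Lowest alias-free uniform sampling rate for a band [f_low, f_high].

    Uses the classical bandpass sampling condition
        2*f_high/n <= fs <= 2*f_low/(n-1),  1 <= n <= floor(f_high/B),
    where B = f_high - f_low. The smallest fs is obtained with the largest n.
    """
    if not 0 <= f_low_hz < f_high_hz:
        raise ValueError("require 0 <= f_low < f_high")
    bandwidth = f_high_hz - f_low_hz
    n_max = math.floor(f_high_hz / bandwidth)
    return 2.0 * f_high_hz / n_max


# Hypothetical resonance band of 20-25 kHz selected by a band-pass filter.
fs_bandpass = min_bandpass_fs(20_000.0, 25_000.0)  # undersampling rate
fs_baseband = 2.0 * 25_000.0                       # conventional Nyquist rate
print(fs_bandpass, fs_baseband / fs_bandpass)
```

For this assumed band the conventional rate is 50 kHz, while the bandpass condition permits 10 kHz, so both the sampling frequency and the number of samples per unit time drop by a factor of five.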

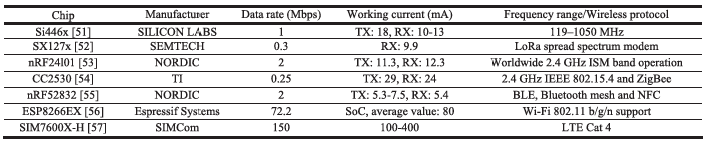

4.2.2 Wireless Signal Transmission

With edge computing, most machine signals can be analyzed at the edge nodes, and only some key signals and processing results need to be transmitted to the server for storage and further mining. Many types of wireless communication protocols have been applied to transmit machine signals. For example, reference [65] used a pair of transceivers to transmit vibration signals collected by IoT nodes; reference [68] used an MCU with an embedded Bluetooth Low Energy (BLE) 5.0 radio (STM32WB55, STMicroelectronics) to collect and transmit motor vibration signals; reference [69] used wireless transmitters to transmit mixed vibration and magnetic signals; reference [70] proposed an intelligent sensor system for monitoring and predictive maintenance of centrifugal pumps, in which the designed wireless sensor communicates with the server via the narrowband IoT (NB-IoT) protocol; reference [71] proposed a method for data-driven condition monitoring of mobile mining machinery during non-stationary operation using wireless acceleration sensor modules, with signals transmitted over BLE.

Table 5 lists some commonly used wireless transceivers for IoT and edge nodes. These transceivers are based on different wireless protocols and differ in data rate, communication distance, operating current, and price. For example, LTE modules have the highest data rates, but they also draw the most current. In common applications, the operating current is generally limited to the mA level. The chips can easily switch between working mode and standby/sleep/shutdown modes, in which the current can be as low as the nA/μA level. In addition, the antennas built into the chips usually have low transmit power. If the signal needs to be transmitted over long distances, a power amplifier circuit should be designed according to the chip specifications.
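The effect of switching between working and sleep modes on battery life can be estimated with a simple duty-cycle calculation. The sketch below is illustrative only: the currents, timing, and battery capacity are assumed values rather than figures from Table 5, and it ignores battery self-discharge and the current drawn by the sensors and processor.

```python
def average_current_ma(active_ma: float, active_s: float,
                       sleep_ma: float, period_s: float) -> float:
    """Time-weighted average current over one wake/sleep cycle."""
    if not 0 <= active_s <= period_s:
        raise ValueError("active time must lie within the period")
    sleep_s = period_s - active_s
    return (active_ma * active_s + sleep_ma * sleep_s) / period_s


def battery_life_hours(capacity_mah: float, avg_current_ma: float) -> float:
    """Idealized battery life for a given average current."""
    return capacity_mah / avg_current_ma


# Hypothetical transceiver: 10 mA while transmitting for 1 s every 100 s,
# 2 uA (0.002 mA) in sleep mode, powered by a 1000 mAh battery.
avg_ma = average_current_ma(active_ma=10.0, active_s=1.0,
                            sleep_ma=0.002, period_s=100.0)
life_h = battery_life_hours(1000.0, avg_ma)
print(f"average current: {avg_ma:.5f} mA, battery life: {life_h:.0f} h")
```

With these assumed values the average current is dominated by the short active window, which is why reducing the amount of transmitted data at the edge translates directly into longer battery life.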

Table 5 Wireless Transceivers for IoT and Edge Nodes

4.2.3 Comments

4.3 Application of Edge Computing in Machine Signal Preprocessing

In addition to traditional parametric codecs based on transformations and predictions, some neural network-based audio codecs have recently been proposed and have shown good results. For example, reference [87] proposed a WaveNet generative speech model to generate high-quality speech from the bitstream of a standard parametric codec. Reference [88] introduced a prediction-variance regularization algorithm in the generative model to reduce sensitivity to outliers, significantly improving the performance of speech codecs. Reference [89] designed a neural audio codec called SoundStream, which compresses speech, music, and general audio at bitrates normally targeted by speech-tailored codecs. The architecture of this model includes a fully convolutional encoder/decoder network and a residual vector quantizer, trained jointly in an end-to-end manner. Reference [90] designed a neural audio codec called EnCodec, which adopts a streaming encoder-decoder architecture with a quantized latent space and is trained in an end-to-end manner.

In addition to traditional signal compression methods based on Nyquist’s theorem, compressed sensing is an emerging technology dedicated to directly obtaining compressed data during the acquisition process [91]. This technology has been studied in fields such as imaging, biomedicine, audio and video processing, and communications [92]. However, machine signals are often subject to severe noise interference and have significant randomness, and sometimes critical samples may be lost during the sampling process. Therefore, before deploying compressed sensing algorithms to edge devices, noise and sample loss issues should be considered and properly addressed. Some algorithms have been proven effective in recovering signals that are severely corrupted by noise and/or have missing samples. For example, reference [93] proposed a sparse signal reconstruction method to reconstruct missing samples. In this method, the missing samples are treated as minimization variables and the available samples as fixed values, and a gradient-based algorithm is used to reconstruct the unavailable samples in the time domain. Experimental results show that severely damaged images can be effectively recovered using this method. Beyond image recovery, reference [94] proposed a statistical analysis-based method that effectively detects signal components when data samples are missing. This method was validated on sinusoidal signals with different component frequencies and different numbers of available samples. The combination of compressed sensing and edge computing is very promising for analyzing machine condition signals, as it can significantly reduce data length and extend the battery life of IoT nodes.

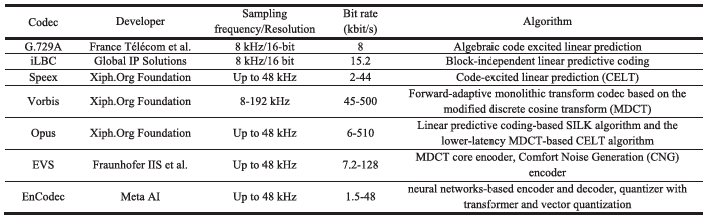

Table 6 lists some codecs designed for waveform signal compression that can be implemented on edge nodes. These codecs provide a wide range of options in terms of sampling frequency, bandwidth, bitrate, latency, and compression quality. However, codecs have different computational complexities and hardware requirements, which should be considered in edge computing applications [95].

Table 6 Codecs for Waveform Signal Compression at IoT Edge Nodes

4.3.3 Comments

4.4 Application of Edge Computing in Feature Extraction

After signal preprocessing, features are extracted for machine fault detection and identification. In centralized fault diagnosis systems, feature extraction algorithms are executed on servers with sufficient storage and computing resources. Before algorithms are deployed to edge nodes, the available random access memory (RAM), the data types, and the number of multiply-accumulate (MAC) operations should be considered, and the algorithms should be redesigned in a lightweight manner. This section reviews the implementation of edge computing-based feature extraction methods, organized by the computational complexity of the signal features.
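A rough resource budget of the kind described above can be worked out before any code is ported to an edge node. The following sketch estimates buffer memory and operation counts for two common building blocks; the function names, frame length, filter length, and data types are assumptions for illustration, and the FFT count is the usual radix-2 approximation rather than an exact figure for any particular library.

```python
BYTES_PER_SAMPLE = {"int16": 2, "float32": 4, "float64": 8}


def buffer_bytes(n_samples: int, dtype: str) -> int:
    """RAM needed to hold one frame of samples in the given data type."""
    return n_samples * BYTES_PER_SAMPLE[dtype]


def fir_macs(n_samples: int, n_taps: int) -> int:
    """Multiply-accumulate operations for direct-form FIR filtering of a frame."""
    return n_samples * n_taps


def fft_complex_mults(n_points: int) -> int:
    """Approximate complex multiplications of a radix-2 FFT: (N/2) * log2(N)."""
    if n_points < 2 or n_points & (n_points - 1):
        raise ValueError("n_points must be a power of two >= 2")
    return (n_points // 2) * (n_points.bit_length() - 1)


# Hypothetical frame of 4096 samples processed by a 64-tap FIR filter and an FFT.
frame = 4096
print(buffer_bytes(frame, "float32"), buffer_bytes(frame, "int16"))
print(fir_macs(frame, 64), fft_complex_mults(frame))
```

In this assumed case, storing the frame as int16 instead of float32 halves the buffer from 16384 to 8192 bytes, which is the kind of data type choice that decides whether an algorithm fits in MCU RAM.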

4.4.1 Simple Statistical Features and Fault Indicators

When machines operate under steady conditions, statistical features and fault indicators can be used to monitor the health status of the machines [96], [97]. The construction of fault indicators should consider the characteristics of the signals. Some classic time-domain and frequency-domain features have low computational costs, making them suitable for implementation on edge computing nodes for real-time machine state recognition.
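The low cost of classic time-domain features is easy to see in code. The sketch below computes four commonly used indicators (RMS, peak-to-peak, crest factor, and kurtosis) with NumPy; the selection of features and the function name are illustrative assumptions rather than the feature set of any cited reference. Each feature needs only a single pass over the frame.

```python
import numpy as np


def time_domain_features(x) -> dict:
    """Compute a few classic time-domain indicators for one signal frame.

    Assumes a non-constant frame; a constant frame would give zero variance
    (undefined kurtosis) and possibly zero RMS (undefined crest factor).
    """
    x = np.asarray(x, dtype=np.float64)
    centered = x - x.mean()
    variance = np.mean(centered ** 2)
    rms = np.sqrt(np.mean(x ** 2))
    peak = np.max(np.abs(x))
    return {
        "rms": float(rms),
        "peak_to_peak": float(x.max() - x.min()),
        "crest_factor": float(peak / rms),
        # Non-excess kurtosis: about 3 for Gaussian noise, 1.5 for a pure sine.
        "kurtosis": float(np.mean(centered ** 4) / variance ** 2),
    }


features = time_domain_features([1.0, -1.0, 1.0, -1.0])
print(features)
```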

For example, reference [98] used spectral correlation and short-time root mean square (RMS) algorithms to accelerate envelope analysis and reduce the power consumption of wireless sensor networks (WSNs). On the TM4C1233H6PM edge computing processor, a ping-pong algorithm and direct memory access (DMA) were used to accelerate data acquisition, and memory usage was optimized through data type conversion and downsampling. Experimental results show that, compared with the traditional Hilbert transform method, spectral correlation and short-time RMS increase the computation speed by a factor of two and by more than a factor of five, respectively.

To achieve online fault diagnosis of motor bearings under variable speed conditions, reference [99] proposed a hardware-based order tracking algorithm and deployed it on two MCUs. One MCU obtains the angular increment from the motor current signal, using a computationally inexpensive arcsine function to calculate the angle of successive sampling points. The other MCU samples the sound signal at equal angles based on the trigger signal generated by the first MCU. Finally, the envelope order spectrum is calculated to identify the type of motor bearing fault.

Reference [102] extracts the MSAF-RATIO30 feature from the motor current signal for feature fusion and fault diagnosis. These features are based on the differences in normalized spectra, with a sampling frequency of 20.096 kHz and a signal duration of 5 s. The main computational load is the FFT used to calculate the spectrum, which is implemented on a Raspberry Pi 3B single-board computer (SBC).
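
The short-time RMS idea mentioned for reference [98] can be sketched as follows. This is an illustrative version only: the non-overlapping window, the function name, and the test tone are assumptions, and the actual implementation in [98] may differ. The point is that a coarse envelope can be obtained with only multiplications, additions, and one square root per window, and that the output is already downsampled by the window length.

```python
import math

def short_time_rms(x, win):
    """Return one RMS value per non-overlapping window of `win` samples."""
    n_frames = len(x) // win
    envelope = []
    for i in range(n_frames):
        frame = x[i * win:(i + 1) * win]
        envelope.append(math.sqrt(sum(v * v for v in frame) / win))
    return envelope

# Example: a 50 Hz sine of amplitude 2 sampled at 1 kHz (assumed values).
fs = 1000
tone = [2.0 * math.sin(2 * math.pi * 50 * k / fs) for k in range(200)]
env = short_time_rms(tone, win=20)  # 20 samples = one full period
print(env)
```

For a constant-amplitude sine, each window that spans whole periods returns the amplitude divided by the square root of two, so a modulated fault signal would show up as a slowly varying sequence of such values.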

These references introduce the implementation of edge computing in the design of classic statistical features and fault indicators for machine fault diagnosis. However, when machines operate under complex conditions and environments, simple features and indicators may not perform well due to noise interference. Therefore, for machine fault diagnosis based on edge computing, more reliable features should be studied.

4.4.2 Complex Feature Extraction

In recent years, many advanced signal processing methods have been developed to extract complex and reliable features from machine signals. Generally, extracting high-dimensional features involves huge computational loads, which poses a challenge for deploying these algorithms on resource-limited edge nodes. Therefore, lightweight design of algorithms and selection of suitable edge computing platforms should be considered. The following reviews advanced signal processing methods used for machine fault diagnosis in edge computing nodes.

Reference [75] collected sound signals from bearings using microphones, demodulated the sound signals using energy operators, enhanced them with stochastic resonance filters, and finally calculated the envelope spectrum for fault diagnosis on Arm embedded systems. To reduce computational complexity and memory usage, the authors used a real-valued FFT in place of the original complex-valued FFT. Reference [106] designed and implemented a discrete wavelet transform (DWT)-based algorithm on a field-programmable gate array (FPGA) to detect broken rotor bar faults in motors by analyzing vibration signals under steady low-load conditions. Reference [76] first demodulates motor vibration or sound signals using a squaring and low-pass filtering envelope method, then enhances the demodulated signals using a stochastic resonance-based nonlinear filter, and finally uses the FFT to calculate the envelope spectrum of the processed signals for motor bearing fault diagnosis on STM32 embedded systems. This method optimizes its parameters using a computationally inexpensive one-dimensional forward-backward algorithm. Reference [109] proposed an online anomaly detection method for automated systems based on edge intelligence. The collected sensor signals are first processed with smoothing filters to identify the most sensitive parameters, which serve as inputs for the prediction model. The processed data is temporarily stored at the edge computing node, and the structured data is then sent to the cloud server.

With the development of high-performance edge computing processors, more complex and advanced algorithms are expected to be ported to edge nodes for real-time signal processing and fault diagnosis.
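
The demodulation chain described for references [75] and [76] (squaring, low-pass filtering, and a real-valued FFT) can be illustrated with the sketch below. It is not the authors' implementation: the stochastic resonance enhancement step is omitted, a moving average stands in for the low-pass filter, and the function name, filter length, and synthetic amplitude-modulated signal are assumptions. `np.fft.rfft` is used because it exploits the real-valued input and returns only the non-negative frequency bins.

```python
import numpy as np

def envelope_spectrum(x, fs, lp_len=8):
    """Squared-envelope spectrum of a real signal.

    x      : real-valued signal
    fs     : sampling frequency in Hz
    lp_len : moving-average length used as a simple low-pass filter (assumed)
    """
    squared = x * x                       # squaring shifts the modulation to baseband
    kernel = np.ones(lp_len) / lp_len
    env = np.convolve(squared, kernel, mode="same")
    env = env - env.mean()                # remove DC so it does not mask the peaks
    spec = np.abs(np.fft.rfft(env)) / len(env)
    freqs = np.fft.rfftfreq(len(env), d=1.0 / fs)
    return freqs, spec

# Synthetic example: 1 kHz carrier modulated at 100 Hz, sampled at 8 kHz.
fs = 8000
t = np.arange(fs) / fs
x = (1.0 + 0.8 * np.cos(2 * np.pi * 100 * t)) * np.sin(2 * np.pi * 1000 * t)

freqs, spec = envelope_spectrum(x, fs)
peak_hz = freqs[np.argmax(spec)]
print(f"dominant envelope frequency: {peak_hz:.1f} Hz")
```

In a bearing application, the modulation frequency in this example would play the role of a fault characteristic frequency that is read off the envelope spectrum.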

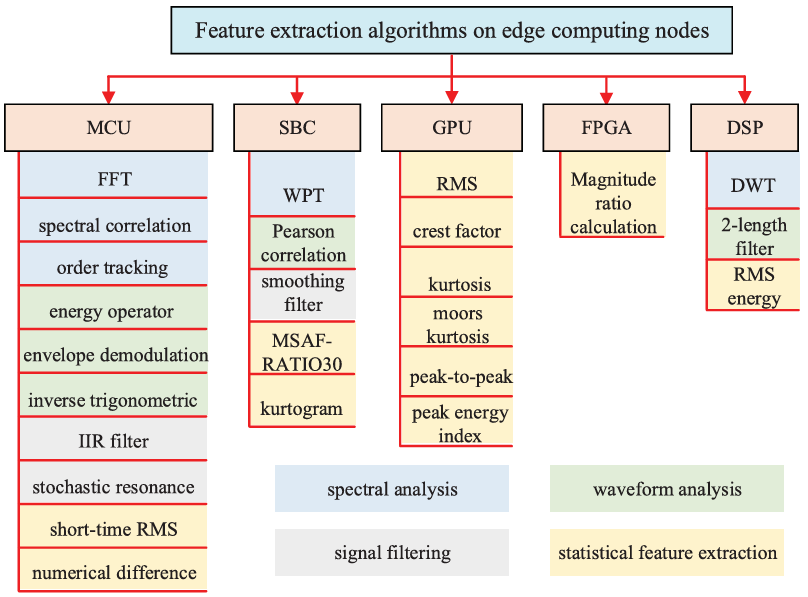

4.4.3 Comments

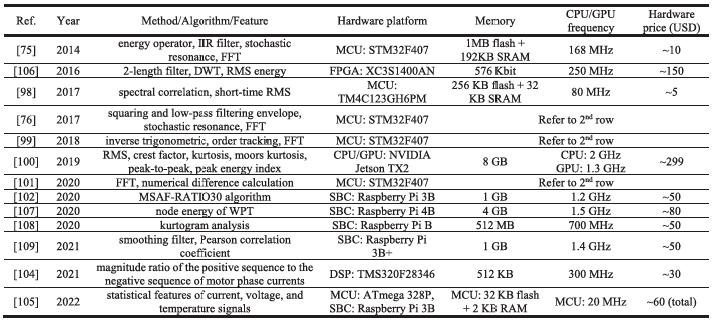

With the rapid increase in storage and computing power, most fault diagnosis researchers focus on optimizing models and algorithms to improve diagnostic accuracy, with little consideration given to hardware limitations. When deploying algorithms to edge nodes, it becomes challenging to choose suitable platforms from various commercial computing platforms. Therefore, Table 7 summarizes the implementation of feature extraction methods on specific edge hardware platforms. These methods are sorted by publication year. Some hardware platforms may have become outdated and lack further technical support, so researchers may prefer to choose newly released platforms when implementing specific algorithms. On the other hand, it can be seen that some classic algorithms with lower computational burdens, such as root mean square calculations, FFTs, and IIR filters, can be implemented on low-cost MCU platforms. In general, MCU processors can execute algorithms without an operating system and can conveniently switch between run, standby, sleep, and shutdown modes. These features provide flexibility for configuration, reduce power consumption, and improve the efficiency of edge computing systems.

Table 7 Summary of Feature Extraction Methods Implemented on Edge Computing Nodes

Figure 5 Classification of Feature Extraction Algorithms Implemented on Edge Computing Nodes

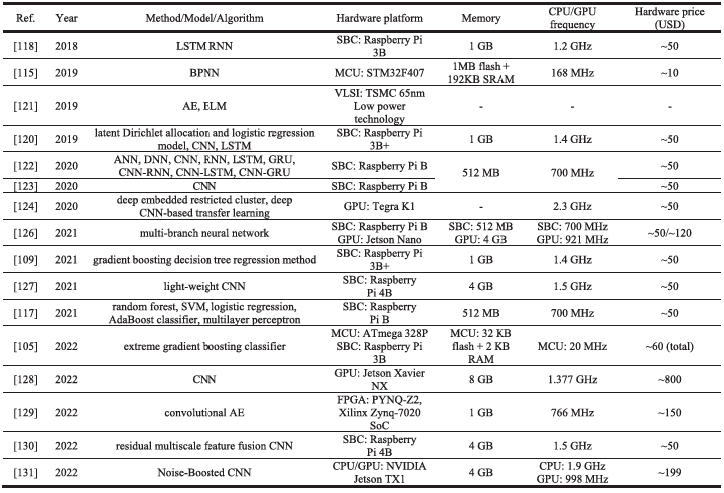

4.5 Application of Edge Computing in Mechanical Fault Identification
