Concept of Cybernetics

Executing a purposeful act, such as picking up a book from a table, is not a simple one-way process. Neurons in the brain send signals to the muscles, which act to achieve the intended goal. In the other direction, information about the current state of the system (for example, where the hand is relative to the book) is fed back to the central nervous system at each stage to initiate the next action. Here, a system means a combination of components that work together to achieve a specific goal. The process continues until the desired goal is achieved. This characteristic, shared by living organisms and by some artificial machines, is known as feedback.

This is the concept of cybernetics, a term introduced by Norbert Wiener for the study of control and communication systems. Cybernetics and feedback are closely intertwined: in a sense, any system that continuously generates and responds to feedback is using cybernetic methods, which enable it to adapt to unpredictable changes. Stafford Beer neatly connected the language of systems with that of cybernetics, stating:

“When I say any system is in control, I mean it is ultra-stable: capable of adapting smoothly to unpredicted changes. It has within its structure a proper deployment of requisite variety.”

If the current output of a system depends solely on its current input, it is termed a static system. When the current output also depends on past inputs, the system is dynamic. “In a dynamic system, if the system is not in equilibrium, the output changes over time.” Cybernetics allows dynamic systems to self-regulate and self-correct without any fixed final state or predetermined goal.
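The difference between static and dynamic systems, and the feedback loop in the reach-for-a-book example, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a model from the source: the "hand position" is a single number, the correction is proportional to the remaining error, and the names `static_system`, `DynamicSystem`, and `reach`, as well as the gain and tolerance values, are hypothetical.

```python
def static_system(u: float) -> float:
    """Static: the output depends only on the current input."""
    return 2.0 * u


class DynamicSystem:
    """Dynamic: the output depends on the history of inputs (here, their running sum)."""

    def __init__(self) -> None:
        self.state = 0.0  # accumulated effect of all past inputs

    def step(self, u: float) -> float:
        self.state += u
        return self.state


def reach(goal: float, position: float = 0.0, gain: float = 0.5,
          tolerance: float = 0.01, max_steps: int = 100) -> list:
    """Negative feedback: sense the remaining error each step and correct part of it."""
    trajectory = [position]
    for _ in range(max_steps):
        error = goal - position        # feedback: compare the current state with the goal
        if abs(error) < tolerance:     # close enough to the goal, so stop acting
            break
        position += gain * error       # act in proportion to the error
        trajectory.append(position)
    return trajectory


if __name__ == "__main__":
    print(static_system(3.0), static_system(3.0))  # same input, same output
    d = DynamicSystem()
    print(d.step(3.0), d.step(3.0))                # same input, different outputs
    print(reach(1.0))                              # positions converging on the goal
```

Calling `static_system(3.0)` twice gives the same result, while `DynamicSystem.step(3.0)` gives a different result each time because its output carries the history of earlier inputs. In `reach`, each action is chosen from the sensed error, which is the one-way-versus-feedback distinction from the book example.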

Cybernetics and Interactivity

Interactive art grew out of artists’ desire to share their formerly exclusive authority over the work, not only with the audience but also with machines.

Marcel Duchamp’s Rotary Glass Plates from the 1920s were one of the first steps toward interactive art. In 1938, Duchamp attempted to illuminate paintings with lamps that would turn on only when a visitor activated a light sensor.

Before the 1960s, works by several Dada artists also anticipated the tradition of interactive art. Max Ernst placed an axe next to his sculpture for visitors to use if they “disliked the item”. Some Dada painters also invited the audience to complete otherwise incomprehensible Dada paintings or drawings by filling in spaces intentionally left blank.

Dual Origins: Computer Science and Art

Interactive art has two distinct origins. One is the development of participatory art forms such as performance, Happenings, and site-specific works. The other is the technology-oriented approach of artist/computer scientists (e.g., Myron Krueger and David Rokeby) and video artists (e.g., Nam June Paik).

Many roots of interactive art can be traced back to the 1960s: the effort to dissolve the boundary between art and life; the dematerialization of the art object (an idea from conceptual art); process art (which treats the actions of making as the true artwork and views art as pure human expression); participatory art (in which the audience takes part directly in the creative process, becoming co-authors, editors, and observers of the work); the Fluxus movement (an international, interdisciplinary group of artists, composers, designers, and poets active in the 1960s and 1970s); Happenings (performances staged in streets, garages, and shops, in contrast to the general exclusivity of galleries and exhibitions); Situationism; art and technology; kinetic art; and cybernetic art. Together, these movements profoundly changed the relationship between artworks and their audiences.

In 1960, J. C. R. Licklider, drawing on his unusual background in engineering and behavioral science, proposed the concept of man-computer symbiosis: a cooperative interaction between humans and electronic machines. He distinguished it from systems in which machines are merely mechanical (or computational) extensions of humans, and from fully automated systems. The purpose of symbiosis, he argued, was to let humans and computers cooperate in making decisions and controlling complex situations without rigid dependence on predetermined programs.

In 1961, Allan Kaprow defined Happenings as a form of performance art taking place in streets, garages, and shops, rather than exclusively in galleries and exhibitions. Alongside Happenings, reactive kinetic art was also developing, replacing the instructions given by the organizers of Happenings with technical communication and pre-programmed participation.

From the mid-1950s, Nicolas Schöffer created a series of Cybernetic-Spatiodynamic (CYSP) sculptures that could respond to changes in sound, light intensity, color, and movement around them. The CYSP sculptures are prime examples of the cybernetic art movement. In 1965, Schöffer also presented plans for a cybernetic city at the Jewish Museum in New York, suggesting that programming could extend from individual sculptures to entire urban areas and proposing a dialogue between technology and the environment.

In 1966, a series of performances titled Nine Evenings: Theater and Engineering was held in New York. In their Variations pieces, John Cage and Merce Cunningham used sound systems that responded to sounds and to the dancers’ movements through photoelectric cells and microphones; Cage used a wireless system to switch speakers on and off as the photocells registered movement. In Variations VII, Cage also used contact microphones to capture normally inaudible bodily functions, such as heartbeats and sounds from the stomach and lungs.

In 1968, Robert Rauschenberg, a key figure in the art and technology movement, developed Soundings, a reactive visual environment in which untrained, unprepared visitors could participate. Soundings consisted of three panels of acrylic glass placed one behind the other. The front panel was mirrored, while the two smaller panels behind it displayed different silk-screened views of a chair. If visitors remained quiet, they saw only their own reflection. As soon as someone spoke or made a sound, however, lights switched on and revealed the different views of the chair.

Happenings imply a staged situation, restricted to a specific performance time, while reactive environments occur within exhibition contexts of galleries and museums.

In 1969, TV as a Creative Medium, the first group exhibition of video art, opened at the Howard Wise Gallery in New York. Nam June Paik’s Participation TV (I & II) was another of the reactive environment projects of this period. In Participation TV I, visitors used two microphones to produce sounds, which then appeared as wave patterns on the display. In Participation TV II, three color displays and three cameras were aligned to generate endless visual feedback. When visitors moved between the cameras and displays, their images appeared on the displays within this endless feedback loop.

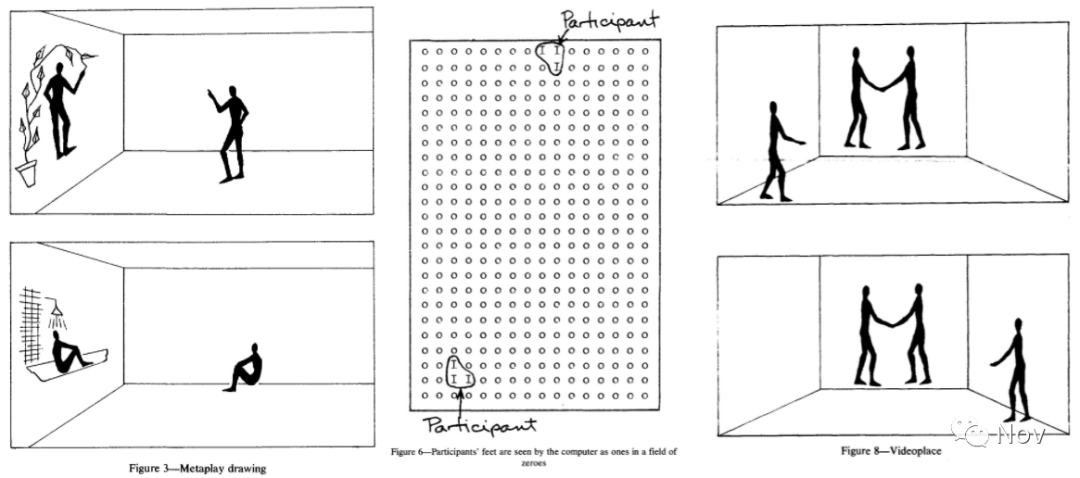

Myron Krueger was another pioneer whose work made influential strides toward participatory, spatial interactive art. His exhibitions proposed responsive environments: GLOWFLOW (1969), an environment in which lines of light glowed in response to participants’ movements through the space; METAPLAY (1970), in which visitors’ real-time video images were superimposed on a screen with computer graphics drawn remotely by the artist; PSYCHIC SPACE (1971), a program-driven environment that responded automatically to the footsteps of people entering the room; and VIDEOPLACE (1975), a video environment in which participants interacted in unexpected ways through a shared visual experience. These works can be considered cornerstones of spatial interactive art, in which computer algorithms played a key role. He also developed a theoretical framework, for himself and for others, that described responsive environments as an art form:

“The [responsive] environments described suggest a new art medium based on a commitment to real-time interaction between men and machines. The medium is comprised of sensing, display and control systems. It accepts inputs from or about the participant and then outputs in a way he can recognize as corresponding to his behavior. The relationship between inputs and outputs is arbitrary and variable, allowing the artist to intervene between the participant’s action and the results perceived.”
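Krueger’s description (sensing, display, and control systems, with an arbitrary and variable relationship between input and output that the artist can change) can be sketched as a small pipeline in which the mapping is a swappable function. This is a hypothetical illustration, not Krueger’s software: the `Reading` fields, the `follow` and `mirror` rules, and the `ResponsiveEnvironment` class are invented for the example, and a real installation would read from cameras or floor sensors rather than from a list.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Reading:
    """Hypothetical sensed input: where a participant stands and how loud the room is."""
    x: float         # participant position, normalized to 0..1
    loudness: float  # sound level, normalized to 0..1


# A mapping turns a sensed reading into a display command; the artist chooses it.
Mapping = Callable[[Reading], Dict[str, float]]


def follow(r: Reading) -> Dict[str, float]:
    """One possible rule: the light follows the participant and brightens with sound."""
    return {"light_x": r.x, "brightness": r.loudness}


def mirror(r: Reading) -> Dict[str, float]:
    """Same input, different rule: the light appears opposite the participant and dims with sound."""
    return {"light_x": 1.0 - r.x, "brightness": 1.0 - r.loudness}


class ResponsiveEnvironment:
    """Sensing -> control (artist-defined mapping) -> display."""

    def __init__(self, mapping: Mapping) -> None:
        self.mapping = mapping

    def set_mapping(self, mapping: Mapping) -> None:
        # The artist intervenes between the participant's action and the perceived result.
        self.mapping = mapping

    def respond(self, readings: List[Reading]) -> List[Dict[str, float]]:
        # Control stage: apply the current mapping to every sensed reading.
        return [self.mapping(r) for r in readings]


if __name__ == "__main__":
    env = ResponsiveEnvironment(follow)
    print(env.respond([Reading(0.25, 0.5)]))
    env.set_mapping(mirror)
    print(env.respond([Reading(0.25, 0.5)]))
```

The same participant action produces different visible results depending on which mapping is active. Keeping the mapping as a plain function is one way to reflect Krueger’s point that the input-output relationship is arbitrary and variable.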

Myron Krueger believes that the audience of responsive environments must actively participate in shaping their environment. Participants are endowed with new behavioral insights, enabling them to express themselves in new ways. They do not simply appreciate the artwork; rather, they must process the moment in their own way, co-creating a unique spatiotemporal experience.

The main difference between interactive art and reactive environments, on the one hand, and most participatory events built around theatrical performance, on the other, lies in who leads: in participatory art forms the artist directs the action, whereas in interactive art projects the events (or mechanisms) do. Söke Dinkla considered the socio-political aspects of this art form:

“The artistic material of interactive art is the automatized dialogue between program and user. Interactive artworks provide a critical analysis of the automatized communication that is replacing inter-human relationships in more and more social fields. Thus the distribution of power between user and system is not just a technological issue but a social and political one as well.”

Interaction in Spatial and Environmental Contexts

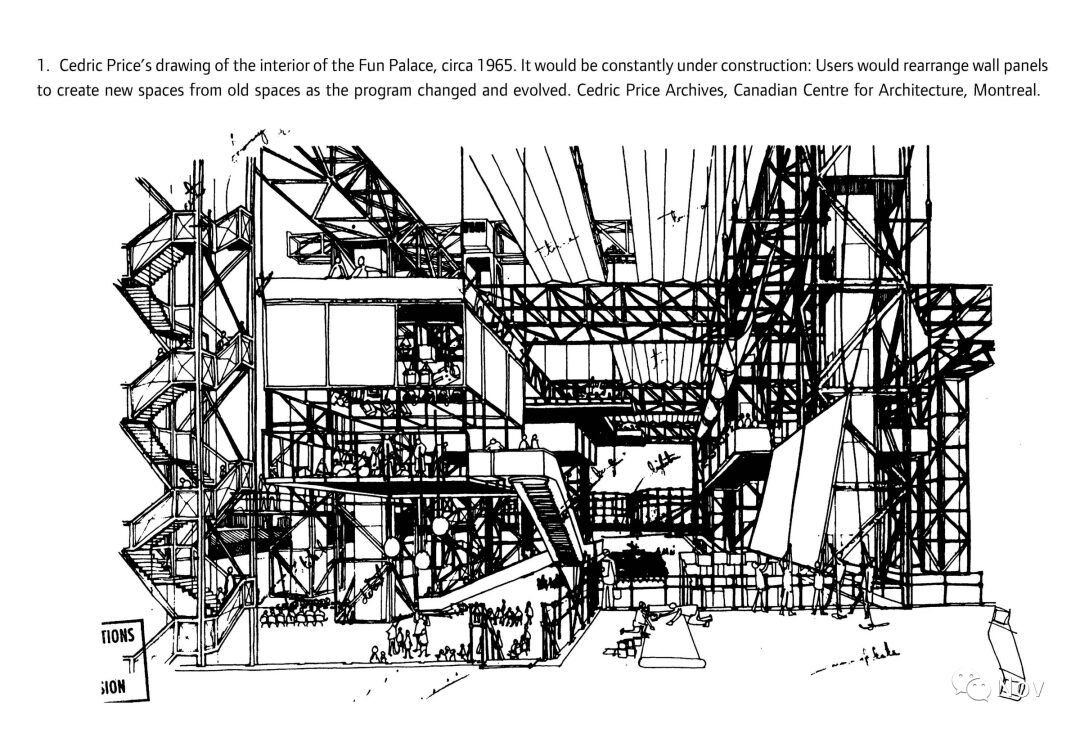

When discussing space and cybernetics, it is necessary to mention Cedric Price’s milestone project of the early 1960s, the Fun Palace. In addition to incorporating the basic laws of cybernetics, Price synthesized various contemporary discourses (such as information technology, game theory, and Situationism) to produce a new type of improvisational architecture. The Fun Palace originated in a collaboration between Price, an architect who valued the inevitability of change, chance, and indeterminacy in human environments, and the avant-garde theater producer Joan Littlewood, who dreamed of a theater where people could experience transcendence and transformation not as spectators but as actors. The Fun Palace had no single program; its form could adapt to the “constantly changing and unpredictable” temporary programs determined by its users. Contrary to traditional architectural practice, the Fun Palace framed design problems in terms of “permittivity”, from the perspective of events rather than objects.

As the approach of the Fun Palace gradually shifted from theatrical thinking to cybernetics, its planners placed greater emphasis on mathematical models drawn from statistics, psychology, and sociology. Gordon Pask later joined the project as head of its cybernetics committee. Price even hoped that computer programs could reposition movable walls and corridors as patterns of use changed, adapting the layout of the Fun Palace. The Fun Palace was never built. However, it has been widely praised and imitated, especially by young architecture students who went on to form the core of avant-garde architectural groups.

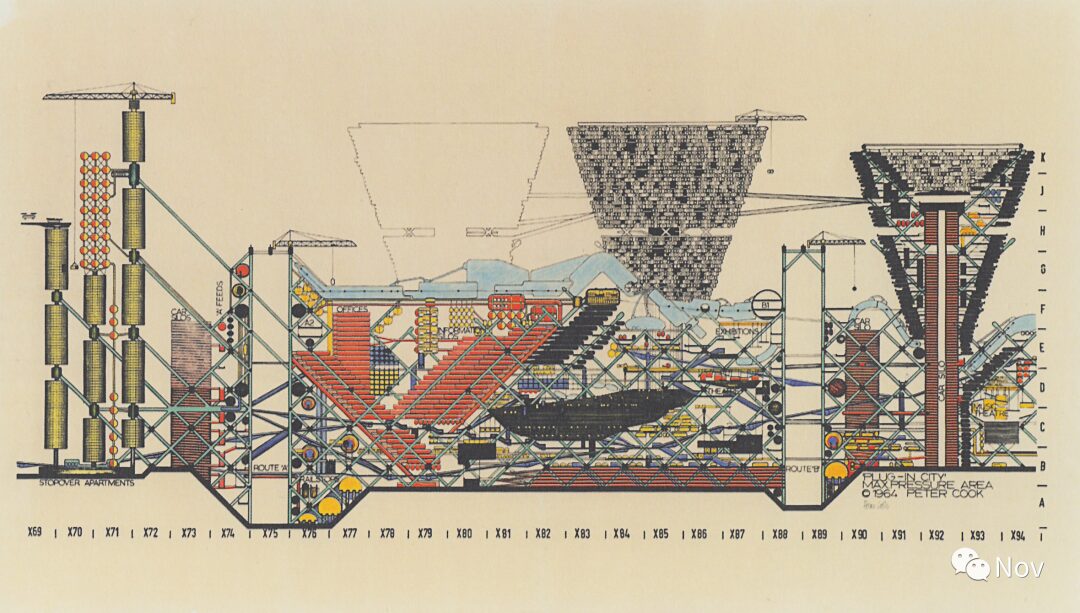

Archigram was a magazine produced by a group of architects and designers, which published nine issues in the 1960s. Its name is a blend of the words Architecture and Telegram, and each issue likewise combined structure and communication. The magazine is now considered a response to the electronic technologies that were entering popular consumer products and services. Its imagery ranged from systems design to cybernetic planning.

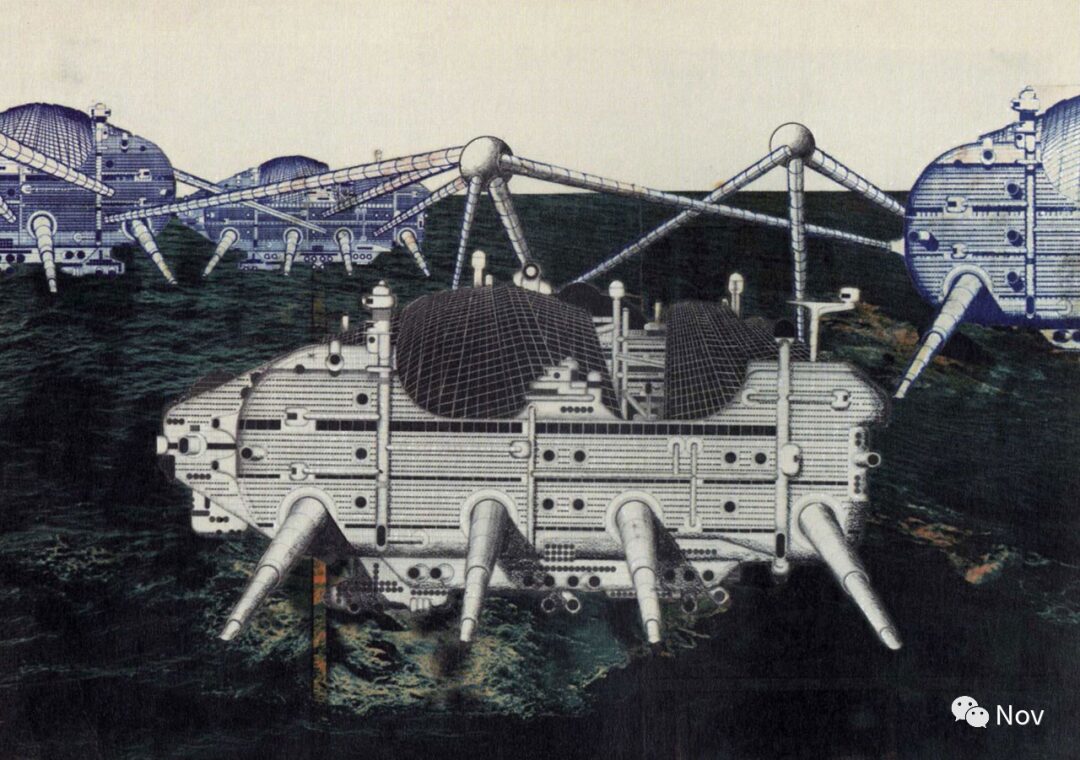

Their most notable works include Plug-In City and Walking City. In Plug-In City, Peter Cook proposed a city composed of permanent infrastructure and circulation networks, into which temporary spaces and services could be plugged or removed. By treating the entire city as a system, the proposal addressed urban issues such as population growth, transportation, and land use. Ron Herron’s Walking City consisted of massive walking structures that could serve as human settlements after a nuclear war. These structures could connect to one another or to a circulation network to exchange passengers/residents and goods.

Mark Weiser coined the term Ubiquitous Computing in 1988. Taking as his example writing, arguably the first technology for storing spoken information over the long term, he described the products of literacy technology as having a constant presence in the background: they do not require active attention, yet the information they convey is ready for use at a glance.

Weiser recognized that the silicon-based technology of the time was far from this ideal. He proposed that ubiquitous computers should operate in an invisible, non-intrusive manner, embedding themselves in the background of everyday life. The core of the concept is that networked devices make information available everywhere: people do not so much put information on their devices as put their devices on an information network. He emphasized that the power of the concept comes not from any one of these devices but from the interaction of many of them.

Ubiquitous computing takes the social aspect of the human environment into account. Subsequently, designs emerged for systems that are embedded (not just portable), location-aware, situated (not generic), and adaptive (not uniform).

Malcolm McCullough pointed out in his book Digital Ground:

“When most objects boot up and link to networks, designers have to understand the landscape of technology enough to take a position about their design.”

A major contribution of ubiquitous computing is its transformation of the computer interface. McCullough argues that ubiquitous computing differs fundamentally from portable or mobile computing because it is embedded in the spaces we inhabit. He advocates a new, location-aware form of computing to replace existing desktop computing. This new computing “emerges on the assumption that what you need, and with whom you wish to be connected at the moment, is based on where you are.”

McCullough also identified the elements that make this new form of computing possible: microprocessors, sensors for detecting motion, communication links between devices, tags for identifying actors, and actuators for closing feedback loops. To complete the set of components needed for ubiquitous computing, he added controllers, displays, location-tracking devices, and software.
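How a few of these elements fit together (a sensor reporting a tag, the tag identifying an actor, and a controller choosing an actuator command based on where that actor is) can be sketched as one pass of a location-aware loop. This is a hypothetical sketch, not McCullough’s design: the tag IDs, room names, rules, and command strings are invented, and the loop would close in practice only when the actuators change the environment and the sensors report again.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class TagEvent:
    """Hypothetical sensor reading: a tag identifying an actor, seen in a room."""
    tag_id: str
    room: str


# Hypothetical tag registry: which person each tag belongs to.
TAGS: Dict[str, str] = {"tag-17": "alice", "tag-42": "bob"}

# Hypothetical location-aware rules: (person, room) -> actuator command.
RULES: Dict[Tuple[str, str], str] = {
    ("alice", "studio"): "lights:work",
    ("bob", "studio"): "lights:dim",
    ("alice", "kitchen"): "display:recipes",
}


def controller(event: TagEvent) -> Optional[str]:
    """Identify the actor, then decide what to do based on where they are."""
    person = TAGS.get(event.tag_id)
    if person is None:
        return None  # unknown tag: take no action
    return RULES.get((person, event.room))  # None if no rule for this place


def run(events: List[TagEvent]) -> List[str]:
    """Sense -> identify -> locate -> actuate; returns commands for the actuators."""
    commands = []
    for e in events:
        cmd = controller(e)
        if cmd is not None:
            commands.append(cmd)
    return commands


if __name__ == "__main__":
    print(run([TagEvent("tag-17", "studio"),
               TagEvent("tag-99", "studio"),
               TagEvent("tag-17", "kitchen")]))
```

The same person triggers different commands in different rooms, and an unknown tag triggers nothing. This mirrors the idea that what the system offers depends on who you are and where you are.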

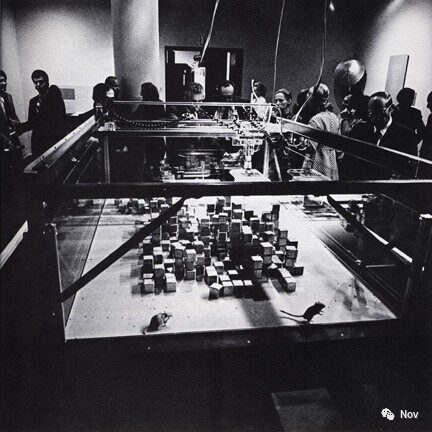

In the 1970s, Nicholas Negroponte discussed various aspects of the designer-machine dialogue and several of its byproducts that had emerged in architecture and urbanism in the late 1960s and early 1970s, such as the design approaches of flexibility, adaptability, reactivity, responsiveness, and manipulability. His project SEEK, an exhibition installation with the character of a manifesto, pioneered the idea of computationally driven arrangement in architecture. He distinguished between two types of interaction: one passive and manipulative (i.e., “moved as opposed to move”), and the other responsive, in which the environment plays an active role in the computational process. Negroponte went far beyond the simple feedback loops of traditional control systems. His responsive architecture evolved toward artificial intelligence, possessing intent and contextual cognition, with the ability to change its goals dynamically. In his book Soft Architecture Machines, Negroponte proposed a model of architecture without architects. He believed that architecture machines were more than aids to the design process; in his view, they were themselves architecture. Intelligent machines or cognitive physical environments could respond to the direct needs and desires of their inhabitants.

Democratization of Microcomputers and Interactive Design

Creating interactive art projects, technical product prototypes, and technology-embedded systems of the kind McCullough describes requires core electronic and engineering skills. Even to use the simplest technologies, such as basic control mechanisms, sensors, or motors, artists and designers had three options: buy a consumer version (if one was available) and adapt it to control their systems as desired, hire an engineer, or invest time and money in learning the skills needed to research and develop a solution themselves.

This barrier was overcome in two steps during the first decade of the 21st century. The first came in 2001, when Processing, an open-source programming language and integrated development environment (IDE), was released for the electronic art, new media art, and visual design communities. The second came in 2005, when Arduino, an open-source electronics platform built around a microcontroller and developed at the Interaction Design Institute Ivrea in Italy, entered the market. It aimed to provide a low-cost, simple platform for non-engineers and was initially intended for art students who wanted to create interactive electronic art projects.

Arduino quickly became a tool for artists and designers, and works built with it entered art museums and galleries. As it grew more popular in mainstream culture, artists and designers embraced its potential for their projects. Processing and Arduino, alone or in combination, facilitated the development of interactive art and design and, in many ways, advanced architecture and urbanism. They were joined and complemented by similar hardware and software platforms such as Raspberry Pi boards, Intel Galileo boards, BeagleBoards, openFrameworks, and Pure Data.

Search engine statistics show significant interest in these platforms. In 2009, the term Arduino appeared on 1.9 million websites. A search for “Arduino and Design” returned 613,000 sites, and a search for “Arduino and Art” returned 603,000.

Multiple user groups adopted these platforms at the same time, so their research fields began to overlap. Capabilities that were traditionally the domain of engineers and computer programmers are now accessible to artists, interaction designers, educators, and others, and these groups continuously share their code, materials, and techniques. In this way, these platforms have democratized interactive design, opening a new field for artists and designers to explore.

Interaction and Experience Design: Similar but Different

It is crucial to distinguish the roots of interaction (interaction design and interactive art) from those of user experience. Although the two concepts share many similarities in the arts and design fields, the main difference is that experience design has been a subject of attention for a long time, while interaction design is a concept less than a century old.

The concept of interaction design has been shaped by various artists and computer scientists and can be understood as an entanglement of computer science and art. User experience, by contrast, has always been an important topic throughout the history of modern architecture and industrial design. User experience is user-centered and focuses on methods for solving users’ problems, while interaction design questions the authority of the creator/designer and emphasizes the importance of the system.

Finally, in 1995, Don Norman coined the term “User Experience”, building on the practices of companies such as Toyota and Apple and on the ideas of figures such as Henry Dreyfuss.

UX Design

With the rise of digital products, “User Experience Design” has taken the specific form known today as UX design, and in the current design field the term is contested. Many believe that interaction design is part of user experience design. However, this view comes mostly from well-known product/UX designers and design firms and does not take into account the actual history and development of interaction design.

I believe that user experience design is a form of experience design, applied to the digital version of a given product. Interaction design, by contrast, was born almost entirely out of digital technology and would make little sense without it. To me, when people use the term UX design, they mean to emphasize the interactive aspects of the user/customer experience (from the perspective of human-computer interaction). User experience does not necessarily require digital technology, but in interaction design technology plays a key role. For example, user experience remains a valid concern when designing a hammer. In some very early examples of interactive art, on the other hand, it is the presence of digital technology that gives the concept of interaction its meaning. One might say that UX always involves some form of digital interaction design, while designers often use the term User Experience Design more broadly and inclusively, to describe any product regardless of its technical attributes.

Compiled from: Where did this interaction come from? — a brief history of interaction design