Cameras are a crucial component in autonomous driving, robotic vision, and high-definition imaging. Previously, we discussed some FPGA applications in high-definition cameras and machine vision, including depth cameras and stereo cameras. In those systems, the FPGA's main job is to process the captured images, a task that demands hardware with excellent parallel performance. So, how does an FPGA's processing speed compare with that of an ARM CPU? Today, we will explore the applications of FPGAs in edge computing.

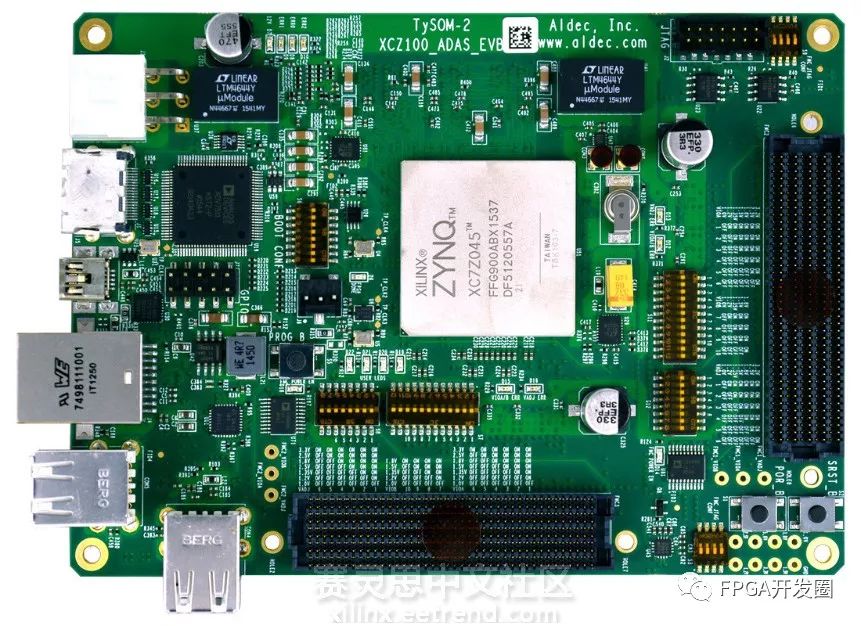

When we think of computing speed, cloud computing comes to mind first. Cloud computing offers many benefits, such as massive compute capacity and the ability to handle large volumes of data. However, no cloud provider can guarantee flawless performance, and its most significant weakness is limited real-time capability: whenever data is sent to the cloud for processing, there is unavoidable network delay, and the application has to wait for the results to come back. Edge computing, by contrast, demands both high computing performance and real-time response. Because an FPGA can process many tasks in parallel, it can meet both the data-throughput and the latency requirements, which makes it a strong fit for the edge.

This year, at the Embedded Vision Summit in Santa Clara, California, a four-camera ADAS demo based on the Aldec TySOM-2-7Z100 prototype board was shown, as seen in Figure 1. The TySOM board performs so well mainly because its core processing component is the Xilinx Zynq Z-7100 SoC.

Figure 1: TySOM-2-7Z100 Prototype Board
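To make the real-time argument about cloud versus edge processing more concrete, here is a rough latency-budget sketch in Python. It is a back-of-the-envelope model under stated assumptions, not a measurement: it treats a result as "real-time" only if it is ready within one frame period, and it models a cloud offload as raw-frame upload time plus one network round trip plus server compute. The frame size, link bandwidth, round-trip time, and compute times in the example are hypothetical values chosen for illustration, and the function names are our own.

```python
def frame_period_ms(fps: float) -> float:
    """Time between consecutive frames, i.e. the per-frame deadline in ms."""
    return 1000.0 / fps


def transfer_ms(num_bytes: int, bandwidth_mbps: float) -> float:
    """Time to push num_bytes over a link of bandwidth_mbps megabits per second."""
    return num_bytes * 8 / (bandwidth_mbps * 1e6) * 1000.0


def cloud_latency_ms(frame_bytes: int, bandwidth_mbps: float,
                     rtt_ms: float, server_compute_ms: float) -> float:
    """Assumed cloud model: upload the raw frame, pay one round trip, compute.

    The (small) result download is folded into the round-trip time.
    """
    return transfer_ms(frame_bytes, bandwidth_mbps) + rtt_ms + server_compute_ms


def meets_deadline(latency_ms: float, fps: float) -> bool:
    """True if a result is ready before the next frame arrives."""
    return latency_ms <= frame_period_ms(fps)


if __name__ == "__main__":
    fps = 30.0                      # hypothetical camera frame rate
    frame = 1920 * 1080             # hypothetical 8-bit grayscale 1080p frame
    cloud = cloud_latency_ms(frame, bandwidth_mbps=100.0,
                             rtt_ms=20.0, server_compute_ms=5.0)
    edge = 30.0                     # hypothetical on-board processing time
    print(f"budget {frame_period_ms(fps):.1f} ms")
    print(f"cloud  {cloud:.1f} ms -> {meets_deadline(cloud, fps)}")
    print(f"edge   {edge:.1f} ms -> {meets_deadline(edge, fps)}")
```

With these assumed numbers the script prints a 33.3 ms budget, about 190.9 ms for the cloud path (False), and 30.0 ms for the edge path (True). The raw upload alone (about 166 ms) already exceeds the budget, and a four-camera system like the ADAS demo would have four times as much data to move, which is why processing close to the sensor matters.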

Figure 2 shows where the Zynq device sits on the TySOM board. Why does the FPGA in Zynq perform so well in edge computing? The Zynq-7000 programmable SoC combines the software programmability of an ARM processor with the hardware programmability of an FPGA on a single chip, enabling data analysis alongside hardware acceleration. It brings CPU, DSP, ASSP, and mixed-signal functionality together on one device. Within Zynq, the FPGA fabric is responsible for image processing. So, what role does the ARM core play on the TySOM board?

The performance of Aldec's TySOM-2-7Z100 prototype board depends on both the dual-core ARM Cortex-A9 processor and the FPGA logic inside the Zynq. The image processing pipeline begins by capturing frames from the cameras and then applies an edge detection algorithm (here, "edges" means physical boundaries in the scene, such as the outlines of objects or the edges of a street). This is a compute-intensive task, because millions of pixels need to be processed. When edge detection runs on the ARM CPU, it handles only three frames per second; when it runs in the FPGA fabric, it reaches 27.5 frames per second. That roughly 9-fold increase (27.5 / 3 ≈ 9.2), nearly an order of magnitude, shows the crucial role the FPGA plays in Zynq.

Figure 2: Front View of TySOM-2-7Z100 Board
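The edge-detection step described above can be sketched in a few lines. The source does not say which edge detector the Aldec demo uses; this sketch assumes the classic 3x3 Sobel operator, a common choice, and approximates the gradient magnitude as |Gx| + |Gy|. It is plain Python written for readability, so it shows the arithmetic, not an FPGA implementation. Each output pixel needs 18 multiply-accumulates (9 per kernel): a CPU loops over them pixel by pixel, while FPGA logic can compute them in parallel and pipeline one pixel after another, which is the kind of parallelism behind the reported speedup. The function names, the threshold, and the small `speedup` helper (which just reproduces the frame-rate arithmetic quoted above) are illustrative.

```python
from typing import List

Image = List[List[int]]

# Standard 3x3 Sobel kernels for horizontal (GX) and vertical (GY) gradients.
GX = [[-1, 0, 1],
      [-2, 0, 2],
      [-1, 0, 1]]
GY = [[-1, -2, -1],
      [0, 0, 0],
      [1, 2, 1]]


def sobel_edges(img: Image, threshold: int = 128) -> Image:
    """Return a binary edge map (0 or 255) for a grayscale image.

    Uses |Gx| + |Gy| instead of sqrt(Gx^2 + Gy^2) to avoid a square root.
    Border pixels are left at 0 because their 3x3 neighbourhood is incomplete.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            # 9 multiply-accumulates per kernel: a CPU runs them sequentially,
            # whereas FPGA logic can evaluate them all in the same clock cycle.
            for ky in range(3):
                for kx in range(3):
                    p = img[y + ky - 1][x + kx - 1]
                    gx += GX[ky][kx] * p
                    gy += GY[ky][kx] * p
            out[y][x] = 255 if abs(gx) + abs(gy) >= threshold else 0
    return out


def speedup(cpu_fps: float, fpga_fps: float) -> float:
    """Ratio of FPGA to CPU frame rate."""
    return fpga_fps / cpu_fps


if __name__ == "__main__":
    # A 5x5 test image with a vertical step edge between columns 1 and 2.
    img = [[0, 0, 255, 255, 255] for _ in range(5)]
    for row in sobel_edges(img):
        print(row)
    print(f"speedup: {speedup(3, 27.5):.2f}x")
```

On the 5x5 test image, each interior output row is `[0, 255, 255, 0, 0]`, marking the vertical boundary, and `speedup(3, 27.5)` returns about 9.17.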

A high-performance processing chip alone is not enough; the board also needs versatile peripheral interfaces to interact with other devices. The TySOM design provides up to 362 user I/Os, 16 GTX transceivers, and two FMC-HPC connectors for expansion cards. On the processor side, the ARM CPU gets the standard interfaces it needs, such as DDR3 RAM, USB, and HDMI, and it can run Linux as well as real-time operating systems. The ARM CPU has 1GB of DDR3 RAM at its disposal and supports up to 32GB of SSD storage. Networking is provided by a Gigabit Ethernet PHY with an RJ45 connector, and the board has four USB 2.0 ports. Most FPGA I/O is routed to the two FMC-HPC sockets for connecting to other devices. In this way, both the ARM core and the FPGA fabric can exchange data with the outside world.

Autonomous driving is progressing rapidly, and as national policies gradually recognize it, the outlook for the technology is clearly positive. Both hardware and algorithms have important roles to play. In the context of smart cities and smart living, FPGA technology will continue to advance with this trend, embracing change and creating opportunities for transformation.