This article begins by defining real-time kernels and introducing real-time operating systems, explaining their characteristics. It then discusses monolithic kernels, layered kernels, and microkernels from the perspective of kernel functionality and structure. Finally, it introduces the VxWorks Wind kernel, which possesses microkernel characteristics.

1.1 Overview of Real-Time Kernels

“Real-time” refers to a control system’s ability to respond promptly to the external events it is required to handle. The time from an event’s occurrence to the system’s response is known as latency. For a real-time system, the crucial condition is that latency has a defined upper bound (such systems are deterministic). Once this condition is satisfied, real-time systems can be compared by the size of that bound. Here, the “system” refers to a complete design in the systems-theory sense, one that can interact independently with the external world and achieve its expected functions. It comprises three parts: real-time hardware system design, real-time operating system design, and real-time multitasking design. A working real-time system results from the organic combination of these three parts.

From another perspective, real-time systems can be classified into hard real-time systems and soft real-time systems. A hard real-time system is one where timing requirements have a specific deadline; exceeding this deadline results in erroneous outcomes that are intolerable and may lead to severe consequences. Conversely, in soft real-time systems, “real-time” is merely a matter of degree, defining a range of acceptable delays when providing services like interrupts, timing, and scheduling. Within this range, earlier responses are more valuable; late responses are less valuable but can be tolerated as long as they do not exceed the range.

Thus, a real-time operating system (RTOS) kernel must meet many specific basic requirements posed by real-time environments, including:

-

Multitasking: Due to the asynchronicity of real-world events, the ability to run many concurrent processes or tasks is crucial. Multitasking provides a better match to the real world as it allows for multi-threaded execution corresponding to many external events. The system kernel allocates CPU resources to these tasks to achieve concurrency.

-

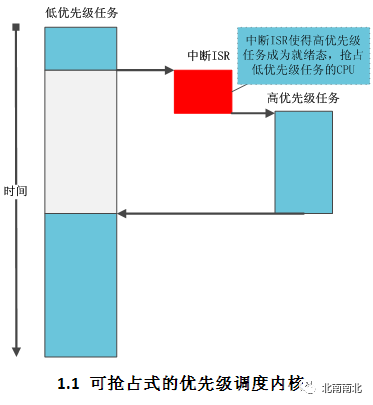

Preemptive Scheduling: Real-world events have inherited priorities, and these priorities must be considered when allocating CPU resources. In priority-based preemptive scheduling, tasks are assigned priorities, and among the executable tasks (those not suspended or waiting for resources), the one with the highest priority is allocated CPU resources. In other words, when a high-priority task becomes executable (referred to as the Ready State in the Wind kernel), it immediately preempts the currently running lower-priority task.

-

Fast and Flexible Inter-task Communication and Synchronization: In a real-time system, many tasks may execute as part of an application. The system must provide fast and powerful communication mechanisms between these tasks. The kernel must also provide synchronization mechanisms necessary for effectively sharing non-preemptable resources or critical sections.

-

Convenient Communication Between Tasks and Interrupts: Although real-world events often arrive as interrupts, to provide effective queuing, prioritization, and reduce interrupt latency, we generally prefer to handle corresponding work at the task level (this strategy is adopted by uC/OS-III). Therefore, communication between task and interrupt levels is necessary.

-

Performance Boundaries: A real-time kernel must provide worst-case performance optimization rather than throughput performance optimization. We prefer a system that can consistently execute a function within 50 microseconds (us) rather than one that averages 10 microseconds but occasionally takes 75 microseconds.

-

Special Considerations: Due to increasing demands on real-time kernels, the requirements for supporting increasingly complex functions must be considered. This includes multi-process handling (such as VxWorks RTP) and support for updated, more powerful processor architectures (such as multicore CPUs). Real-time hardware system design

Real-time hardware system design is the foundation of the other two parts. It requires sufficient processing power while ensuring the software system is efficient. For example, the number of external events that the interrupt handling logic of the hardware system can respond to simultaneously, hardware response time, memory size, processing power, and bus capacity must be adequate to ensure that all calculations can be completed in the worst-case scenario. Multi-processing hardware systems also include internal communication rate designs. When the hardware system cannot guarantee real-time requirements, the entire system cannot be considered real-time. Currently, the speed of various hardware is continuously improving, with advanced technologies emerging, making hardware no longer a bottleneck for real-time systems in most applications. Hence, the focus of real-time systems is on real-time software system design, which has become the primary content of real-time research and is the most complex part. In many cases, real-time hardware systems and real-time operating systems are not distinguished.

Let’s first look at several main indicators for evaluating the performance of real-time operating systems:

- Interrupt Latency: For a real-time operating system, the most important metric is how long interrupts are disabled. All real-time systems must disable interrupts before entering critical section code and re-enable them after the critical code has run. The longer interrupts are disabled, the longer the interrupt latency. Interrupt latency can therefore be expressed as the sum of the maximum time interrupts are disabled and the time from when the system receives an interrupt signal to when the operating system responds and enters the interrupt service routine.

- Interrupt Response Time: Interrupt response time is defined as the time from when an interrupt occurs to when the user interrupt service routine code begins executing to handle that interrupt. It includes all overhead incurred before handling starts. Because interrupt response time includes interrupt latency, discussions of how a real-time system handles external interrupts usually refer to interrupt response time. The time taken to save the context before user code runs (pushing the CPU’s registers onto the stack) is normally counted as part of interrupt response time.

- Task Switching Time: The time spent switching between tasks, measured from the first instruction that begins saving the context of the outgoing task to the first instruction of the incoming task that runs once its context has been restored.
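The definitions above, together with the earlier 50 µs versus 75 µs example, can be made concrete with a small C sketch. It is only an illustration: the function names and the idea of working from a table of measured samples are assumptions introduced here, not part of VxWorks or any benchmark suite.

```c
#include <assert.h>
#include <stddef.h>

/* Interrupt latency as defined above: the longest interrupts-disabled
 * window plus the time from the interrupt signal to ISR entry. */
unsigned interrupt_latency_us(unsigned max_disabled_us, unsigned signal_to_isr_us)
{
    return max_disabled_us + signal_to_isr_us;
}

/* Interrupt response time: latency plus the context save performed
 * before the user handler code starts. */
unsigned interrupt_response_us(unsigned latency_us, unsigned context_save_us)
{
    return latency_us + context_save_us;
}

/* Largest value in a set of measured times, i.e. the observed worst case. */
unsigned worst_case_us(const unsigned *samples, size_t count)
{
    assert(samples != NULL && count > 0);
    unsigned worst = samples[0];
    for (size_t i = 1; i < count; i++) {
        if (samples[i] > worst) {
            worst = samples[i];
        }
    }
    return worst;
}

/* A real-time bound holds only if even the worst sample is within it;
 * the average plays no part in the decision. */
int meets_bound(const unsigned *samples, size_t count, unsigned bound_us)
{
    return worst_case_us(samples, count) <= bound_us;
}
```

With a 50 µs bound, a system that always takes 50 µs passes this check, while one that usually takes 5 µs but once takes 75 µs fails it, which is exactly the preference stated for real-time kernels.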

From the perspective of real-time performance, operating systems have gone through three stages: foreground/background systems, time-sharing operating systems, and real-time operating systems.

The foreground/background system actually has no operating system; it runs only an infinite main loop without the concept of multitasking but responds to external events through interrupt service routines. In the foreground/background system, the real-time response characteristics to external events are viewed from two aspects.

- Interrupt Latency: The main loop generally leaves interrupts enabled, so foreground/background systems respond to interrupts very quickly and typically allow nesting;

- Interrupt Response Time: External requests collected by the interrupt service routines are handled only when the main loop next reaches them, so the system response time depends on the main loop cycle.
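A minimal C sketch of the foreground/background structure described above may help. The handler names and the two event sources are hypothetical; on real hardware the ISRs would be attached to interrupt vectors and the main loop would never return.

```c
#include <stdbool.h>

/* Flags set by interrupt handlers (foreground) and consumed by the
 * main loop (background). Both event sources are hypothetical. */
volatile bool uart_pending = false;
volatile bool timer_pending = false;

int uart_handled = 0;
int timer_handled = 0;

/* Foreground: each ISR only records that the event happened. */
void uart_isr(void)  { uart_pending = true; }
void timer_isr(void) { timer_pending = true; }

/* Background: one pass through the main loop body.
 * Returns the number of events handled in this pass. */
int background_pass(void)
{
    int handled = 0;
    if (uart_pending) {
        uart_pending = false;
        uart_handled++;
        handled++;
    }
    if (timer_pending) {
        timer_pending = false;
        timer_handled++;
        handled++;
    }
    return handled;
}

/* On a target, the whole "system" would be: for (;;) background_pass(); */
```

The ISRs return almost immediately, which is why interrupt latency is low, but an event is only acted on when `background_pass` next runs, so the response time is tied to the length of the loop.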

Time-sharing Operating Systems divide system computing power into time slices, allocating them to various tasks according to certain strategies, usually pursuing some sense of fairness during allocation; time-sharing operating systems do not guarantee real-time performance.

Real-Time Operating Systems (RTOS) aim to achieve real-time responses to external events. According to the definition of real-time, real-time operating systems must provide responses within a defined time. Real-time operating systems must meet the following four conditions:

-

Preemptive priority scheduling kernel, when a running task causes a higher-priority task to enter the ready state, the current task loses its CPU usage rights or is suspended, and the higher-priority task immediately gains control of the CPU, ensuring that the highest-priority task always has CPU usage rights, as shown in Figure 1.1.

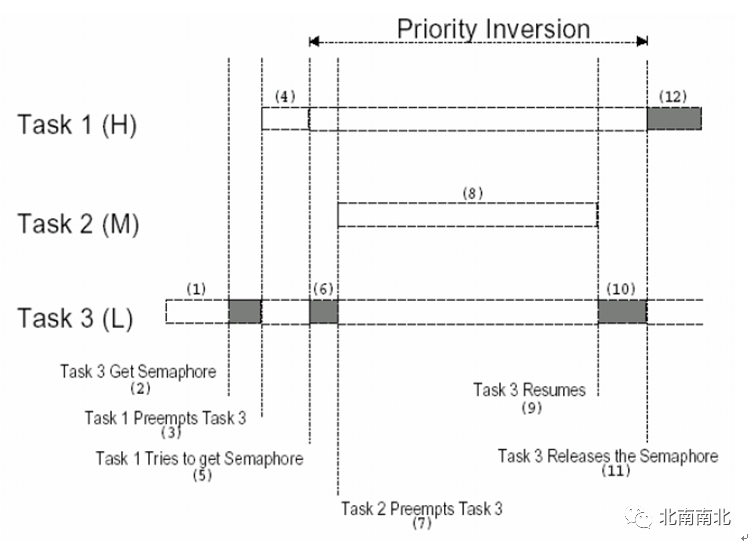

Interrupts have priorities and can be nested, meaning high-priority interrupts can interrupt the execution of low-priority interrupt handlers, gaining priority for execution, and returning to resume the lower-priority task after processing, preventing priority inversion. The priority of system services is determined by the priority of the task requesting that service, with a priority protection mechanism to prevent priority inversion. Priority inversion occurs when a high-priority task is forced to wait for an uncertain completion time of a lower-priority task. Consider the following scenario: tasks T1, T2, and T3 have decreasing priorities. T3 has acquired resources related to a semaphore. When T1 preempts T3 by acquiring the same semaphore, T3 will be blocked. If we can ensure that the time T1 is blocked does not exceed the time T3 takes to release those resources after executing, the situation will not be particularly severe, as T1 occupies a non-preemptable resource. However, lower-priority tasks are vulnerable and are often preempted by medium-priority tasks. For example, T2 can preempt T3, preventing T3 from releasing the resources needed by T1. This situation can persist, leading to an uncertain blocking time for the highest-priority task T1. The schematic diagram is shown as Figure 1.2.

Figure 1-2 Schematic Diagram of Priority Inversion

One solution to the above problem is the Priority Inheritance Protocol: when a high-priority task needs a resource held by a low-priority task, the low-priority task’s priority is raised to that of the high-priority task until it releases the resource. Another protocol is the Priority Ceiling, which is designed to prevent priority inversion rather than remedy it. Under this protocol, a task that holds a protected resource runs at the ceiling priority for as long as it holds it, whether or not it is actually blocking a high-priority task, so that it completes its operations quickly. The priority ceiling can thus be seen as a more proactive way to protect priority. We will analyze the design and implementation of these two solutions in detail in future blog posts.
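The T1/T2/T3 scenario and the effect of priority inheritance can be checked with a tiny tick-by-tick model. This is a sketch under simplifying assumptions, not how the Wind kernel implements inheritance: time advances in whole ticks, T1 is already blocked on the semaphore when the model starts, and the priority values and function name are hypothetical.

```c
#include <stdbool.h>

/* Hypothetical priorities; a lower number means a higher priority. */
enum { PRIO_T1 = 0, PRIO_T2 = 1, PRIO_T3 = 2 };

/*
 * T3 holds the semaphore and still needs cs3_ticks to finish its critical
 * section. T1 is blocked on that semaphore. T2 is ready with work2_ticks of
 * unrelated work. Returns how many ticks T1 stays blocked.
 */
int t1_blocked_ticks(int cs3_ticks, int work2_ticks, bool inherit)
{
    /* With inheritance, T3 temporarily runs at T1's priority. */
    int t3_prio = inherit ? PRIO_T1 : PRIO_T3;
    int blocked = 0;

    while (cs3_ticks > 0) {
        if (work2_ticks > 0 && PRIO_T2 < t3_prio) {
            work2_ticks--;   /* T2 preempts T3: this is the inversion */
        } else {
            cs3_ticks--;     /* T3 makes progress toward releasing */
        }
        blocked++;
    }
    return blocked;
}
```

Without inheritance, T1’s blocking time grows with however much work T2 has; with inheritance it is bounded by the remainder of T3’s critical section alone.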

The fourth condition is that the performance indicators of the real-time operating system (interrupt response time and task switching time) have fixed upper bounds. Once these four necessary conditions are met, the specific implementation mechanism of the RTOS kernel determines its real-time performance. The Wind kernel of VxWorks is a true real-time microkernel that meets the above conditions. In addition, the Wind kernel uses a single real-time address space, so task switching overhead is very low, comparable to switching to another thread within the same process on a host system such as UNIX, and there is no system call overhead. This efficient real-time design lets the Wind kernel deliver excellent real-time performance in fields ranging from industrial field control to defense and aviation (strictly speaking, the version used in aviation is VxWorks 653).

1.2 Microkernel Operating System Design Philosophy

Traditionally, an operating system is divided into kernel mode and user mode. The kernel operates in kernel mode, providing services to user-mode applications. The kernel is the soul and center of the operating system, determining its efficiency and application domain. When designing an operating system, the kernel’s functionalities and how those functionalities are organized are determined by the designer. From the perspective of kernel functionality and structural characteristics, operating systems can be categorized into monolithic kernels, layered kernels, and microkernels.

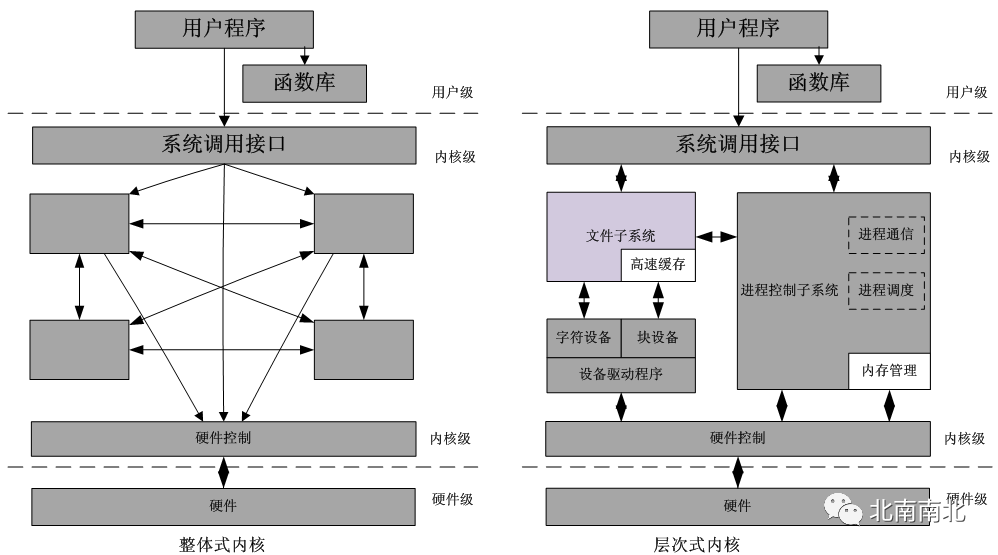

Monolithic kernels are written as a collection of procedures, linked into one large executable binary. With this technique, each procedure in the system can freely call any other, provided the latter offers some computation the former needs. Thousands of procedures that can call one another without restriction often make for a cumbersome, hard-to-understand system. Operating systems with a monolithic kernel structure do not encapsulate or hide data, which gives high efficiency but makes extension and upgrades difficult.

Layered kernel structures divide module functionalities into different layers, with lower-layer modules encapsulating internal details while upper-layer modules call the interfaces provided by lower-layer modules. Unix, Linux, and Multics belong to layered structure operating systems. Layering simplifies the operating system structure, making it easier to debug and extend. The structures of monolithic and layered kernels are shown in Figure 1.3.

Figure 1-3 Structure Diagram of Monolithic and Layered Kernels

Regardless of whether it is a monolithic structure or a layered structure, their operating systems include many functionalities needed for various potential fields, hence they are referred to as monolithic kernel operating systems. The entire core program runs in kernel space and supervisor mode, making it possible to consider the kernel itself as a complete operating system. Taking UNIX as an example, its kernel includes process management, file systems, device management, and network communication functions, while the user layer merely provides an operating system shell and some utility programs.

When designing an operating system using a layered approach, designers need to decide where to draw the boundary between kernel and user. Traditionally, all layers were placed within the kernel, but this is not necessary. In fact, it is better to keep kernel-mode functionality to a minimum, because an error inside the kernel can quickly bring down the whole system, whereas user-level tasks can be given lower privileges so that a single error is not fatal. The microkernel design philosophy aims for high reliability by dividing the operating system into small, well-defined modules, with only one module (the microkernel itself) running in kernel mode while the other modules, which have fewer privileges, run as ordinary user tasks. In particular, because each device driver and file system is treated as an ordinary task, an error in one of these modules may crash that module but will not bring down the entire system. For instance, an error in an audio driver may cause sound to stutter or stop but will not crash the computer. In contrast, in a monolithic kernel operating system all device drivers are in the kernel, so a faulty audio driver can easily make an invalid address reference and cause an annoying system crash.

Many microkernels have been implemented and put into use. Microkernels are particularly popular in real-time, industrial, aviation, and military applications because these fields require high reliability for critical tasks. Thus, most embedded operating systems adopt a microkernel structure. Microkernel operating systems are a technology that has developed over the past twenty years, with very small but efficient kernels, ranging from tens of KB to hundreds of KB, suitable for resource-limited embedded applications. Microkernels separate many common operational functionalities from the kernel (such as file systems, device drivers, network protocol stacks, etc.), retaining only the most basic contents. Well-known kernels include the INTEGRITY real-time operating system developed by Green Hills Software, VxWorks developed by Wind River Systems, the QNX real-time system used by BlackBerry manufacturer RIM (Research In Motion Ltd.), as well as L4, PikeOS, and Minix3.

Note: If a microkernel is defined by its kernel module running in CPU kernel mode while all other modules run in non-kernel mode, then VxWorks’ Wind kernel cannot strictly be classified as a microkernel. The Wind kernel simulates its privileged state through the global variable kernelState. The core service routines of the Wind kernel have names beginning with wind*. When kernelState is FALSE, no program is currently in the Wind kernel, and VxWorks’ peripheral modules may call the kernel’s core service routines. When kernelState is TRUE, another program is executing a kernel-mode routine, and any program that needs a core service routine has its request placed in a delayed queue until the program in kernel mode exits (that is, until kernelState becomes FALSE), at which point the delayed kernel-mode routines are executed. In effect, kernelState simulates a non-preemptive kernel mode. By simulating kernel-mode service routines with a global variable, and through its high configurability, the Wind kernel of VxWorks exhibits the characteristics typical of microkernel operating systems.
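The kernelState behavior described in the note can be modeled in a few lines of C. This is a simplified sketch, not Wind kernel source: only the name kernelState comes from the text, while `kernel_call`, `work_q_drain`, the queue depth, and the demo routines are hypothetical. A real kernel would also lock interrupts while it manipulates the queue, which this model omits.

```c
#include <stdbool.h>
#include <stddef.h>

#define WORK_Q_LEN 8   /* hypothetical queue depth */

typedef void (*KernelRoutine)(int arg);

typedef struct {
    KernelRoutine fn;
    int arg;
} WorkItem;

bool kernelState = false;   /* true while a kernel routine is running */
WorkItem work_q[WORK_Q_LEN];
size_t work_head = 0;
size_t work_count = 0;

/* Run everything that was deferred while the kernel was busy. */
void work_q_drain(void)
{
    while (work_count > 0) {
        WorkItem item = work_q[work_head];
        work_head = (work_head + 1) % WORK_Q_LEN;
        work_count--;
        item.fn(item.arg);
    }
}

/* Returns 1 if fn ran at once, 0 if it was deferred, -1 if the queue is full. */
int kernel_call(KernelRoutine fn, int arg)
{
    if (kernelState) {
        if (work_count == WORK_Q_LEN) {
            return -1;
        }
        size_t tail = (work_head + work_count) % WORK_Q_LEN;
        work_q[tail].fn = fn;
        work_q[tail].arg = arg;
        work_count++;
        return 0;
    }
    kernelState = true;
    fn(arg);
    work_q_drain();   /* still in kernel state, so new requests keep queuing */
    kernelState = false;
    return 1;
}

/* Demo routines: record the order in which requests actually execute. */
int trace[16];
size_t trace_len = 0;

void record(int arg)
{
    if (trace_len < 16) {
        trace[trace_len++] = arg;
    }
}

/* Simulates an interrupt requesting a kernel service while one is running. */
void busy_routine(int arg)
{
    kernel_call(record, arg + 100);   /* kernelState is true: deferred */
    record(arg);
}
```

Calling `kernel_call(busy_routine, 1)` records 1 first and 101 second: the nested request is not run in the middle of the routine that is already in kernel state but only once that routine has finished, which is the non-preemptive behavior the note describes.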

Microkernel operating systems are generally believed to have the following advantages:

- A unified interface, with no need to distinguish between user mode and kernel mode;
- Good scalability, easy to expand, adapting to hardware updates and application changes;
- Good portability, requiring minimal code modifications to port the operating system to different hardware platforms;
- Good real-time performance, with fast kernel response times, easily supporting real-time processing;
- High security and reliability, with microkernels designed for security as an internal system feature, using only a small number of application programming interfaces externally.

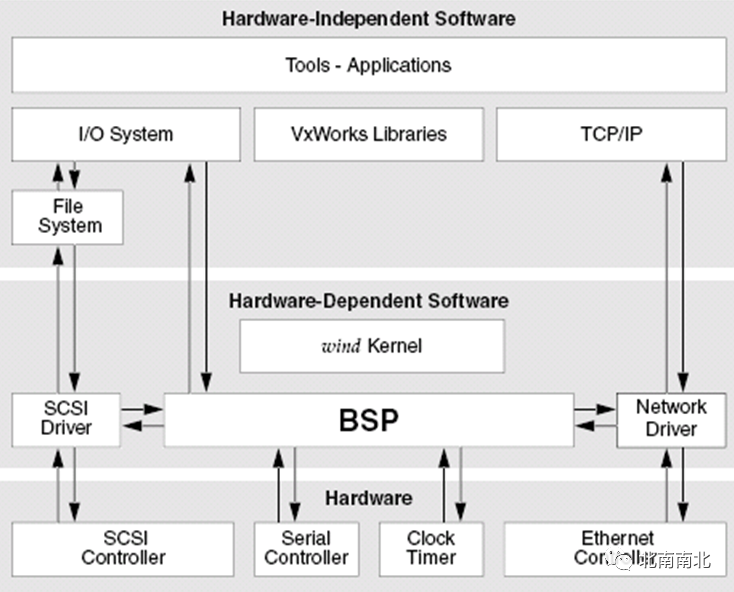

Since the operating system core resides in memory, and the microkernel structure streamlines the core functionalities of the operating system, the kernel size is relatively small, with some functionalities moved to external storage. Thus, the microkernel structure is particularly suitable for dedicated embedded systems, as illustrated in Figure 1-4, which intuitively shows the position of the Wind kernel in the VxWorks system.

Figure 1-4 VxWorks System Structure

1.3 Wind Microkernel Design

To enhance microkernel efficiency, there are two implementation modes: protected virtual address space mode and unprotected single physical address space mode. The former is adopted in monolithic kernel operating systems (like Unix) and some microkernel operating systems (like QNX, Minix3). The advantages of this mode are evident: tasks run independently, unaffected by errors in other tasks, resulting in high system reliability.

The Wind kernel of VxWorks adopts a single physical address space mode, with all tasks running in the same address space, without distinguishing between kernel mode and user mode. Its advantages include:

- No need for virtual address space switching during task switching
- Tasks can directly share variables without needing to copy data across different address spaces through the kernel
- No need to switch between kernel mode and user mode during system calls, equivalent to direct function calls

Note: System calls require switching from user mode to kernel mode to perform operations that cannot be executed in user mode. On many processors, this is achieved through a TRAP instruction equivalent to a system call, which involves strict parameter checks before executing that instruction. VxWorks does not have such switches, so system calls and regular function calls are effectively indistinguishable. However, this series of blog posts will still use the general terminology.

Debates have arisen regarding which of the two modes is superior. Comparing the merits of both is often very difficult. This article tends to believe that for embedded real-time applications, the single physical address mode is more suitable. Many practices have proven that relying on virtual address protection to enhance reliability has its limitations; after all, when a program runs into an error, virtual address protection merely delays, accumulates, and amplifies the error process. Those who have used Windows may have this feeling. The VxWorks operating system, validated by numerous critical applications, has proven to be highly reliable (of course, a reliable system must consist of both a reliable operating system and a reliable application system).

The layered Wind kernel provides only the service routines for the multitasking environment, inter-task communication, and synchronization. These service routines are sufficient to support the rich functionality that VxWorks provides at higher levels. The Wind kernel is invisible to users.

Applications use system calls to manage tasks and synchronization, which requires kernel participation, but the processing of these calls is invisible to the calling tasks. Applications merely link against the appropriate VxWorks routines (typically using VxWorks’ dynamic linking feature) and issue system calls just as they would call a subroutine. This interface does not need a jump table like the one in the Linux kernel, where the user selects a kernel function by passing an integer.
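The contrast between a jump-table interface and plain function calls can be sketched as follows. Everything in this example is hypothetical: the two services, the service numbers, and `trap_dispatch` exist only to illustrate the two calling styles, and no real trap instruction or mode switch is involved.

```c
#include <stddef.h>

/* Hypothetical kernel services, for illustration only. */
long svc_double(long x) { return 2 * x; }
long svc_negate(long x) { return -x; }

typedef long (*Service)(long);

enum { SVC_DOUBLE = 0, SVC_NEGATE = 1, SVC_COUNT = 2 };

/* Jump table indexed by service number. */
const Service svc_table[SVC_COUNT] = { svc_double, svc_negate };

/*
 * Trap-style entry point: the caller names the service with an integer,
 * and the dispatcher validates it before indexing the table.
 * Returns 0 on success, -1 for an unknown service or a NULL result pointer.
 */
int trap_dispatch(int number, long arg, long *result)
{
    if (number < 0 || number >= SVC_COUNT || result == NULL) {
        return -1;
    }
    *result = svc_table[number](arg);
    return 0;
}
```

In the trap style the application would write `trap_dispatch(SVC_DOUBLE, 21, &r)`, paying for validation and an indirect call. In the direct style described for VxWorks, the linker resolves the symbol and the application simply writes `svc_double(21)`.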

1.4 Wind Kernel Class Design Philosophy

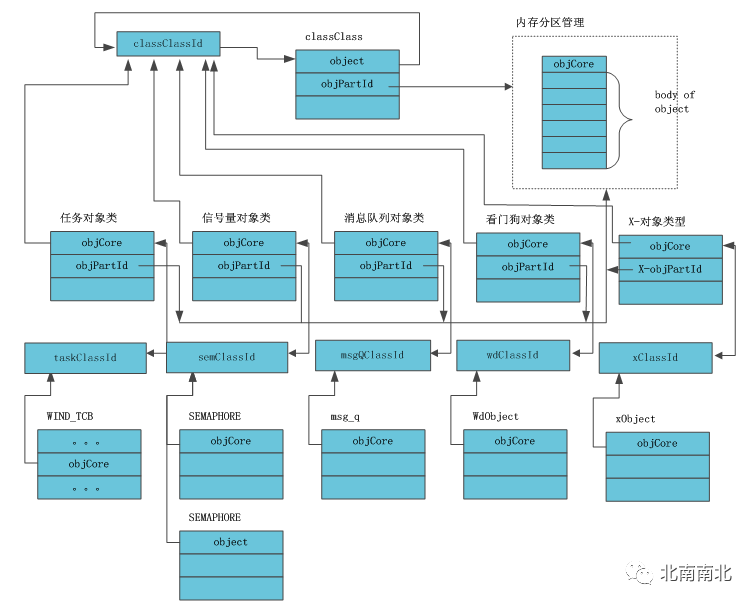

VxWorks adopts class and object concepts to divide the Wind kernel into five components: task management module, memory management module, message queue management module, semaphore management module, and watchdog management module.

Note: In addition to the five modules mentioned above, there are also the Virtual Memory Management Interface (VxVMI) module and TTY circular buffer management module, which are also managed by class and object concepts in the Wind kernel. The Virtual Memory Interface module (VxVMI) is a functional module of VxWorks that utilizes the memory management unit (MMU) on user chips or boards to provide users with advanced memory management capabilities; the TTY circular buffer management module is the core of the ttyDrv device, which acts as a virtual device, forming a conversion layer between the I/O system and the actual drivers, providing VxWorks with a standard I/O interface. A virtual ttyDrv device can manage multiple serial device drivers. These two modules are not strictly part of the theoretical RTOS kernel, which is why they are not included in the components of the Wind kernel.

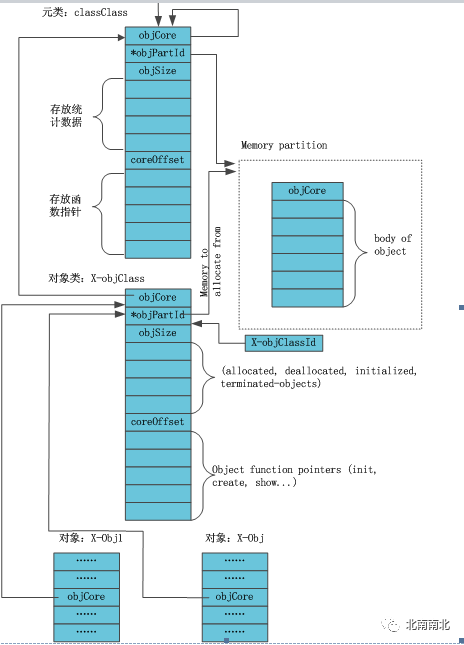

In the Wind kernel, all objects are components of classes, and classes define the methods for operating on objects while maintaining records of all operations on those objects. The Wind kernel adopts C++ semantics, but is implemented in C. The entire Wind kernel is implemented through explicit coding, and its compilation process does not depend on any specific compiler. This means that the Wind kernel can be compiled using both the built-in Diab compiler of VxWorks and the open-source GNU/GCC compiler. VxWorks has designed a metaclass for the Wind kernel, and all object classes are based on this metaclass. Each object class is only responsible for maintaining the operational methods (such as creating, initializing, and deleting objects) of its respective objects, as well as managing statistical records (such as data on created and destroyed objects). Figure 1.5 illustrates the logical relationship between the metaclass, object classes, and objects.

Figure 1-5 Relationship Diagram of Metaclass, Object Classes, and Objects

Note: Every time I draw such class and object relationship diagrams, I feel exceptionally conflicted. This is because in the design of the Wind kernel, the metaclass classClass has an ID number classClassId, and each object class X-objClass also has an ID number X-objClassId. In the C implementation, classClassId and X-objClassId are pointer variables that store the addresses of the respective classes. When initializing object classes and objects, the values of the pointer variables stored in the objCore fields of the object class and objects (i.e., the addresses of the respective classes) have little relation to classClassId. Figure 1.5 accurately reflects the real relationship of the class addresses stored in objCore.

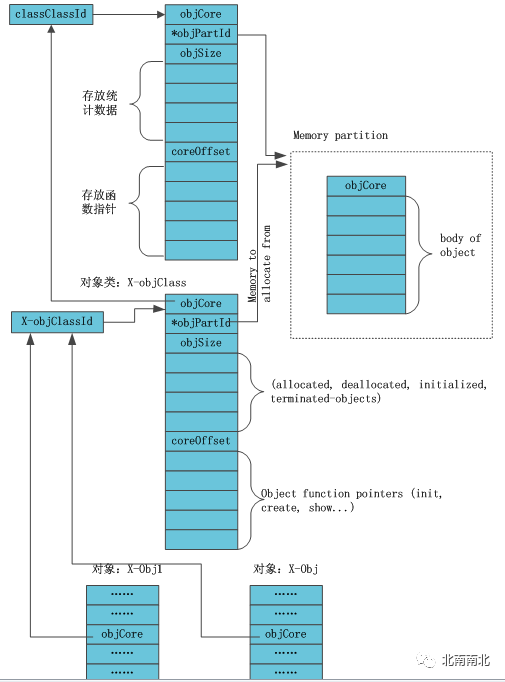

Logically speaking, Figure 1.6 is easier to read. From the C implementation perspective, classClassId and X-objClassId serve different roles, but Figure 1.6 describes the logical relationship more clearly (and the diagram is prettier), even though the pointer to the class address is actually stored in objCore. I therefore use the style of Figure 1.6 when drawing class and object relationship diagrams.

Figure 1-6 Relationship Diagram of Metaclass, Object Classes, and Objects

As shown in Figures 1.5 and 1.6, each object class points to the metaclass classClass, and each object class is only responsible for managing its respective objects. The complete logical relationship among the metaclass, object classes, and objects in the Wind kernel is illustrated in Figure 1.7.

Figure 1-7 Relationship Between Object Classes, Objects, and Metaclasses in Wind Kernel

Note: The class management model is not a characteristic of the Wind kernel; functionally, it is merely a means of organizing the various modules of the Wind kernel, and all object classes and objects of the kernel modules depend on it. VxWorks uses classes and objects to organize the structure of the operating system, and one significant advantage is that it increases code security, allowing verifications when creating new object classes and objects, as well as when deleting them.
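The verification benefit mentioned in the note can be illustrated with a small C sketch of the class and object idea, following the logical view of Figure 1.6. The names classClass and objCore come from the text; the struct layouts, the counters, and every function here are simplified assumptions and do not reproduce the actual VxWorks definitions.

```c
#include <stdbool.h>
#include <stddef.h>

typedef struct obj_class ObjClass;

/* Every object starts with an objCore that stores the address of its class. */
typedef struct {
    ObjClass *pObjClass;
} ObjCore;

struct obj_class {
    ObjCore  objCore;     /* an object class is itself an object of the metaclass */
    unsigned initCount;   /* statistics kept by the class (hypothetical fields) */
    unsigned termCount;
};

/* Hypothetical semaphore object managed by a semaphore object class. */
typedef struct {
    ObjCore objCore;
    int     count;
} Sem;

ObjClass classClass;   /* the metaclass */
ObjClass semClass;     /* one object class */

/* Each object class, and the metaclass itself, points at the metaclass. */
void class_init(ObjClass *cls)
{
    cls->objCore.pObjClass = &classClass;
    cls->initCount = 0;
    cls->termCount = 0;
}

/* An object is valid for a class only if its core points at that class. */
bool obj_verify(const ObjCore *core, const ObjClass *cls)
{
    return core != NULL && core->pObjClass == cls;
}

void sem_init(Sem *sem, int count)
{
    sem->objCore.pObjClass = &semClass;
    sem->count = count;
    semClass.initCount++;
}

/* Returns false if the object is not a live semaphore. */
bool sem_terminate(Sem *sem)
{
    if (sem == NULL || !obj_verify(&sem->objCore, &semClass)) {
        return false;
    }
    sem->objCore.pObjClass = NULL;   /* invalidate so later use is detected */
    semClass.termCount++;
    return true;
}
```

Because every operation can first compare the stored class address with the expected class, a stale or mistyped handle is rejected instead of being treated as a valid object. In this sketch, terminating the same semaphore twice fails on the second call.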

1.5 Characteristics of the Wind Kernel

The Wind kernel of VxWorks naturally meets all four RTOS conditions described in Section 1.1, and its features can be summarized as follows:

- Scalable microkernel design;
- Multitasking concurrent execution;
- Preemptive priority scheduling algorithm;
- Optional time-slice round-robin algorithm;
- Inter-task communication and synchronization mechanisms;
- Fast context switching times;
- Low interrupt latency;
- Fast interrupt response times;
- Support for nested interrupts;
- 256 task priorities;
- Task deletion protection;
- Priority inheritance;
- Function-based calls, avoiding trap instructions and jump tables;
- The VxWorks kernel operates in processor privileged mode;
- The VxWorks kernel is layered, with the core library (the Wind kernel) in kernel mode, protected by the global variable kernelState.

Note: The next parts of this series of blog posts will analyze in detail how the Wind kernel is designed to possess these characteristics.

To elaborate a bit more: In analyzing the Wind kernel, I adhere to the principle of separating mechanism and policy. The separation of mechanism and policy is the guiding philosophy of microkernel design. In other words, the guiding principle of microkernel operating system design is to provide mechanisms rather than policies. To clarify this, let’s take task scheduling as an example. A simple scheduling algorithm assigns a priority to each task and lets the kernel execute the ready task with the highest priority. In this example, the mechanism is the kernel’s search for the highest-priority ready task and running it; the policy is assigning corresponding priorities to tasks. In other words, the mechanism is responsible for providing functionality, while the policy is responsible for how to use that functionality. The separation of policy and mechanism can make the operating system kernel smaller and more stable. As the saying goes, “A beautiful kernel is not about what functionalities can be added, but what functionalities can be reduced.”
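The scheduling example above can be written down as a short C sketch in which the mechanism and the policy are separate functions. The `Task` structure and both function names are hypothetical. The convention that a lower number means a higher priority mirrors VxWorks, where 0 is the highest priority.

```c
#include <stdbool.h>
#include <stddef.h>

typedef struct {
    const char *name;
    int  priority;   /* lower number = higher priority, as in VxWorks */
    bool ready;
} Task;

/* Mechanism: find the highest-priority ready task, or NULL if none is ready.
 * It knows nothing about why a task has the priority it has. */
Task *pick_next(Task *tasks, size_t count)
{
    Task *best = NULL;
    for (size_t i = 0; i < count; i++) {
        if (tasks[i].ready &&
            (best == NULL || tasks[i].priority < best->priority)) {
            best = &tasks[i];
        }
    }
    return best;
}

/* Policy hook: the application decides which priority each task gets. */
void set_priority(Task *task, int priority)
{
    task->priority = priority;
}
```

Changing which task runs first requires only a different call to `set_priority`; `pick_next` stays untouched. That is the sense in which the kernel supplies the mechanism and leaves the policy to its users.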

This series of blog posts aims to analyze the design philosophy, working mechanisms, and characteristics of the Wind kernel while reflecting on the sources of RTOS kernel design ideas. The Wind kernel of VxWorks has undergone nearly 20 years of development and improvement, reaching its current stable state. The current design must have its internal considerations, and I hope to analyze the design philosophy, working mechanisms, and characteristics of the Wind kernel from the perspective of a system designer to provide references for designing an excellent RTOS kernel!