For those of us born in the 80s, our first encounter with computers likely occurred around 1995, when the buzzword was multimedia. I still remember watching a classmate successfully open a game by entering a few DOS commands, and I was utterly impressed. To me, what appeared on the screen was like a foreign language. With computers, our lives have changed dramatically—gaming, browsing the internet, chatting, and even making a career out of it. Sometimes I can’t help but marvel at the power of computers.

Humans are always clever yet lazy: even a calculation as simple as 1+1 is something we would rather not do by hand. In 1623, Wilhelm Schickard built a “calculating clock” that could add and subtract six-digit numbers by turning gears, and it rang a bell to signal an overflow. Speaking of calculating aids, China has records of the abacus dating back to the late Eastern Han period.

Computers have evolved along with the underlying technology, passing through mechanical computers, vacuum-tube computers, transistor computers, small-scale integrated-circuit computers, and very-large-scale integrated-circuit computers. We do not intend to cover the whole history of computers here; instead, we introduce modern computers based on the Von Neumann architecture.

1. Development of Computers

The development of computers includes both hardware and software advancements. Hardware development provides faster processing speeds, while software development enhances user experience. The two complement each other and are inseparable.

First Stage: The period before the mid-1960s was the early era of computer system development. General-purpose hardware became quite common during this period, but software was written specifically for each application, and most people believed that software development required no advance planning. Software at that time consisted essentially of small programs, and the programmers and users were often the same person (or group).

Second Stage: The second generation of computer system development ran from the mid-1960s to the mid-1970s. In these ten years, computer technology made significant progress. Multiprogramming and multi-user systems introduced new concepts of human-computer interaction, opened new horizons for computer applications, and raised the collaboration between hardware and software to a new level.

Third Stage: The third generation of computer system development began in the mid-1970s and spanned a full decade. During this time, computer technology advanced considerably again. Distributed systems greatly increased the complexity of computer systems, with local area networks, wide area networks, broadband digital communication, and the growing demand for “instant” data access placing higher demands on software developers.

Fourth Stage: In the fourth generation of computer system development, the focus shifted away from individual computers and programs. People began to feel the integrated effects of hardware and software. Powerful desktop machines and networks, controlled by complex operating systems, combined with advanced application software, have become the mainstream today. The architecture of computers has rapidly transformed from a centralized mainframe environment to a distributed client/server model.

2. Basic Principles of Computers

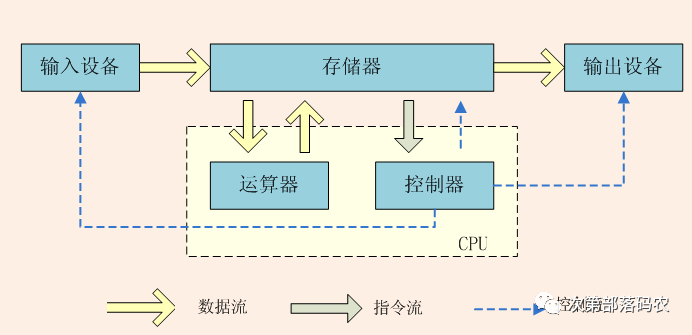

Having briefly covered the history of computer development, let’s now look at how computers work. Modern computers are largely based on the Von Neumann architecture, which is the premise of this discussion. The core of the Von Neumann architecture is stored programs and sequential execution, and however computers evolve, this basic principle stays the same. A computer program, in essence, tells the computer what to do.

2.1 Von Neumann Architecture

The Von Neumann architecture has the following characteristics:

- Data and instructions are represented in binary.

- Instructions and data are stored in the same memory without distinction.

- Each instruction in the program is executed sequentially.

- Computer hardware consists of five main parts: the arithmetic logic unit, control unit, memory, input devices, and output devices.
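As a minimal sketch of the first two characteristics, the Python snippet below models a stored-program memory in which program words and data words sit side by side as plain binary numbers. The 12-bit word, the 16-word memory, and the encoding of the program words (a 4-bit opcode followed by two 4-bit fields, borrowing the hypothetical instruction table in 2.2.1.1) are illustrative assumptions, not part of the Von Neumann definition.

```python
WORD_BITS = 12     # assumed word width of this toy machine
MEMORY_WORDS = 16  # assumed memory size (4-bit addresses)

# One memory holds both the program and its data; every entry is just a number.
memory = [0] * MEMORY_WORDS
memory[0] = 0b0001_1000_0000  # program word (toy encoding: load address 8 into register 0)
memory[1] = 0b0001_1001_0001  # program word (load address 9 into register 1)
memory[2] = 0b0011_0000_0001  # program word (add register 1 to register 0)
memory[3] = 0b0010_0000_1010  # program word (store register 0 at address 10)
memory[8] = 5                 # data word
memory[9] = 7                 # data word


def as_bits(word: int) -> str:
    """Show a word the way the hardware holds it: a fixed-width string of 0s and 1s."""
    return format(word, f"0{WORD_BITS}b")


for address in (0, 1, 2, 3, 8, 9):
    print(address, as_bits(memory[address]), memory[address])
```

Nothing in the bits marks which words are instructions: read as a number, `memory[0]` is simply 384. Only the way the CPU uses a word decides what it is.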

A computer based on the Von Neumann architecture must have the following functions:

- Load the required programs and data into the computer.

- Have the capability to retain programs, data, intermediate results, and final computation results for the long term.

- Be able to perform various arithmetic, logical operations, and data transfer processing.

- Control the flow of programs as needed and coordinate the operation of the machine’s components according to instructions.

- Output the processing results to the user as required.

2.2 Working Principles of Computers

For modern computers, the two most critical components are the CPU and memory. Memory stores the program instructions to be executed, and the CPU executes them. The CPU must first know where in memory the instructions are stored; it then fetches and executes them and writes the results back to memory.

2.2.1 CPU Instructions and Programming Languages

Before understanding how the CPU and memory work, let’s first explore the relationship between CPU instructions and programming languages.

2.2.1.1 CPU Instructions

Since both instructions and data in a computer are represented in binary, the computer only recognizes the digits 0 and 1. Early programs represented 0s and 1s as holes punched by hand in paper tape, and the machine operated according to the resulting combinations. Later, programs were written directly as 0s and 1s; this is known as machine language. This raises a question: how does the computer understand these combinations?

This is where CPU instructions come in. When buying a CPU, we often hear about its instruction set. Each CPU instruction corresponds to a specific combination of 0s and 1s, and each CPU is designed with an instruction set that matches its hardware circuits. With the instruction set documentation, you can write machine code that the CPU understands; different CPUs may therefore use different machine code. For example, the table below defines a hypothetical instruction set that our own hardware circuit can execute.

| Opcode | Format | Description |
|---|---|---|
| 0001 | [address][register] | Load value from memory to register |
| 0010 | [register][address] | Write register value to memory |
| 0011 | [register1][register2] | Addition operation |

As computers have developed, the number of instructions supported by the CPU has increased, and their functionality has become more powerful. The above image shows the instruction set supported by the current Core i5 processor.
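The hypothetical table fixes only the opcodes and the order of the operand fields, not their widths. Assuming, purely for illustration, a 12-bit word made of a 4-bit opcode and two 4-bit operand fields, a Python sketch of how such machine code could be packed and unpacked looks like this. The field widths and the helper names `encode`/`decode` are hypothetical.

```python
# Hypothetical layout: [4-bit opcode][4-bit field A][4-bit field B] = 12-bit word
DESCRIPTIONS = {
    0b0001: "Load value from memory to register",  # fields: [address][register]
    0b0010: "Write register value to memory",      # fields: [register][address]
    0b0011: "Addition operation",                  # fields: [register1][register2]
}


def encode(opcode: int, field_a: int, field_b: int) -> int:
    """Pack an opcode and two operand fields into one machine-code word."""
    for value in (opcode, field_a, field_b):
        if not 0 <= value <= 0xF:
            raise ValueError(f"{value} does not fit in a 4-bit field")
    return (opcode << 8) | (field_a << 4) | field_b


def decode(word: int) -> tuple[str, int, int]:
    """Split a machine-code word back into (description, field A, field B)."""
    opcode = (word >> 8) & 0xF
    description = DESCRIPTIONS.get(opcode, "Unknown instruction")
    return description, (word >> 4) & 0xF, word & 0xF


word = encode(0b0001, 8, 0)   # load memory address 8 into register 0
print(format(word, "012b"))   # 000110000000
print(decode(word))
```

To the CPU, the string `000110000000` is the instruction; the description exists only in the documentation.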

2.2.1.2 Assembly Language

Writing machine language as 0s and 1s has the advantage that the CPU can execute it directly. However, such programs are hard to read, difficult to maintain, and error-prone. This led to assembly language, which uses mnemonics in place of opcodes and symbolic labels in place of addresses. It is essentially a more readable mapping of machine language.

| Opcode | Assembly Instruction | Format | Description |
|---|---|---|---|
| 0001 | READ | [addrLabel][regLabel] | Load value from memory to register |
| 0010 | WRITE | [regLabel][addrLabel] | Write register value to memory |
| 0011 | ADD | [var1][var2] | Addition operation |

Converting assembly language to machine language requires a tool called an assembler. CPU manufacturers generally release updated assemblers along with new instruction sets. With an older assembler that does not recognize the new mnemonics, the new instructions can only be used by writing their machine code directly.
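An assembler is, at its core, a lookup from mnemonics to opcodes plus some operand parsing. The Python sketch below assembles the READ/WRITE/ADD mnemonics of our hypothetical instruction set. The operand syntax (an `R` prefix for registers, plain numbers for addresses, `;` for comments), the 12-bit layout with 4-bit fields, operand order following the machine-code table ([address][register] for READ, [register][address] for WRITE), and the assumption that ADD leaves its result in the first register are all illustrative choices, not a real assembler's syntax.

```python
OPCODES = {"READ": 0b0001, "WRITE": 0b0010, "ADD": 0b0011}  # hypothetical mnemonics


def parse_operand(token: str) -> int:
    """'R1' -> 1 (register number), '8' -> 8 (memory address); both must fit in 4 bits."""
    value = int(token[1:]) if token.upper().startswith("R") else int(token)
    if not 0 <= value <= 0xF:
        raise ValueError(f"operand out of range: {token}")
    return value


def assemble(source: str) -> list[int]:
    """Translate toy assembly, one instruction per line, into 12-bit machine words."""
    words = []
    for line in source.splitlines():
        line = line.split(";", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        mnemonic, *operands = line.replace(",", " ").split()
        if len(operands) != 2:
            raise ValueError(f"expected two operands: {line}")
        field_a, field_b = (parse_operand(op) for op in operands)
        words.append((OPCODES[mnemonic.upper()] << 8) | (field_a << 4) | field_b)
    return words


program = """
READ 8, R0    ; R0 <- memory[8]
READ 9, R1    ; R1 <- memory[9]
ADD R0, R1    ; R0 <- R0 + R1 (assumed destination)
WRITE R0, 10  ; memory[10] <- R0
"""
for word in assemble(program):
    print(format(word, "012b"))
```

Each line of assembly becomes exactly one machine word, which is why assembly is described as a readable mapping of machine language.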

2.2.1.3 High-Level Languages

The emergence of assembly language greatly improved programming efficiency, but a problem remained: different CPUs may have different instruction sets, so each CPU needed its own assembly program. This led to high-level languages such as C and, later, C++, Java, and C#. A single high-level expression can stand for several assembly instructions, and many operational details (such as stack and register operations) are hidden, so programmers can write programs more intuitively. Object-oriented languages brought programming even closer to the way we think, letting us focus on program logic rather than low-level details.

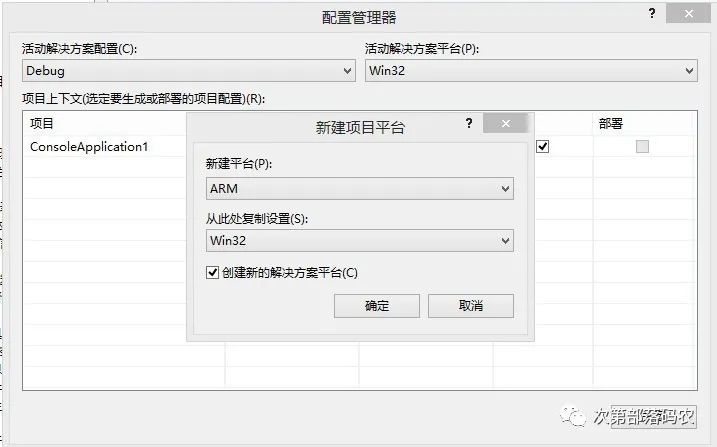

High-level languages need a compiler to convert them into assembly language, which an assembler then turns into machine code. Therefore, as long as each CPU architecture has its own compiler and assembler, the same program can run on different CPUs. In the image above, for example, VS2010 lets us choose the target platform for compilation: x86, x64, ARM, etc. Besides such compiled languages, there are also interpreted languages like JS, which will not be discussed here.
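To make "one expression, several instructions" concrete, here is a deliberately tiny compiler sketch in Python. It understands only statements of the form `dest = x + y` and emits the hypothetical READ/WRITE/ADD assembly from the table in 2.2.1.2. The fixed variable-to-address mapping, the use of R0 and R1, and the assumption that ADD leaves its result in R0 are illustrative; real compilers parse full grammars and allocate registers.

```python
import re


def compile_sum(statement: str, addresses: dict[str, int]) -> list[str]:
    """Compile 'dest = x + y' into toy assembly; variables live at fixed addresses."""
    match = re.fullmatch(r"\s*(\w+)\s*=\s*(\w+)\s*\+\s*(\w+)\s*", statement)
    if match is None:
        raise ValueError("this sketch only understands 'dest = x + y'")
    dest, x, y = match.groups()
    return [
        f"READ {addresses[x]}, R0",      # load the first operand into a register
        f"READ {addresses[y]}, R1",      # load the second operand
        "ADD R0, R1",                    # assumed: the sum is left in R0
        f"WRITE R0, {addresses[dest]}",  # store the result back to memory
    ]


for line in compile_sum("c = a + b", {"a": 8, "b": 9, "c": 10}):
    print(line)
```

The programmer writes one line and never mentions registers; targeting a different CPU would mean swapping the instruction templates, not rewriting `c = a + b`.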

At this point, a question arises: when the CPU instruction set is updated, how are high-level languages affected? Generally, new instructions are accompanied by a new assembler and compiler, and the compiler can translate some high-level expressions into the new instructions, so high-level languages themselves usually do not change. Occasionally a high-level language adds new syntax to take advantage of new instructions, but this is rare. If the compiler does not support the new instructions, they can only be used through assembly language.

2.2.1.4 Summary

From the above, we can see that the programs we write are ultimately converted into binary executables that the machine recognizes, which are then loaded into memory and executed instruction by instruction. The progression from machine code to assembly to high-level languages shows the layering and abstraction that pervade computing, in hardware as much as in software.

Finally, I leave you with a question: C# and Java compile not to binary machine code but to intermediate languages, so how do those programs run?

2.2.2 CPU Working Principles

We now know the general structure of modern computers and that the CPU operates according to CPU instructions, so let’s examine the CPU’s structure and how it executes instructions in sequence.

2.2.2.1 CPU Functions

- Instruction Control: Also known as program sequence control, it ensures that the program executes in the prescribed order.

- Operation Control: Generates a series of control signals (micro-operation commands) based on the fetched instruction and sends them to the corresponding components, so that those components work as the instruction requires.

- Timing Control: Some control signals must follow a strict time sequence. For example, when reading data from memory, the required data can be read over the data lines only after the address-line signals have stabilized; otherwise, the data read will be incorrect. Timing control enforces these sequences so that the computer works in an orderly manner.

- Data Processing: Performing arithmetic and logical operations on data.

2.2.2.2 Basic Composition of CPU

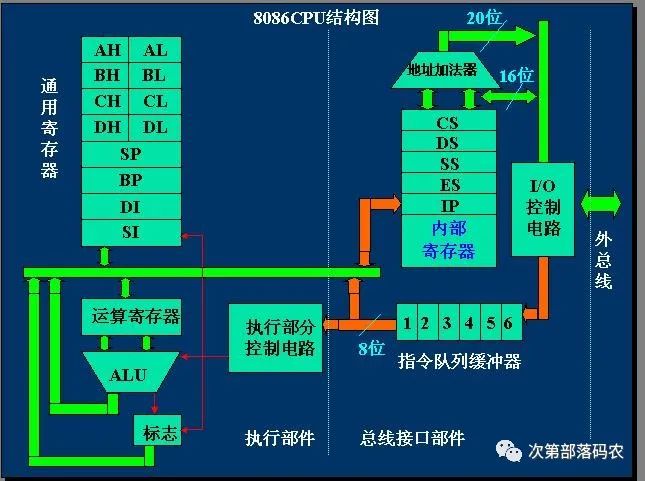

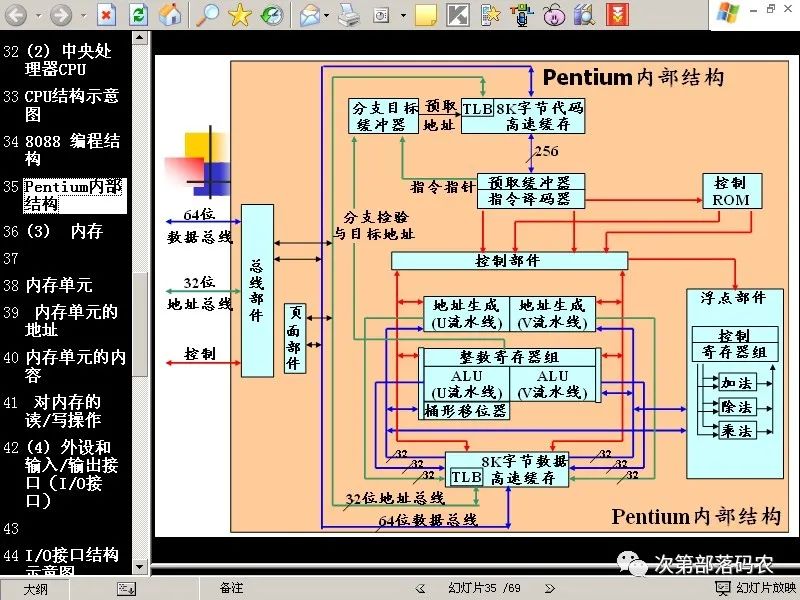

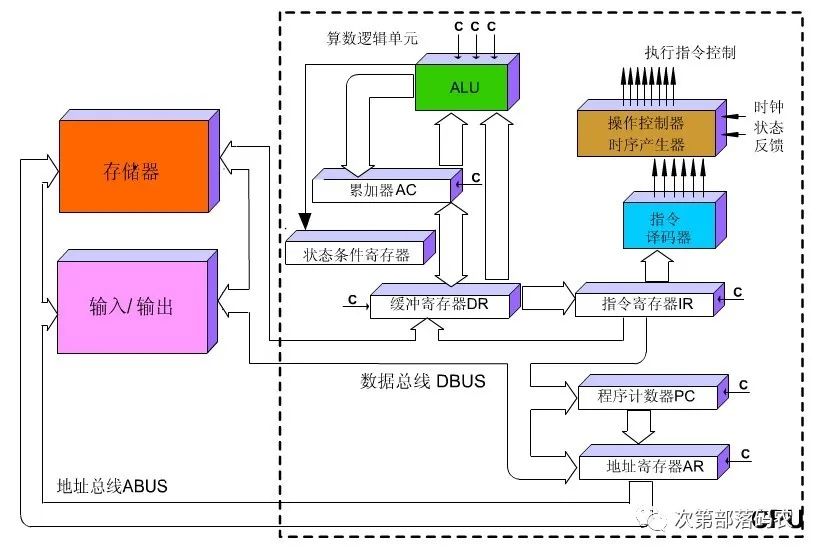

Early CPUs consisted mainly of the arithmetic logic unit and the control unit. With the development of integrated circuits, today’s CPU chips integrate additional functional components to extend the CPU’s capabilities, such as floating-point units, memory management units, caches, and MMX units. The images above include the structures of the 8086 and Pentium CPUs.

For a general-purpose CPU, we only need to focus on its core components: the arithmetic logic unit and the control unit.

1. Composition and Functions of the Control Unit: The control unit consists of the program counter, instruction register, instruction decoder, timing generator, and operation controller. It is the computer’s command center, directing the work of the whole machine. Although the structure of control units varies significantly across computers, they share the following basic functions:

- Fetch instructions from memory and generate the address of the next instruction.

- Analyze instructions, decode or test them, and generate corresponding control signals to initiate specified actions, such as a memory read/write operation, an arithmetic logical operation, or an input/output operation.

- Execute instructions, directing and controlling the flow of data between the CPU, memory, and input/output devices to accomplish various instruction functions.

- Issue micro-operation commands during instruction execution, choosing the appropriate commands according to the nature of each operation.

- Alter the execution order of instructions, allowing for the use of non-sequential structures like branching and looping to enhance programming efficiency.

- Control the input of programs and data and the output of results, facilitating human-computer interaction.

- Handle exceptions and specific requests that may arise during program execution, such as division errors, overflow interrupts, keyboard interrupts, etc.
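Two of these functions, generating the next instruction’s address and altering the execution order, come down to how the program counter is updated. The Python sketch below assumes the 12-bit toy layout used earlier, a 16-word memory that wraps around, and a hypothetical JUMP opcode `0100` whose low 4 bits hold the target address; JUMP is not part of the article’s instruction table.

```python
MEMORY_WORDS = 16  # assumed 4-bit address space of the toy machine
JUMP = 0b0100      # hypothetical opcode, not in the article's instruction table


def next_pc(pc: int, instruction: int) -> int:
    """Return the address of the next instruction to fetch."""
    opcode = (instruction >> 8) & 0xF
    if opcode == JUMP:
        return instruction & 0xF        # branch: target address held in the low 4 bits
    return (pc + 1) % MEMORY_WORDS      # default: the next word in sequence


print(next_pc(2, 0b0011_0000_0001))    # ADD at address 2 -> 3
print(next_pc(5, (JUMP << 8) | 0))     # JUMP 0 at address 5 -> 0 (loop back to the start)
```

Sequential execution is the default path; loops and branches exist only because some instructions are allowed to overwrite the program counter.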

2. Composition and Functions of the Arithmetic Logic Unit: The arithmetic logic unit (ALU), accumulator, data buffer register, and status condition register make up the arithmetic unit, which processes data by performing arithmetic and logical operations. Unlike the control unit, the arithmetic unit is an execution component: every operation it performs is directed by control signals from the control unit. The arithmetic unit has two primary functions:

- Execute all arithmetic operations, such as addition, subtraction, multiplication, division, and related operations.

- Perform all logical operations and logical tests, such as AND, OR, NOT, zero tests, or comparisons between two values.
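A minimal Python sketch of these two functions: one call performs an arithmetic or logical operation and also reports status flags, standing in for the status condition register. The 12-bit word width, the operation names, the two flags shown (zero and carry/borrow), and treating a comparison as a subtraction kept only for its flags are simplifying assumptions; multiplication and division are omitted.

```python
WORD_MASK = 0xFFF  # assumed 12-bit word, as in the toy machine


def alu(op: str, a: int, b: int = 0) -> tuple[int, dict[str, bool]]:
    """Perform one arithmetic or logical operation and report status flags."""
    if op == "ADD":
        raw = a + b
    elif op in ("SUB", "CMP"):   # a comparison is a subtraction kept only for its flags
        raw = a - b
    elif op == "AND":
        raw = a & b
    elif op == "OR":
        raw = a | b
    elif op == "NOT":
        raw = ~a                 # b is ignored
    else:
        raise ValueError(f"unsupported operation: {op}")
    result = raw & WORD_MASK     # keep only the bits that fit in a word
    flags = {
        "zero": result == 0,     # zero test
        # carry out of (ADD) or borrow into (SUB/CMP) the top bit
        "carry": op in ("ADD", "SUB", "CMP") and not 0 <= raw <= WORD_MASK,
    }
    return result, flags


print(alu("ADD", 5, 7))              # (12, {'zero': False, 'carry': False})
print(alu("ADD", 0xFFF, 1))          # (0, {'zero': True, 'carry': True})
print(alu("CMP", 7, 7)[1]["zero"])   # True: the two values are equal
```

The control unit decides which `op` to request; the ALU just computes and sets flags, which later instructions (such as branches) can test.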

2.2.2.3 CPU Workflow

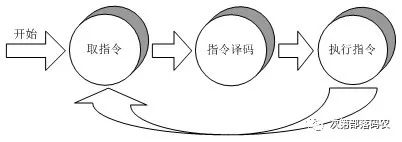

The fundamental task of the CPU is to execute a stored sequence of instructions, that is, a program. Execution consists of repeatedly fetching, analyzing, and executing instructions. The work of almost every Von Neumann CPU can be divided into five stages: fetching the instruction, decoding it, executing it, accessing memory, and writing back the result.
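The loop below is a minimal Python sketch of this cycle for the hypothetical instruction set from 2.2.1.1, running the addition program used in the earlier sketches. The all-zero HALT word, the 12-bit layout, 16 registers, and the rule that ADD writes its result into the first register are assumptions added for illustration. On such a simple machine the execute, memory-access, and write-back stages are folded into one branch per opcode rather than being separate hardware stages.

```python
MEMORY_WORDS = 16  # assumed memory size
HALT = 0b0000      # hypothetical stop instruction, not in the article's table


def run(memory: list[int], max_steps: int = 100) -> list[int]:
    """Run a toy program stored in memory, one instruction at a time."""
    registers = [0] * 16
    pc = 0                                    # program counter
    for _ in range(max_steps):
        # 1. Fetch: read the word the PC points to into the instruction register.
        ir = memory[pc]
        pc = (pc + 1) % MEMORY_WORDS
        # 2. Decode: split the word into opcode and operand fields.
        opcode, a, b = (ir >> 8) & 0xF, (ir >> 4) & 0xF, ir & 0xF
        if opcode == HALT:
            break
        # 3-5. Execute, access memory, and write back, depending on the opcode.
        if opcode == 0b0001:                  # READ [address][register]
            registers[b] = memory[a]          # memory access, write back to register
        elif opcode == 0b0010:                # WRITE [register][address]
            memory[b] = registers[a]          # memory access (store)
        elif opcode == 0b0011:                # ADD [register1][register2]
            registers[a] = (registers[a] + registers[b]) & 0xFFF  # ALU, write back
        else:
            raise ValueError(f"unknown opcode {opcode:04b}")
    return memory


memory = [0] * MEMORY_WORDS
memory[0:4] = [0b0001_1000_0000, 0b0001_1001_0001, 0b0011_0000_0001, 0b0010_0000_1010]
memory[8], memory[9] = 5, 7                   # data lives in the same memory
print(run(memory)[10])                        # 12
```

The loop never checks whether a word is "really" an instruction: if the PC reached address 8, the data word 5 would simply be decoded as one (here it happens to decode as HALT).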

2.2.2.4 Instruction Cycle

-

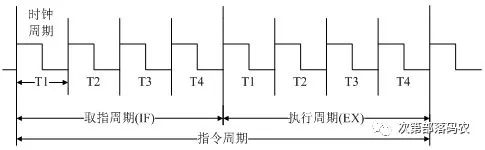

Instruction Cycle: The time required for the CPU to fetch and execute a single instruction is called the instruction cycle. The length of the instruction cycle is related to the complexity of the instruction.

-

CPU Cycle: The shortest time required to read an instruction from main memory defines the CPU cycle. Instruction cycles are often represented in terms of several CPU cycles.

-

Clock Cycle: The clock cycle is the fundamental time unit for processing operations, determined by the machine’s main frequency. One CPU cycle consists of several clock cycles.

From these definitions, the shortest time in which the CPU can fetch and execute any instruction is two CPU cycles: one to fetch the instruction and at least one to execute it. The higher the frequency, the shorter the clock cycle, which in turn shortens the CPU cycle and the instruction cycle and, in theory, speeds up program execution. However, frequency cannot be increased indefinitely, and higher frequencies bring greater power consumption and heat. Technologies such as hyper-threading and pipelining have therefore emerged to raise CPU execution speed in other ways.
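The relationship between the three cycles is simple arithmetic. The Python sketch below computes it under one loudly labeled assumption: each CPU cycle is taken to last 4 clock cycles (the article only says "several"), and the shortest instruction cycle is the two CPU cycles described above. Real pipelined processors overlap instructions and do not follow this textbook model literally.

```python
def cycle_times_ns(freq_hz: float, clocks_per_cpu_cycle: int = 4,
                   min_cpu_cycles: int = 2) -> tuple[float, float, float]:
    """Return (clock cycle, CPU cycle, shortest instruction cycle) in nanoseconds."""
    clock_ns = 1e9 / freq_hz                        # clock cycle = 1 / frequency
    cpu_cycle_ns = clock_ns * clocks_per_cpu_cycle  # one CPU cycle = several clock cycles
    instruction_ns = cpu_cycle_ns * min_cpu_cycles  # fetch (1) + execute (at least 1)
    return clock_ns, cpu_cycle_ns, instruction_ns


print(cycle_times_ns(2e9))   # (0.5, 2.0, 4.0)
print(cycle_times_ns(4e9))   # (0.25, 1.0, 2.0)
```

Doubling the frequency halves every figure, which is exactly why raising the clock speed was the first lever for faster execution.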

2.2.2.5 Timing Generator

Timing Signals: In the high-speed operation of computers, every action of each component must strictly adhere to timing regulations, with no room for error. The coordination of actions among computer components requires timing markers, which are represented by timing signals. The timing signals required for the operation of various parts of the computer are generated uniformly by the timing generator in the CPU.

Timing Generator: The timing signal generator produces control timing signals for the instruction cycle. When the CPU begins to fetch and execute instructions, the operation controller uses the timing signals generated by the timing signal generator to provide various micro-operation timing control signals needed for orderly and rhythmic operation of the computer’s components.
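A timing generator can be pictured as a counter that labels every clock tick with the CPU cycle it belongs to and a beat within that cycle. In the Python sketch below, the four beats per CPU cycle (T0 to T3) and the micro-operations assigned to each beat of a fetch cycle are illustrative assumptions, not the timing of any particular CPU.

```python
from itertools import islice


def timing_signals(clocks_per_cpu_cycle: int = 4):
    """Yield one (CPU cycle, beat) timing signal per clock tick, indefinitely."""
    tick = 0
    while True:
        yield tick // clocks_per_cpu_cycle, f"T{tick % clocks_per_cpu_cycle}"
        tick += 1


# Hypothetical micro-operations the controller might issue on each beat of a fetch cycle.
FETCH_MICRO_OPS = {
    "T0": "PC -> memory address register",
    "T1": "read memory",
    "T2": "memory data -> instruction register",
    "T3": "PC + 1 -> PC",
}

for cpu_cycle, beat in islice(timing_signals(), 4):
    print(cpu_cycle, beat, FETCH_MICRO_OPS[beat])
```

The operation controller does not decide *when* to act on its own; it waits for the beat that the timing generator marks for each micro-operation.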

At this point, a question arises: since both instructions and data are stored in memory, how does the CPU distinguish between instructions and data?

In terms of timing, instructions are fetched in the first CPU cycle of the instruction cycle (the “fetch instruction” stage), while data is fetched in later CPU cycles (the “execute instruction” stage). In terms of destination, a word fetched as an instruction is sent to the instruction register, whereas a word fetched as data is sent to the arithmetic logic unit.
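The Python sketch below traces every memory read of a short toy program and records the phase in which it happens. It reuses the hypothetical 12-bit instruction layout from earlier; the trace labels data destinations simply as "data path", since in this toy READ instruction the operand lands in a register on its way to the ALU. Only READ is modeled as reading data, which is a simplification.

```python
def memory_reads(memory: list[int], steps: int) -> list[tuple[int, str, str]]:
    """Record (address, phase, destination) for each memory read of a toy program."""
    reads = []
    pc = 0
    for _ in range(steps):
        word = memory[pc]                              # fetch phase: treated as an instruction
        reads.append((pc, "fetch", "instruction register"))
        opcode, address = (word >> 8) & 0xF, (word >> 4) & 0xF
        if opcode == 0b0001:                           # READ [address][register]
            reads.append((address, "execute", "data path"))  # execute phase: treated as data
        pc += 1
    return reads


program = [0b0001_1000_0000, 0b0001_1001_0001] + [0] * 6 + [5, 7]
for read in memory_reads(program, 2):
    print(read)
```

Addresses 0 and 1 are only ever read during the fetch phase and addresses 8 and 9 only during the execute phase; the bits themselves carry no label.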

2.2.3 Summary

From the above, we have seen how the CPU works. In short, to execute a program’s instructions in sequence, the controller first obtains the address of the first instruction; after the CPU fetches and executes that instruction, the controller generates the address of the next one. The ALU performs the arithmetic and logical operations. The following Flash animation demonstrates the CPU executing an addition.

CPU Workflow Flash animation: http://218.5.241.24:8018/C35/Course/ZCYL-HB/WLKJ/jy/Chap05/flash-htm/5.6.swf

3. Summary

This article primarily introduces the history of computer development and the structure and workflow of general-purpose CPUs, deepening our understanding of the Von Neumann architecture’s principles of stored programs and sequential execution. Regardless of the hardware’s appearance, the fundamental principles of Von Neumann computers remain the same.

Of course, understanding the basic principles raises more questions, such as how executable files are loaded into memory, how the CPU and memory communicate, how instructions are located based on addresses, what the memory structure looks like, and how the CPU communicates with devices outside of memory. Answers to these questions will be provided in future discussions.