Author: Kelsey Houston-Edwards“Guangming Daily” (November 7, 2024, Page 14)

The advent of future quantum computers will threaten the security of all classical encrypted information, and cryptographers are racing against time to develop encryption algorithms that can confound quantum computers.

Rapidly Developing Quantum Computers

Digital security experts worldwide are focusing on the Quantum Year Clock (Y2Q clock). This clock continuously counts down to the moment when quantum computers are predicted to be able to crack certain important modern encryption algorithms. These algorithms are sophisticated and can encrypt information using public keys, hence they are called public key encryption algorithms. Although the term “encryption algorithm” may sound unfamiliar, it is ubiquitous for those of us who frequently use the internet to transmit information. When shopping online, it ensures the safety of our credit card numbers; when we update phone software, it ensures that the update is from the phone company and not a hacker. Whistleblowers need secure means to contact reporters, and businesses also need secure ways to send confidential documents.

However, quantum computers will render standard public key encryption algorithms ineffective. “This is a very serious situation,” said Bruno Hettner, co-chair of the Cloud Security Alliance’s Quantum Security Working Group. “If quantum computers can be built tomorrow, we will have no way to conduct secure communications anymore.”

Hettner is one of the creators of the Quantum Year Clock. The name Y2Q mimics the “Y2K crisis” of the late 1990s. Many software programs from the 20th century used two-digit year representations, meaning that the year 2000 and 1900 were both represented as “00” in computers. At that time, people anticipated that programs using this date representation would malfunction as the new millennium approached, potentially causing widespread system failures. However, ultimately, no bank crashed at the turn of the year, no power outages occurred, and no planes fell from the sky. The transition of computer programs into the new century was smooth, primarily because businesses and governments rushed to fix the Y2K issue.

When will quantum computers develop to the point of crushing cryptography? No one can say for sure. The current countdown of the Quantum Year Clock ends on April 14, 2030, but this is merely a guess. Most researchers believe this transformation will occur within the next few decades. “The threat to information security is approaching,” Hettner said, and the Quantum Year Clock serves as a warning, “putting a date on it helps keep people alert.”

However, for institutions like governments that require long-term confidentiality, the real deadline is much earlier. If encrypted data sent today is stored long-term, future quantum computers could decode today’s encrypted information. “If you want to keep something confidential for 20 years and you believe a quantum computer capable of breaking the encryption will emerge within 20 years, you need to start addressing the issue today,” said Chris Peikert, a computer scientist at the University of Michigan.

The National Institute of Standards and Technology (NIST) foresaw this threat and held a public competition in 2016 to solicit “post-quantum” or “quantum-resistant” encryption algorithms—algorithms that can be executed on current computers but are strong enough that quantum computers cannot break them. To emphasize the urgency, the U.S. Congress passed the Quantum Computing Cybersecurity Preparedness Act in December 2022, requiring government agencies to develop plans for transitioning to post-quantum encryption algorithms.

NIST’s competition went through four rounds. The first round allowed participants to submit proposals, with groups advancing after each round of reviews able to adjust their proposals for the next round. The new rounds of reviews were stricter. Ultimately, the institute selected a proposal called CRYSTALS-Kyber as the winning proposal for the public key encryption category. Three winning proposals were selected for the digital signature category, which can be used to securely identify the sender of information. The institute is working with researchers to standardize the winning algorithms so that programmers can begin incorporating these algorithms into current encryption systems, laying the groundwork for post-quantum security.

However, it is concerning that three of the four selected algorithms (including CRYSTALS-Kyber) are based on “lattices”—which refer to periodic arrangements of points in high-dimensional space, with some difficult mathematical problems associated with them. Experts believe these problems are hard to solve, but no one can guarantee that future research won’t crack these algorithms.

Evolution of Encryption Algorithms

Looking back at the development of cryptography, the earliest known encryption algorithm involved replacing letters with other symbols. In letters written by Caesar, he would replace each letter with the letter three places further in the Roman alphabet. For example, in English, “a” would be replaced with “d,” “b” with “e,” and so on. To decode a message written by Caesar, you simply need to reverse the process by moving the letters back three places.

Caesar’s substitution cipher has countless variations based on the same principle—children passing notes in class can create their own versions, like replacing “a” with a heart symbol and “b” with a star, etc.—but these are all easily cracked. A teacher confiscating the notes might notice many isolated triangles in the text, indicating a single-letter word, thus inferring that the triangle represents “I” or “a.” Generally speaking, by comparing the frequency of different symbols and relating them to the frequency of letters in common English texts, cryptanalysts can decipher more complex substitution schemes.

The gold standard of modern cryptography—the Advanced Encryption Standard (AES)—is a super upgrade of Caesar’s method. It performs multiple substitutions and shuffles the order like a deck of cards. After sufficient shuffling and substitution, reconstructing the original information becomes very difficult.

To decrypt the original information, one must reverse every step of the shuffling and substitution process. If it were a physical deck of cards, this would be nearly impossible—since the shuffling order is determined by imperceptible movements. However, if a computer shuffles the information according to a precise set of instructions—for example, “put the second item in the fifth position,” then restoring the original order becomes straightforward. The computer simply needs to reverse the operation: “put the fifth item back in the second position.”

Like Caesar’s cipher, AES also employs a symmetric encryption and decryption process. It executes the same process in both forward and reverse, akin to turning a key in a lock. In fact, before the 1970s, cryptography only had symmetric encryption algorithms (also known as symmetric key encryption algorithms). However, these algorithms have significant limitations: the sender and receiver must agree on the encryption and decryption methods before exchanging information, either face-to-face or through another trusted communication channel.

It is hard to imagine a symmetric encryption algorithm escaping this limitation. In 1974, Ralph Merkle, an undergraduate at the University of California, Berkeley, proposed a project in a class assignment that explored a method for “secure communication without prior agreement between two parties.” At the time, he anticipated how absurd the topic sounded and added, “No, I’m not joking.” Merkle envisioned a system where two people could exchange information completely openly, assuming there is always someone eavesdropping. Through public communication, they could construct a scheme for encoding and decoding, which could then be used to send secret information. Even if others were eavesdropping, they would not be able to guess the decoding scheme and crack the secret message. Merkle’s proposal was dismissed by an expert because “the working assumption was unrealistic.”

However, astonishingly, just a few years later, several mathematical papers realized Merkle’s idea. The two algorithms proposed, Diffie-Hellman and RSA (an acronym for the initials of authors Rivest, Shamir, and Adleman), have gained widespread application in modern communications. In fact, even before Merkle’s class assignment, researchers from British intelligence had discovered such coding schemes—public key encryption algorithms—but did not disclose them.

When you check your bank account online, your computer and the bank’s server are communicating: you send your password, and the bank returns your balance information. As this information travels over the internet, others may read it. Therefore, this information must be encrypted.

Most messages are encrypted using symmetric encryption algorithms, such as AES, which can obfuscate information quickly and efficiently. However, first, your computer and the server on the other end must agree on the specific obfuscation method. But this agreement cannot be directly written down, as all communication channels could be monitored. They must use public key encryption algorithms for encryption.

To understand the process of public key encryption algorithms, we can imagine two friends, Alice and Bob, who co-own a bakery and have a very confidential brownie recipe, confidential enough that even Alice and Bob do not know the complete recipe. They each add a secret ingredient known only to themselves. When making the brownies, Alice and Bob take turns starting. Sometimes Alice is responsible for mixing the base ingredients and adding her secret ingredient, then handing the mixed batter to Bob, who adds his ingredient before baking. Other times, Bob is responsible for mixing the base ingredients and adding his ingredient, then handing it to Alice for her to add her secret ingredient and bake.

In the end, Alice and Bob always get the same brownie—but they never have to share the complete recipe with anyone, not even themselves. Even the sly driver Eve, who delivers their goods, cannot guess the complete recipe. (In cryptography, Alice and Bob often represent the two parties exchanging information, while Eve is a homophone for “eavesdropper.”) Eve cannot deduce the secret ingredients because when she delivers the batter, these ingredients are always mixed with the base ingredients—impossible to separate.

Of course, computers do not bake brownies but perform mathematical operations on numbers. In real public key cryptography, the goal is to obtain a shared secret number between both parties—a temporary password that grants access to private conversations. Next, the two computers can use symmetric encryption algorithms (like AES) for encrypted communication.

Different public key encryption algorithms use different methods to create and share temporary passwords. To prevent Eve from knowing their brownie recipe, Alice and Bob would mix the ingredients into the batter before sending it out. Those implementing public key encryption would use mathematical functions to mix secret numbers.

We can roughly understand functions as a type of machine that, upon receiving numerical input, performs some operations and outputs a new number. The functions used in public key encryption are very special: they must easily mix numbers while ensuring that these numbers are difficult to separate. Thus, even if Eve sees the output of the function, she cannot guess what the input secret number is.

For example, the RSA encryption algorithm is based on multiplication functions and their inverse functions (i.e., factorization). Multiplying numbers as a mixing method is straightforward for computers, even if the numbers are large. However, conversely, if it is a large number, factorization becomes very difficult. The factorization problem asks: which numbers multiplied together yield the input number? For example, factorizing 21 yields 3 and 7. To decode a password created by RSA, one must factor a large number. The best method requires screening many numbers to find the specific combination—this takes a long time for computers.

Boaz Barak, a computer scientist at Harvard University, stated: “We are not trying to make encryption algorithms more complex; instead, we have switched to very simple encryption algorithms based on principles that have been studied for thousands of years, such as factorization.”

The Sword of Damocles

In 1994, mathematician Peter Shor, then a researcher at Bell Labs in the U.S., proposed a method that would allow quantum computers to decrypt any ciphertext encrypted using RSA or Diffie-Hellman algorithms. Shor told me he attended a lecture on how to use quantum computers to solve mathematical problems with periodic structures, which led him to think about the “discrete logarithm” problem. Logarithmic functions are the inverse of exponential functions. Finding logarithms is usually straightforward, but discrete logarithms are variations of logarithms in arithmetic operations under modular conditions, similar to performing arithmetic on a clock, such as 3+10=1 under modulo 21. For example, finding Ind5(16) in a space of modulo 21 requires 5x to yield a remainder of 16 when divided by 21. Although the final answer can be found as x=4, the process for a computer to solve this problem is not easy. Just as RSA is based on factorization, the Diffie-Hellman algorithm is based on the discrete logarithm problem. Computer scientists generally believe that traditional computers cannot quickly calculate discrete logarithms, but Shor discovered an algorithm that could quickly solve this on quantum computers. He then explained how to use a similar logic to quickly find the factors of large numbers using quantum computers. These solutions are collectively known as Shor’s algorithm.

Shor was not thinking about how to program a real quantum computer—he was simply listing mathematical formulas on a blackboard. “At the time, quantum computers seemed far off,” Shor said, “so I mainly thought this was a very good mathematical theorem.” But his algorithm has enormous implications for public key encryption algorithms because quantum computers can use it to break nearly all currently used encryption systems.

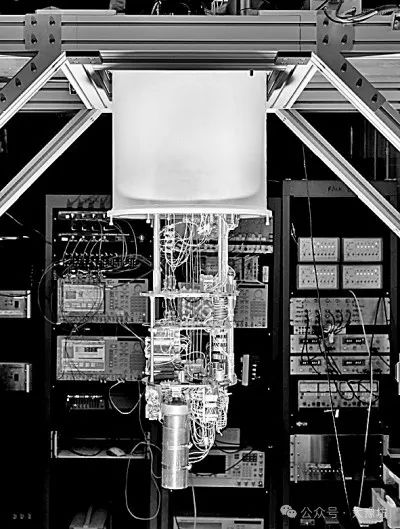

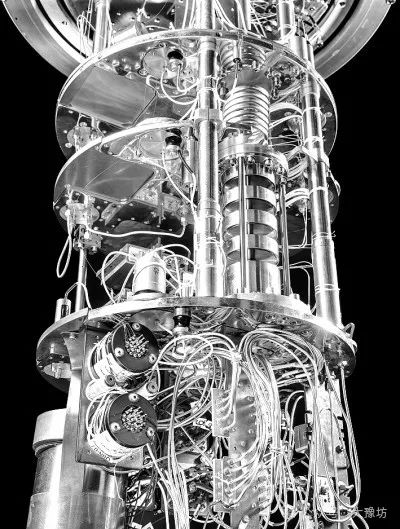

Classical computers process data in long strings of “0” and “1” known as bits, while quantum computers use quantum bits (qubits), where “qu-” is the first two letters of the word “quantum.” Quantum bits can exist in a superposition state—a magical combination of being in both “0” and “1” states simultaneously. When quantum bits are in a superposition state, quantum computers can achieve faster computation speeds on certain problems than classical computers. However, quantum computers are very finicky. Quantum bits must remain in a superposition state throughout the algorithm’s execution, but they can easily “collapse” into a string of “0” and “1” states.

Quantum computers seem formidable—like a giant golden chandelier hanging from the ceiling—but they are not very powerful yet. Scientists have only used a small number of quantum bits for calculations, and the largest number they have factored using Shor’s algorithm on a quantum computer is 21. In 2012, researchers at the University of Bristol in the UK calculated that 21=3×7 using a quantum computer.

Many experts believe that powerful quantum computers capable of breaking RSA and Diffie-Hellman encryption algorithms will emerge in the next few decades, but they quickly admit that this timeline is uncertain. For cryptographers, they must come up with solutions faster than quantum computers, and this uncertainty is quite concerning. Every industry has certain critical tasks that require strict confidentiality. For example, healthcare companies must ensure the security of data used in medical research; power companies must guarantee that the power grid is not compromised by hackers. The most frightening scenario is that if something goes wrong, they will be completely powerless to respond.

Will It Ever Be Safe?

Every public key encryption algorithm is based on a difficult mathematical problem. To ensure confidentiality in the future quantum era, researchers need to find very difficult problems—difficult enough that even quantum computers cannot solve them in a reasonable time. The institute is looking for such public key encryption algorithms that can be widely applied to standard computers and can effectively replace RSA and Diffie-Hellman algorithms. Various interconnected systems and devices used by people should be able to “communicate with this new encryption algorithm and other devices,” said mathematician Lily Chen.

By the 2017 deadline, researchers submitted 82 different post-quantum cryptography proposals. “Quantum cryptography” and “post-quantum cryptography” may sound similar but are entirely different concepts. Quantum cryptography refers to the practice of using quantum phenomena as part of the encryption system. In the following years, researchers tested these algorithms, and NIST experts selected 26 algorithms for the next round.

Public feedback is a crucial part of the competition. Cryptographic systems do not guarantee security, so researchers try to break them to find vulnerabilities. One candidate algorithm used an isogeny-based encryption scheme that had been studied for decades and seemed promising. However, two researchers noted that a mathematical theorem from 25 years ago could be used to break that algorithm. They cracked it using a regular laptop in just an hour.

Experts ultimately selected several algorithms for the finals, most of which are based on lattice problems. “Lattices are a very natural choice,” said Vadim Lyubashevsky, one of the authors of CRYSTALS-Kyber. “People have been studying it in various ways for over twenty years.”

A lattice refers to a set of periodic point arrangements. The simplest lattice can be imagined as a pegboard—points arranged from the vertices of a square grid. Mathematicians believe this set of points forms a structure composed of two basic lines: a horizontal line and a vertical line of equal length. Imagine placing a point at the center of the paper and then walking a few steps along the horizontal or vertical line, marking the final landing point. If you traverse all possible paths, you will find that these points ultimately form a square grid. Different initial line sets will generate different lattices. The lengths of the two lines can vary, or they can be at angles instead of being aligned perfectly. Repeatedly walking many steps with such line sets will also yield periodic point arrangements, but the rows and columns will be offset or at different heights.

Some mathematical problems based on lattices seem simple but are, in fact, very tricky. Suppose I draw two lines on paper and tell you that they form the basis unit of a lattice. Then I randomly draw a point somewhere on the paper. Can you find the nearest lattice point to that point?

You might start by drawing the lattice points from those two lines and ultimately find the nearest one. But this method works only because the paper is two-dimensional. Our spatial imagination is usually limited to three dimensions or lower, but mathematicians can describe lattices in hundreds of dimensions. In such cases, finding the nearest lattice point becomes very difficult.

Researchers use large lattices to construct encryption systems. For example, starting from a 1000-dimensional lattice. From this sea of points, they select one point. The specific location of this point represents the information that needs to be encrypted. Then, starting from that point, they find a new point that is slightly offset from the lattice. Next, you can publicly share the location of that new point without revealing which original secret point it is—finding the nearest lattice point is a very hard mathematical problem. Just as mixing ingredients can protect the secret recipe for brownies, starting from the secret point and deviating slightly can also hide its exact location.

Computer scientists have studied such problems for decades and have reason to believe these problems are very hard to solve. However, when designing new algorithms, cryptographers must also balance security with many other issues, such as the amount of information that needs to be exchanged between two computers, and the computational difficulty of encryption and decryption. In this regard, lattice-based cryptography excels. “Lattices happen to fall in a very reasonable position across the board—none of the aspects are too bad, and none are too good either,” Peikert said.

The problem is that no one can guarantee that lattice-based encryption algorithms will be secure forever. To prevent a breakthrough in mathematical research that solves the underlying problems of encryption algorithms—and thus breaks the passwords—cryptographers must have multiple algorithms in reserve. However, among the four algorithms ultimately selected by NIST, three are based on lattices. Only CRYSTALS-Kyber was chosen as the standardized general public key encryption algorithm.

The competition also had a category for digital signature algorithms, which can ensure the authenticity of the sender of the information and that the information has not been altered. Cryptographer Britta Hale from the U.S. Naval Research Laboratory explained that encryption algorithms answer the question, “Can I ensure that no one else can read this information?” while digital signatures answer, “Can I trust that this data has not been tampered with?” The digital signature algorithms currently in use will also be compromised by Shor’s algorithm. Three digital signature algorithms are being standardized, two of which are based on lattices. Relying heavily on a single type of mathematical problem poses significant risks. No one can guarantee that mathematicians won’t someday crack that problem. Users also have limited freedom of choice—another encryption algorithm might better suit their specific needs. For these reasons, the institute has broadened the standardization process for both categories to explore non-lattice-based algorithms.

Even the selected standard algorithms need adjustments. After the first round of submissions, researchers noticed a minor issue with CRYSTALS-Kyber, which the authors resolved. In subsequent rounds of submissions, they found a way to slightly improve the algorithm. “We changed the parameters and increased security by a few bits,” said Peter Schwabe, one of the authors of CRYSTALS-Kyber. In cryptography, the security level of an algorithm can be measured in “bits”; a security level of n bits means it would take 2^n operations to break it.

The institute is now finalizing the standards, detailing how programmers should implement these algorithms. “Everything on the internet must have very precise, very boring standards, including every little detail. Otherwise, computers cannot communicate with each other,” Lyubashevsky said. Once the standards are established, every computer system will need to switch to post-quantum encryption algorithms. This cannot be accomplished with a simple switch for everyone. Each software company must upgrade protocols, governments need to modify requirements, and even physical hardware may need to be replaced.

The complete transition to post-quantum encryption algorithms could take many years, even decades. Until then, all information encrypted using old methods may be cracked by future quantum computers. If you want to keep something confidential for a long time, the urgent deadline may have already passed. As Hale said, “In the field of cryptography, everyone is watching the clock saying, ‘You’ve missed the deadline.'”

(Author: Kelsey Houston-Edwards)

This version of the article is provided by the Global Science Magazine