The Next Frontier in Optoelectronic Sensor Technology

Compiled by Yuan Wang, Open Source Intelligence Center

Adapted from “Military and Aerospace Electronics”

The next generation of infrared sensors will integrate advanced image processing, artificial intelligence, and standardized architectures to extract more information than ever from digital images.

Optoelectronic sensors that perceive light across various spectral bands enable combat personnel to see objects in the dark, detect disturbed soil that may indicate roadside bombs, identify missile launches, and locate small boats at sea. While these sensors have allowed the U.S. military to “own the night,” the battlefield capabilities of modern optoelectronic sensors are poised for revolutionary improvements that promise to extend detection range, enhance image resolution, reduce size, weight, and power consumption (SWaP), and automatically identify targets through artificial intelligence (AI).

Today’s optoelectronic sensor technology is shrinking pixel pitch, developing new detector materials that can operate at higher temperatures, seeking new ways to extend range and improve image resolution, and reducing the SWaP of the next generation of uncooled sensors.

Perhaps more importantly, optoelectronic sensors are being tightly integrated with digital image processing. This integration not only reduces SWaP, extends range, and improves resolution, but also brings AI and machine learning algorithms into image processing for automatic target recognition, combines spectral bands for multispectral and hyperspectral sensing, and enables adaptable sensor and processor architectures that follow industry standards for rapid technology insertion.

Art Stout, Director of Product Management for AI Solutions and the OEM Team at Teledyne FLIR LLC in Goleta, California, stated, “We are at a turning point, where new sensor designs, tremendous advances in digital signal and image processing, new high-temperature sensor materials, and shrinking image pixels will provide unprecedented sensing capabilities for all kinds of military equipment at night, during the day, and in smoke, haze, and adverse weather conditions.”

Impact of Small Pixels on Resolution and Perception Distance

Chris Bigwood, Vice President of Business Development at Clear Align, an optoelectronic sensor specialist in Eagleville, Pennsylvania, stated, “There is still a lot of work to be done on shrinking pixel pitch. It increases the number of pixels you can put on a single target and improves resolution. It also drives complex optical solutions to achieve the performance people are looking for.”

Bigwood continued, “Smaller pixel pitches can yield extremely large focal plane arrays, providing you with situational awareness and long-range performance. In the past, you had to choose one or the other, but now you can have both long distance and good resolution at the same time.”
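Bigwood's point about putting more pixels on a target follows from simple geometry: each pixel subtends an angle of roughly pixel pitch divided by focal length, so the range at which a target still covers a given number of pixels scales inversely with pitch. The sketch below assumes a small-angle approximation; the target size, focal length, pitches, and six-pixel requirement are hypothetical illustration values, not figures from any vendor.

```python
# Small-angle geometry: how pixel pitch sets the range at which a target
# still covers a given number of pixels. All numeric inputs are hypothetical.

def ifov_rad(pixel_pitch_m: float, focal_length_m: float) -> float:
    """Angle subtended by one pixel (instantaneous field of view), in radians."""
    return pixel_pitch_m / focal_length_m


def pixels_on_target(target_size_m: float, range_m: float,
                     pixel_pitch_m: float, focal_length_m: float) -> float:
    """Pixels spanning the target's critical dimension at the given range."""
    return target_size_m / (range_m * ifov_rad(pixel_pitch_m, focal_length_m))


def range_for_pixels(target_size_m: float, required_pixels: float,
                     pixel_pitch_m: float, focal_length_m: float) -> float:
    """Farthest range at which the target still spans `required_pixels` pixels."""
    return target_size_m / (required_pixels * ifov_rad(pixel_pitch_m, focal_length_m))


TARGET_M, FOCAL_M, REQUIRED_PIXELS = 2.3, 0.100, 6  # hypothetical values
for pitch_um in (12, 8):
    r = range_for_pixels(TARGET_M, REQUIRED_PIXELS, pitch_um * 1e-6, FOCAL_M)
    print(f"{pitch_um} um pitch: {REQUIRED_PIXELS} pixels on target out to {r:,.0f} m")
```

With the same lens, going from 12 µm to 8 µm pitch pushes that range out by a factor of 12/8 = 1.5. Read the other way, a finer pitch can hold range constant with a shorter, lighter lens, which is one route to the SWaP savings discussed below.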

Infrared search and track (IRST) systems in particular can benefit from smaller pixels. “Smaller pixel pitches do not introduce any time delays; they eliminate the dependence on time,” Bigwood said. “In maritime and airborne scenarios, you can achieve all the resolution and field of view without compromising performance.”

However, small pixels are not always the best solution for all optoelectronic sensing challenges. “We are working to improve the sensitivity of small pixels, but sometimes you need larger pixels to capture light,” noted Angelique X. Irvin, CEO of Clear Align.

John Baylouny, COO of Leonardo DRS in Arlington, Virginia, stated that reducing pixel pitch also aids in fusing different types of optoelectronic sensors to provide combat personnel with more situational awareness information.

Baylouny said, “Overall, the trend is toward sensing farther and determining whether there are threats. The third generation of sensors is about seeing farther and recognizing targets from farther away.” This can enhance the performance of weapon sights, allowing combat personnel to engage targets effectively at the ranges at which they can see them.

Baylouny said, “Some trends we are seeing are the ability to fuse these images from several different weapon sights and sensors and observe from any of those sights. We can overlay long-wave thermal imagery with driver vision enhancer (DVE) images, then overlay those with the commander’s or gunner’s sights for a better understanding of the situation at hand.”
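Overlaying long-wave thermal imagery on a DVE image can be illustrated with a per-pixel weighted blend. This is a minimal sketch of generic alpha blending, not Leonardo DRS's fusion method; it assumes the two frames are already co-registered, the same size, and stored as rows of 8-bit intensities, and the `overlay` function and sample frames are hypothetical.

```python
def overlay(base, thermal, alpha=0.5):
    """Blend a thermal frame over a base frame, pixel by pixel.

    Both frames are lists of rows of 8-bit intensities with identical shape and
    are assumed to be co-registered. alpha is the weight of the thermal layer
    (0 = base only, 1 = thermal only); the result stays within 0-255.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if len(base) != len(thermal) or any(len(b) != len(t) for b, t in zip(base, thermal)):
        raise ValueError("frames must have the same shape")
    return [
        [round((1 - alpha) * b + alpha * t) for b, t in zip(brow, trow)]
        for brow, trow in zip(base, thermal)
    ]


dve = [[10, 20], [30, 40]]      # hypothetical DVE frame
lwir = [[200, 0], [100, 250]]   # hypothetical co-registered long-wave frame
print(overlay(dve, lwir, alpha=0.25))
```

In a fielded system the hard parts are registration between sensors with different optics and choosing what to emphasize; the blend itself is the simple last step.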

He noted that another trend enabled by shrinking pixel pitch is the fusion of RF, microwave, and other sensor information into optoelectronic images. “We can fuse RF sensing into these same images and view the battlefield situation in multiple ways. If something flies out, we can fuse RF, sound, and images together.”

Aaron Maestas, Director of Optoelectronic and Infrared Solutions at Raytheon, an RTX business, stated that extending the range of optoelectronic sensors is one of the main benefits of shrinking pixel pitch.

“We value operators, who are experts,” Maestas said. “We want to assist them with AI to bring them into the decision-making loop so they can react faster than before. We are striving to improve platform survivability by identifying threats at greater range. We will be able to see bad actors 50% farther than we can today. The farther away we detect threats, the better our situation becomes.”

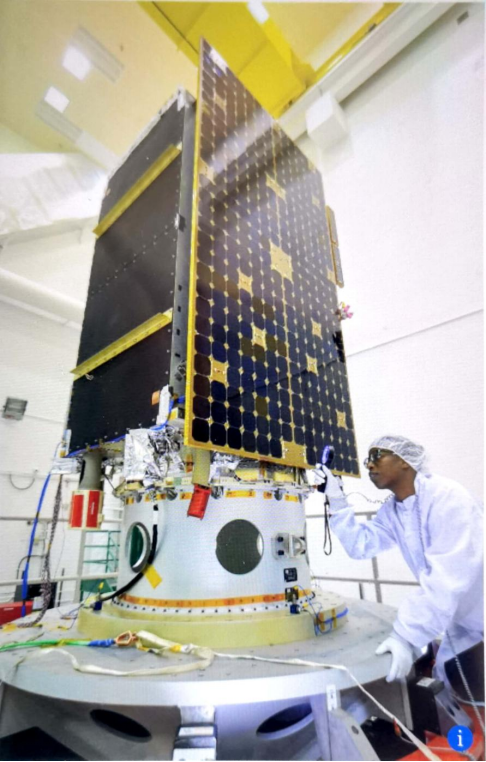

Jeff Schrader, Vice President of Global Situational Awareness at Lockheed Martin Space Systems, pointed out that shrinking the pixel pitch of optoelectronic sensors brings significant SWaP benefits. Schrader focuses particularly on on-orbit optoelectronic sensors, where every gram of weight is critical. Schrader stated, “We must consider the SWaP elements: how much power the sensor needs, and how it impacts what you want the sensor to collect.”

New detector materials are also on the way that will help shrink pixel pitch and SWaP while extending range and improving resolution. Stout from Teledyne FLIR stated, “We are moving from mercury cadmium telluride and indium antimonide to a material called strained-layer superlattice, also known as SLS. These semiconductor materials can operate at higher temperatures.” Sensors made from these materials are also referred to as high operating temperature (HOT) detectors.

These materials help optoelectronic sensors convert photons directly into electrons and are used to manufacture infrared focal plane arrays. Stout said, “The benefit of SLS materials is that you can make pixels smaller than indium antimonide. Smaller pixels can provide better detector resolution and recognition performance. The advantage of this new material is better SWaP, allowing for high-definition imaging with smaller pixels.”

Cooling and Thermal Management

Today, high-performance optoelectronic sensors for high-resolution, long-range applications still require cooling to suppress detector noise and improve the contrast between the object of interest and its background. Coolers are often large, heavy, and expensive, and can become a critical single point of failure in important applications.

Irvin from Clear Align stated, “Innovations in cooler lifespan are crucial. A significant portion of the market is maintaining them; coolers are a major maintenance burden. Coolers without moving parts are in development, representing a trend toward cheaper and smaller coolers.”

The need to address cooling issues is directly linked to the next generation of HOT detectors, which inherently require less cooling than sensors made from older materials. Stout from Teledyne FLIR stated, “If you operate at higher temperatures, the power demands of cryogenic coolers will decrease. This also affects the expected lifespan of coolers. Here at Teledyne FLIR, we have spent five years developing new cooler designs, and we know we will transition to these higher operating temperature coolers.”
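Stout's point that a warmer detector lowers cooler power follows from thermodynamics: the ideal (Carnot) input power needed to pump a heat load Q from a cold stage at T_cold to ambient T_hot is W = Q × (T_hot - T_cold) / T_cold. The sketch below assumes illustrative operating points of about 77 K for a conventional cooled detector and about 150 K for a HOT detector, a 0.5 W heat load, and a 300 K ambient; these are assumptions, not Teledyne FLIR specifications, and real cryocoolers need several times the Carnot minimum.

```python
def carnot_input_power(heat_load_w: float, t_cold_k: float, t_hot_k: float = 300.0) -> float:
    """Minimum (ideal Carnot) power to lift heat_load_w from t_cold_k to t_hot_k.

    Real cryocoolers reach only a fraction of Carnot efficiency, so actual
    input power is higher; the ratio between operating points is the point here.
    """
    if not 0 < t_cold_k < t_hot_k:
        raise ValueError("require 0 < t_cold_k < t_hot_k")
    return heat_load_w * (t_hot_k - t_cold_k) / t_cold_k


# Illustrative (assumed) cold-stage temperatures and heat load.
for t_cold in (77.0, 150.0):
    print(f"{t_cold:.0f} K: {carnot_input_power(0.5, t_cold):.2f} W ideal input power")
```

Under these assumptions the ideal input power falls by roughly a factor of three, which is the mechanism behind the smaller coolers, lighter batteries, and reduced thermal management described in this section.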

The impact of these new HOT detectors on cooler maintenance is significant. Stout stated, “We now have new world-class sensors with a mean time between failures (MTBF) of nearly 30,000 hours. This has a tremendous impact on military users, whether in continuous border-patrol surveillance or other applications. Now we can double the lifespan of coolers.”
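What an MTBF figure means for a unit running around the clock can be estimated under the common simplifying assumption of a constant failure rate (an exponential lifetime model). Mechanical coolers also wear out, so this is only a first-order sketch. The 30,000-hour value is the figure quoted above; the 15,000-hour baseline is a hypothetical stand-in for a cooler with half that lifespan, and the five-year, 24/7 duty cycle is likewise assumed.

```python
import math

HOURS_PER_YEAR = 24 * 365  # continuous, around-the-clock operation (assumed)


def survival_probability(hours: float, mtbf_hours: float) -> float:
    """Probability of running `hours` without failure, assuming a constant failure rate."""
    return math.exp(-hours / mtbf_hours)


def expected_failures(hours: float, mtbf_hours: float) -> float:
    """Expected number of failures (replacements) over `hours` of operation."""
    return hours / mtbf_hours


five_years = 5 * HOURS_PER_YEAR
for mtbf in (15_000, 30_000):  # hypothetical baseline vs. quoted figure
    print(f"MTBF {mtbf:,} h: {expected_failures(five_years, mtbf):.2f} expected "
          f"replacements in 5 years, P(no failure in 1 year) = "
          f"{survival_probability(HOURS_PER_YEAR, mtbf):.2f}")
```

Doubling MTBF halves the expected number of cooler swaps over a deployment, which is where the logistics savings for persistent surveillance come from.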

The materials used in these new HOT detectors not only ease logistics and maintenance but also relax cooling requirements for sensor system designers. “A significant part of this is the reduced cooling demand, so your cooler capacity can be lowered. That translates directly into power, reducing the demand on batteries and other power sources, and the reduced thermal management needs help reduce SWaP,” he added.

In HOT detectors, “coolers are more efficient, with less thermal load, and you have a very compact sensor module,” Stout continued. “In comparison to indium antimonide, power consumption is reduced, size is significantly smaller, and resolution is better.”

Baylouny from Leonardo DRS pointed out that reducing cooling requirements has a significant impact on the design and capabilities of military optoelectronic sensors. “What you see on combat vehicles are optoelectronic sights, one for the gunner and one for the commander,” he said. “These are long-wave cooled detectors with a field of view that varies from wide to narrow. We won the third-generation program, replacing cooled long-wave-only detectors with mid-wave and long-wave sensing on the same focal plane, allowing crews to see in either band. The advantage of long-wave is that you can see through smoke and obscurants to identify targets. Mid-wave offers better resolution and can see farther. We can switch between or overlay both views.”

Uncooled Sensors

Some optoelectronic sensor applications, such as infantry rifle sights, are extremely sensitive to size, weight, power, and cost (SWaP-C). These applications often have to compromise on range and resolution for the sake of smaller size and cost. “Uncooled long-wave sensors represent a huge market because they are cheap,” Irvin from Clear Align noted.

Uncooled solutions must also compensate for their relative weaknesses in range and resolution with larger, faster lenses that gather more light. The design trade-offs for uncooled sensors can be complex. “Using uncooled cameras where cost is a primary consideration also involves SWaP trade-offs,” Stout from Teledyne FLIR said.

Stout said, “An f/1 lens gives you a certain sensitivity. With a cooled solution, you can achieve that with an f/4 lens. An uncooled camera might have a 1-watt load, but you are typically limited in focal length and in how far you can see. Uncooled sensors are designed for driver assistance, not for long-range systems.”
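Stout's comparison of an f/1 uncooled lens with an f/4 cooled lens can be quantified with two standard optics relations: focal-plane irradiance from an extended scene scales as 1/N² for f-number N, and the aperture diameter is D = f/N. The sketch below ignores lens transmission and off-axis falloff, and the 100 mm focal length is a hypothetical value.

```python
def relative_irradiance(f_number_a: float, f_number_b: float) -> float:
    """Factor by which lens A delivers more focal-plane irradiance than lens B.

    For an extended scene, image-plane irradiance scales as 1 / N**2
    (lens transmission and off-axis falloff are ignored).
    """
    return (f_number_b / f_number_a) ** 2


def aperture_diameter_mm(focal_length_mm: float, f_number: float) -> float:
    """Entrance-pupil diameter D = f / N."""
    return focal_length_mm / f_number


print(relative_irradiance(1.0, 4.0))      # 16.0: f/1 gathers 16x the light of f/4
print(aperture_diameter_mm(100.0, 1.0))   # 100.0 mm aperture at f/1
print(aperture_diameter_mm(100.0, 4.0))   # 25.0 mm aperture at f/4
```

One reading of the quote: the uncooled detector needs about 16 times more light to match the cooled one, and at the same focal length that means a lens four times wider. Infrared lens materials such as germanium are costly, so uncooled designs usually stay at short focal lengths, which limits their range.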

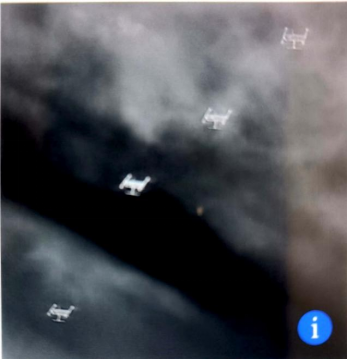

Nonetheless, advances in uncooled detectors are improving resolution and detection range. For example, Teledyne FLIR’s microbolometer camera for the palm-sized Black Hornet 4 drone has improved from a 160 × 120 pixel detector in the Black Hornet 3 to a 640 × 512 pixel detector in the Black Hornet 4.

With such resolution, “the ability to recognize objects at the same range becomes much more significant. This is 16 times the resolution of the previous generation camera. With the same number of pixels, you can see wider, perceive more situational awareness, and also see narrower, detecting further distances.”
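The resolution jump and the "see wider or see narrower" trade-off can be checked with a few lines of arithmetic. The "16 times" figure matches squaring the fourfold gain in columns (640/160); counting every pixel, 640 × 512 has about 17 times as many as 160 × 120. For the field-of-view trade, the sketch assumes a hypothetical 12 µm pitch and two hypothetical focal lengths; these are not Black Hornet specifications.

```python
import math


def horizontal_fov_deg(columns: int, pitch_um: float, focal_mm: float) -> float:
    """Full horizontal field of view of an array `columns` pixels wide."""
    half_width_mm = columns * pitch_um * 1e-3 / 2
    return math.degrees(2 * math.atan(half_width_mm / focal_mm))


def ifov_mrad(pitch_um: float, focal_mm: float) -> float:
    """Angle covered by one pixel in milliradians (um / mm = 1e-3 rad)."""
    return pitch_um / focal_mm


old_pixels, new_pixels = 160 * 120, 640 * 512
print(f"column gain: {640 / 160:.0f}x, pixel-count gain: {new_pixels / old_pixels:.2f}x")

# Same hypothetical 640-column, 12 um array behind a short or a long lens.
for focal in (9.0, 36.0):
    print(f"{focal:.0f} mm lens: HFOV {horizontal_fov_deg(640, 12.0, focal):.1f} deg, "
          f"IFOV {ifov_mrad(12.0, focal):.2f} mrad")
```

The short lens spreads the 640 columns over a wide scene for situational awareness; the long lens narrows the view and shrinks each pixel's angular footprint, which is what lets the same array detect objects farther away.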

Digital Image Processing

The embedded digital signal processing capabilities of today’s optoelectronic sensors are as important as the sensors themselves, if not more so. Advanced processing technologies, such as high-performance central processing units (CPUs), field-programmable gate arrays (FPGAs), general-purpose graphics processing units (GPGPUs), and a next-generation circuit technology known as 3-D heterogeneous integration (3DHI), help lower costs, extend range, improve resolution, and extract more situational awareness information from each digital image.

Combined with AI, machine learning, new forms of processing, and standards-based embedded computing architectures that can be adapted rapidly, these processors let optoelectronic sensor designers extract more useful information from digital images than ever before.

“From a morphological perspective, signal processing aids in identifying and recognizing targets,” Baylouny from Leonardo DRS said. “In applications like passive sensing in radar, signals intelligence (SIGINT), and communications intelligence (COMINT), it can help fuse information together. We want to identify signals not for intelligence but for understanding.”

The goal is to extract as much useful information as possible from optoelectronic images while eliminating noise. Baylouny said, “These sensors require very high-end image and signal processing to achieve the highest possible signal-to-noise ratio to enhance signals and eliminate noise.”
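One of the simplest ways signal processing raises signal-to-noise ratio is temporal averaging: for a static scene with independent noise in each frame, averaging N frames improves SNR by roughly the square root of N. This is a generic textbook technique, not a description of Leonardo DRS's processing, and the flat synthetic scene, noise level, and frame count are hypothetical.

```python
import random
import statistics


def snr(samples, true_value):
    """True signal level divided by the standard deviation of the error."""
    return true_value / statistics.pstdev([s - true_value for s in samples])


def average_frames(frames):
    """Pixel-wise mean of co-registered frames given as equal-length lists."""
    return [sum(values) / len(values) for values in zip(*frames)]


rng = random.Random(0)  # fixed seed so the run is repeatable
SIGNAL, SIGMA, N_PIXELS, N_FRAMES = 10.0, 5.0, 20_000, 16  # hypothetical values


def noisy_frame():
    """A flat scene at SIGNAL plus independent Gaussian noise in every pixel."""
    return [SIGNAL + rng.gauss(0.0, SIGMA) for _ in range(N_PIXELS)]


single = noisy_frame()
stacked = average_frames([noisy_frame() for _ in range(N_FRAMES)])
print(f"single frame SNR ~ {snr(single, SIGNAL):.2f}")
print(f"{N_FRAMES}-frame average SNR ~ {snr(stacked, SIGNAL):.2f}")
```

Expect a single-frame SNR near 2 and an averaged SNR near 8, the √16 = 4 improvement. Averaging blurs anything that moves, which is why real pipelines pair it with motion compensation or use it only on static regions.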

Signal processing can also help system designers fuse information not only from optoelectronic sensors but also from RF and even acoustic sensors to create a rich, deep picture of the battlespace. “A soldier on the battlefield has a radio, and when he presses a button, he can feel the RF signal.”
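A textbook rule for fusing the same quantity measured by different sensors is inverse-variance weighting: each estimate is weighted by 1/variance, and the fused variance is smaller than any single input. The sketch below assumes independent, unbiased Gaussian errors and uses hypothetical bearing estimates from an EO/IR, an RF, and an acoustic sensor; it is not any company's fusion algorithm.

```python
def fuse_estimates(estimates):
    """Inverse-variance weighted fusion of independent, unbiased estimates.

    `estimates` is a list of (value, variance) pairs, for example bearings in
    degrees. Returns (fused_value, fused_variance). Angle wrap-around at
    0/360 degrees is ignored, so bearings must be far from that boundary.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Hypothetical bearings to the same emitter: (degrees, variance in deg^2).
eo_ir = (41.8, 0.04)     # precise angle from the imaging sensor
rf = (43.0, 1.00)        # coarser RF direction finding
acoustic = (40.0, 4.00)  # coarsest acoustic bearing
print(fuse_estimates([eo_ir, rf, acoustic]))
```

The fused bearing lands close to the precise EO/IR value, yet the RF and acoustic inputs still tighten the result and would carry the estimate if the imaging sensor lost track.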

Signal processing can also help on-orbit optoelectronic sensors, such as those from Lockheed Martin, make difficult predictions, such as where a maneuvering hypersonic missile will strike, and fuse information from long-wave infrared, mid-wave infrared, short-wave infrared, RF, radar, and other sensors.

How signal processing handles sensor data is an area where many technological innovations are coming into play. “We are seeing a general shift from general-purpose computing to vector processing, and now we are seeing and designing systems on chip (SoCs) that have vector processing capabilities built in,” Baylouny from Leonardo DRS said. “We are seeing automated target recognition and image recognition software on the sensors.”

Some of today’s SoC processors integrate GPGPU, CPU, and FPGA processing all in one device. Baylouny noted, “The development here is for autonomous control.”

Stout from Teledyne FLIR stated that over the past five to six years his company has embedded processors capable of running computationally intensive image-processing algorithms that take raw sensor output and enhance it to produce better night images. “We now have chips with powerful computing capabilities. Five years ago, you had to run these algorithms on a server, but now you can run them directly in products like drones or thermal weapon sights, frames, or sight solutions. We accomplish all these functions in a very small and affordable package.”
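Stout does not say which algorithms Teledyne FLIR runs, but a standard first step in enhancing raw infrared focal-plane output is two-point nonuniformity correction (NUC): each pixel's gain and offset are calibrated against two uniform reference sources so that all pixels respond identically. The sketch assumes a linear pixel response; the three-pixel array and its mismatched gains and offsets are hypothetical.

```python
# Two-point nonuniformity correction (NUC), assuming each pixel responds
# linearly: raw = gain * scene + offset.

def two_point_nuc(cold_ref, hot_ref):
    """Per-pixel (gains, offsets) from two flat-field reference frames.

    cold_ref and hot_ref are raw frames (flat lists of counts) recorded while the
    array views uniform cold and hot sources. Each pixel is remapped so that its
    output equals the array-average output at both reference levels.
    """
    target_cold = sum(cold_ref) / len(cold_ref)
    target_hot = sum(hot_ref) / len(hot_ref)
    gains, offsets = [], []
    for c, h in zip(cold_ref, hot_ref):
        if h == c:
            raise ValueError("pixel shows no response between references (dead pixel)")
        g = (target_hot - target_cold) / (h - c)
        gains.append(g)
        offsets.append(target_cold - g * c)
    return gains, offsets


def apply_nuc(raw, gains, offsets):
    """Apply the per-pixel linear correction to a raw frame."""
    return [g * r + o for r, g, o in zip(raw, gains, offsets)]


# Hypothetical three-pixel array with mismatched gains and offsets.
true_gain = [1.0, 1.2, 0.9]
true_offset = [100.0, 80.0, 130.0]


def simulate(scene_level):
    """Raw counts each pixel would report for a uniform scene."""
    return [g * scene_level + o for g, o in zip(true_gain, true_offset)]


gains, offsets = two_point_nuc(simulate(1000.0), simulate(3000.0))
raw = simulate(2000.0)                 # uniform scene, nonuniform raw output
print(raw)                             # pixels disagree
print(apply_nuc(raw, gains, offsets))  # pixels agree, up to floating-point rounding
```

Real detectors drift and are not perfectly linear, so cameras typically refresh the offset term periodically (for example with a shutter) and may use more calibration points.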

Stout mentioned that, for example, NVIDIA Corp. in Santa Clara, California, launched the Jetson Orin processor this year, capable of 200 trillion operations per second.

Advanced signal processing plays a crucial role in other enabling technologies like multispectral and hyperspectral sensing, where the processors fuse information from different spectral bands to reveal details that a single band might miss. “The digital detectors we use in the systems use 3DHI to integrate multilayer sensors in the same package,” Maestas from Raytheon said.

“In the future, we will focus on event-driven sensing, so only pixels important to the user will be sent, turning this information into knowledge. Event-driven sensors are already on the roadmap; today, they focus on very fast-moving objects. Our first batch of cameras is now in integration and will be integrated into military equipment in the next three to five years.”
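Event-driven (neuromorphic) sensors report only pixels whose brightness changes, rather than whole frames. Real event sensors do this asynchronously in pixel hardware; the sketch below is a simplified frame-difference emulation, assuming a change is reported when the log intensity moves by at least a threshold. The threshold, the `eps` offset, and the sample frames are hypothetical.

```python
import math


def events_from_frames(prev, curr, threshold=0.2, eps=1.0):
    """Emit (pixel_index, polarity) where log intensity changed by >= threshold.

    prev and curr are flat lists of pixel intensities; eps avoids log(0).
    Polarity is +1 for brighter and -1 for darker. A static scene yields no events,
    so only the pixels that matter are sent downstream.
    """
    events = []
    for i, (p, c) in enumerate(zip(prev, curr)):
        delta = math.log(c + eps) - math.log(p + eps)
        if abs(delta) >= threshold:
            events.append((i, 1 if delta > 0 else -1))
    return events


frame0 = [100, 100, 100, 100, 100, 100]
frame1 = [100, 100, 180, 100, 60, 101]  # hypothetical: a small object moves
print(events_from_frames(frame0, frame1))  # [(2, 1), (4, -1)]
```

The one-count flicker at index 5 is suppressed, while the object's leading and trailing edges produce events, so bandwidth scales with scene activity rather than with array size.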

Artificial Intelligence and Machine Learning

One of the most revolutionary improvements in optoelectronic sensors will involve artificial intelligence and machine learning. These enabling technologies can not only help sharpen images, extend range, and detect hard-to-find objects but also help the sensors themselves pick out important information.

“Operators do not need to keep their eyes glued to the target,” Maestas from Raytheon said. AI helps to identify situations that differ from the norm. It can report, for example, 80% confidence that a target is a tank, a surface-to-air missile site, or another type of threat.

Similarly, AI can help detect targets that the human eye might miss, such as a small boat on the vast ocean. “The ocean is immense, and people do not realize how vast it is,” Maestas said. “With AI, we can scan the entire visible ocean surface and find objects that are not waves, whether they are trawlers, commercial vessels, or naval destroyers.”
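Before any classifier decides whether a contact is a trawler or a destroyer, something has to flag "objects that are not waves." The sketch below is not AI; it is a classical statistical baseline, assumed here for illustration, that flags pixels far outside the sea-clutter distribution using the median and median absolute deviation (MAD) so that a few bright objects do not inflate the threshold. The clutter statistics, threshold `k`, and boat pixel are hypothetical.

```python
import random
import statistics


def flag_anomalies(pixels, k=5.0):
    """Indices of pixels that stand out from background clutter.

    The median and the median absolute deviation (MAD) serve as robust
    estimates of the clutter level and spread. k is how many robust standard
    deviations count as "not a wave".
    """
    med = statistics.median(pixels)
    mad = statistics.median([abs(p - med) for p in pixels])
    sigma = 1.4826 * mad  # scales MAD to a standard deviation for Gaussian clutter
    if sigma == 0:
        return [i for i, p in enumerate(pixels) if p != med]
    return [i for i, p in enumerate(pixels) if abs(p - med) > k * sigma]


rng = random.Random(1)  # fixed seed for repeatability
sea = [rng.gauss(50.0, 3.0) for _ in range(1000)]  # hypothetical wave clutter
sea[123] = 95.0  # hypothetical small warm boat
print(flag_anomalies(sea))
```

Real sea clutter is spatially correlated and far from Gaussian, which is one reason learned detectors outperform fixed thresholds; a baseline like this mainly shows how a wide search area is reduced to a short list of candidates for an AI classifier or an operator.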

Embedding AI into optoelectronic sensors can not only help identify small targets but also help reduce the SWaP of deployed systems. Irvin from Clear Align stated, “AI and imaging systems will improve these sensors, making them smaller, denser, and truly processing on the edge inside the camera; I see innovation happening. We are processing inside the chip for clarity, and we will achieve this in the field of AI.”

AI can let combat personnel detect targets in real time, with very low latency and without power or thermal management penalties. “AI can alleviate the operator’s requirement to identify targets and assist or alleviate some of the operator’s focus.” Moreover, AI algorithms can help filter out noise caused by conditions such as atmospheric turbulence, reducing motion and distortion in images.

One challenge AI faces, especially in the military, is training algorithms with the right data. Data representing many of the military conditions needed to train AI is rarely available. “Military targets of interest are not easy to obtain, so how do you collect data on weather or targets of interest to get a robust model that can be deployed for target detection or situational awareness?” Stout asked.

To address this, companies like Teledyne FLIR are building computer models and generating synthetic data to train AI systems. Stout stated, “We can create wireframes and convert them into infrared images and view that vehicle from every angle and distance. Introducing synthetic data into training is a significant advancement.”

AI can also help humans absorb useful information from the vast amounts of data collected by today’s sensors. “The trend is toward increasingly high dynamic range and enhanced features provided to operators through AI and machine learning,” Baylouny from Leonardo DRS stated. “The dynamic range of these sensors can present information beyond what humans can consume, so we need to provide the best detectability for humans. The advantage of this work is automatic target recognition based on features and dynamics. Algorithm libraries are currently being established to automatically identify targets of interest.”
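Baylouny's point that sensor dynamic range exceeds what humans can consume is usually handled by compressing high-bit-depth thermal data (for example 14-bit counts) into an 8-bit display. A common generic approach is histogram equalization, which spends display levels where the scene actually has pixels. This is a textbook technique, not a description of Leonardo DRS's processing; the sample counts are hypothetical.

```python
from collections import Counter


def equalize_to_8bit(raw):
    """Map high-bit-depth counts to 0-255 by histogram equalization.

    Each output level is proportional to the cumulative fraction of pixels at
    or below the input value, so closely spaced but populated values get
    spread across the display range.
    """
    n = len(raw)
    counts = Counter(raw)
    cdf, running = {}, 0
    for value in sorted(counts):
        running += counts[value]
        cdf[value] = running
    cdf_min = cdf[min(counts)]
    if n == cdf_min:  # flat image: every pixel has the same value
        return [0] * n
    return [round(255 * (cdf[v] - cdf_min) / (n - cdf_min)) for v in raw]


# Hypothetical 14-bit counts: a narrow cluster of warm values plus one hot spot.
frame = [8000, 8001, 8002, 8003, 16000]
print(equalize_to_8bit(frame))  # [0, 64, 128, 191, 255]
```

A linear 14-bit-to-8-bit scaling would map 8000 through 8003 to nearly the same gray level and hide the subtle contrast; equalization separates them at the cost of distorting absolute temperature differences, which is why radiometric work keeps the raw counts.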

However, AI and machine learning have broader benefits than just automating sensor and processing tasks. Schrader from Lockheed Martin stated, “We can design things using digital twins. We can have digital versions of sensors and payloads to accelerate development and predict failures before they occur.”

Predicting failures and other issues in optoelectronic sensors is also part of AI and machine learning’s work. “We can tell the system how it is performing,” Schrader said. “Lockheed Martin is working with Intel to develop new types of processors that enable distributed command and control, where onboard processors can know what other systems are doing. They must communicate with each other and can operate without one another when necessary, making our systems smarter.”