
The workqueue is an important asynchronous execution mechanism in the Linux kernel, widely used in driver development, post-interrupt processing, and delayed processing scenarios. This article will systematically analyze this mechanism in terms of its usage, scheduling principles, and performance characteristics.

01

Introduction to Workqueues

In kernel development, operations that may sleep (such as memory allocation with GFP_KERNEL, taking a mutex, or blocking I/O) must not be executed in interrupt context. In such cases, a workqueue can hand the task over to process context for asynchronous execution, which keeps interrupt handlers short and avoids blocking other high-priority work.

Work items are executed later by kernel worker threads (kworker) in process context, so their handlers are allowed to block or sleep.

02

Usage of Workqueues

2.1 Simple Usage (System Default Workqueue)

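#include <linux/workqueue.h>

static void work_handler(struct work_struct *work)
{
    // Actual processing logic; runs in process context and may sleep
}

// Initialize the work item once, statically, and bind it to its handler.
// Calling INIT_WORK() on every trigger could reinitialize an item that is still queued.
static DECLARE_WORK(my_work, work_handler);

void trigger_work(void)
{
    schedule_work(&my_work); // Asynchronous scheduling on the system default workqueue
}

Suitable for simple tasks that do not require special control.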
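Handlers usually need more than the bare work_struct pointer. A common idiom is to embed the work item in a driver-private structure and recover the enclosing structure with container_of(). The sketch below is a user-space model of that idiom so that it compiles outside the kernel: struct work_item, struct my_device, and run_work() are hypothetical stand-ins, and the container_of macro is a simplified version of the kernel's.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified user-space version of the kernel's container_of(). */
#define container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

/* Hypothetical stand-in for struct work_struct. */
struct work_item {
    void (*func)(struct work_item *work);
};

/* Hypothetical driver state with the work item embedded in it. */
struct my_device {
    int pending_events;
    int handled_events;
    struct work_item work;
};

/* The handler only receives the work pointer and walks back to the device. */
void my_device_work_handler(struct work_item *work)
{
    struct my_device *dev = container_of(work, struct my_device, work);

    dev->handled_events += dev->pending_events;
    dev->pending_events = 0;
}

/* Stand-in for a kworker invoking the handler of a work item. */
void run_work(struct work_item *work)
{
    assert(work != NULL && work->func != NULL);
    work->func(work);
}
```

In a real driver the same shape applies: the structure embeds a struct work_struct, and the handler calls container_of(work, struct my_device, work) to reach its private data.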

2.2 Custom Workqueue

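#include <linux/errno.h>
#include <linux/workqueue.h>

static struct workqueue_struct *wq;
static struct work_struct my_work;

static void work_handler(struct work_struct *work)
{
    // Actual processing logic
}

int init_my_workqueue(void)
{
    // Unbound, high-priority queue with at most one work item in flight
    wq = alloc_workqueue("my_wq", WQ_UNBOUND | WQ_HIGHPRI, 1);
    if (!wq)
        return -ENOMEM; // Allocation can fail and must be checked

    INIT_WORK(&my_work, work_handler);
    queue_work(wq, &my_work);
    return 0;
}

A custom workqueue supports setting scheduling policies, concurrency limits, etc., which makes it more suitable for complex scenarios. Release it with destroy_workqueue(wq) once it is no longer needed.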

2.3 Delayed Workqueue

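static struct delayed_work dwork;

// In an init path, after wq has been allocated:
INIT_DELAYED_WORK(&dwork, work_handler);

// Execute after a delay of 500 ms
queue_delayed_work(wq, &dwork, msecs_to_jiffies(500));

Used for timed processing or to avoid frequent scheduling within a short period. The handler receives a pointer to dwork.work; to_delayed_work() converts it back to the struct delayed_work.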
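The delay is expressed in jiffies, and the length of one jiffy depends on the kernel's HZ setting, which is why the snippet converts from milliseconds. The following user-space sketch shows the arithmetic for the common case where HZ divides 1000 evenly. ms_to_ticks() is a hypothetical helper, not a kernel function; it only mirrors the round-up behavior of msecs_to_jiffies() for that case.

```c
#include <assert.h>

/*
 * Hypothetical helper: convert milliseconds to timer ticks for a tick rate
 * hz (ticks per second). Assumes hz > 0 and that 1000 is a multiple of hz,
 * e.g. 100, 250 or 1000. The result is rounded up to whole ticks.
 */
unsigned long ms_to_ticks(unsigned long ms, unsigned long hz)
{
    unsigned long ms_per_tick;

    assert(hz > 0 && 1000 % hz == 0);
    ms_per_tick = 1000 / hz;
    return (ms + ms_per_tick - 1) / ms_per_tick;
}
```

With a tick rate of 250, a 500 ms delay becomes 125 ticks; with a tick rate of 100, a 1 ms request is rounded up to one full tick, so very short delays are coarser than they look.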

03

Scheduling Principles of Workqueues

The essence of workqueues is to insert the work_struct structure into the task queue of a worker thread pool, which is then scheduled for execution by the corresponding kworker thread at an appropriate time.

3.1 Overview of Core Structures

- struct work_struct: Represents a single work item, i.e. a function to be executed asynchronously.

- struct workqueue_struct: Represents a workqueue, which developers can create themselves.

- kworker: The kernel thread that actually takes work items from its pool and runs them.

- worker_pool: The thread pool structure that manages multiple kworkers and the pending work items waiting for them.

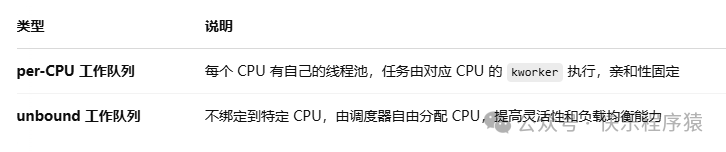

3.2 Two Types of Workqueues

3.3 Task Scheduling Process

- Call schedule_work() / queue_work() to submit the work item;

- The kernel inserts the work_struct into the work list of the worker pool;

- If there is an idle kworker, it is woken up to execute the item;

- Otherwise, the pool creates a new kworker, or the item waits until a running kworker becomes free;

- The kworker thread calls process_one_work(), which invokes the work item's handler function.

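Note: Workqueues guarantee that the same work_struct does not run on several kworkers at the same time. Submitting a work item that is still pending (queued but not yet started) has no effect, and queue_work() returns false in that case.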
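The submission flow and the note above can be modeled in user space as a FIFO plus a per-item pending flag. This is only a sketch of the visible behavior, not the kernel's data structures: struct model_work, struct model_pool, and the model_* functions are hypothetical names, and the fixed-size ring buffer replaces the kernel's linked lists. In this model a submission is rejected only while the item is still waiting in the queue; once a worker has picked it up, it can be queued again.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MODEL_QUEUE_CAP 8  /* hypothetical fixed capacity for the sketch */

struct model_work {
    void (*func)(struct model_work *work);
    bool pending;  /* set while queued, cleared just before func runs */
    int runs;      /* how many times the handler has executed */
};

struct model_pool {
    struct model_work *items[MODEL_QUEUE_CAP];
    size_t head, count;
};

/* Returns false (and does nothing) if the item is already pending. */
bool model_queue_work(struct model_pool *pool, struct model_work *work)
{
    if (work->pending)
        return false;
    assert(pool->count < MODEL_QUEUE_CAP);
    work->pending = true;
    pool->items[(pool->head + pool->count) % MODEL_QUEUE_CAP] = work;
    pool->count++;
    return true;
}

/* Stand-in for a kworker picking the oldest item and running its handler. */
bool model_process_one(struct model_pool *pool)
{
    struct model_work *work;

    if (pool->count == 0)
        return false;
    work = pool->items[pool->head];
    pool->head = (pool->head + 1) % MODEL_QUEUE_CAP;
    pool->count--;
    work->pending = false;  /* from here on the item may be queued again */
    work->func(work);
    return true;
}

/* Example handler: just counts its own executions. */
void model_count_run(struct model_work *work)
{
    work->runs++;
}
```

Queuing the same item twice before a worker runs therefore results in a single execution, which is worth keeping in mind when a handler is expected to run once per event.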

3.4 CPU Affinity and Scheduling Policies

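- Each kworker thread has a CPU affinity that determines which CPUs it may run on; kworkers of a bound (per-CPU) pool are pinned to that CPU.

- A WQ_UNBOUND queue is served by worker pools that are not tied to a single CPU, so its kworkers can be placed more flexibly by the scheduler.

- WQ_HIGHPRI routes work items to a high-priority worker pool, whose kworkers run at an elevated nice level; it is intended for time-sensitive tasks.

- WQ_CPU_INTENSIVE marks work items as CPU-intensive, as is typical for data processing or computation. Such items do not count toward the concurrency level of a per-CPU pool, so they do not hold back other work items queued there.

- WQ_MEM_RECLAIM must be set on any workqueue that may be used in memory reclaim paths. It guarantees forward progress under memory pressure by reserving a rescuer thread for the queue; it does not raise scheduling priority.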
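To make the effect of the binding and priority flags more concrete, here is a small user-space model of which pool a work item ends up in. It is a deliberate simplification: the MODEL_WQ_* values and struct model_pool_id are hypothetical and are not the kernel's definitions, and real unbound pools are distinguished by further attributes (such as their CPU mask) that this sketch collapses into a single "no CPU" case.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical flag values for the model; not the kernel's definitions. */
enum model_wq_flags {
    MODEL_WQ_UNBOUND = 1 << 0,
    MODEL_WQ_HIGHPRI = 1 << 1,
};

struct model_pool_id {
    int cpu;       /* -1 means "not bound to a particular CPU" */
    bool highpri;  /* served by the high-priority pool */
};

/* Pick the pool for a work item from the queue flags and the submitting CPU. */
struct model_pool_id model_select_pool(unsigned int flags, int submitting_cpu)
{
    struct model_pool_id id;

    assert(submitting_cpu >= 0);
    id.cpu = (flags & MODEL_WQ_UNBOUND) ? -1 : submitting_cpu;
    id.highpri = (flags & MODEL_WQ_HIGHPRI) != 0;
    return id;
}
```

A bound queue keeps the item on the submitting CPU, which favors cache locality, while an unbound queue gives up that locality in exchange for freedom of placement.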

You can check the distribution of kworkers in the current system as follows:

ps -eLo pid,psr,comm | grep kworker

3.5 Concurrency

By default, a worker pool allows multiple kworkers to run simultaneously. How many work items of a given workqueue may execute at the same time is limited by max_active, which is passed as the third argument to alloc_workqueue() (for per-CPU workqueues the limit applies per CPU; passing 0 selects the default). For example:

alloc_workqueue("my_wq", WQ_UNBOUND, 4); // Allow up to 4 work items of this queue to execute concurrently

This means that up to 4 work items submitted to this queue can be executed concurrently, while the remaining ones wait until a slot becomes free.
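The accounting behind this limit can be sketched in a few lines of user-space C. The model below only tracks counters; struct model_wq, model_submit(), and model_complete() are hypothetical names, and the kernel's real bookkeeping is more involved.

```c
#include <assert.h>

/* Hypothetical model of one workqueue's concurrency accounting. */
struct model_wq {
    int max_active;  /* limit on items executing at once */
    int nr_active;   /* items currently executing */
    int nr_waiting;  /* items queued but held back by the limit */
};

/* Submit one item: it starts right away only if the limit allows it. */
void model_submit(struct model_wq *wq)
{
    assert(wq->max_active > 0);
    if (wq->nr_active < wq->max_active)
        wq->nr_active++;
    else
        wq->nr_waiting++;
}

/* One running item finishes: a waiting item, if any, takes over its slot. */
void model_complete(struct model_wq *wq)
{
    assert(wq->nr_active > 0);
    if (wq->nr_waiting > 0)
        wq->nr_waiting--;  /* slot is handed over, nr_active stays the same */
    else
        wq->nr_active--;
}
```

Submitting six items to a model queue with max_active set to 4 leaves four running and two waiting, and each completion lets one waiting item start.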

04

Performance Analysis and Tuning

Although workqueues are easy to use, they may encounter performance issues such as high latency, untimely processing, and frequent thread switching in high-load or real-time scenarios. Here are some typical analysis methods and optimization techniques.

4.1 Scheduling Delay Analysis

You can analyze the scheduling and execution delays of work items using ftrace or perf:

Using ftrace:

echo function_graph > /sys/kernel/debug/tracing/current_tracer

echo queue_work_on > /sys/kernel/debug/tracing/set_ftrace_filter

cat /sys/kernel/debug/tracing/trace_pipe

schedule_work() and queue_work() are inline wrappers, so the filter targets queue_work_on(), which they call.

Using perf to observe kworker activity:

perf top -p $(pgrep -d, kworker)

Observe whether a large amount of CPU time is consumed by kworkers.
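Once timestamps are available, for example from the workqueue trace events (workqueue_queue_work, workqueue_execute_start, workqueue_execute_end) or from instrumentation added to a driver, the two numbers of interest are how long an item waited before it started and how long its handler ran. The following user-space sketch summarizes such samples; struct model_sample, struct model_latency, and model_summarize() are hypothetical names, and the timestamps are assumed to be in nanoseconds.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-item timestamps in nanoseconds, e.g. taken from a trace. */
struct model_sample {
    uint64_t queued_ns;    /* when the item was submitted */
    uint64_t started_ns;   /* when the handler began running */
    uint64_t finished_ns;  /* when the handler returned */
};

struct model_latency {
    uint64_t max_wait_ns;  /* worst queueing delay */
    uint64_t max_run_ns;   /* longest handler execution */
    uint64_t avg_wait_ns;  /* mean queueing delay (integer division) */
};

struct model_latency model_summarize(const struct model_sample *s, size_t n)
{
    struct model_latency out = {0, 0, 0};
    uint64_t total_wait = 0;

    for (size_t i = 0; i < n; i++) {
        uint64_t wait, run;

        assert(s[i].queued_ns <= s[i].started_ns);
        assert(s[i].started_ns <= s[i].finished_ns);
        wait = s[i].started_ns - s[i].queued_ns;
        run = s[i].finished_ns - s[i].started_ns;
        if (wait > out.max_wait_ns)
            out.max_wait_ns = wait;
        if (run > out.max_run_ns)
            out.max_run_ns = run;
        total_wait += wait;
    }
    if (n > 0)
        out.avg_wait_ns = total_wait / n;
    return out;
}
```

A large maximum wait with short run times points at contention for kworkers, whereas long run times suggest that the handlers themselves should be split up.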

4.2 Common Causes of Performance Bottlenecks

4.3 Optimization Suggestions

- Split Large Tasks: Break long-running tasks into multiple short work items so that a single handler does not occupy a kworker for long.

- Set Concurrency Appropriately: Specify a max_active that matches the workload when calling alloc_workqueue().

- Use WQ_UNBOUND: Let the scheduler place work items on any allowed CPU, which improves scheduling flexibility at the cost of cache locality.

- Avoid Duplicate Scheduling: Ensure that the same work_struct is not queued again while it is still pending; the repeated submission is dropped.

- Debug Logs: Use dump_stack() and printk() to print the call stack that queued a work item to assist in troubleshooting.
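The first suggestion, splitting large tasks, can be sketched as a handler that processes a bounded batch and then asks to be queued again. The model below runs in user space: struct model_job, MODEL_BATCH, and both functions are hypothetical, and the loop in model_run_to_completion() stands in for the workqueue re-running the item after the handler has requeued it.

```c
#include <assert.h>

#define MODEL_BATCH 4  /* hypothetical per-invocation budget */

struct model_job {
    int remaining;    /* units of work still to do */
    int invocations;  /* how many times the handler has run */
};

/*
 * Handle at most MODEL_BATCH units, then return nonzero if the job should be
 * queued again. In a kernel handler the nonzero case would correspond to
 * calling queue_work() on the same item before returning.
 */
int model_batch_handler(struct model_job *job)
{
    int todo;

    assert(job->remaining >= 0);
    todo = job->remaining < MODEL_BATCH ? job->remaining : MODEL_BATCH;
    job->remaining -= todo;
    job->invocations++;
    return job->remaining > 0;
}

/* Stand-in for the worker: rerun the handler while it asks to be requeued. */
void model_run_to_completion(struct model_job *job)
{
    while (model_batch_handler(job))
        ;
}
```

With ten units of work and a batch size of four, the handler runs three times, and between those runs the kworker is free to pick up other work items.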

05

Conclusion

Workqueues are an elegant asynchronous execution mechanism in the Linux kernel. Through kworkers they decouple work from the context that submits it, supporting both deferring work out of interrupt context and more complex task scheduling. In practical use, however, attention must be paid to concurrency, CPU affinity, and scheduling policies.

When used properly, workqueues are an efficient, flexible, and scalable asynchronous processing solution; when misused, they can lead to uncontrollable scheduling delays and performance bottlenecks.
