A new original article every Monday on 5G, the Internet of Things, and artificial intelligence. Follow Top Insights to make good use of spare moments for learning.

Introduction

In the last issue, we looked at HiAI, Huawei’s general-purpose AI capability platform, which mainly serves developers of Huawei devices. It bundles many out-of-the-box algorithms and computing support, saving developers a significant amount of time (previous article: "A former Huawei employee gives Huawei a free advertisement: The HiAI platform should be the best in the world"). This week, we continue exploring Huawei’s artificial intelligence platform capabilities.

As I have argued before, vision is the dominant human sense. In the consumer sector, 5G infrastructure’s support for video and the emergence of new kinds of terminals will further drive visual applications. In industry, machine vision on production lines will make quality control and process supervision more effective. In autonomous driving, true intelligence should come from onboard cameras rather than the various onboard radar solutions we see today. It is no surprise, then, that Huawei has also built a super application platform for machine vision: HiLens, an edge-cloud collaborative multimodal development platform.

Platform Positioning

Huawei HiLens consists of edge devices, such as the HiLens Kit AI inference camera, and a cloud development platform. The cloud platform offers one-stop skill development, device deployment and management, data management, and a skill marketplace, giving users a development framework and environment for building AI skills and pushing them to edge computing devices.

HiLens Edge

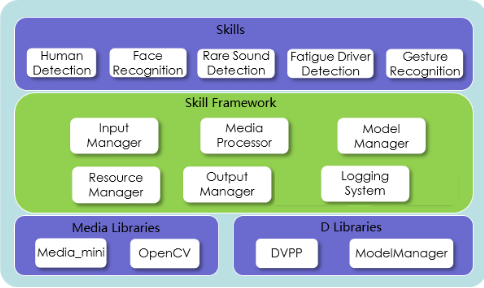

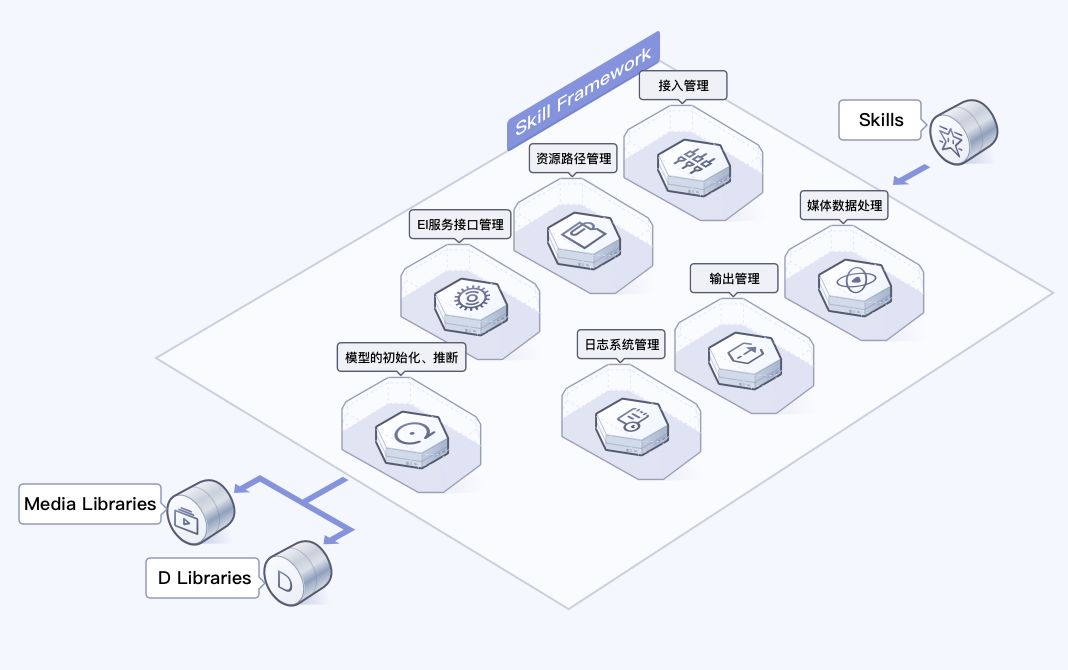

Skill Framework: The framework encapsulates the underlying interfaces and implements commonly used management functions, making it easy for developers to build skills on the Huawei HiLens management console and helping to grow the AI ecosystem.
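To make the idea of a framework that "encapsulates underlying interfaces" concrete, here is a minimal Python sketch of the facade pattern. Every class and function name is hypothetical and is not the HiLens API: `FakeCamera` stands in for a camera driver, `preprocess` for hardware image preprocessing, and the model is a toy callable. The only point is that a skill supplies its model and result handler while the framework owns the read, preprocess, and infer loop.

```python
"""Hypothetical facade sketch; none of these names are real HiLens APIs."""
from typing import Callable, List, Sequence


class FakeCamera:
    """Stand-in for a camera driver: yields pre-recorded frames."""

    def __init__(self, frames: Sequence[List[int]]):
        self._frames = list(frames)

    def read(self):
        # Return the next frame, or None once the stream is exhausted.
        return self._frames.pop(0) if self._frames else None


def preprocess(frame: List[int]) -> List[float]:
    # Stand-in for hardware preprocessing: scale 8-bit pixels to [0, 1].
    return [p / 255.0 for p in frame]


class SkillFramework:
    """Facade: the skill provides a model and a handler, nothing else."""

    def __init__(self, camera: FakeCamera, model: Callable[[List[float]], str]):
        self.camera = camera
        self.model = model

    def run(self, on_result: Callable[[str], None]) -> int:
        processed = 0
        while (frame := self.camera.read()) is not None:
            on_result(self.model(preprocess(frame)))
            processed += 1
        return processed


def brightness_model(pixels: List[float]) -> str:
    # Toy "model": labels a frame by its mean brightness.
    return "bright" if sum(pixels) / len(pixels) > 0.5 else "dark"


results: List[str] = []
framework = SkillFramework(FakeCamera([[250, 240, 230], [10, 20, 30]]), brightness_model)
count = framework.run(results.append)
print(count, results)  # 2 ['bright', 'dark']
```

Swapping the camera or the preprocessing implementation does not touch the skill code, which is the benefit the framework layer is meant to provide.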

The structure of the HiLens Framework is shown in the accompanying diagram. The HiLens Framework encapsulates the underlying multimedia processing library (Media_mini, the camera/microphone driver module), as well as the image processing library (DVPP, Digital Vision Pre-Processing) and the model management library (ModelManager) associated with the D chip (Huawei’s chip based on its Da Vinci architecture). Developers can also use the familiar OpenCV vision library.

On top of these libraries, the HiLens Framework provides six modules that make it easier for developers to build skills such as human detection, facial recognition, and fatigue-driving detection. The modules are described in the table below:

HiAI Engine: As discussed in the last issue, HiAI Engine opens up Huawei’s general AI capabilities at the application level, so they can be easily combined with apps to build connected services and all-scenario applications that are smarter and more capable. Capabilities include facial recognition, image recognition, text recognition, natural language processing, video technology, human body recognition, code (QR/barcode) recognition, and speech recognition.

Ascend310+Linux: The Ascend 310 is an AI chip developed by Huawei Technologies Co., Ltd. and the first chip in the Ascend series. A single Ascend 310 delivers up to 16 TOPS (INT8) of on-site computing power and can recognize 200 different targets simultaneously, including people, objects, traffic signs, and obstacles. It can process thousands of images per second, whether in a fast-moving car or on a high-speed production line, bringing accessible and efficient computing power to industries ranging from complex scientific research to everyday education.
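The "thousands of images per second" claim can be sanity-checked with back-of-the-envelope arithmetic: throughput is bounded by peak compute divided by the work needed per image. The sketch below reads the article’s 16T figure as 16 TOPS and assumes roughly 8 GOPs per image, which is in the range of ResNet-50 at 224x224 with multiplies and adds counted separately. Both the per-image cost and the utilization factor are assumptions, and real throughput also depends on memory bandwidth and preprocessing.

```python
def max_images_per_second(peak_tops: float, gops_per_image: float,
                          utilization: float = 1.0) -> float:
    """Upper bound on inference throughput from peak compute alone.

    peak_tops: peak tera-operations per second (16 for the Ascend 310 figure)
    gops_per_image: giga-operations per forward pass (assumed, model-dependent)
    utilization: fraction of peak actually achieved (assumption, 0 < u <= 1)
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    ops_per_second = peak_tops * 1e12 * utilization
    return ops_per_second / (gops_per_image * 1e9)


# Assumption: ~8 GOPs per image, roughly ResNet-50 at 224x224.
print(max_images_per_second(16, 8))       # 2000.0
print(max_images_per_second(16, 8, 0.5))  # 1000.0
```

At full utilization this gives a ceiling of 2,000 images per second, and 1,000 at an assumed 50% utilization, which matches the claim in order of magnitude; lighter models would raise the ceiling.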

Lightweight Agent: A multi-agent system is a computing system in which multiple distributed agents working in parallel collaborate to complete tasks or achieve goals. Terminal devices, especially mobile ones, impose strict limits on size and resources, so lightweight, narrowly scoped embedded agents have become a trend.

IVE: Intelligent Video Engine, the HiSilicon hardware engine for accelerating intelligent video analysis.

NNIE: Short for Neural Network Inference Engine, NNIE is a hardware unit in HiSilicon media SoCs designed specifically to accelerate neural networks, especially deep convolutional neural networks. It supports most existing public networks, including the classification networks AlexNet, VGG16, GoogLeNet, ResNet18, and ResNet50; the detection networks Faster R-CNN, YOLO, SSD, and R-FCN; and the scene segmentation networks SegNet and FCN.

LiteOS: Huawei LiteOS is a lightweight operating system that Huawei launched for the Internet of Things and a key part of its IoT strategy. It offers a small footprint, low power consumption, interoperability, rich components, and rapid development. Built around the business characteristics of the IoT, it gives developers a “one-stop” software platform that lowers the barrier to entry and shortens development cycles, and it can be used widely in wearables, smart homes, connected vehicles, and LPWA (low-power wide-area) networks.

Summary: On the edge side, HiLens mainly provides hardware carriers, namely smart cameras and smart stations. The smart cameras use Huawei’s in-house HiSilicon 35xx chip series and are compatible with Huawei’s in-house lightweight operating system LiteOS (note that LiteOS is distinct from HarmonyOS). Huawei therefore offers a full-stack solution: chips, hardware modules, operating system, algorithms and frameworks, and general AI capabilities.

As mentioned at the beginning, Huawei HiLens is an edge-cloud collaborative platform. Having covered the edge side, let’s turn to the cloud side.

HiLens Cloud Side

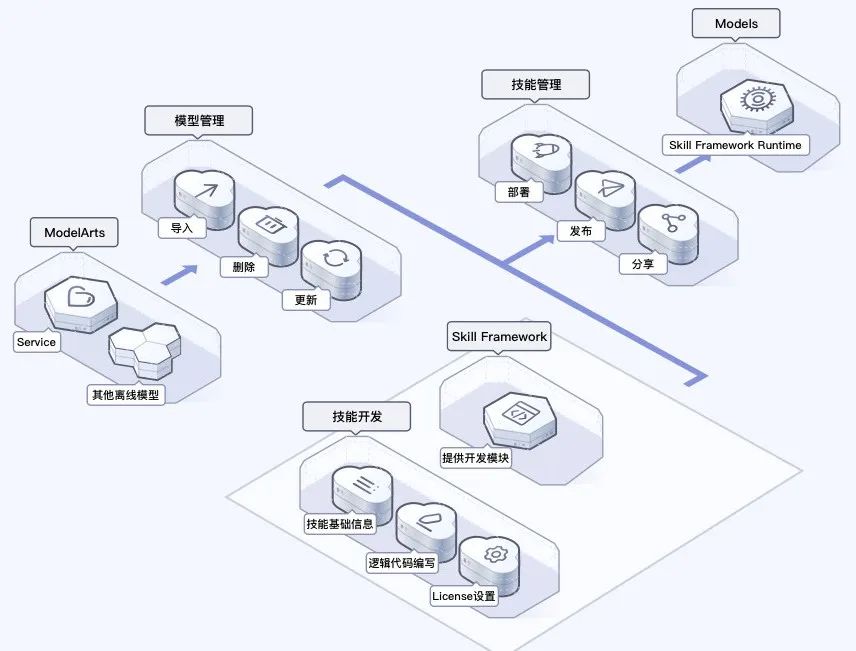

As shown in the figure above, the cloud side is relatively simple: it mainly handles device management, skill development, and data statistics, and will later add a skills marketplace where developers can monetize their work.
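Device management and data statistics on the cloud side largely come down to bookkeeping over device reports. The sketch below is hypothetical and is not how the HiLens console is implemented: a registry treats a device as online if it sent a heartbeat within a timeout, records which skills are deployed where, and counts deployments per skill as a simple statistic. Timestamps are passed in explicitly so the example is deterministic.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Device:
    device_id: str
    last_heartbeat: float = 0.0
    skills: List[str] = field(default_factory=list)


class DeviceRegistry:
    """Hypothetical cloud-side registry of devices, skills, and liveness."""

    def __init__(self, timeout_s: float = 60.0):
        self.timeout_s = timeout_s
        self.devices: Dict[str, Device] = {}

    def heartbeat(self, device_id: str, now: float) -> None:
        # Register the device on first contact, then refresh its timestamp.
        dev = self.devices.setdefault(device_id, Device(device_id))
        dev.last_heartbeat = now

    def deploy(self, device_id: str, skill: str) -> None:
        self.devices[device_id].skills.append(skill)

    def online(self, now: float) -> List[str]:
        # Online means a heartbeat arrived within the timeout window.
        return sorted(d.device_id for d in self.devices.values()
                      if now - d.last_heartbeat <= self.timeout_s)

    def skill_counts(self) -> Dict[str, int]:
        # Simple "data statistics": number of devices running each skill.
        counts: Dict[str, int] = {}
        for dev in self.devices.values():
            for skill in dev.skills:
                counts[skill] = counts.get(skill, 0) + 1
        return counts


registry = DeviceRegistry(timeout_s=60)
registry.heartbeat("kit-01", now=0)
registry.heartbeat("station-07", now=100)
registry.deploy("kit-01", "helmet-detection")
registry.deploy("station-07", "helmet-detection")
print(registry.online(now=120))  # ['station-07']
print(registry.skill_counts())   # {'helmet-detection': 2}
```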

Platform Advantages

(1) Edge-cloud collaboration: Edge devices work with cloud-side algorithm models to improve accuracy quickly, while reducing the data sent to the cloud and saving storage costs;
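One common way to reduce cloud data while still improving accuracy is confidence-based routing: the edge model handles confident results locally and sends only uncertain frames to the cloud, for a heavier model or for labeling and retraining. This is a generic pattern offered as an illustration, not a documented HiLens mechanism, and the 0.8 threshold is an arbitrary assumption.

```python
from typing import Iterable, List, Tuple


def route_detections(detections: Iterable[Tuple[str, float]],
                     confident: float = 0.8) -> Tuple[List[str], List[str]]:
    """Split edge results into handled-locally and upload-to-cloud.

    detections: (frame_id, confidence) pairs from an edge model.
    confident: hypothetical threshold; frames at or above it stay on the
    device, the rest are uploaded for cloud-side processing.
    """
    local: List[str] = []
    upload: List[str] = []
    for frame_id, score in detections:
        (local if score >= confident else upload).append(frame_id)
    return local, upload


dets = [("f1", 0.95), ("f2", 0.42), ("f3", 0.88), ("f4", 0.61)]
local, upload = route_detections(dets)
print(local, upload)                              # ['f1', 'f3'] ['f2', 'f4']
print(f"uploaded {len(upload) / len(dets):.0%}")  # uploaded 50%
```

Raising the threshold uploads more frames (more cloud-side accuracy, more cost); lowering it saves bandwidth and storage at the risk of keeping more edge mistakes.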

(2) Convenient development: Hardware details are hidden behind a unified algorithm development framework with encapsulated basic components, and the platform offers automatic adaptation, one-click deployment, a skill development and validation platform, and powerful cloud data management;

(3) Rich skill marketplace: Pre-configured algorithm packages for different chips can be deployed to HiLens Kit, smart stations, smart cameras, and third-party smart devices, unlocking capabilities across many scenarios;

(4) Developer ecosystem: The platform empowers developers and builds an ecosystem around them.

For Developers

(1) Development Framework

- Model management: Models can be imported from ModelArts or trained offline, and model conversion is supported;
- Simple and easy to use: One-stop service for development, deployment, release, and management, seamlessly integrated with user devices;
- Modular development: The HiLens Framework encapsulates basic components, simplifying development and providing a unified API that supports various development frameworks;
- One-click release and deployment: Once a skill is developed, it can be published to the skill marketplace or deployed directly to edge devices.
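The develop, then deploy or publish flow above can be modeled as a small state machine. The states and allowed transitions below are hypothetical (the validation step borrows from the validation platform listed under platform advantages); they illustrate how a platform can enforce that only validated skills reach devices or the marketplace, and they are not HiLens’s actual lifecycle.

```python
from enum import Enum


class SkillState(Enum):
    DRAFT = "draft"
    VALIDATED = "validated"
    DEPLOYED = "deployed"
    PUBLISHED = "published"


# Hypothetical rules: a skill must be validated before it can be deployed
# to a device or published; any later state can return to draft for edits.
TRANSITIONS = {
    SkillState.DRAFT: {SkillState.VALIDATED},
    SkillState.VALIDATED: {SkillState.DEPLOYED, SkillState.PUBLISHED, SkillState.DRAFT},
    SkillState.DEPLOYED: {SkillState.PUBLISHED, SkillState.DRAFT},
    SkillState.PUBLISHED: {SkillState.DRAFT},
}


class Skill:
    def __init__(self, name: str):
        self.name = name
        self.state = SkillState.DRAFT
        self.history = [self.state]

    def move_to(self, target: SkillState) -> None:
        # Reject any transition not listed in the rules above.
        if target not in TRANSITIONS[self.state]:
            raise ValueError(
                f"{self.name}: cannot go from {self.state.value} to {target.value}")
        self.state = target
        self.history.append(target)


skill = Skill("helmet-detection")
skill.move_to(SkillState.VALIDATED)
skill.move_to(SkillState.DEPLOYED)
print([s.value for s in skill.history])  # ['draft', 'validated', 'deployed']
```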


(2) HiLens Framework

- Easy-to-use development components: The HiLens Framework encapsulates the basic components of video analysis algorithms, such as image processing, inference, logging, streaming video to the cloud, and integration with EI cloud services, so developers can build their own skills with minimal code.
- Performance optimized for the chip: The most time-consuming compute units in AI algorithms are optimized for the HiSilicon chip architecture, greatly improving computational performance.
- Portable skills: Skills developed on the HiLens platform can run on any Ascend 310 device, including the HiLens Kit AI inference camera and smart stations.
- Python and C++ interfaces: Developers can choose the language interface that suits their scenario.


(3) Developer Ecosystem

-

Supports online model conversion: Models in formats such as caffe can be converted into om models that can run on Ascend 310 chips. This allows developers to quickly develop AI skills that can run on AI inference cameras HiLens Kit by importing models trained on ModelArts or offline.

-

HiLens Kit integrates the HiLens Framework: The HiLens Framework encapsulates the basic components of video analysis algorithms and optimizes them in conjunction with the HiSilicon chip architecture, providing developers with rich API interfaces and significantly improving the efficiency of AI application development.

-

ROS support: HiLens Kit can support driving hardware devices such as Arduino boards, MS Kinect, RPLidar, and web cameras.

-

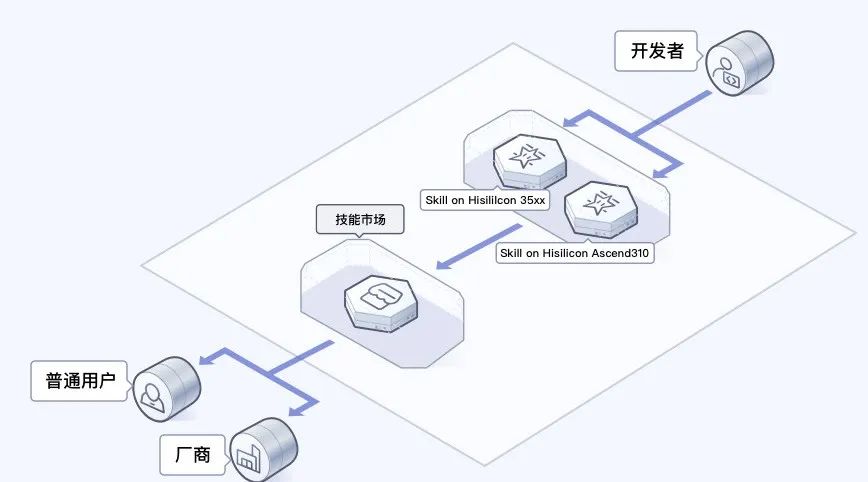

Good AI developer ecosystem: To build a good AI developer ecosystem, the HiLens platform provides a skills marketplace, encouraging developers to publish their skills that can run on HiSilicon 35xx series and Ascend 310 series chips to the marketplace for users to purchase and use.
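Offline, converting a Caffe model to the `.om` format for Ascend 310 is typically done with Huawei’s ATC (Ascend Tensor Compiler) tool. The helper below only builds the command line and does not run it. The flags (`--model`, `--weight`, `--framework`, `--output`, `--soc_version`) and framework codes reflect my understanding of the CANN ATC tool and should be treated as assumptions: they vary across toolkit versions, older Ascend 310 toolchains used a different converter, and the HiLens console performs conversion online without this step.

```python
import shlex
from typing import List

# Assumption: framework codes as used by the CANN ATC tool.
FRAMEWORK_CODES = {"caffe": 0, "tensorflow": 3, "onnx": 5}


def build_atc_command(model: str, output: str, framework: str = "caffe",
                      weight: str = "", soc_version: str = "Ascend310") -> List[str]:
    """Build (but do not run) an ATC argument list for a .om conversion."""
    fw = framework.lower()
    if fw not in FRAMEWORK_CODES:
        raise ValueError(f"unsupported framework: {framework}")
    if fw == "caffe" and not weight:
        # Caffe splits the network (.prototxt) from its weights (.caffemodel).
        raise ValueError("Caffe models need a .caffemodel weight file")
    cmd = ["atc", f"--model={model}", f"--framework={FRAMEWORK_CODES[fw]}",
           f"--output={output}", f"--soc_version={soc_version}"]
    if weight:
        cmd.insert(2, f"--weight={weight}")
    return cmd


cmd = build_atc_command("resnet50.prototxt", "resnet50",
                        weight="resnet50.caffemodel")
print(shlex.join(cmd))
# atc --model=resnet50.prototxt --weight=resnet50.caffemodel --framework=0 --output=resnet50 --soc_version=Ascend310
```

Returning a list rather than a shell string keeps the arguments safe to pass to `subprocess.run` without shell quoting issues.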


Application Scenarios

Take smart campuses as an example: they use the HiLens Kit AI inference camera and the smart station, both built on Ascend chips. HiLens Kit suits indoor scenarios, while the smart station can be deployed outdoors without a machine room. Both handle video analysis of up to 16 channels, are compact, and make it easier to manage the analysis of large volumes of video data.

Recommended skills: facial recognition, vehicle recognition, safety helmet detection, and so on. Recommended hardware: HiLens Kit indoors and the smart station outdoors.
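For the smart-campus case, a first-pass hardware estimate is simple arithmetic: divide the channel count for each environment by the per-device channel limit and round up. The sketch treats the article’s 16-channel figure as a per-device maximum and maps indoor channels to HiLens Kit and outdoor channels to smart stations. Actual capacity depends on resolution, frame rate, and the skill being run, so treat the result as a lower bound.

```python
import math
from typing import Dict


def plan_devices(indoor_channels: int, outdoor_channels: int,
                 max_channels_per_device: int = 16) -> Dict[str, int]:
    """Rough device count per environment (assumed 16 channels per device)."""
    if max_channels_per_device <= 0:
        raise ValueError("max_channels_per_device must be positive")
    return {
        # Ceiling division: a partially used device still counts as one.
        "HiLens Kit": math.ceil(indoor_channels / max_channels_per_device),
        "smart station": math.ceil(outdoor_channels / max_channels_per_device),
    }


print(plan_devices(indoor_channels=40, outdoor_channels=16))
# {'HiLens Kit': 3, 'smart station': 1}
```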

Skills Marketplace

The Huawei HiLens skills marketplace offers a wide variety of easy-to-install AI skills for different scenarios. Beyond browsing its extensive catalog, you can also develop your own skills as a developer and publish them for sale. I have captured several common examples in the following images:

HiLens Kit

HiLens Kit is an edge-cloud collaborative multimodal AI application development kit that supports analysis and inference on image, video, voice, and other data. It can be widely used in smart surveillance, smart homes, robotics, drones, smart manufacturing, smart stores, and other analytics scenarios. The usage flow is shown in the diagram below:


I will not go into the technical development details here. In short, Huawei has largely delivered the one-stop AI application platform it has long promoted: foolproof, template-based, drag-and-drop, click-to-develop.

Conclusion

For a platform- and ecosystem-level company, it is crucial to think from the perspective of developers and other ecosystem participants. Although many application scenarios for artificial intelligence are still unclear, AI is an inevitable trend. Today’s problems are a shortage of talent, expensive computing power, and long development cycles, and developers spend most of their time on these instead of on the scenarios they should be focusing on. Easy-to-use, affordable, flexible platform products that let developers monetize their traffic are therefore the future.

I look forward to seeing how Huawei’s ecosystem performs.

Disclaimer:

This public account shares personal research topics; it is not a commercial account and has no commercial purpose. If any article content infringes on rights or contains illegal information, please contact this account immediately and it will be deleted. Thank you. Contact: [email protected]