Introduction

Content Summary

Keywords

Sharing Outline

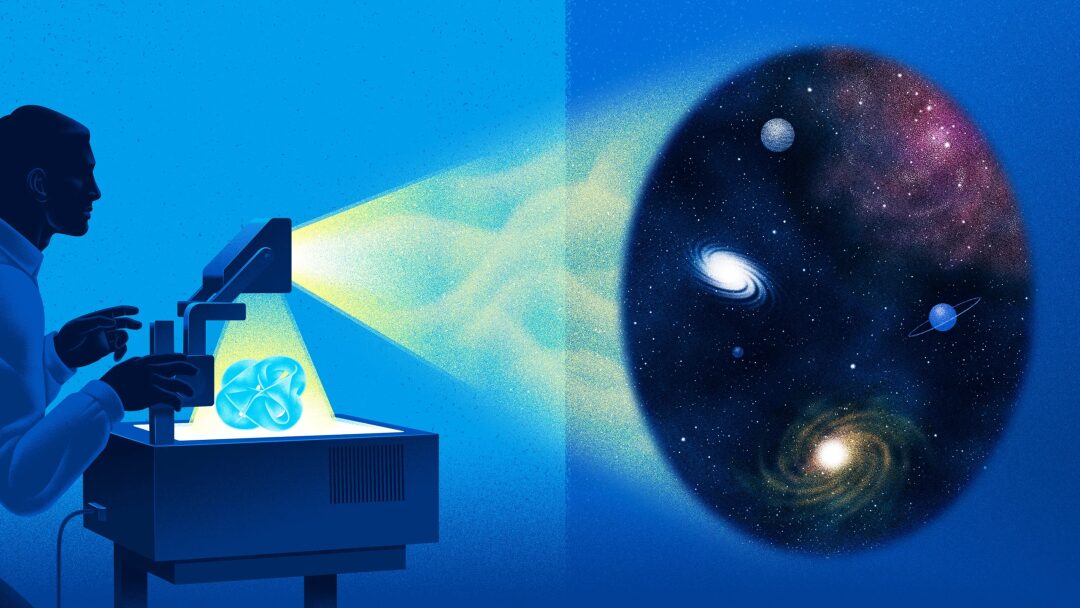

1. Scientific background of the research

2. Variability and sparsity of neural spiking activity (S-CV) and their equivalence

3. Firing irregularity and sparsity as indicators of energy-efficient coding in neural networks
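Item 2 pairs two standard single-neuron statistics. As a rough illustration only, and not the speakers' method, the sketch below computes the inter-spike-interval coefficient of variation (CV), the usual measure of firing irregularity (cf. Softky & Koch, 1993), and the Rolls-Tovee sparseness a = ⟨r⟩²/⟨r²⟩ over a neuron's responses to a set of stimuli (Rolls & Tovee, 1995). The function names are hypothetical, and the code does not attempt to reproduce the S-CV equivalence argued in the talk.

```python
from statistics import mean, pstdev


def isi_cv(spike_times):
    """Coefficient of variation of inter-spike intervals (ISIs).

    CV = std(ISI) / mean(ISI): 0 for perfectly regular firing,
    about 1 for a Poisson spike train.
    """
    times = sorted(spike_times)
    if len(times) < 3:
        raise ValueError("need at least 3 spikes (2 ISIs)")
    isis = [later - earlier for earlier, later in zip(times, times[1:])]
    return pstdev(isis) / mean(isis)


def rolls_tovee_sparseness(rates):
    """Rolls-Tovee sparseness a = (mean r)^2 / mean(r^2).

    a lies in (0, 1]; smaller values mean the neuron responds to
    fewer stimuli, i.e. a sparser representation.
    """
    n = len(rates)
    if n == 0 or any(r < 0 for r in rates):
        raise ValueError("rates must be non-empty and non-negative")
    mean_sq = sum(r * r for r in rates) / n
    if mean_sq == 0:
        raise ValueError("all rates are zero")
    return (sum(rates) / n) ** 2 / mean_sq
```

For example, perfectly regular spikes at 0, 10, 20 and 30 ms give a CV of 0, while a neuron that responds to only one of four stimuli (rates 4, 0, 0, 0) gives a = 0.25, sparser than the a = 1 of a neuron responding equally to all four.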

References

- Huang, M., Lin, W., Roe, A., & Yu, Y. (2024). A unified theory of response sparsity and variability for energy-efficient neural coding. bioRxiv preprint, BIORXIV/2024/614987.

- Rolls, E. T., & Tovee, M. J. (1995). Sparseness of the neuronal representation of stimuli in the primate temporal visual cortex. Journal of Neurophysiology, 73, 713-726.

- Treves, A., & Rolls, E. T. (1991). What determines the capacity of autoassociative memories in the brain? Network: Computation in Neural Systems, 2, 371-397.

- Haider, B., Krause, M. R., Duque, A., et al. (2010). Synaptic and network mechanisms of sparse and reliable visual cortical activity during nonclassical receptive field stimulation. Neuron, 65, 107-121.

- Olshausen, B. A., & Field, D. J. (2004). Sparse coding of sensory inputs. Current Opinion in Neurobiology, 14, 481-487.

- Softky, W. R., & Koch, C. (1993). The highly irregular firing of cortical cells is inconsistent with temporal integration of random EPSPs. Journal of Neuroscience, 13, 334-350.

- Lengler, J., & Steger, A. (2017). Note on the coefficient of variations of neuronal spike trains. Biological Cybernetics, 111, 229-235.

- Yu, Y., Migliore, M., Hines, M. L., & Shepherd, G. M. (2014). Sparse coding and lateral inhibition arising from balanced and unbalanced dendrodendritic excitation and inhibition. Journal of Neuroscience, 34, 13701-13713.

- Yu, Y., McTavish, T. S., Hines, M. L., Shepherd, G. M., Valenti, C., & Migliore, M. (2013). Sparse distributed representation of odors in a large-scale olfactory bulb circuit. PLoS Computational Biology, 9, e1003014.

- Marder, E. (2011). Variability, compensation, and modulation in neurons and circuits. Proceedings of the National Academy of Sciences, 108, 15542-15548.

- Faisal, A. A., Selen, L. P., & Wolpert, D. M. (2008). Noise in the nervous system. Nature Reviews Neuroscience, 9, 292-303.

Speaker

Live Information

Live time: September 28 (Saturday), 15:00-17:00

The AI By Complexity Reading Group Is Recruiting

Large models, multimodal models, and multi-agent systems are emerging one after another, and many neural network variants are showing what they can do on the AI stage. Meanwhile, the field of complex systems continues to explore questions of emergence, hierarchy, robustness, nonlinearity, and evolution. Strong AI systems and innovative neural networks often exhibit, to some extent, the characteristics of well-functioning complex systems. How the evolving theories and methods of complex systems can guide the design of future AI has therefore become a question of intense interest.

The Intelligence Club, together with Assistant Professor You Yizhuang of the University of California, San Diego; Associate Professor Liu Yu of Beijing Normal University; Zhang Zhang, a PhD student at the School of Systems Science, Beijing Normal University; master's students Mu Yun and Yang Mingzhe; and Tian Yang, a PhD student at Tsinghua University, has launched the “AI By Complexity” reading group. The group explores questions such as: How can we measure how “good” a complex system is? How can we understand the mechanisms of complex systems? Can these insights inspire better AI models and, ultimately, help us design better AI systems? The reading group began on June 10 and meets every Monday evening from 20:00 to 22:00. Anyone working in related fields or interested in AI + complexity is welcome to sign up and join the discussion!

Previous sessions:

- First session: Zhang Zhang, Yu Guo, Tian Yang, Mu Yun, Liu Yu, Yang Mingzhe: How to quantify and drive the next generation of AI systems through complexity.

- Second session: Xu Yizhou, Weng Kangyu: Research on structured noise and neural network initialization from the perspective of statistical physics and information theory.

- Third session: Liu Yu: “Compression is Intelligence” and algorithmic information theory.

- Fourth session: Cheng Aohua, Xiong Wei: From high-order interactions to neural operator models: inspiring better AI.

- Fifth session: Jiang Chunheng: Network properties determine the performance of neural network models.

- Sixth session: Yang Mingzhe: Causal emergence inspiring AI “thinking”.

- Seventh session: Zhu Qunxi: From complex systems to generative artificial intelligence.

- Ninth session: Lan Yueheng: Life, intelligent emergence, and complex systems research.

Click “Read the original text” to register for the reading group.