FisheyeMultiNet: Real-time Multi-task Deep Learning Network for Surround View Autonomous Parking System

Background:

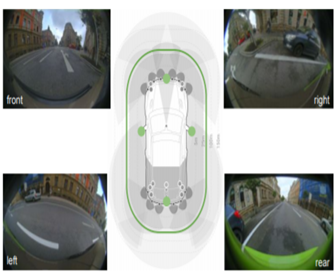

With advances in deep learning and computer vision, autonomous driving has gained significant attention, and automated parking is one of its application scenarios. Parking is a low-speed, unstructured, and complex maneuver that requires 360-degree near-field sensing of the vehicle’s surroundings. Because such systems must run within tight hardware limits, researchers proposed a complete system that uses camera-based deep learning on a low-power embedded platform to detect objects that obstruct safe parking.

Problems to Solve:

Fisheye cameras have a very wide field of view, so four cameras are generally enough to observe the environment around the vehicle in 360 degrees. However, fisheye cameras exhibit extremely complex projection geometry.

Convolutional neural networks have become standard components for most visual perception tasks in autonomous vehicles, and semantic segmentation has matured to the point where it can detect road surfaces, lanes, road markings, road edges, and other road objects. Real-time performance, however, remains a challenge.

Solutions:

First, the paper gives an overview of parking use cases, such as parallel parking, perpendicular parking, ambiguous parking, fishbone parking, home parking, and valet parking. It argues that traditional ultrasonic sensors alone cannot park a vehicle safely and must be combined with fisheye cameras, computer vision, and strong object detection.

The visual modules required for safe parking include: parking space detection (the first and most important step), free space detection (collision avoidance), pedestrian detection, vehicle detection, cyclist detection (distinguishing between pedestrians and cyclists), and dirt detection (dirt can lead to decreased computer vision performance).

The comprehensive system proposed by researchers includes embedded systems, use cases, and deep learning architectures. First, in the overall software architecture, a system-on-chip (SoC) with a computing capability of 1 trillion operations per second and power consumption of less than 10 watts was selected for the embedded system.

A multi-task network is proposed to run on a hardware accelerator, jointly performing semantic segmentation, object detection, and dirt detection. The architecture was developed on internal parking datasets (USA, Europe, and China).

Conclusion:

The researchers detailed the main aspects of the FisheyeMultiNet real-time multi-task parking system, including the embedded system architecture, the parking use cases that need to be addressed, and the visual algorithms that address them. The design is a minimal system with an efficient multi-task learning architecture that uses four fisheye cameras to provide a 360-degree view of the vehicle’s surroundings. The researchers also provided detailed quantitative results, indicating that the multi-task network is only slightly less accurate than the single-task networks while being more computationally efficient, and that further fine-tuning could improve its performance, making it a more suitable choice for future embedded parking systems. Furthermore, the researchers released a dataset containing 5,000 images with semantic segmentation and bounding box annotations to encourage further research.

Using Image Processing Algorithms to Detect Lanes

Background:

Before the advent of autonomous driving technology, many vehicles, especially those from well-known brands, were already equipped with lane-keeping assistance, which keeps the vehicle within its lane through sound or vibration alerts, steering interventions, and similar measures. With the emergence of autonomous vehicles, lane tracking has become even more important, because it lets the vehicle follow a predetermined route along the lane to its destination without manual intervention. This article discusses lane detection for autonomous vehicles through image processing methods.

Problems to Solve:

For an autonomous vehicle to know what to do when it later needs to change lanes, it must first detect the lane it is in. The steps are: capture a snapshot of the lane with a camera; process the image to identify the lane; determine the region of interest (ROI); and draw a virtual line in the lane so that the path appears as a curve. The angle of the curve reflects the curvature of the road and indicates whether the vehicle should continue straight or turn right or left.

Solutions:

First, improve the image quality by converting the RGB (Red, Green, Blue) color space into the HSV (Hue, Saturation, and Value) color space.
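The color-space step can be sketched with Python's standard `colorsys` module. This is a minimal illustration, not the researchers' code: the helper names and every threshold below are assumptions chosen only to show why HSV makes bright white or yellow lane paint easier to isolate than raw RGB.

```python
import colorsys


def rgb_to_hsv_pixel(r, g, b):
    """Convert one 8-bit RGB pixel to (hue in degrees, saturation, value)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return h * 360.0, s, v


def looks_like_lane_paint(r, g, b):
    """Flag bright white or bright yellow pixels.

    All thresholds are illustrative assumptions, not values from the article.
    """
    h, s, v = rgb_to_hsv_pixel(r, g, b)
    is_white = v >= 0.8 and s <= 0.2
    is_yellow = v >= 0.6 and s >= 0.4 and 40.0 <= h <= 70.0
    return is_white or is_yellow
```

In HSV, brightness (V) is separated from color (H, S), so a single threshold on V and S can pick out white paint under different tints of ambient light.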

Use the Canny edge detection algorithm (lane lines appear as strong edges), the Hough transform (a method for finding lines and other shapes in an image), and the Sobel filter (a gradient-based edge detector) to find the lane lines.
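Of the three techniques, the Sobel filter is the simplest to show in full. The sketch below applies the standard 3x3 Sobel kernels to a small grayscale grid in pure Python; the function name and the toy image are assumptions for illustration, and a real pipeline would normally use an image library instead.

```python
import math

# Standard 3x3 Sobel kernels for horizontal and vertical gradients.
SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_magnitude(gray):
    """Return the gradient magnitude of a 2D list of grayscale values.

    Border pixels are left at 0.0 because the 3x3 window does not fit there.
    """
    rows, cols = len(gray), len(gray[0])
    out = [[0.0] * cols for _ in range(rows)]
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            gx = gy = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    pixel = gray[y + dy][x + dx]
                    gx += SOBEL_X[dy + 1][dx + 1] * pixel
                    gy += SOBEL_Y[dy + 1][dx + 1] * pixel
            out[y][x] = math.hypot(gx, gy)
    return out


# Toy image: dark road on the left, bright lane paint on the right.
image = [[0, 0, 255, 255, 255] for _ in range(5)]
edges = sobel_magnitude(image)
```

The response is large only in the columns next to the dark-to-bright transition and zero inside the uniform bright area, which is the property that lets edge detectors pick out lane markings.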

Find the lane area (ROI), either by enclosing in a rectangle the triangular area formed by the intersection of the left and right lane lines, or by intersecting a horizontal line with the lane lines.
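The first ROI option can be sketched as plain geometry. The example assumes each lane line is already available as a `(slope, intercept)` pair in image coordinates (`y = slope * x + intercept`, with y growing downward) and that neither line is horizontal; the function names are hypothetical.

```python
def line_intersection(left, right):
    """Intersect two lines given as (slope, intercept) pairs."""
    (m1, b1), (m2, b2) = left, right
    if m1 == m2:
        raise ValueError("parallel lane lines never intersect")
    x = (b2 - b1) / (m1 - m2)
    return x, m1 * x + b1


def roi_rectangle(left, right, image_height):
    """Bounding box (x_min, y_min, x_max, y_max) of the lane triangle.

    The triangle is formed by the point where the lane lines meet and the
    two points where they cross the bottom row of the image.
    """
    apex_x, apex_y = line_intersection(left, right)
    bottom_y = image_height - 1
    left_x = (bottom_y - left[1]) / left[0]
    right_x = (bottom_y - right[1]) / right[0]
    xs = (left_x, right_x, apex_x)
    return min(xs), apex_y, max(xs), float(bottom_y)
```

For example, `roi_rectangle((-1.0, 100.0), (1.0, 0.0), 101)` describes two lines meeting at (50, 50) and yields a box spanning x from 0 to 100 and y from 50 to 100.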

The researchers created an autonomous driving prototype vehicle. The vehicle is equipped with a Raspberry Pi (a Linux-based single-board computer), a Raspberry Pi camera, robotic car components, circuit testing boards, cables, batteries, and a power bank, with the battery connected to the motor, and the power bank powering the Raspberry Pi.

When the vehicle starts, the camera begins capturing image frames immediately. Image processing is used to detect the lanes, find the ROI, and guide the vehicle along the lane; the relative speed of the left and right motors determines the direction of travel.
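One common way to turn a detected lane position into left and right motor speeds is a proportional controller on the lane-center offset. The article does not say which control law the prototype uses, so the function, its `base_speed` and `gain` parameters, and the [0, 1] duty-cycle range are all assumptions.

```python
def motor_speeds(lane_center_x, image_width, base_speed=0.5, gain=0.5):
    """Return (left, right) motor duty cycles in [0, 1] for a two-motor car.

    A lane center to the right of the image center speeds up the left motor
    and slows the right one, which turns the car to the right.
    """
    half_width = image_width / 2.0
    # Normalized offset: -1 (lane far left) .. +1 (lane far right).
    error = (lane_center_x - half_width) / half_width
    left = base_speed + gain * error
    right = base_speed - gain * error
    return max(0.0, min(1.0, left)), max(0.0, min(1.0, right))
```

With a 320-pixel-wide frame, a lane center at x = 160 gives equal speeds (straight ahead), while x = 240 gives a faster left motor and therefore a right turn.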

Conclusion:

Poor lane tracking, and the accidents that follow when an autonomous vehicle leaves its designated lane, motivated the researchers to build an autonomous driving prototype that tracks the lane using Canny edge detection, Sobel filtering, and other image processing algorithms. The algorithms were executed and tested on the prototype, which was able to detect the lane, travel along it, and reach its destination. In the future, the researchers plan to use generative neural networks, deep learning, and other machine learning algorithms to detect lanes and traffic signs, ultimately improving the safety of autonomous vehicles.

Apple’s New Patent: Automatically Recommending Preferred Lanes for Vehicles

Background:

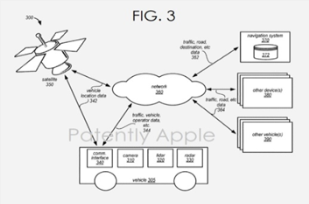

As demand for automatic navigation and control systems grows, modern cars are equipped with various remote sensing devices, such as radar and ultrasonic sensors, to improve driving safety. Automatic navigation and control systems can process data collected from sensors to detect and describe objects in the environment, improving driving efficiency and safety and reducing travel time, fuel consumption, the environmental pollution associated with vehicle travel, and overall travel costs. However, current GPS navigation applications, blind spot detection, and warning systems cannot address traffic flow issues from the vehicle’s perspective, such as guiding the vehicle operator to a lane that is more efficient than the lane the vehicle is currently traveling in.

Patent Introduction:

First, the vehicle will be equipped with a sensor fusion module and a lane recommendation module.

The lane recommendation module or sensor fusion module may additionally consider other data, such as the vehicle’s destination, driver-defined preferences, driving style, or data from the navigation system, including data related to the vehicle’s preferred and alternate routes, such as the locations of highway exits.

Sensor data can be combined with other information to determine the vehicle’s preferred lane. Through a lane cost calculation function, the preferred lane with lower or minimal costs can be calculated, where some cost calculation functions may use weighting methods to consider user preferences or driving styles, or other information to determine the preferred driving route.
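A weighted lane cost function of the kind described can be sketched as follows. The metric names (`density`, `slowness`, `exit_penalty`), the numbers, and the weights are all hypothetical; the patent summary does not specify which terms the cost function contains.

```python
def lane_cost(metrics, weights):
    """Weighted sum of per-lane metrics; a lower cost means a better lane."""
    return sum(weights[name] * metrics[name] for name in weights)


def preferred_lane(lanes, weights):
    """Return the id of the lane with the minimal cost."""
    return min(lanes, key=lambda lane_id: lane_cost(lanes[lane_id], weights))


# Hypothetical, normalized metrics for three lanes.
lanes = {
    "left": {"density": 0.2, "slowness": 0.1, "exit_penalty": 1.0},
    "middle": {"density": 0.5, "slowness": 0.3, "exit_penalty": 0.5},
    "right": {"density": 0.7, "slowness": 0.6, "exit_penalty": 0.0},
}

# A driver who mostly cares about speed versus one about to take an exit.
speed_first = {"density": 1.0, "slowness": 1.0, "exit_penalty": 0.2}
exit_first = {"density": 1.0, "slowness": 1.0, "exit_penalty": 2.0}
```

Changing only the weights switches the recommendation from the left lane to the right lane, which is how driver preferences or an upcoming highway exit could influence the result.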

Recommendation information is pushed to the driver, automatic control system, and vehicle administrator, displayed on the dashboard, navigation system screen, heads-up display, or handheld devices, and can also be shared with nearby vehicles, law enforcement or traffic officials, systems, navigation systems, remote databases, and other storage systems.

Innovation:

Sensors that are part of the vehicle, installed on other vehicles, obstacles, lamp posts, road signs, and other stationary objects, or embedded in the ground can collect data about the vehicle’s surroundings. This includes obstacles in the lane, such as road debris or defects, together with their position, speed, and size, as well as characteristics of the driving lane, such as width, curvature, lane markings, and position.

The sensors send the collected data to the vehicle’s memory, which can also exchange other data with the sensors, for example to set or modify their operating parameters or to monitor their error status. The memory can also store driver preferences (weighting factors of the cost calculation function), driving styles, and so on.

A processor in the vehicle, either a general-purpose processor that also performs other functions or a processor dedicated to automatic lane recommendation, can implement the sensor fusion module, which analyzes stored data or raw data from the sensors. Moreover, the fusion module can receive measured geometric shapes and metadata, such as the lateral position, width, and curvature of the lane. Lane information can be provided directly by the sensors or from databases, navigation systems, external sensors, etc. Meanwhile, information from outside the vehicle can be communicated through one or more wireless communication interfaces.

When determining the recommended driving route, various relationships between traffic metrics are considered. For example, a lane with a higher standard deviation of longitudinal position and a higher average speed is generally more desirable. In some cases, lanes with lower average traffic density and a lower standard deviation of speed are preferred.

Automated Data Augmentation Method for Training Traffic Sign Recognition Modules

Background:

In autonomous vehicles and path planning, accurately recognizing traffic signs using images from the vehicle’s front camera is crucial for safe driving. However, due to blurriness, lighting changes, and damage, images can be distorted, and detection performance may decline. Machine learning models are one solution, but they are time-consuming and costly. Researchers have proposed a new end-to-end framework to augment traffic sign training data using optimal reinforcement learning strategies and various Generative Adversarial Network (GAN) models to train traffic sign recognition modules.

Problems to Solve:

Current research still focuses on classifying cropped traffic signs using deep learning models, lacking a general end-to-end approach to handle complete images from front cameras.

Deep convolutional GANs, super-resolution GANs (SR-GAN), StyleGANs, and CycleGANs can generate images of varying realism for landscapes and faces/cars. However, in wide-field images from automotive-grade front cameras, the specific region of interest (the traffic sign) is generated at low resolution.

Solutions:

Researchers proposed an automatic data augmentation method that maps daytime training data to nighttime while improving classification performance. They introduced a bounding-box GAN for traffic signs (BBGAN), which uses a feedforward generator with a U-Net architecture and a CNN-based discriminator, and used reinforcement learning (RL) models to identify the optimal transformation strategies for the traffic sign bounding boxes.

Three families of methods were used to automatically augment the training data: first, simple methods, namely blending (BLEND) and simple augmentation (SAUG); second, the GAN-based CycleGAN and BBGAN; and finally, the reinforcement learning method (RLAUG).

Researchers analyzed the performance of the Object Detection System (ODS) to evaluate the automatic data augmentation method. The ODS was trained on 80% of the daytime images from the LISA traffic sign dataset (US traffic sign video) and tested on the remaining 20% of daytime images, as well as on annotated real nighttime images from the Berkeley DeepDrive and Nexar datasets. Without data augmentation, the ODS achieved precision/recall of 0.897/0.883 on daytime images and 0.70/0.662 on nighttime images. The new method therefore aims to improve the ODS’s nighttime detection performance while retaining its daytime performance.
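The paired figures above are read here as precision/recall, which is an assumption about the original wording. Under that reading, each pair can be computed from detection counts as in the sketch below; the counts in the usage note are toy values, not results from the study.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Compute (precision, recall) from detection counts.

    Precision: share of reported signs that are real signs.
    Recall: share of real signs that were reported.
    """
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall
```

For instance, `precision_recall(8, 2, 2)` returns `(0.8, 0.8)`: eight correct detections, two false alarms, and two missed signs. A detector that misses more signs at night lowers recall even when its false-alarm rate is unchanged.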

Conclusion:

To recognize traffic signs accurately, the researchers combined images from the vehicle’s front camera, optimal reinforcement learning-based transformation strategies, and GAN-generated images that map daytime to nighttime. The automatic data augmentation method helps train the object detector to recognize traffic signs that are blurred, damaged, or obscured. The proposed method (RLAUG+BBGAN) can significantly increase the amount of labeled data for robust traffic sign recognition and other machine learning applications by mapping training data from one domain to another. It improved precision/recall for traffic signs in nighttime images from 0.70/0.66 to 0.83/0.71 and raised the precision and recall of traffic sign classification by 3% to 7%, while saving testing processing costs. The same method can therefore be used to train other object detector modules for autonomous driving and path planning.

Deep Reinforcement Learning Enhances Highway Autonomous Driving Safety

Background:

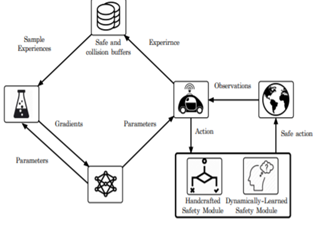

With the emergence of autonomous vehicles, researchers have begun to focus on improving the safety of autonomous vehicles. Due to the variability of driving environments and the uncertainty of road users, how autonomous vehicles make safe decisions is a challenging problem. Now, researchers have proposed a deep reinforcement learning system that combines the advantages of rule-based and learning-based approaches, promising to accelerate the learning process of autonomous vehicles and reduce the occurrence of collision accidents.

Problems to Solve:

Although many people apply RL (Reinforcement Learning) to planning and decision-making in autonomous vehicles, RL agents may not achieve a certain level of safety in safety-critical applications.

Another approach incorporates external knowledge, typically in the form of rules, to make exploration safer. In highly dynamic and evolving environments, however, this approach cannot guarantee that undesirable behaviors are prevented. Furthermore, rule-based methods cannot scale to unknown situations, and research on combining external knowledge with models during the learning phase is limited.

Solutions:

The proposed system encodes prior knowledge about the environment into the learning process through two modules. The first is a handcrafted safety module based on heuristic safety rules for general driving behavior. The second is a dynamically learned safety module whose data-driven safety rules are learned from driving data. Specifically, the dynamically learned safety module includes a model that predicts safety over a longer time frame beyond immediate reinforcement learning rewards, and can predict the future actions of other road users and incorporate them into the decision-making stage.

Researchers simulated an autonomous vehicle on a three-lane highway, surrounded by up to six cars. They used 20 features (such as vehicle speed) to describe the driving scenario, and the autonomous vehicle had eight possible actions (acceleration, lane change, etc.). Each vehicle was modeled with a computationally efficient point mass model. Data was collected to train an RNN (Recurrent Neural Network) to predict future dynamics; if the predicted dynamics would lead to an accident within a given horizon, the RL agent was penalized to reinforce its memory of unsafe states, accelerating the learning process to avoid such accidents in the future.
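The idea of penalizing actions that lead to a predicted accident can be sketched with a one-dimensional point mass model. Note the substitution: a constant-speed rollout stands in for the trained RNN predictor, and the horizon, time step, minimum gap, and penalty value are all hypothetical.

```python
def step(position, speed, acceleration, dt):
    """Advance a point mass by dt seconds under constant acceleration."""
    new_position = position + speed * dt + 0.5 * acceleration * dt * dt
    return new_position, speed + acceleration * dt


def predicts_collision(ego, lead, horizon_s=3.0, dt=0.5, min_gap_m=5.0):
    """Roll both vehicles forward at constant speed and check the gap.

    ego and lead are (position_m, speed_mps) pairs in the same lane.
    The constant-speed rollout is a stand-in for a learned predictor.
    """
    (ego_x, ego_v), (lead_x, lead_v) = ego, lead
    for _ in range(int(horizon_s / dt)):
        ego_x, ego_v = step(ego_x, ego_v, 0.0, dt)
        lead_x, lead_v = step(lead_x, lead_v, 0.0, dt)
        if lead_x - ego_x < min_gap_m:
            return True
    return False


def shaped_reward(base_reward, ego, lead, penalty=10.0):
    """Subtract a penalty when the rollout predicts an unsafe state."""
    if predicts_collision(ego, lead):
        return base_reward - penalty
    return base_reward
```

An ego vehicle at 30 m/s that is 20 m behind a lead vehicle at 20 m/s closes the gap within two seconds, so its reward is reduced before any collision actually occurs; at matched speeds the reward is unchanged.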

Conclusion:

For the safety of autonomous driving on three-lane highways, researchers proposed a deep reinforcement learning framework, as heuristic safety rules are susceptible to unexpected behaviors, especially in changing environments. To mitigate this issue, a learning-based mechanism was proposed to learn safety patterns from historical driving data. To achieve this, an RNN was trained to predict the future state of vehicles within a limited range, determining whether the future state violates safety rules. Additionally, the system was evaluated in a simulated environment with varying traffic densities, demonstrating that integrating a learning-based module can accelerate the learning process of vehicles and significantly reduce collision situations. Moreover, this system can also be applied to advanced driver assistance systems, utilizing state/action data to simulate learning driver strategies.

Rivian’s New Autonomous Driving Patent: Methods, Systems, and Media for Controlling Access to Vehicle Functions

Background:

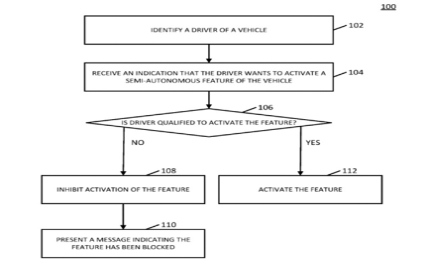

Autonomous vehicle technology is becoming increasingly common in daily life, and many vehicles are equipped with autonomous or semi-autonomous driving technologies, but not all drivers are prepared to use certain functions associated with them. American electric vehicle startup Rivian therefore filed a patent titled “Methods, Systems, and Media for Controlling Access to Vehicle Functions”. It aims to restrict certain drivers from using certain autonomous or semi-autonomous functions, in order to prevent catastrophic traffic accidents and, ultimately, significant setbacks for the autonomous driving industry.

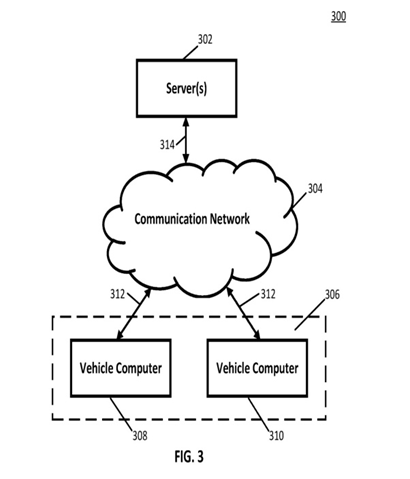

Patent Introduction:

First, the vehicle will identify the driver and determine the identification information.

When the driver attempts to activate the semi-autonomous driving function, the platform will try to determine whether the driver is qualified to activate this function. If deemed qualified, the vehicle will activate the function. However, if the vehicle determines that the driver is not qualified, the function will be disabled, and a notification will be displayed to inform the driver that the requested function is unavailable.

The decision on whether the driver is qualified starts in the onboard computer, which connects over a “communication network” to a server. The server makes the final decision on whether the driver is qualified to access the requested function.
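The identify, check, then activate-or-refuse flow can be sketched as a simple lookup. The driver ids, function names, and the in-memory `QUALIFICATIONS` table are hypothetical; in the patent summary the final decision is made by a remote server, which a dictionary stands in for here.

```python
# Hypothetical stand-in for the server-side record of driver qualifications.
QUALIFICATIONS = {
    "alice": {"highway_assist", "auto_park"},
    "bob": {"auto_park"},
}


def request_function(driver_id, function_name, qualifications=QUALIFICATIONS):
    """Decide whether an identified driver may activate a vehicle function.

    Returns a dict with an 'activated' flag and, when refused, a message
    suitable for display on the user interface.
    """
    allowed = qualifications.get(driver_id, set())
    if function_name in allowed:
        return {"activated": True, "message": None}
    return {
        "activated": False,
        "message": f"{function_name} is unavailable for this driver",
    }
```

Unknown drivers fall through to an empty permission set, so the sketch refuses by default rather than activating a function for someone who could not be identified.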

Innovation:

Designed for the autonomous driving era, this control system patent aims to provide a method for controlling access to vehicle functions within limited boundaries. The method can automatically control access to semi-autonomous driving functions and media controls based on individual characteristics (customization), using identity recognition technology to first determine the identification information of the vehicle driver.

Upon receiving an indication from the driver wanting to activate a specific vehicle function, the determination of whether the driver is qualified to activate that function is made based on the identification information.

If it is determined that the driver is not qualified to activate a specific function, that function will be prohibited from being activated, and information indicating that the function is unavailable will be displayed on the user interface; once it is determined that the driver is qualified to activate the function, that function will be automatically activated.

Wearable Brain-Machine Interface Enables Disabled Individuals to Control Vehicles and More

Background:

Brain-machine interfaces are a crucial component of rehabilitation technology, allowing patients with amyotrophic lateral sclerosis, chronic stroke, or other severe motor disabilities to wirelessly control prosthetics and electric wheelchairs, interact with computers, or manipulate small robotic cars. Now, researchers from Georgia Tech, Kent State University, and Wichita State University have developed a new portable wearable brain-machine interface…

Problems to Solve:

To let disabled individuals control other devices wirelessly, users typically have to wear an electrode cap that collects visual evoked potential signals from the brain. Such caps use wet electrodes, adhesives, and wires to connect to the computer equipment that interprets the signals, making them cumbersome.

The visual evoked potential signals from the human brain can help users direct system actions, but these signals are of low amplitude, within the range of tens of microvolts, similar to the electrical noise of the human body, making them difficult to detect. Therefore, in addition to meeting sensing needs, detecting and analyzing such signals is also very challenging.

Solutions:

Researchers designed a new portable, wearable, wireless brain-machine interface system built from wireless sensors and skin-conformal electronics. It has four parts: elastic electrodes that contact the scalp directly and are secured with straps; ultra-thin nanomembrane electrodes shaped to fit the neck; skin-like printed electrodes placed on the skin beneath the ear; and a flexible circuit with a Bluetooth telemetry unit to which the electrodes connect. The dry, soft electrodes attach to the skin without adhesives or gels. They are easy to use, reduce noise and interference, and support a high data transmission rate.

The recorded brain signals are processed in the flexible circuit and then wirelessly transmitted to a tablet via Bluetooth. Since human brain signals change as diversely as animal images, deep learning neural network algorithms commonly used for classifying animal images are also applied to accurately detect visual evoked potential signals from the human brain. Moreover, researchers also utilized deep learning models to identify which information collected by the electrodes is most effective for classifying brain signals.

Conclusion:

The researchers evaluated the system on six test subjects, finding that the subjects could successfully control electric wheelchairs and small robotic vehicles, and could also control display systems without a keyboard, joystick, or other controllers. The researchers will further study fully elastic, wireless self-adhesive electrodes that can be mounted directly on densely haired scalps without any headgear support. Additionally, the system will be further miniaturized to incorporate more electrodes for other studies. The system can also be reconfigured to monitor the motion-evoked potential signals or motor imagery of patients with movement disorders. In the long run, it could also let scientists monitor human neural activity and discover new, non-invasive biomarkers of Alzheimer’s pathology, which could help prevent dementia.

Recognizing Drivers Through the Steering Wheel

Background:

Automakers and researchers are exploring how to recognize drivers to create personalized driving experiences. Recognizing drivers is also the first step in analyzing drivers and categorizing vehicle operations. Successfully recognizing drivers can bring various benefits, such as simplifying the driving experience without cumbersome identity verification, enhancing safety through intrusion detection systems, and implementing personalized onboard service models (such as models that recognize driver health issues).

Problems to Solve:

Recent studies have used CAN-bus data collected from vehicles to recognize drivers, but they have limitations. Most collect data in controlled driving settings. Studies based on natural driving data and numerous sensors may end up training models that recognize the vehicle rather than the driver, and studies that rely on a single sensor need to observe the driver for a long time before recognition is reliable. In addition, most modern vehicles are equipped with adaptive cruise control systems that provide longitudinal vehicle control. Existing recognition models and studies are therefore becoming outdated, and the signals input by the driver are the most reliable.

Solutions:

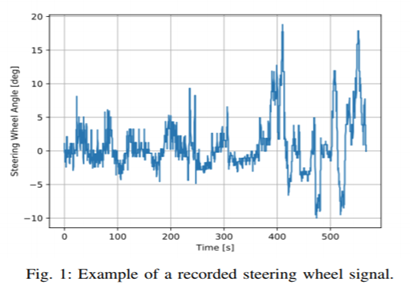

The researchers proposed and evaluated a machine learning method for statistically analyzing steering wheel signals. Driving data from 72 drivers covering 680,000 kilometers was collected under natural driving conditions. CAN-bus data was read through the onboard diagnostic port with a dongle and sent to a smartphone via Bluetooth; together with GPS and accelerometer signals, it was then transmitted in real time to a server over cellular networks.

The researchers first preprocessed the collected data, analyzed the steering wheel signals gathered, and designed a neural network. After processing the above signals, they were input into the neural network. Log-spectral features were computed as additional features and input into the neural network to improve accuracy. These features were prepared first, and a neural network was established using the TensorFlow library. The optimal window size was determined (3.5 seconds); subsequently, the correlation between model accuracy and required observation time was provided (the longer the time observing the driver, the higher the recognition accuracy), and finally, the confusion matrix of the model’s recognition was studied (drivers with similar driving styles were inaccurately identified).
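The windowing and log-spectral steps can be sketched in pure Python. Only the 3.5-second window comes from the text; the 10 Hz sample rate, the non-overlapping windows, and the naive DFT (used here in place of a library FFT) are assumptions, and this is not the researchers' TensorFlow pipeline.

```python
import cmath
import math


def split_windows(samples, sample_rate_hz, window_s=3.5):
    """Cut a signal into non-overlapping windows of window_s seconds."""
    size = int(round(window_s * sample_rate_hz))
    return [samples[i:i + size]
            for i in range(0, len(samples) - size + 1, size)]


def log_spectrum(window, eps=1e-12):
    """Log power spectrum of one window via a naive DFT.

    Returns one value per frequency bin from 0 up to the Nyquist bin.
    eps avoids taking the logarithm of zero.
    """
    n = len(window)
    features = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                  for t, x in enumerate(window))
        features.append(math.log(abs(acc) ** 2 + eps))
    return features


# Synthetic steering-angle signal: 7 s at an assumed 10 Hz sample rate,
# oscillating exactly five times per 3.5 s window.
demo_signal = [math.sin(2 * math.pi * 5 * t / 35) for t in range(70)]
demo_windows = split_windows(demo_signal, sample_rate_hz=10)
demo_features = log_spectrum(demo_windows[0])
```

Each window yields a fixed-length feature vector, and in the synthetic example the largest value sits in bin 5, matching the five oscillations per window. Vectors like these could be fed to a recurrent network alongside the raw steering samples.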

Conclusion:

The study’s results, based on a natural driving research database, found that the GRU (Gated Recurrent Unit)-based neural network outperformed traditional methods, improving the prediction accuracy of 15 drivers from less than 15% to over 65%. Compared to existing state-of-the-art recognition methods, the recognition performance improved fivefold. When utilizing a global research dataset of 72 drivers, the prediction recognition accuracy of this method improved 25 times compared to random guessing. However, drivers with similar driving styles were misidentified, indicating that a dataset with greater diversity among drivers would yield higher accuracy. Based on this research, it can be concluded that customizing single sensor methods can significantly enhance recognition accuracy while avoiding misidentifications due to differences in vehicles or regions. In future work, combining this method with existing methods and additional sensors could achieve optimal driver recognition outcomes.

Apple’s New Patent: Imaging System Unaffected by Solar Radiation for Target Detection

Background:

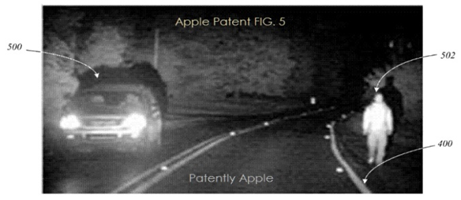

Vehicle navigation systems, automatic control systems, and drivers operating vehicles all rely on imaging systems to improve vehicle operation. Imaging systems can identify targets and track the movement of targets that may intersect with the vehicle’s intended path. Depending on the dynamic recognition range of the imaging system and the optical settings used to capture images of the surrounding environment, certain types of radiation may blur or “blind” the pixels on the image sensors in the imaging system, making it difficult to identify targets within that area.

Patent Introduction:

Solar radiation, glare, haze, and differences in lighting between day and night cause variations in the images of a given scene. Images captured in specific infrared wavelength bands are not affected by sunlight or such conditions, so the images passed to target detection algorithms are independent of natural lighting phenomena. The control system then uses these images to detect objects and sends commands to one or more vehicle systems based on each object’s position.

Another target detection system includes an imaging system that generates a first image from the visible light spectrum and a second image from the infrared spectrum. The control system receives disparity indications related to target detection and sends commands to one or more vehicle systems to respond to them. A disparity indication includes information about a target that is detected in the second image but not in the first.
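A disparity indication of this kind reduces to a set difference between the two detection results. In the sketch below, detections are plain label strings and the mapping from disparities to a command is a hypothetical policy; the patent summary does not specify either.

```python
def disparity_indications(visible_detections, infrared_detections):
    """Targets found in the infrared image but missed in the visible one."""
    return sorted(set(infrared_detections) - set(visible_detections))


def choose_command(disparities):
    """Map disparities to a vehicle command (illustrative policy only)."""
    if "pedestrian" in disparities or "vehicle" in disparities:
        return "brake"
    if disparities:
        return "reinspect"
    return "continue"


# Example: glare hides a pedestrian in the visible-light image.
visible = ["vehicle", "traffic_light"]
infrared = ["vehicle", "traffic_light", "pedestrian"]
missed = disparity_indications(visible, infrared)
```

Here `missed` contains only the pedestrian, so the illustrative policy returns a braking command, while identical detection lists produce no disparity and no change in behavior.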

Innovation:

The atmosphere can severely attenuate radiation at specific wavelengths; thus, imaging within atmospheric-affected wavelength bands can utilize illumination control. For example, illumination can be provided by light sources directly associated with the imaging system. Usable light sources include incandescent, tungsten, halogen, infrared, near-infrared LEDs, or lasers, and other infrared or near-infrared light sources can also be used. Many light sources working in the infrared spectrum are invisible to humans, so the imaging system’s illumination does not disturb other drivers or pedestrians near the vehicle.

The vehicle control system can receive disparity indications related to target detection, including moving vehicles, pedestrians, and whether traffic lights were correctly detected in the first or second image, namely, the first image generated by the visible light spectrum and the second image generated by the infrared spectrum, and then execute responses based on such differences, guiding the imaging system to identify targets or sending commands to change vehicle behavior, such as sending commands to the braking system to reduce speed or to the steering system to modify the path. Such differences enable other components of the imaging system to have additional time for target detection, classification, or response.

– END –

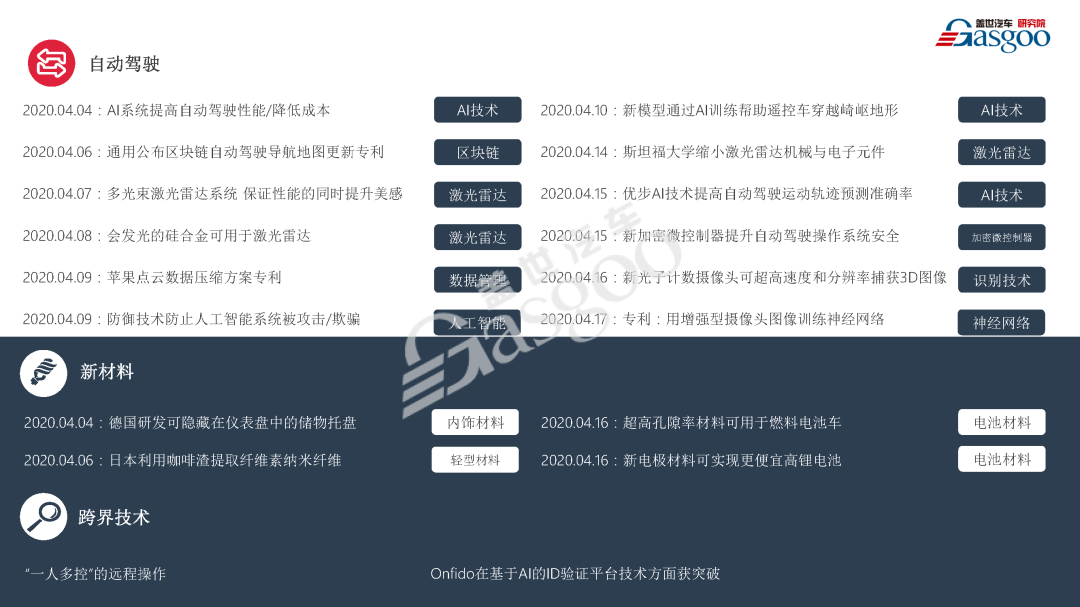

The automotive industry is undergoing a significant technological transformation. To help companies in the industry grasp global technology trends in a timely manner, the Gaishi Automotive Research Institute will take the lead in continuously exploring global cutting-edge technologies in intelligent networking, autonomous driving, new energy, new materials, and cross-border fields, launching the “2020 Global Automotive Cutting-edge Technology Intelligence” electronic publication. Through the Gaishi platform, the latest technologies will be collected, summarized, filtered, and analyzed to provide industry users with the most timely and comprehensive updates on new technology developments.
