
Abstract

Testing onboard device driver software currently involves complex operations, a high degree of repetition, heavy workloads, and low efficiency. To address these problems, this paper proposes an automated testing environment framework based on driver source code analysis and driver function configuration. The framework describes the task structure, working process, and physical structure of the environment through separate design views, addressing the obstacles to automated driver software testing. A prototype verification tool developed from this framework has been applied with good results in actual projects.

Keywords

Device driver software; Driver software testing; Automated testing; Testing environment; Bus testing

0 Introduction

Device driver software is a crucial component of embedded operating systems. It transfers data between the underlying hardware devices and the application software above it, and it is the foundation on which application software exchanges information with the outside world. Therefore, in the qualification testing of onboard software, device driver software must be tested independently at the configuration item level.

Currently, the variety and number of onboard device interfaces are growing, and their implementation mechanisms are becoming more complex. When testing driver software, testers must design a test script for each driver API function that covers parameter validity, return value correctness, and data transmission accuracy. For each script they must also design trigger conditions, compile and download the script, and then trigger it from the auxiliary testing interface while stimulating the hardware environment to send and receive data, checking how the script responds to that data. This process is cumbersome, error-prone, highly repetitive, and labor-intensive. Although many tools support black-box testing of embedded software, none of them can test device driver software automatically, and none supports testing aimed at API interfaces. Against this backdrop, this paper proposes a method for constructing an automated testing environment for embedded device driver software to assist in driver software testing.

1 Onboard Driver Software Development and Its Environment

1.1 Characteristics of Onboard Driver Software Development

Onboard embedded software often uses the VxWorks real-time operating system, and its driver software is designed around the VxWorks device driver structure. In VxWorks, hardware can be controlled in two ways: either the driver follows the VxWorks device driver conventions and is registered with the operating system, which then manages it; or a custom driver is used directly, bypassing the operating system's device management.

Drivers developed under the VxWorks device management rules expose a uniform file-style interface, so controlling a device requires frequent calls to ioctl and constant reference to the programming manuals, which makes the programs hard to read and maintain. In addition, development must follow the VxWorks device management rules, and the number of devices is limited. For these reasons the approach has not been widely adopted in practical aviation engineering.

Custom-structured drivers are simpler and more intuitive: hardware address accesses are grouped by function and wrapped into functions, forming a set of independent API functions. Such a driver typically contains many API functions with specific logical calling relationships among them. Each API takes parameters of varying number and type, and the parameters are logically related to one another. Application developers must therefore consider the normal and abnormal parameters and usage scenarios of every API, which increases their workload and introduces risks and hidden hazards into the application software. To address these issues, driver API functions must be tested comprehensively, so that errors are reasonably avoided even when an API is called abnormally. This makes the driver APIs safer and lowers development risk for application software.

1.2 Operating Environment of Onboard Driver Software

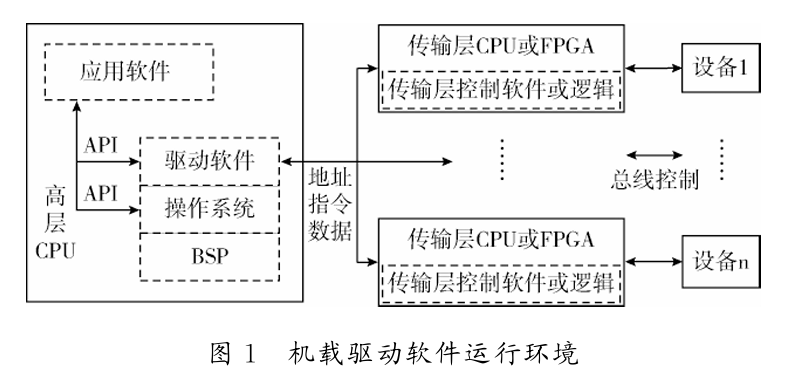

Although onboard driver software is managed as an independent configuration item in software engineering, it cannot run on its own: it must be called by application software and built together with it into executable target code that runs on the target CPU. Onboard driver software typically does not access hardware devices directly; it reaches the physical hardware indirectly through the transport layer. Its typical operating environment is shown in Figure 1.

The typical operating environment of onboard driver software consists of a high-level CPU, transport layer, and external devices.

The high-level CPU hosts the driver software, operating system, board support software, and application software. The driver software provides a set of API interfaces through which application software accesses various peripherals; the type of device driven, the driving method, and the content are determined by the application software when it calls the API. The driver software only converts the application's device access requests into the corresponding addresses, instructions, and data and sends them over the internal bus to the transport-layer CPU. The transport layer consists of several control modules, such as CPUs or FPGAs, each controlling a different device and running device-specific control software or logic. Following the control timing of the external devices, these modules parse and distribute the addresses, instructions, and data issued by the high-level driver software, converting them into control signals that the external devices recognize. External devices typically consist of bus interfaces built from ASICs; in aviation onboard equipment these usually include 1553B, AFDX, 1394B, FC, and other buses.

1.3 Requirements for the Testing Environment of Onboard Driver Software

Onboard driver software is characterized by single functionality, numerous interfaces, complex parameters, and coupling between driver APIs. Testing such software requires designing calling sequences for many API functions, forming test scripts that comprehensively cover parameters, return values, functions, and inter-API relationships, and then observing how the device responds in the hardware interface environment. In software engineering terms, this corresponds to the testing design, testing execution, and testing summary processes. Accordingly, practical engineering imposes requirements on the onboard driver software testing environment in each of these three areas.

(1) Testing Design Requirements

Driver software testing targets each API function within the driver, so each API must be tested under specific application scenarios. Before testing, testers must design test scripts that invoke the driver API and cover the parameters, return values, and functions of the API under test as well as its related APIs. These scripts are then compiled, downloaded to the high-level CPU, and executed together with the driver software under test. The test scripts must also provide control over test execution, so that testers can steer the testing process as needed.

(2) Testing Execution Requirements

The bus data transmission and reception performed by the driver software appears as data traffic in the cross-link environment, so the cross-link environment must serve as the vehicle for test execution in order to verify the functions of the driver under test. Onboard cross-link environments typically have interfaces that are complex, varied, and numerous, with high real-time requirements. To simulate real onboard devices effectively and meet the environmental needs of the system under test, the cross-link environment must offer equally complex interfaces and strong real-time support.

(3) Testing Summary Requirements

After the test scripts invoke the driver API functions and the testing environment exchanges data with the driver software, the API execution results must be reviewed and summarized to complete the testing process. Driver execution results have two aspects: the API return value and the functional result of the API. Return values must be obtained and reported to testers according to their types. The functional result is the actual hardware operation the driver performs, such as sending or receiving data. Judging whether an API has performed its function requires criteria that depend on the direction of data flow. For example, if the API under test sends data, the environment checks that the data received in the cross-link environment matches the data sent; if the API receives data, the cross-link environment sends data, the script reports what it received, and the environment checks that the received data matches the sent data. The testing environment therefore imposes result-observation requirements on test script design.
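The two assessment criteria above can be sketched as a single judgment function. This is a minimal illustration, not the authors' implementation: the `ApiResult` record and its field names are hypothetical. The point it shows is that send and receive tests use the same consistency check; only the side that acts as sender changes.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class ApiResult:
    """Outcome of one API call as collected by the test environment (hypothetical record)."""
    test_type: str             # "send" or "receive" (hypothetical labels)
    return_value: int          # value the API returned in the test script
    expected_return: int       # value expected from the requirement configuration
    sent: bytes                # data put on the bus by whichever side transmitted
    received: Optional[bytes]  # data observed by the other side (None if nothing arrived)


def judge(result: ApiResult) -> Tuple[bool, str]:
    """Check the return value first, then the consistency of received vs. sent data.

    For a send test, `sent` is what the script passed to the API and `received`
    is what the cross-link environment captured; for a receive test the roles
    are reversed, but the comparison is the same.
    """
    if result.return_value != result.expected_return:
        return False, f"return value {result.return_value} != expected {result.expected_return}"
    if result.received is None:
        return False, "no data observed"
    if result.received != result.sent:
        return False, "received data differs from sent data"
    return True, "pass"
```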

2 Framework Design

2.1 Working Principle

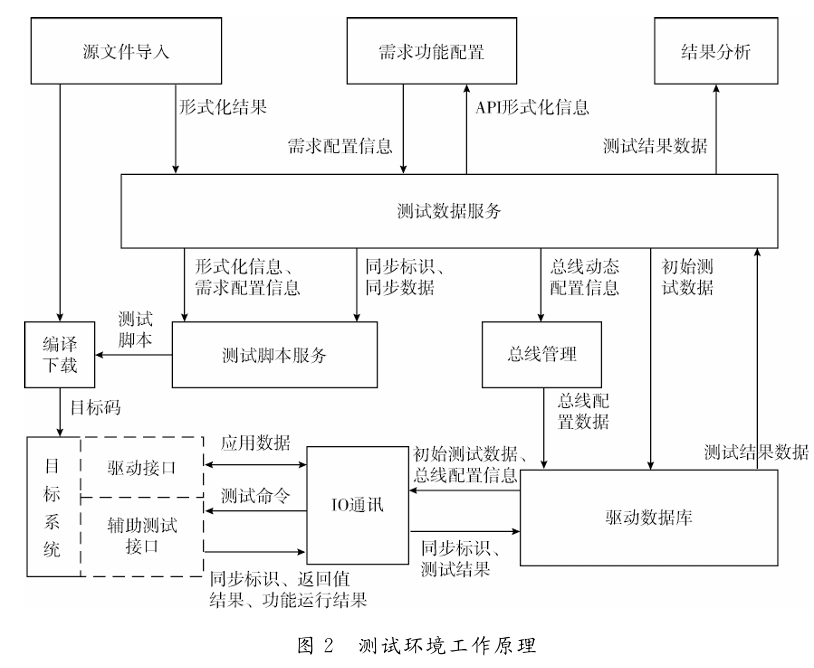

The distributed automated driver testing environment is constructed with the source code and requirements of the driver software as input and the test report as output. Its working principle is illustrated in Figure 2.

After the driver source code is imported into the testing environment, the driver parser decomposes and transforms it into formalized driver API functions. Testers configure the functional requirements of the driver software; after these are synchronized with the API functions, the test script service generates test script files. Besides calls to the API functions, the test scripts contain the necessary test control code, synchronization code, and result reporting code. They are compiled together with the driver source files and downloaded to the target system for execution. Meanwhile, the testing environment maps the API functions and their configuration information onto the bus interfaces and downloads this mapping, together with the initial test data, into the executable driver database.

During test initialization, the driver database initializes IO communication according to the bus configuration and establishes a connection with the auxiliary testing interface of the target system. When testing starts, the driver database issues the test execution command, which reaches the test scripts in the target system via IO communication and the auxiliary testing interface. On receiving it, the test scripts loop through the API test sections in turn. While an API is being tested, the script interacts through the driver with the IO communication interface of the testing environment, so that test data flow between the API under test and the driver database. The final results of the test scripts, comprising both return value results and functional results, are uploaded to the driver database via IO communication and passed to the result analysis module, which judges and displays them and generates a test report.

Based on this working principle, the following subsections use different UML view models to describe the task structure, working process, and physical structure of the automated testing environment for driver software.

2.2 Task Structure Design

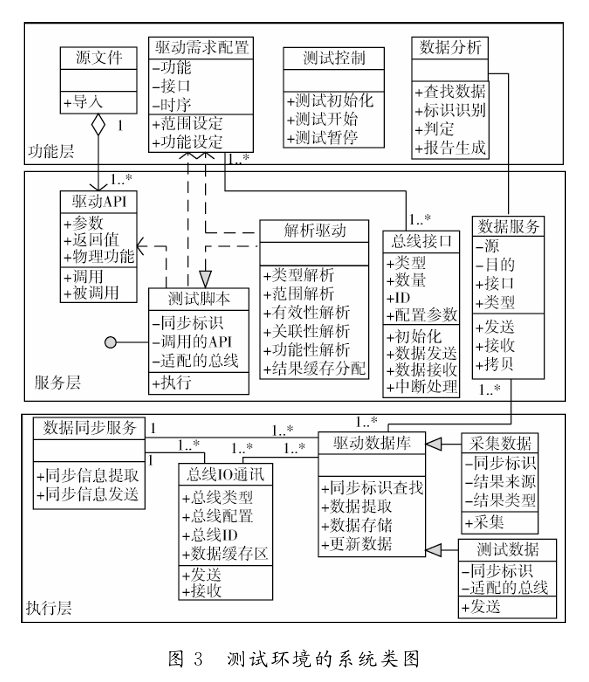

The automated testing environment for driver software is organized as a task hierarchy with three layers: the functional layer, the service layer, and the execution layer. Each layer's tasks are implemented by a set of classes; the main class diagram of the testing environment is shown in Figure 3.

2.2.1 Functional Layer Design

At the functional layer, the main tasks include user interface operations, such as importing driver source files, configuring functional requirements, controlling the testing process, and analyzing test results.

Importing a driver source file mainly involves parsing its fields and converting them into a formal representation.
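As a rough illustration of "parsing and formalizing", the sketch below turns simple C prototypes from a driver header into structured API records. It is deliberately naive and is an assumption, not the authors' parser: it handles one-line or simple multi-line prototypes ending in `;`, and does not handle macros, function pointers, or array parameters. A production parser would use a real C front end.

```python
import re
from dataclasses import dataclass


@dataclass
class Param:
    ctype: str
    name: str
    is_pointer: bool


@dataclass
class ApiFunction:
    return_type: str
    name: str
    params: list


# Return type, function name, parameter list, then ';' (declarations only, not definitions).
PROTO_RE = re.compile(r"^\s*([A-Za-z_][\w\s\*]*?)\s*\b([A-Za-z_]\w*)\s*\(([^)]*)\)\s*;", re.M)


def parse_param(text):
    """Split one parameter declaration such as 'const char *buf' into type and name."""
    text = text.strip()
    if text in ("", "void"):
        return None
    m = re.match(r"^(.*?)([A-Za-z_]\w*)$", text)
    if m is None:
        raise ValueError(f"unsupported parameter declaration: {text!r}")
    ctype, name = m.group(1).strip(), m.group(2)
    if not ctype:  # unnamed parameter such as "int"
        ctype, name = name, ""
    return Param(ctype, name, "*" in ctype)


def parse_header(source):
    """Return one ApiFunction per prototype found in a header file's text."""
    apis = []
    for m in PROTO_RE.finditer(source):
        params = [p for p in (parse_param(t) for t in m.group(3).split(",")) if p is not None]
        apis.append(ApiFunction(m.group(1).strip(), m.group(2), params))
    return apis
```

The resulting records are what the requirement configuration step would then annotate with ranges and relationships.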

Requirement configuration is the foundation of the entire testing environment: here testers configure the functional attributes and relationships of each parsed API. Functional attribute configuration classifies each API as a send, receive, initialization, interrupt, or other service function and, where parameters are involved, specifies each parameter's expression range (the values its type can represent), valid range, relevance to other parameters, pointer attributes, and so on. Relationship configuration covers the relevance between the API under test and other APIs, the calling order, API-level settings, and the mapping between API parameters and bus interfaces.

Test control activates the relevant functions of the service and execution layers through three operations: test initialization, test start, and test pause. Test initialization triggers the service layer to initialize the test scripts and test data, and then triggers the execution layer to initialize the driver database and buses. Its input is the result of requirement configuration; its outputs are test script source files ready to be compiled and downloaded, executable test data, and a ready IO bus in the execution layer. Test start sends a synchronization start code to the system under test through the execution layer's IO communication, activating the test scripts embedded in that system, which then repeatedly trigger data transmission and reception in the execution layer until testing is complete. Test pause uses an asynchronous-trigger, synchronous-convergence scheme: the execution layer issues a pause command to the target system, which halts the current test task before the next test case runs.
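One way the configured expression range and valid range could drive test data is classic boundary-value selection. The sketch below is an assumption for illustration (the paper does not specify its generation strategy): values inside the valid range become normal cases, and representable values just outside it, plus the type extremes, become abnormal cases.

```python
from dataclasses import dataclass


@dataclass
class ParamConfig:
    name: str
    type_min: int   # expression range: what the C type can represent
    type_max: int
    valid_min: int  # valid range: what the API is specified to accept
    valid_max: int


def candidate_values(cfg):
    """Boundary-value candidates for one integer parameter (hypothetical strategy)."""
    normal = sorted({cfg.valid_min, (cfg.valid_min + cfg.valid_max) // 2, cfg.valid_max})
    abnormal = set()
    for v in (cfg.valid_min - 1, cfg.valid_max + 1, cfg.type_min, cfg.type_max):
        # keep only values the type can actually hold but the API should reject
        if cfg.type_min <= v <= cfg.type_max and not (cfg.valid_min <= v <= cfg.valid_max):
            abnormal.add(v)
    return {"normal": normal, "abnormal": sorted(abnormal)}
```

For a channel number stored in an unsigned char (0 to 255) but valid only from 0 to 3, this yields normal values 0, 1, 3 and abnormal values 4 and 255.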

The data analysis task summarizes the test data after testing and concludes the testing process. It collects the synchronization identifiers, test data, and result data produced during testing, automatically matches them to the API under test, judges each execution result against the range settings from requirement configuration, and generates a test report.
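A minimal sketch of the matching step, assuming (hypothetically) that each configured case is keyed by its synchronization identifier and carries an expected return-value range. Results with an unknown identifier and configured cases that never reported are flagged rather than silently dropped.

```python
def analyse(cases, results):
    """Match collected results to configured cases by synchronization identifier.

    cases:   {sync_id: (api_name, lo, hi)}, expected return-value range per case
    results: [(sync_id, return_value), ...] in the order they were collected
    Returns a list of (sync_id, api_name, verdict) rows for the report.
    """
    report, seen = [], set()
    for sync_id, ret in results:
        seen.add(sync_id)
        if sync_id not in cases:
            report.append((sync_id, "?", "UNMATCHED"))
            continue
        api, lo, hi = cases[sync_id]
        report.append((sync_id, api, "PASS" if lo <= ret <= hi else "FAIL"))
    for sync_id in sorted(set(cases) - seen):
        report.append((sync_id, cases[sync_id][0], "NOT RUN"))
    return report
```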

2.2.2 Service Layer Design

The service layer is invisible to users and consists of internal tasks triggered by operations at the functional layer, primarily completed by three major service tasks: test script, test data, and bus management.

The test script service is triggered by test initialization in the functional layer. It extracts the requirement configuration and API formalization information stored by the test data service and generates test script files according to predefined strategies. Besides calling APIs in ways that simulate various complex application scenarios, the test script service must embed a small amount of code in the test scripts for synchronization and command interaction with the testing environment, such as handling test start and test pause commands, reporting test progress (through synchronization identifiers), and reporting test completion. These messages are exchanged with the testing environment through the auxiliary testing interface of the system under test and play an important role in synchronizing the testing process and data.
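The script generation described above can be pictured as template expansion. In the sketch below, Python emits C source for a test script; every hook name (`tst_wait_start`, `tst_pause_point`, `tst_sync`, `tst_report_ret`, `tst_done`) and both header names are hypothetical placeholders for the small synchronization and command code the text says is embedded, not names from the paper.

```python
def generate_script(cases):
    """Emit C source for a test script.

    cases: [(sync_id, api_name, [argument expressions as C text]), ...]
    """
    lines = [
        "/* generated test script (sketch) */",
        '#include "drv_api.h"    /* driver under test (hypothetical header) */',
        '#include "tst_hooks.h"  /* environment hooks (hypothetical header) */',
        "",
        "void test_main(void)",
        "{",
        "    int ret;",
        "    tst_wait_start();          /* block until the start command arrives */",
    ]
    for sync_id, api, args in cases:
        lines += [
            "    tst_pause_point();         /* honour a pending pause between cases */",
            f"    tst_sync({sync_id});",
            f"    ret = {api}({', '.join(args)});",
            f"    tst_report_ret({sync_id}, ret);",
        ]
    lines += ["    tst_done();", "}"]
    return "\n".join(lines) + "\n"
```

Placing the pause check at the start of each case keeps a running case intact, which matches the pause behaviour described for the functional layer.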

The test data service first stores the information produced by source file import and requirement configuration in the functional layer. It then supplies the test script service with synchronization identifiers and synchronization data for API testing, providing the environmental data needed during API calls. It also supplies the bus management service with the dynamic configuration of the bus interfaces each API depends on, so that the bus interfaces can follow the settings made by configuration APIs. Finally, it provides interfaces for exchanging data with the execution layer: it issues initial test data, collects test result data, and reports all data to the data analysis task in the functional layer to complete the test summary.

The bus management service extracts the IO communication interfaces required for driver testing and their dynamic configuration, and dynamically manages the relationship between bus interfaces and API parameters. During test execution it adapts the interfaces to the parameter settings that APIs apply to them, such as communication rate and check method (for example, parity), which makes it possible to test configuration APIs.
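The parameter-to-bus mapping can be sketched as a lookup table configured by the tester. Both the table contents and the parity encoding below are assumptions for illustration (a hypothetical `uart_config` API). Given the arguments a configuration API was called with, the function returns the settings the environment-side channel must adopt so that both ends of the link agree.

```python
# Hypothetical mapping configured by the tester: (API name, parameter name) -> bus setting key.
PARAM_TO_BUS = {
    ("uart_config", "baud"): "baud_rate",
    ("uart_config", "parity"): "parity",
}

PARITY_CODES = {0: "none", 1: "odd", 2: "even"}  # assumed encoding used by the driver


def bus_settings_for(api, args, current):
    """Return updated environment-side settings after the API call described by args.

    Parameters with no mapping (e.g. a channel number) leave the settings unchanged;
    the caller's dictionary is not modified.
    """
    new = dict(current)
    for pname, value in args.items():
        key = PARAM_TO_BUS.get((api, pname))
        if key is None:
            continue
        new[key] = PARITY_CODES[value] if key == "parity" else value
    return new
```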

2.2.3 Execution Layer Design

The execution layer is deployed on independent real-time nodes, completing direct interaction between the testing environment and the tested system. This layer’s tasks are primarily composed of the driver database and IO communication.

The driver database initializes the test data issued by the test data service locally, allowing it to be quickly searched and applied during real-time operation. After testing begins, the driver database receives the script synchronization identifier from the target system, searches for the test data matching the synchronization identifier, and determines the flow of test data based on the synchronization identifier, ultimately completing interaction with the target system through IO communication. The driver database reports the completed test data back to the service layer for feedback to the functional layer and data analysis.
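A minimal in-memory model of the driver database, assuming (hypothetically) that each case record is keyed by its synchronization identifier. Preloading everything into a dictionary at initialization is what makes the lookup during real-time operation a constant-time operation; the record layout and direction labels are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class CaseRecord:
    test_type: str          # "receive", "send", or anything else for configuration tests
    stimulus: bytes = b""   # data the environment transmits (receive tests)
    results: list = field(default_factory=list)


class DriverDatabase:
    """Table preloaded at test initialization and queried by synchronization identifier."""

    def __init__(self, records):
        self._records = dict(records)

    def lookup(self, sync_id):
        return self._records[sync_id]

    def direction(self, sync_id):
        """Flow of test data for this case, seen from the test environment."""
        t = self.lookup(sync_id).test_type
        return {"receive": "env->target", "send": "target->env"}.get(t, "both")

    def store(self, sync_id, item):
        self._records[sync_id].results.append(item)

    def upload(self):
        """Snapshot of collected results, for reporting back to the service layer."""
        return {k: list(v.results) for k, v in self._records.items()}
```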

On the test initialization command, IO communication completes the preliminary configuration of the bus interfaces, bringing the IO interfaces of the testing environment into a state of "relative readiness" for interaction with the target system. "Relative readiness" means not fully ready: only the IO interfaces have been created and their initial operations performed. During test execution, further initialization is still needed to follow the parameter configuration functions that APIs apply to the bus. IO communication also provides synchronization and command interaction interfaces that connect to the auxiliary testing interface of the target system, through which test commands, synchronization identifiers, and execution results are exchanged with the target system.
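The paper does not define the message format on the auxiliary testing interface, so the following is only a sketch under an assumed layout: a 1-byte message type, a 2-byte synchronization identifier, a 2-byte payload length, then the payload, all big-endian. It shows how commands, synchronization identifiers, and results can share one framing scheme.

```python
import struct

# Hypothetical message types and layout for the auxiliary test port.
MSG_START, MSG_PAUSE, MSG_SYNC, MSG_RESULT, MSG_DONE = 1, 2, 3, 4, 5
HEADER = struct.Struct(">BHH")  # type, sync identifier, payload length


def encode(msg_type, sync_id=0, payload=b""):
    return HEADER.pack(msg_type, sync_id, len(payload)) + payload


def decode(frame):
    """Return (msg_type, sync_id, payload); raise ValueError on a truncated payload."""
    msg_type, sync_id, length = HEADER.unpack_from(frame)
    payload = frame[HEADER.size:HEADER.size + length]
    if len(payload) != length:
        raise ValueError("truncated frame")
    return msg_type, sync_id, payload
```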

2.3 Working Process Design

Based on the three levels of system task structure division, the working process of the automated testing environment for driver software is designed into five stages: source file import and requirement configuration, test initialization, compilation and download, test execution, and test termination.
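The five stages can be modelled as a small state machine. The transition table below is an assumption drawn from the descriptions in this section: the first four stages run in order, termination is reachable only during execution, and a terminated run can be reinitialized or resumed.

```python
from enum import IntEnum


class Stage(IntEnum):
    IMPORT_AND_CONFIGURE = 1
    INITIALIZE = 2
    COMPILE_AND_DOWNLOAD = 3
    EXECUTE = 4
    TERMINATE = 5


# Allowed transitions (illustrative assumption, not taken from the paper's diagrams).
ALLOWED = {
    Stage.IMPORT_AND_CONFIGURE: {Stage.INITIALIZE},
    Stage.INITIALIZE: {Stage.COMPILE_AND_DOWNLOAD},
    Stage.COMPILE_AND_DOWNLOAD: {Stage.EXECUTE},
    Stage.EXECUTE: {Stage.TERMINATE},
    Stage.TERMINATE: {Stage.INITIALIZE, Stage.EXECUTE},  # reinitialize or resume
}


def advance(current, nxt):
    """Move to the next stage, rejecting transitions the workflow does not allow."""
    if nxt not in ALLOWED[current]:
        raise ValueError(f"cannot go from {current.name} to {nxt.name}")
    return nxt
```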

2.3.1 Source File Import and Requirement Configuration Stage

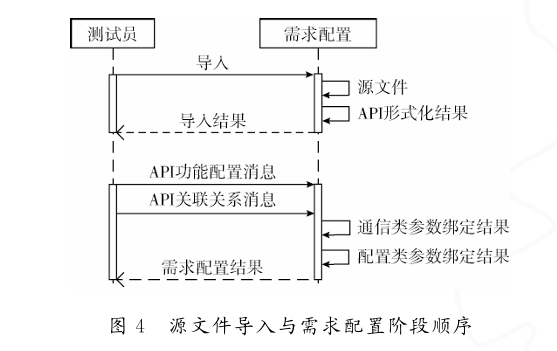

The driver software testing environment begins by learning about the system under test, which it does through source file import and requirement configuration. This process is described by the sequence diagram in Figure 4. In this stage, testers import source files through human-computer interaction in the functional layer; after the service layer parses and formalizes them, testers again use the functional layer to configure the functions and relationships of the driver software under test. During configuration, the service layer stores the configuration information and reports the configuration results to the testers.

2.3.2 Test Initialization Stage

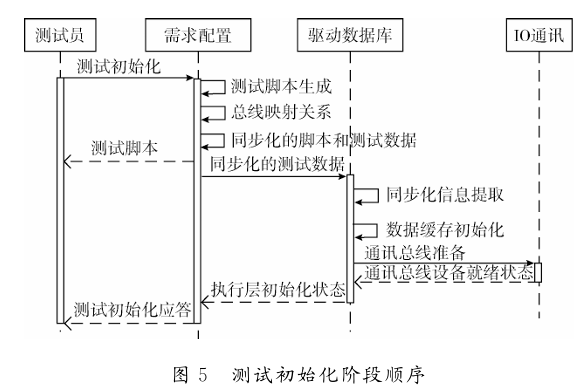

After completing the basic configuration of the tested driver software, the operator initiates the test initialization work. Upon receiving the test initialization command, the service layer generates test scripts and test data based on the configuration information. The test scripts are fed back to the testers for the next stage of compilation, while the test data is issued to the execution layer via network services, where the driver database and IO communication interfaces are initialized, and the final initialization results are reported back to the testers. The sequence of this stage is shown in Figure 5.

2.3.3 Compilation and Download Stage

The test script files generated during test initialization must be compiled together with the driver source code and downloaded to the target system, where they wait to be executed. This can be done manually in the development environment, or automatically by having the testing environment generate a Makefile and invoke the compile and download commands provided by the development environment.
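The automatic route could look roughly like the generator below. It is a sketch only: `cc`, `-O2`, the output name, and the echo placeholder in the `download` target all stand in for the real cross-compiler, flags, and download command of the development environment, which the paper does not name.

```python
def make_makefile(driver_sources, script_source, target="drvtest.out"):
    """Emit a minimal Makefile that builds the driver sources plus the test script."""
    srcs = list(driver_sources) + [script_source]
    objs = [s.rsplit(".", 1)[0] + ".o" for s in srcs]
    return "\n".join([
        "# generated by the test environment (sketch)",
        "# CC and CFLAGS are placeholders for the target cross-compiler and its flags",
        "CC = cc",
        "CFLAGS = -O2",
        f"OBJS = {' '.join(objs)}",
        "",
        f"{target}: $(OBJS)",
        "\t$(CC) $(CFLAGS) -o $@ $(OBJS)",
        "",
        "%.o: %.c",
        "\t$(CC) $(CFLAGS) -c $< -o $@",
        "",
        ".PHONY: download",
        f"download: {target}",
        "\t@echo 'replace with the download command of the development environment'",
    ]) + "\n"
```

Writing the comments on their own lines avoids a classic make pitfall: an inline `# comment` after a variable value leaves trailing spaces in that value.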

2.3.4 Test Execution Stage

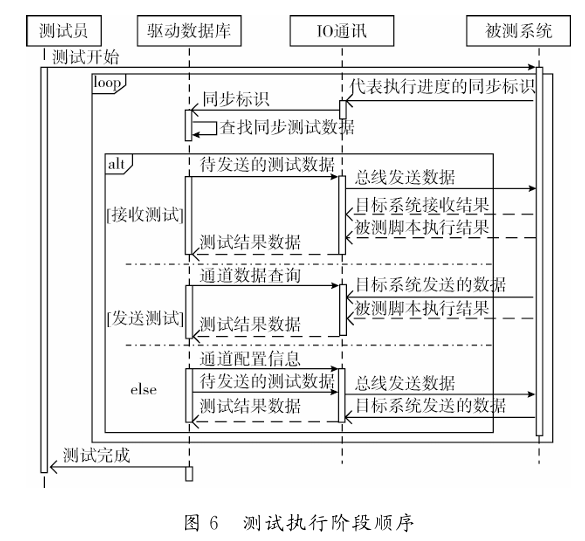

Before testing begins, the target system is in a waiting state. When the tester starts execution, the network service sends the test execution command to the driver database in the execution layer, which issues the test execution command code to the target system's auxiliary testing port through the IO communication interface. On receiving this code, the target system runs the test cases in the test scripts one after another. For each test case, the test script first sends the case's synchronization identifier to the testing environment's IO through the auxiliary testing port. The execution layer receives the identifier and looks up the corresponding test type and test data. The test type is the kind of driver function being tested: receive tests, send tests, and other tests. The execution layer responds differently to each type.

When the test type is a receive test, the driver API under test is a data receiving function. The execution layer prepares the required environmental data, sends it to the target system, and reads back the data reception result and the API return value through the auxiliary testing interface, storing them in the driver database for later upload to the functional layer, where the correctness of the API's receive function is judged.

Similarly, when the test type is a send test, the driver API is a sending function: the target system transmits preset test data, and the execution layer waits to receive it and stores the result in the driver database.

For any other test type, the API under test is a configuration function. The execution layer initializes the bus directly from the interface configuration stored in the driver database. This configuration matches the initialization the API performs in the test script, so the testing environment and the system under test initialize the interface with the same parameters. A data transmission and reception operation is then performed on the initialized interface, and the results are collected and stored in the driver database.
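The three responses can be sketched as one dispatch function. Here `link` is any object with `send`, `recv`, and `configure` methods standing in for the environment side of a bus channel; this interface and the `FakeLink` test double are hypothetical. The sketch covers only the bus side: the script's reception results and return values arrive separately through the auxiliary testing interface.

```python
def handle_case(test_type, data, link, bus_config=None):
    """Execution-layer reaction to one synchronization identifier (bus side only)."""
    if test_type == "receive":   # API under test receives: environment must transmit
        link.send(data)
        return {"env_sent": data}
    if test_type == "send":      # API under test transmits: environment listens
        return {"env_received": link.recv()}
    # configuration API: mirror the API's settings, then exercise the link both ways
    link.configure(**(bus_config or {}))
    link.send(data)
    return {"env_sent": data, "env_received": link.recv()}


class FakeLink:
    """In-memory stand-in for a bus channel, for illustration only."""

    def __init__(self, inbound=()):
        self.inbound = list(inbound)  # frames the target "transmits"
        self.outbound = []            # frames the environment transmitted
        self.settings = {}

    def send(self, data):
        self.outbound.append(data)

    def recv(self):
        return self.inbound.pop(0) if self.inbound else None

    def configure(self, **settings):
        self.settings.update(settings)
```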

Once all test cases in the test scripts have been executed, the driver database checks the number of executed cases, notifies the service layer and functional layer that testing is complete, and uploads the data it has collected. The sequence of the test execution stage is shown in Figure 6.

2.3.5 Test Termination

When the automated testing environment is used for driver software testing, unreasonable requirement configurations can produce invalid test scripts or test data. To avoid running large numbers of invalid tests once such problems are discovered during execution, the testing environment provides a test pause command that terminates the current unreasonable testing process. Termination does not depend on execution progress: testers can issue it at any time after testing starts, as an asynchronous message. The command is sent to the execution layer via network services; the execution layer notifies the target system, which suspends the script task after the current case finishes and before the next case runs. On receiving the target's feedback, the execution layer suspends the driver database and reports the execution results to the functional layer.

After the tester has confirmed the environment, a terminated test can either be reinitialized and executed again or resumed.
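The "asynchronous trigger, synchronous convergence" behaviour can be illustrated with a flag that may be set at any moment but is only checked between cases. This is a host-side sketch using Python's `threading.Event` as a stand-in for the pause signal; on the real target the check would live in the generated test script.

```python
import threading


def run_cases(cases, pause, log, start=0):
    """Run test cases from `start`, stopping cleanly if a pause has been requested.

    The pause flag may be set at any time (asynchronous trigger), but it is only
    honoured between cases (synchronous convergence), so a running case always
    completes. Returns the index of the next case to run, which allows resumption.
    """
    for i in range(start, len(cases)):
        if pause.is_set():
            return i
        cases[i]()
        log.append(i)
    return len(cases)
```

In the usage below, the second case itself sets the flag to simulate a pause arriving mid-case: that case still finishes, the third does not start, and a later call resumes from index 2.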

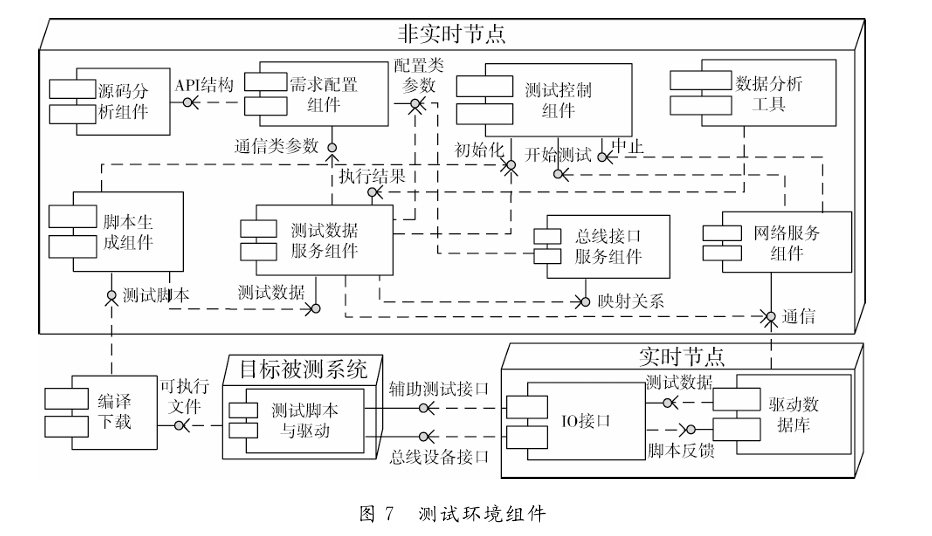

2.4 Physical Structure Design

The target tested system is typically an onboard embedded system characterized by complex interfaces and strong real-time requirements. Therefore, when deploying the automated testing environment for driver software, it is necessary to consider the testing environment’s ability to meet interface and real-time requirements. The components of the automated testing environment for driver software are shown in Figure 7.

To cope with complex interface environments, the testing environment should cover the common aviation bus interface types and be expandable with enough communication IO channels to be general-purpose. The execution layer is therefore equipped with the buses commonly used in aviation onboard equipment, all managed by a unified IO communication service. Onboard equipment usually runs the VxWorks embedded real-time operating system; to reduce the differences between the testing environment and the actual operating environment, the execution layer uses the same operating system for real-time scheduling, managing the driver database and meeting the real-time requirements of bus communication. The IO communication and driver database components, which have interface characteristics and strong real-time requirements, are therefore deployed on real-time node computers.

The other components of the testing environment have no strong real-time or bus interface requirements but place higher demands on user-friendliness and text parsing efficiency, so they are deployed on non-real-time node computers. Test data and commands pass between non-real-time and real-time nodes via network services: non-real-time nodes mainly run functional layer and service layer tasks, while real-time nodes run execution layer tasks.

3 Application Example

Following the framework design above, a prototype tool platform for the automated testing of onboard driver software, named ADATE (airborne drivers' automated test environment), was designed and developed. The platform consists of master control software on the upper computer and execution software on the lower computer. Through a user-friendly human-computer interface, the master control software analyzes and configures the driver software under test, maps bus interface parameters, generates and edits test scripts, controls the testing process, and analyzes test results and generates reports. The execution software is deployed on an industrial computer fitted with analog, discrete, CAN bus, RS232/422/485, ARINC429, and MIL-STD-1553B interface cards, and uses the VxWorks 5.5 kernel to achieve a bus communication cycle of 1 ms.

The platform has been used in a software testing project for the electrical management computer system of a certain aircraft type, completing a large amount of testing of its GJB289A and HB6096 driver software configuration items. Compared with purely manual testing, it achieves higher coverage and faster execution and detects more problems, significantly improving the testing efficiency of onboard driver software.

4 Conclusion

Onboard device driver software testing is currently inefficient, and conventional automated testing tools support only parts of the process. The design method for an automated driver software testing environment proposed in this paper fills the gap in automated testing of driver software and applies to API-oriented testing of embedded device driver software. A prototype tool platform built with this method has validated its effectiveness in engineering practice. Future work will further improve and optimize the method and the platform, addressing the completeness and consistency checking of driver requirement configurations, the optimization of test script generation strategies and algorithms, and the optimization of test result determination methods.

(This article is selected from "Computer Engineering and Design"; the authors are Gao Hu, Zheng Jun, and Zhou Quanjian of the Quality Engineering Center, China Aviation Comprehensive Technology Research Institute.)